AIhub.org

#ICLR2023 invited talks: exploring artificial biodiversity, and systematic deviations for trustworthy AI

The 11th International Conference on Learning Representations (ICLR) is taking place this week in Kigali, Rwanda, the first time a major AI conference has been held in person in Africa. The program includes workshops, contributed talks, affinity group events, and socials. In addition, six invited talks cover a broad range of topics. In this post we give a flavour of the first two of these presentations.

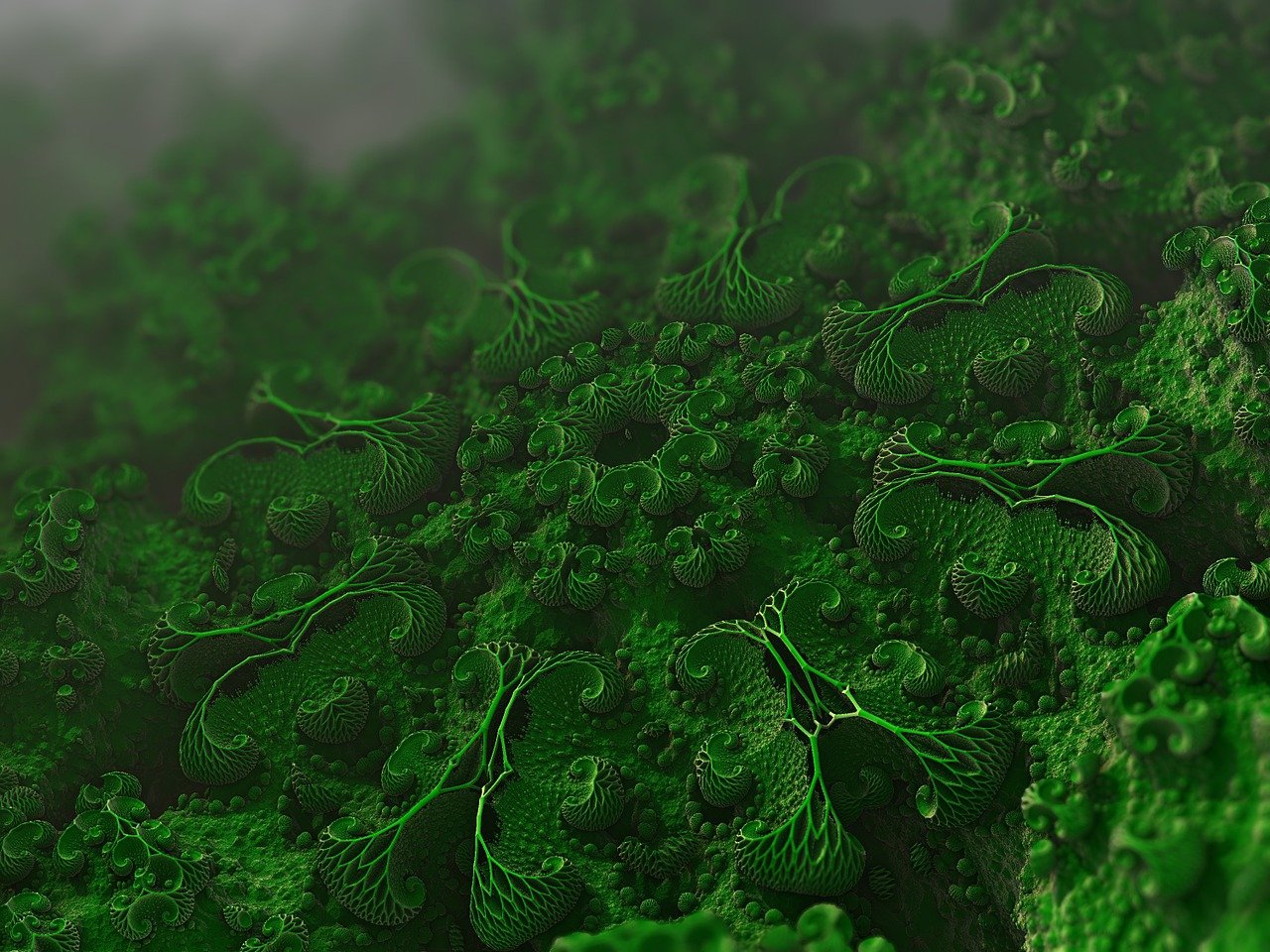

Entanglements, exploring artificial biodiversity – Sofia Crespo

Sofia Crespo is an artist who explores the interaction between biological systems and AI. Her fascination with the natural world began as a child when, on receiving a microscope as a present, she immediately became captivated by the variety of microstructures she observed through the lens. While out with friends, it was not unusual for her to make a detour to collect a sample of pond water to examine under the microscope later. It interested her that it was a piece of human-developed technology that had allowed her to get closer to the natural world. When she was introduced to AI, this new human-developed technology had a similar impact: when she creates artwork, AI algorithms allow her to see things in a different way. Her artwork centres on generating artificial lifeforms and, through that, exploring nature, biodiversity, and our representation of the natural world.

Rather than use off-the-shelf packages, Sofia prefers to train her own models and create her own datasets. Her first projects involved the generation of 2d images; however, she was soon keen to be more ambitious and create 3d art. In one project, she collaborated with a colleague to train a model to generate insect lifeforms. It was a challenge to work out how to train a neural network to generate 3d insect forms. Their solution was to take 3d meshes of insects and turn them into stacks of 2d cross-sectional images, similar to MRI scans, then use these to train a regular 2d GAN. The generated 2d images could then be reassembled to form a 3d structure. Finally, they used 3d style transfer to generate the surface textures of their new creations. This project was exhibited in the form of an 11-metre high inflatable sculpture in Shanghai. You can view some of the insects generated, and find out more about this project, here.
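To make the slice-and-restack idea concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not the pipeline used in the project: the "insect" is a toy voxel sphere, the function names are hypothetical, and the GAN step is left out entirely, so the slices are simply stacked back together unchanged.

```python
def make_sphere_volume(size=16, radius=5):
    """Toy stand-in for a voxelised 3d mesh: a solid sphere of 0/1 voxels."""
    c = (size - 1) / 2  # centre of the grid
    return [
        [
            [1 if (x - c) ** 2 + (y - c) ** 2 + (z - c) ** 2 <= radius ** 2 else 0
             for x in range(size)]
            for y in range(size)
        ]
        for z in range(size)
    ]


def volume_to_slices(volume):
    """Cut the volume along its first axis into a list of 2d cross-sections."""
    return [[row[:] for row in plane] for plane in volume]


def slices_to_volume(slices):
    """Stack 2d cross-sections back into a 3d volume, in order."""
    return [[row[:] for row in plane] for plane in slices]


volume = make_sphere_volume()
slices = volume_to_slices(volume)

# In a real pipeline, a 2d generative model would be trained on many such
# slice stacks and would produce new ones. Here we just restack the originals.
rebuilt = slices_to_volume(slices)

# Count filled voxels per slice: empty at the ends, largest near the middle.
filled_per_slice = [sum(sum(row) for row in plane) for plane in slices]
print(filled_per_slice)
```

The point of the representation is that each cross-section is an ordinary 2d image, so a standard image model can be used, while the order of the slices carries the third dimension.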

In other projects, Sofia has generated jellyfish, looked into critically endangered species, and created an aquatic ecosystem. The last of these involved diving expeditions using aquatic drones and hydrophones to create datasets of the flora and fauna in the oceans and the sounds of this watery world. To close, Sofia invited the audience to think about how we use technology to engage with nature, and to consider how we catalogue and represent the natural world.

Understanding systematic deviations in data for trustworthy AI – Girmaw Abebe Tadesse

Datasets form a critical component of AI systems. In order to develop trustworthy AI solutions, a clear understanding of the data being used is vital. In many datasets, there are trends observed in a subset of the data that are not evident globally. Investigating these differences manually is a difficult task. Therefore, Girmaw Abebe Tadesse and colleagues have developed methods to automatically discover what are called “systematic deviations”, which can alert researchers to anomalous subsets.

When devising such methods, one needs to consider the difference between 1) the reality (the data) and 2) the expectation (what is taken as normality). Systematic deviation concerns quantifying the divergence between these two and identifying a subset where the difference is much more than you would expect. The expectation that you set depends greatly on the kind of data you are looking at. For instance, it may be that the expected value is simply the global average of your data. Or it could be an average for a specific condition. If, for example, you were working on adversarial attacks, your expectation would come from clean samples that you are confident about. On the other hand, if you were working in healthcare investigating heterogeneous treatment effects, your knowledge from the control group becomes your expectation.
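As a rough illustration of the expectation-versus-reality idea, the Python sketch below takes the global average as the expectation and searches for the subgroup whose average departs from it the most. This is a brute-force toy, not the method presented in the talk: the function name, the field names (`region`, `access`, `outcome`), and the eight records are all made up for the example, and a real approach would need a proper scoring statistic and a far more efficient search.

```python
from itertools import combinations
from statistics import mean


def find_most_deviant_subgroup(records, outcome, features, min_size=2, max_features=2):
    """Return the expectation (global mean) and the subgroup that deviates most from it.

    The subgroup is reported as (conditions, deviation, size).
    """
    expected = mean(r[outcome] for r in records)
    best = None
    for k in range(1, max_features + 1):
        for feats in combinations(features, k):
            # Group outcome values by the records' values on the chosen features.
            groups = {}
            for r in records:
                key = tuple(r[f] for f in feats)
                groups.setdefault(key, []).append(r[outcome])
            for key, values in groups.items():
                if len(values) < min_size:
                    continue  # skip subgroups too small to say anything about
                deviation = mean(values) - expected
                if best is None or abs(deviation) > abs(best[1]):
                    best = (dict(zip(feats, key)), deviation, len(values))
    return expected, best


# Made-up toy records: 1 marks an adverse outcome, 0 marks none.
records = [
    {"region": "north", "access": "yes", "outcome": 0},
    {"region": "north", "access": "yes", "outcome": 0},
    {"region": "north", "access": "no", "outcome": 1},
    {"region": "north", "access": "no", "outcome": 1},
    {"region": "south", "access": "yes", "outcome": 0},
    {"region": "south", "access": "yes", "outcome": 0},
    {"region": "south", "access": "no", "outcome": 0},
    {"region": "south", "access": "no", "outcome": 1},
]

expected, (conditions, deviation, size) = find_most_deviant_subgroup(
    records, outcome="outcome", features=["region", "access"]
)
print(expected, conditions, deviation, size)
```

Swapping the global mean for a different baseline, such as the average over clean samples or over a control group, changes the expectation without changing the structure of the search.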

Girmaw gave some examples of how he and his team have used their methods to gain practical insights. One such example concerned using systematic deviation to study longitudinal change in healthcare scenarios. Looking at child mortality rates reported from demographic health surveys in different countries, the global averages suggest a significant decline over the last two decades. However, the country-wide averages given in these reports do not give the full picture. Using their methodology, the team were able to establish that there were indeed departures from the national averages, and that the reasons for these differed depending on the country. For example, in Burkina Faso access to health facilities was the key determining factor, whereas in Nigeria the geographical region was the most critical. You can find out more about this work here.
