AIhub.org

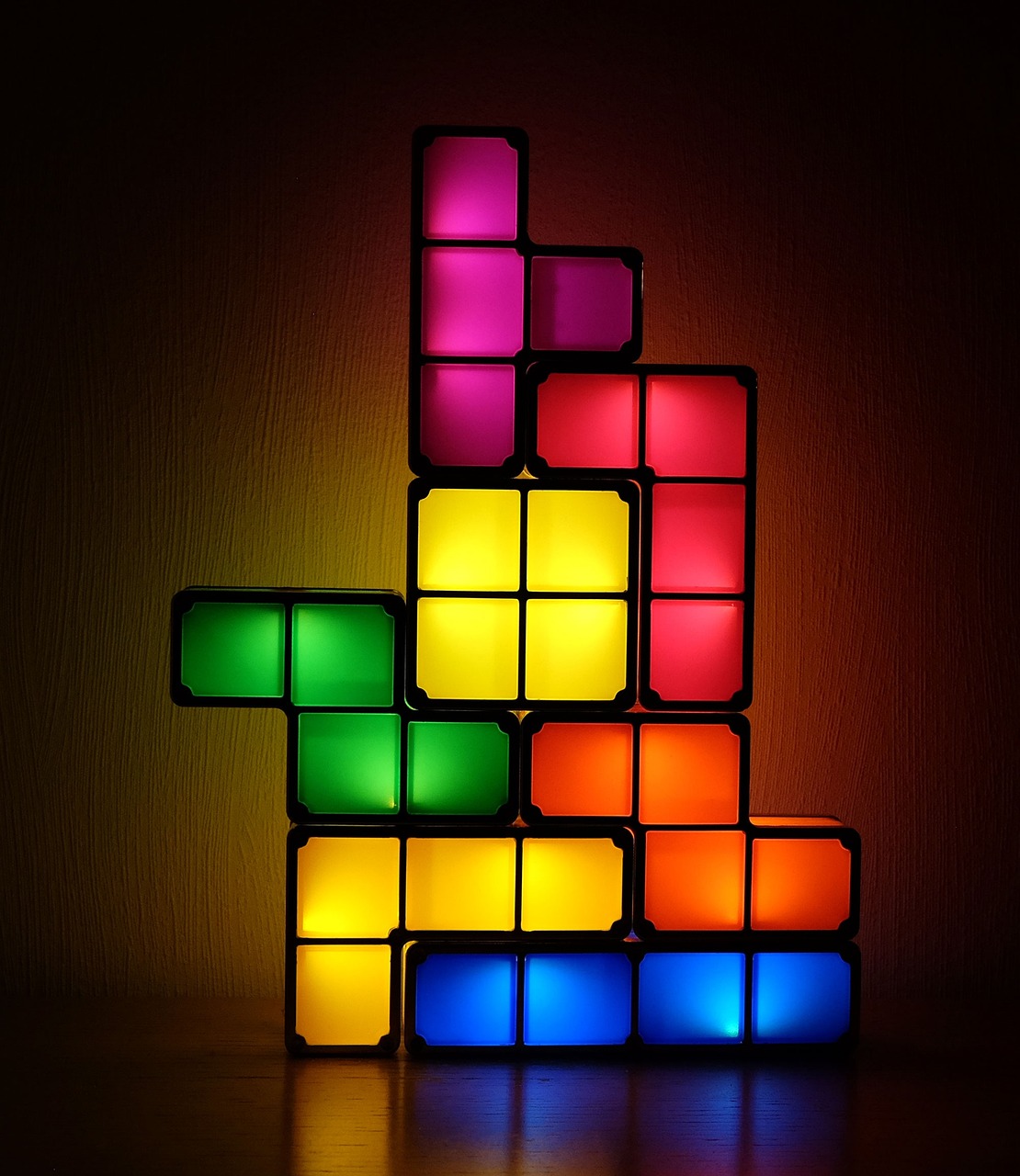

Tetris reveals how people respond to an unfair AI algorithm

By Tom Fleischman

An experiment in which two people play a modified version of Tetris – the 40-year-old block-stacking video game – revealed that players who get fewer turns perceive the other player as less likable, regardless of whether a person or an algorithm allocates the turns.

“We expected that people working in a team would care if they are treated unfairly by another human or an AI,” said Malte Jung, associate professor of information science in the Cornell Ann S. Bowers College of Computing and Information Science, whose group conducted the study.

Most studies on algorithmic fairness focus on the algorithm or the decision itself, but Jung sought to explore the relationships among the people affected by the decisions.

“We are starting to see a lot of situations in which AI makes decisions on how resources should be distributed among people,” Jung said. “We want to understand how that influences the way people perceive one another and behave towards each other. We see more and more evidence that machines mess with the way we interact with each other.”

Houston B. Claure is first author of “The Social Consequences of Machine Allocation Behavior: Fairness, Interpersonal Perceptions and Performance,” published April 27 in Computers in Human Behavior. Claure earned his master’s and doctorate in mechanical engineering, minoring in computer science.

In an earlier study, Jung and Claure had a robot choose which of two people to give a block to, and observed how each person reacted to the machine’s allocation decisions.

“We noticed that every time the robot seemed to prefer one person, the other one got upset,” said Jung, director of the Robots in Groups Lab. “We wanted to study this further, because we thought that, as machines making decisions becomes more a part of the world – whether it be a robot or an algorithm – how does that make a person feel?”

Running experiments and analyzing data with a physical robot was time-consuming, so Jung and Claure looked for a more efficient way to study this effect. That’s when Tetris – originally released in 1984, and long a useful tool for researchers looking to gain fundamental insights about human cognition, social behavior and memory – entered the picture.

“When it comes to allocating resources,” Claure said, “it turns out Tetris isn’t just a game – it’s a powerful tool for gaining insights into the complex relationship between resource allocation, performance and social dynamics.”

Using open-source software, Claure – now a postdoctoral researcher at Yale University – developed a two-player version of Tetris, in which players manipulate falling geometric blocks in order to stack them without leaving gaps before the blocks pile to the top of the screen. Claure’s version, Co-Tetris, allows two people (one at a time) to work together to complete each round.

An “allocator” – either a human or an AI, and players were told which – determines which player takes each turn. Jung and Claure designed the experiment so that a player received either 90% of the turns (the “more” condition), 10% (“less”) or 50% (“equal”).
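To make the three conditions concrete, here is a minimal Python sketch of one way an allocator could hand out turns so that a player ends up with 90%, 50% or 10% of them. The article does not say how the Co-Tetris allocator actually chose turns. The rule below, which gives the next turn to player A whenever A is behind its target share, is a hypothetical illustration, and the names `allocate_turns` and `CONDITIONS` are invented for it.

```python
from collections import Counter

# Target share of turns for player "A" in each condition, in percent.
# The percentages come from the article; the allocation rule below is a
# hypothetical illustration, not the study's actual allocator.
CONDITIONS = {"more": 90, "equal": 50, "less": 10}


def allocate_turns(n_turns: int, share_pct: int) -> list[str]:
    """Assign each turn to "A" or "B" so that A's running share tracks share_pct.

    Integer arithmetic (percent instead of a float fraction) keeps the
    comparison exact, so A ends up with ceil(share_pct * n_turns / 100) turns.
    """
    if not 0 <= share_pct <= 100:
        raise ValueError("share_pct must be between 0 and 100")
    turns: list[str] = []
    a_count = 0
    for t in range(1, n_turns + 1):
        # Give turn t to A while A's count is below its target after t turns.
        if a_count * 100 < share_pct * t:
            turns.append("A")
            a_count += 1
        else:
            turns.append("B")
    return turns


if __name__ == "__main__":
    for name, pct in CONDITIONS.items():
        counts = Counter(allocate_turns(20, pct))
        print(f"{name:>5}: A={counts['A']:2d}  B={counts['B']:2d}")
```

A deterministic rule like this keeps each player's running share close to the target throughout a round. Drawing each turn at random with probability 0.9 would hit the target only on average, which is another plausible design the sketch does not attempt to rule out.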

The researchers found, predictably, that those who received fewer turns were acutely aware that their partner got significantly more. But they were surprised to find that feelings about it were largely the same regardless of whether a human or an AI was doing the allocating.

One particularly interesting finding: When the allocation was done by an AI, the player receiving more turns saw their partner as less dominant, but when the allocation was done by a human, perceptions of dominance weren’t affected.

The effect of these decisions is what the researchers have termed “machine allocation behavior” – similar to the established phenomenon of “resource allocation behavior,” the observable behavior people exhibit based on allocation decisions. Jung said machine allocation behavior is “the concept that there is this unique behavior that results from a machine making a decision about how something gets allocated.”

The researchers also found that fairness didn’t automatically lead to better game play and performance. In fact, equal allocation of turns led, on average, to a worse score than unequal allocation.

“If a strong player receives most of the blocks,” Claure said, “the team is going to do better. And if one person gets 90%, eventually they’ll get better at it than if two average players split the blocks.”
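Claure’s first point can be illustrated with a deliberately simplified model that does not come from the study. Assume that each block a player places is worth a fixed average number of points, q_A for player A and q_B for player B, and that A receives a share s of the N blocks in a round. The expected team score S is then:

```latex
\mathbb{E}[S] = N\bigl(s\,q_A + (1-s)\,q_B\bigr) = N q_B + N s\,(q_A - q_B),
\qquad
\frac{\partial\,\mathbb{E}[S]}{\partial s} = N\,(q_A - q_B).
```

Under these assumptions, expected score rises with s whenever A is the stronger player (q_A > q_B). For example, with q_A = 2 and q_B = 1, a 90% share yields 1.9 points per block, while an equal split yields 1.5. The model holds each player’s skill fixed, so it does not capture Claure’s second point that a player who gets 90% of the turns improves with practice. It also ignores the fact that real Tetris scoring depends on line clears rather than on a per-block average.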

Rene Kizilcec, assistant professor of information science (Cornell Bowers CIS) and a co-author of the study, hopes this work leads to more research on the effects of AI decisions on people – particularly in scenarios where AI systems make continuous decisions, and not just one-off choices.

“AI tools such as ChatGPT are increasingly embedded in our everyday lives, where people develop relationships with these tools over time,” Kizilcec said. “How, for instance, teachers, students, and parents think about the competence and fairness of an AI tutor based on their interactions over weeks and months matters a great deal.”

The other co-author is Seyun Kim, a doctoral student in human-computer interaction at Carnegie Mellon University.

The work was supported by the National Science Foundation.