AIhub.org

Interview with Simone Ciarella: using machine learning to study supercooled liquids

In their paper Dynamics of supercooled liquids from static averaged quantities using machine learning, Simone Ciarella, Massimiliano Chiappini, Emanuele Boattini, Marjolein Dijkstra and Liesbeth M C Janssen introduce a machine-learning approach to predict the complex non-Markovian dynamics of supercooled liquids. In this interview, Simone tells us about supercooled liquids, and how the team used machine learning in their study.

First of all, what are supercooled liquids and why are their dynamics an interesting area for study?

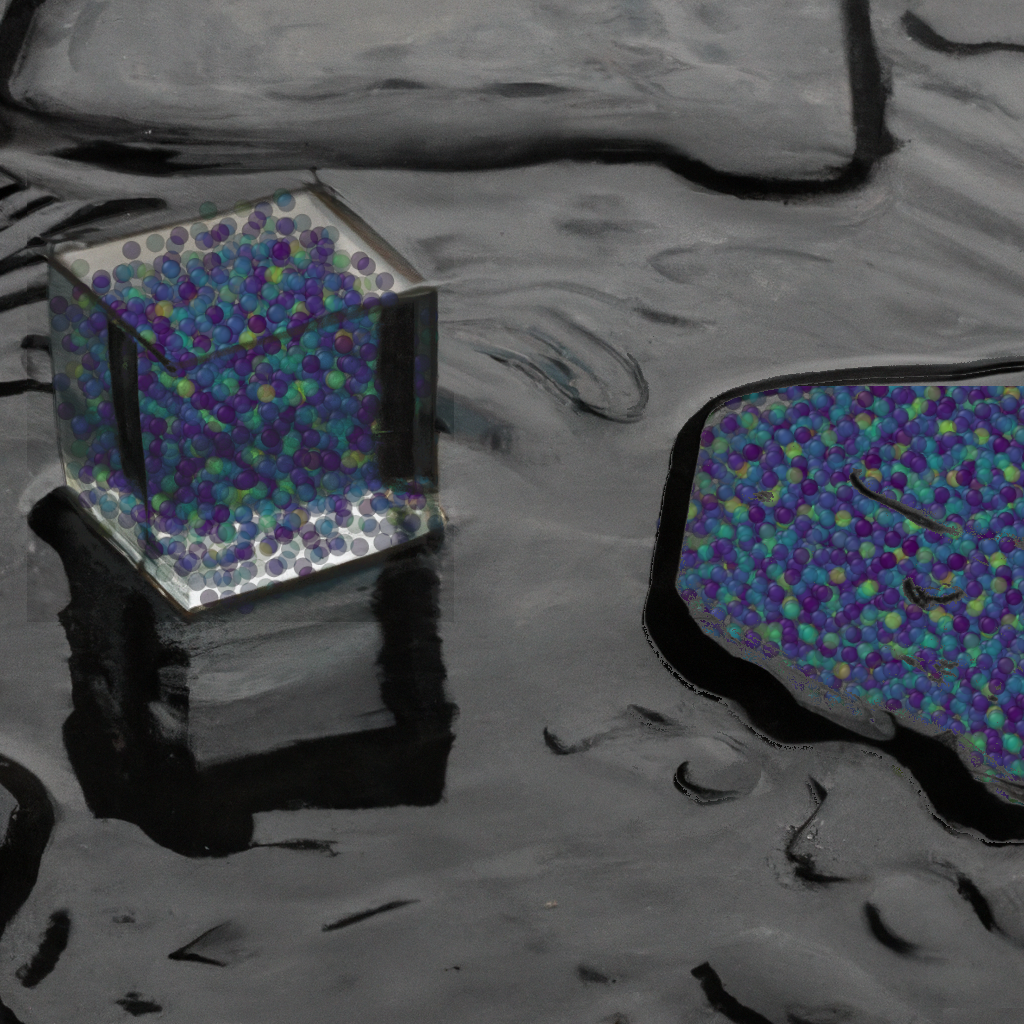

Supercooled liquids are liquids that are cooled below their normal freezing point without undergoing a phase transition into a solid state. Imagine you have a glass of water. Normally, when you lower the temperature, the water molecules slow down and arrange themselves into a solid crystal structure, which we call ice, because this structure is very stable. However, if you cool the water very carefully and quickly, you can actually cheat the molecules and prevent them from finding the solid crystal structure that they would like to form. This is what we generally call a supercooled liquid.

While significant progress has been made in understanding the behavior of supercooled liquids, many aspects of it are still considered a major open problem. This is especially apparent in a phenomenon called the glass transition: as the temperature decreases, the liquid’s viscosity increases dramatically, and its dynamics slow down significantly. This results in the liquid appearing solid-like or glassy, even though from a structural point of view the changes are minimal. In other words, the material behaves as a solid, but it retains the structure of a liquid, causing an apparent mismatch between the structure and dynamics. So far, no theory has been successful in fully explaining the connection between structure and macroscopic properties when dealing with supercooled liquids and glasses.

In addition to this challenging fundamental question, the dynamics of supercooled liquids are important for many applications. For example, they are essential in materials science for the development of new glasses and polymers, or for advanced devices, like solid-state memories and biocompatible materials. An in-depth knowledge of supercooled liquid dynamics is therefore necessary in order to fully control and design such complex materials and devices, which have practical implications for many industries.

How can machine learning help in the understanding of supercooled liquids?

The main issue in the understanding of supercooled liquids can be summarized by the question: how can we tell a liquid from a glass simply by looking at a microscopic photograph of the system?

So far, no theory has been able to address this seemingly easy question. One reason for this difficulty is that the microscopic structure of a glassy material is inherently disordered, and it is a priori not clear which structural features are the most important in a disordered configuration.

A clear direction in which machine learning can help the understanding of supercooled liquids is by providing predictions of realistic trajectories that a single particle in the material would follow starting from an observed snapshot, given the particle’s local structural environment.

Recently, this has been attempted with neural networks, support vector machines, auto-encoders, and graph neural networks, achieving remarkable success. Ideally, we would like to use those predictions to develop a theory that explains the slowdown process that characterizes supercooled liquids. In fact, these ML predictions are extremely useful, because standard methods to calculate the trajectories become too slow and computationally expensive.

More generally, ML can help us to identify subtle but important differences in the disordered structures of liquids and glasses that would be almost impossible to see with the naked eye. These machine learning predictions, however, lose their accuracy as they get closer to the glass transition, so they cannot yet be used to develop a functioning theory of the glass transition.

What was the aim of your work, and what were your main findings?

In our research, we chose to adopt a system-level approach instead of focusing on individual particle trajectories. So, instead of treating supercooled liquids as a collection of distinct particles, we represented them as a single function that captures the average arrangement of particles.

Our aim was to predict the trajectory of this collective function (a measure for the viscosity) and comprehend the changes in its behavior approaching the glass transition. The decision to employ this approach was motivated by both theoretical and experimental considerations. In fact, many advanced theories on the glass transition are based on a collective description like ours. Additionally, from an experimental standpoint, it is generally more convenient to measure collective properties rather than resolving individual particle details.
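As a concrete illustration of a function that captures the average arrangement of particles, the sketch below computes the radial distribution function g(r) from a set of particle positions. The interview does not name the function the team used, so the choice of g(r), the cubic periodic box, and the names `radial_distribution`, `box_length` and `n_bins` are all assumptions made for illustration only.

```python
import numpy as np

def radial_distribution(positions, box_length, n_bins=50):
    """Radial distribution function g(r) in a cubic periodic box.

    Illustrative helper (hypothetical name), not the authors' code.
    """
    n = positions.shape[0]
    r_max = box_length / 2.0  # largest distance valid under minimum image

    # Pairwise displacements with the minimum-image convention.
    delta = positions[:, None, :] - positions[None, :, :]
    delta -= box_length * np.round(delta / box_length)
    dist = np.sqrt((delta ** 2).sum(axis=-1))
    pair_dist = dist[np.triu_indices(n, k=1)]  # each pair counted once

    counts, edges = np.histogram(pair_dist, bins=n_bins, range=(0.0, r_max))
    r = 0.5 * (edges[1:] + edges[:-1])

    # Expected pair counts per shell for uniformly random (ideal-gas) positions.
    shell_volume = (4.0 / 3.0) * np.pi * (edges[1:] ** 3 - edges[:-1] ** 3)
    n_pairs = n * (n - 1) / 2.0
    ideal = n_pairs * shell_volume / box_length ** 3
    return r, counts / ideal

# Toy usage: uniformly random positions, for which g(r) should be close to 1.
rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 10.0, size=(400, 3))
r, g = radial_distribution(positions, box_length=10.0)
```

For a real liquid or glass snapshot, g(r) would show peaks at the typical neighbor distances instead of staying flat; the whole configuration is thereby summarized by one averaged curve rather than by individual particle coordinates.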

Our initial result was the development of a predictive strategy for this collective representation. Given a static snapshot of the system, we can effectively estimate its future behavior in terms of collective functions. Furthermore, we uncovered a crucial constraint that any theory attempting to explain the glass transition must satisfy. This constraint on what is called the “memory term” ensures that the dynamics accurately reproduce experimental observations of supercooled liquids.

Could you describe your methodology?

First, we conducted a series of molecular dynamics simulations on both supercooled liquids and glass to gather a comprehensive dataset. These simulations provided us with detailed information about the behavior of individual particles in the system.

Next, we transformed our particle-resolved description into a collective representation, following a well-established framework in condensed matter theory. This allowed us to capture the average organization of particles in the supercooled liquid or glass. The collective representation provided a higher-level view of the system, enabling a more comprehensive understanding of its dynamics. To predict the trajectory of the collective function, we employed a neural network. The neural network was trained using the initial conditions of the system as input and the desired collective trajectory as the target output.
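The training setup described here, static information in and a collective trajectory out, can be sketched with a very small neural network. Everything below is an assumption for illustration: the data are synthetic stand-ins (a toy relaxation curve whose decay time grows on cooling), the three-component input descriptor is hypothetical, and the one-hidden-layer architecture trained by plain gradient descent is not claimed to match the network used in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (hypothetical, not the paper's dataset).
n_samples, n_times, n_hidden = 200, 20, 32
temperature = rng.uniform(0.5, 1.5, size=n_samples)
times = np.logspace(-1, 2, n_times)
tau = np.exp(2.0 / temperature)  # toy relaxation time that grows on cooling

# Input: a toy "static" descriptor per state point; target: a decaying curve.
X = np.stack([temperature, 1.0 / temperature, temperature ** 2], axis=1)
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize the inputs
Y = np.exp(-times[None, :] / tau[:, None])

# One hidden layer with tanh activation.
W1 = rng.normal(0.0, 0.5, size=(X.shape[1], n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.1, size=(n_hidden, n_times))
b2 = np.zeros(n_times)

def forward(inputs):
    """Return hidden activations and the predicted curve for each input."""
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))

initial_loss = mse(forward(X)[1], Y)
learning_rate = 0.1
for _ in range(3000):
    hidden, pred = forward(X)
    # Backpropagate the mean-squared error through both layers.
    grad_pred = 2.0 * (pred - Y) / Y.size
    grad_hidden = (grad_pred @ W2.T) * (1.0 - hidden ** 2)
    W2 -= learning_rate * (hidden.T @ grad_pred)
    b2 -= learning_rate * grad_pred.sum(axis=0)
    W1 -= learning_rate * (X.T @ grad_hidden)
    b1 -= learning_rate * grad_hidden.sum(axis=0)
final_loss = mse(forward(X)[1], Y)
```

The point of the sketch is the shape of the problem: each training example pairs one static description with an entire time-dependent curve, so the network outputs the whole trajectory at once rather than stepping forward in time.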

Remarkably, the neural network demonstrated excellent performance in predicting the behavior of the collective function for both liquids and glasses. Subsequently, we formulated a supercooled liquid theory in the form of an exact equation that encapsulates all the approximations and simplifications into a single unknown function (the memory term). This equation allows us to express the dynamics of the system in a more concise and manageable form. The equation is still unsolvable, but it condenses all the unknown phenomena into a single function.
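To give a sense of what an exact equation with a memory term can look like, the liquid-state literature commonly writes such equations as a generalized Langevin equation. The form below is a standard textbook form and is given here as an assumption, since the interview does not spell out the equation; the symbols $F(k,t)$ (the collective function at wavenumber $k$), $\Omega(k)$ (a frequency fixed by static quantities) and $K(k,t)$ (the memory term) are likewise illustrative.

$$
\frac{\partial^2 F(k,t)}{\partial t^2} + \Omega^2(k)\,F(k,t) + \int_0^t K(k,t-s)\,\frac{\partial F(k,s)}{\partial s}\,\mathrm{d}s = 0
$$

In a form like this, the integral makes the dynamics non-Markovian: the evolution at time $t$ depends on the whole history of $F$ through $K$. If $K$ were known, the equation could be integrated forward from the initial condition, which is why all the unknown physics is concentrated in that single function.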

To explore the general features of this approximate memory term in the equation, we employed an evolutionary strategy. This strategy involved iteratively refining and optimizing the parameters of the approximate term to identify its key characteristics. By leveraging this approach, we gained insights into the underlying principles governing the behavior of the collective function and its relationship to the system’s initial conditions. Through this multi-step process, combining simulations, collective observables, neural network predictions, and an evolutionary strategy, we made significant progress in predicting the dynamics of supercooled liquids and glasses, and were able to extract valuable information about their collective behavior and key driving factors.
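The iterative refinement described above can be sketched with a simple elitist (mu + lambda) evolution strategy. This is a minimal sketch under stated assumptions: the two-parameter exponential `kernel` is a hypothetical stand-in for an approximate memory term, the target is synthetic, and the loss simply compares the candidate curve with that target. The interview does not specify the parametrization, loss, or evolutionary algorithm the authors used.

```python
import numpy as np

def kernel(params, t):
    """Hypothetical two-parameter memory term: amplitude * exp(-rate * t)."""
    amplitude, rate = params
    return amplitude * np.exp(-rate * t)

def evolve(loss, x0, sigma=0.5, n_children=40, n_parents=10,
           generations=150, decay=0.97, seed=0):
    """Elitist (mu + lambda) evolution strategy with a shrinking mutation size."""
    rng = np.random.default_rng(seed)
    parents = np.tile(np.asarray(x0, dtype=float), (n_parents, 1))
    for _ in range(generations):
        # Each child is a Gaussian mutation of a randomly chosen parent.
        chosen = rng.integers(0, n_parents, size=n_children)
        noise = rng.normal(size=(n_children, parents.shape[1]))
        children = parents[chosen] + sigma * noise
        # Parents compete with children; the best candidates survive.
        pool = np.vstack([parents, children])
        scores = np.array([loss(candidate) for candidate in pool])
        parents = pool[np.argsort(scores)[:n_parents]]
        sigma *= decay  # search more finely as the population converges
    return parents[0]  # best candidate of the final generation

# Synthetic target generated from known parameters (amplitude 2.0, rate 0.7).
t = np.linspace(0.0, 10.0, 50)
target = kernel((2.0, 0.7), t)

def loss(params):
    return float(np.mean((kernel(params, t) - target) ** 2))

best = evolve(loss, x0=(1.0, 1.0))
```

An evolution strategy needs only loss evaluations, not gradients, which makes this family of methods convenient when the loss involves solving an equation for each candidate term.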

What work are you planning next in this area?

We have outlined two directions for our ongoing research.

Firstly, on the theoretical side, we aim to further explore the findings obtained from our evolutionary strategy, specifically regarding the memory term. To enhance the realism of our theoretical model, we are actively developing novel techniques to estimate this term more accurately using data and simulations. By refining our understanding of the memory term, we expect to gain deeper insights into the long-term dynamics and memory effects within supercooled liquids and glasses.

In the second direction, we are incorporating artificial intelligence (AI) techniques, particularly generative AI, to complement molecular dynamics simulations. By integrating generative AI with simulation methods, we aim to create hybrid approaches that provide more precise, authentic, and broad predictions. These hybrid methods have the potential to significantly improve our ability to explore and understand the dynamics of supercooled liquids and glasses.

About Simone


Dr Ciarella is a young researcher who earned his Ph.D. in Physics in 2021. With a strong background in theoretical and computational physics cultivated during his academic journey in Rome, he embarked on his Ph.D. in Eindhoven, focusing on smart materials capable of shape-changing and self-healing. Driven by a passion for interdisciplinary research, he delved into the realm of machine learning during his postdoc at ENS Paris, specifically concentrating on generative AI. Armed with an in-depth understanding of physics and expertise in machine learning techniques, his goal is to advance both fields by bridging the gap between theory and data-driven methodologies.

Read the research in full

Dynamics of supercooled liquids from static averaged quantities using machine learning, Simone Ciarella, Massimiliano Chiappini, Emanuele Boattini, Marjolein Dijkstra and Liesbeth M C Janssen, Machine Learning: Science and Technology.