AIhub.org

Machine learning enhances X-ray imaging of nanotextures

Real-space imaging of nano-textures in crystalline thin films. From Real-space imaging of polar and elastic nano-textures in thin films via inversion of diffraction data, reproduced under a CC BY 4.0 licence.

By Syl Kacapyr

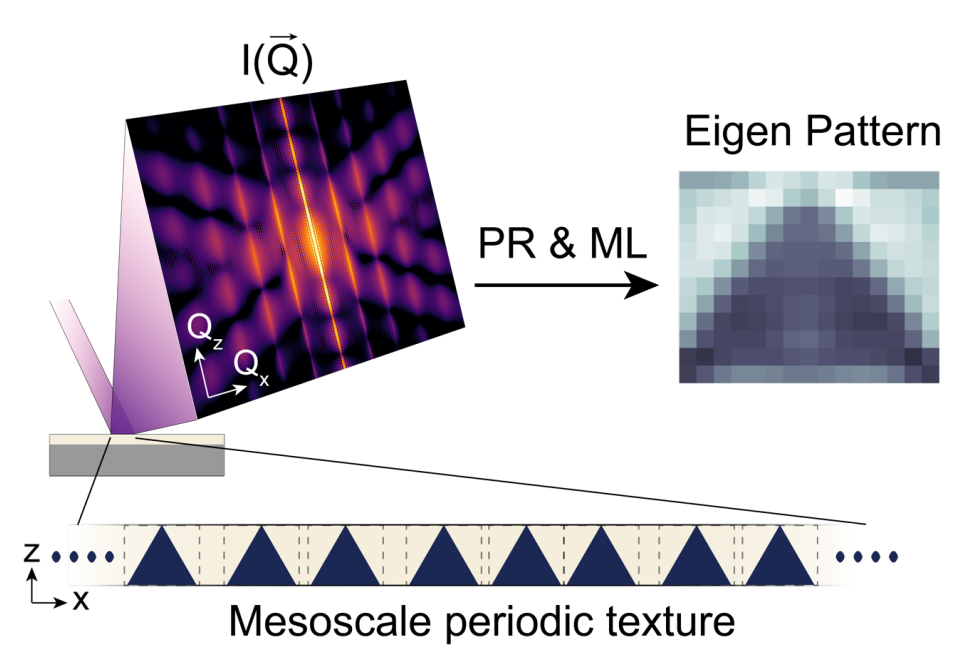

Using a combination of high-powered X-rays, phase-retrieval algorithms and machine learning, researchers revealed the intricate nanotextures in thin-film materials, offering scientists a new, streamlined approach to analyzing potential candidates for quantum computing and microelectronics, among other applications.

Scientists are especially interested in nanotextures that are distributed non-uniformly throughout a thin film because they can give the material novel properties. The most effective way to study these nanotextures is to visualize them directly, which typically requires complex electron microscopy that does not preserve the sample.

The new imaging technique overcomes these challenges by using phase retrieval and machine learning to invert conventionally collected X-ray diffraction data into real-space images of the material at the nanoscale. Such data can be produced at facilities like the Cornell High Energy Synchrotron Source, where the data for this study were collected.
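The article does not spell out the inversion step. As a rough illustration of what "phase retrieval" means in general, the sketch below implements the textbook error-reduction algorithm (Gerchberg-Saxton/Fienup style) on a toy 1D signal. It is not the authors' method, which also relies on machine learning and works on measured diffraction data. The function names, the toy object, and the assumptions of a known support, a real non-negative object and noise-free Fourier moduli are all hypothetical choices made for illustration. The loop alternates between two constraints: in Fourier space it keeps the current phase estimate but imposes the measured moduli, and in real space it zeroes everything outside the assumed support.

```python
import cmath
import math
import random

# Hypothetical toy example of generic phase retrieval; NOT the study's algorithm.


def dft(x):
    """Naive O(n^2) discrete Fourier transform (fine for toy sizes)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * j * k / n) for k in range(n))
            for j in range(n)]


def idft(spectrum):
    """Inverse of dft() above."""
    n = len(spectrum)
    return [sum(spectrum[j] * cmath.exp(2j * math.pi * j * k / n) for j in range(n)) / n
            for k in range(n)]


def error_reduction(magnitudes, support, iterations=100, seed=0):
    """Error-reduction phase retrieval for a 1D, real, non-negative object.

    magnitudes: measured Fourier moduli |F(k)| (stand-in for diffraction data).
    support: truthy where the object may be non-zero (assumed known).
    Returns the final real-space estimate and the normalized Fourier error
    of each iterate, which this algorithm never increases.
    """
    rng = random.Random(seed)
    norm = math.sqrt(sum(m * m for m in magnitudes))
    # Random non-negative starting guess, confined to the support.
    guess = [complex(rng.random(), 0.0) if s else 0j for s in support]
    errors = []
    for _ in range(iterations):
        spectrum = dft(guess)
        # How far the current guess is from matching the measured moduli.
        errors.append(math.sqrt(sum((abs(f) - m) ** 2
                                    for f, m in zip(spectrum, magnitudes))) / norm)
        # Fourier-space constraint: keep the estimated phases, impose measured moduli.
        spectrum = [m * cmath.exp(1j * cmath.phase(f)) for f, m in zip(spectrum, magnitudes)]
        estimate = idft(spectrum)
        # Real-space constraint: zero outside the support, real and non-negative inside.
        guess = [complex(max(v.real, 0.0), 0.0) if s else 0j
                 for v, s in zip(estimate, support)]
    return guess, errors


# Toy usage: simulate "measured" moduli from a known object, then discard its phases.
true_object = [0, 0, 1, 3, 2, 4, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
support = [2 <= k <= 6 for k in range(len(true_object))]
magnitudes = [abs(f) for f in dft(true_object)]
recovered, errors = error_reduction(magnitudes, support)
print(f"Fourier error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

Only the moduli enter the reconstruction; the phases are what the loop has to recover. Because each step projects onto a constraint set, the Fourier error cannot grow from one iteration to the next, but plain error reduction can stall in local minima, which is one reason practical methods use refined variants or, as in this work, add machine learning.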

The use of X-ray diffraction makes the technique more accessible to scientists and allows for imaging a larger portion of the sample, said Andrej Singer, assistant professor of materials science and engineering and David Croll Sesquicentennial Faculty Fellow in Cornell Engineering, who led the research with doctoral student Ziming Shao.

“Imaging a large area is important because it represents the true state of the material,” Singer said. “The nanotexture measured by a local probe could depend on the choice of the probed spot.”

Another advantage of the new method is that it does not require the sample to be broken apart. This enables dynamic studies of thin films, for example illuminating a film with light to see how its structures evolve.

“This method can be readily applied to study dynamics in-situ or operando,” Shao said. “For example, we plan to use the method to study how the structure changes within picoseconds after excitation with short laser pulses, which might enable new concepts for future terahertz technologies.”

The technique was tested on two thin films. The first had a known nanotexture and was used to validate the imaging results. In the second, a Mott insulator whose physics is associated with superconductivity, the researchers discovered a morphology that had not been observed in the material before: a strain-induced nanopattern that forms spontaneously during cooling to cryogenic temperatures.

“The images are extracted without prior knowledge,” Shao said, “potentially setting new benchmarks and informing novel physical hypotheses in phase-field modeling, molecular dynamics simulations and quantum mechanical calculations.”

The research was supported by the U.S. Department of Energy and the National Science Foundation.

Read the research in full

Real-space imaging of polar and elastic nano-textures in thin films via inversion of diffraction data, Ziming Shao, Noah Schnitzer, Jacob Ruf, Oleg Y. Gorobtsov, Cheng Dai, Berit H. Goodge, Tiannan Yang, Hari Nair, Vlad A. Stoica, John W. Freeland, Jacob Ruff, Long-Qing Chen, Darrell G. Schlom, Kyle M. Shen, Lena F. Kourkoutis, Andrej Singer