AIhub.org

Interview with Changhoon Kim – enhancing the reliability of image generative AI

The AAAI/SIGAI Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. This year, 30 students have been selected for this programme, and we’ll be hearing from them over the course of the next few months. Our first interviewee is Changhoon Kim.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I am pursuing my Ph.D. at Arizona State University, a vibrant hub of innovation located in one of the U.S.’s sunniest cities. This unique setting provides a conducive atmosphere for focused research, particularly during summer when the intense heat encourages more indoor lab work.

My research focuses on enhancing the reliability of Image Generative Artificial Intelligence (Gen-AI) models, like DALL·E 3 and Stable Diffusion. These advanced technologies have evolved from initial research concepts to powerful, production-grade tools, influencing diverse fields such as entertainment, art, journalism, and education. However, the rise of these Gen-AI models brings with it significant challenges. Their widespread accessibility, while beneficial in democratizing AI, also creates vulnerabilities. These models can be exploited by malicious actors for activities like spreading misinformation or creating deepfakes, which have serious implications for privacy and public opinion. Addressing these risks is crucial.

My work is dedicated to pinpointing and mitigating the inherent shortcomings of these models. I aim to fortify their reliability, thereby ensuring their responsible and ethical use. By doing so, my research strives to balance the technological advancements these models represent with the imperative to protect societal welfare in an era of rapid digital transformation.

Could you give us an overview of the research you’ve carried out during your PhD?

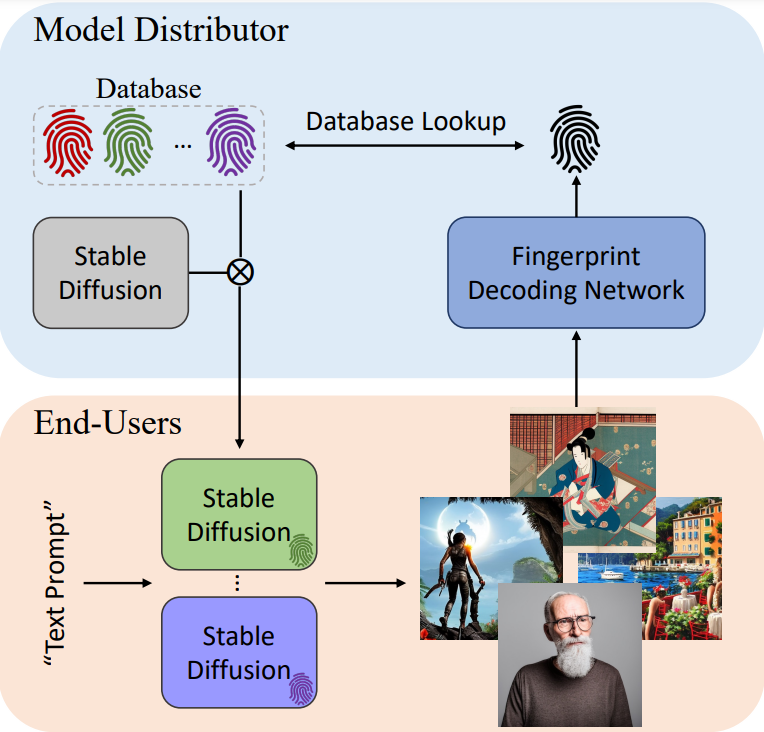

My PhD research at Arizona State University addresses the emerging challenges in the field of Generative Artificial Intelligence (Gen-AI), particularly the risks posed by the widespread accessibility of these models. To mitigate these risks, I have developed a novel fingerprinting technique (see image above) tailored for Gen-AI models. This technique diverges from traditional watermarking methods, such as those based on the discrete wavelet transform, which embed a mark after generation and therefore depend on the user choosing to apply it. Instead, it incorporates a user-specific fingerprint within the Gen-AI model’s generation process itself, making it invisible to end-users but extractable through a dedicated decoding module.

This advancement significantly enhances the traceability and accountability of Gen-AI outputs. By embedding the fingerprint at the point of model download, the system ensures that every output inherently carries the user’s unique fingerprint. This approach effectively prevents users from bypassing the fingerprinting process, thereby maintaining the integrity of the Gen-AI outputs. If an output is misused, its fingerprint enables the identification of the responsible party. This represents a crucial step towards ensuring the secure and responsible use of Gen-AI technologies.
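To make the idea concrete, here is a minimal toy sketch in Python. It is an illustration only and not the method from the research described above: the "generator" is a small linear map, and the names `build_carriers`, `fingerprint_model`, `generate`, and `decode` are hypothetical. It assumes the fingerprint is written into the model's parameters once, when a user's copy is created, and that the carrier directions are orthogonal to the content the model can produce. Under those assumptions, every output carries the user's bits and a separate decoder can read them back.

```python
import numpy as np

def build_carriers(base_weights, n_bits, rng):
    """Orthonormal carrier directions, orthogonal to the toy generator's
    output space (a simplifying assumption so content never masks the bits)."""
    dim, latent = base_weights.shape
    stacked = np.hstack([base_weights, rng.standard_normal((dim, n_bits))])
    q, _ = np.linalg.qr(stacked)           # reduced QR; needs dim >= latent + n_bits
    return q[:, latent:latent + n_bits].T  # shape (n_bits, dim)

def fingerprint_model(base_weights, bits, carriers, strength=0.05):
    """Create a per-user model copy whose bias term encodes the user's bits."""
    signs = 2.0 * np.asarray(bits) - 1.0   # map {0, 1} to {-1, +1}
    bias = strength * (signs @ carriers)   # small offset baked into the parameters
    return base_weights, bias

def generate(model, z):
    """Every output includes the fingerprint; the user cannot skip this step."""
    weights, bias = model
    return weights @ z + bias

def decode(output, carriers):
    """Dedicated decoder: project onto each carrier and read the sign."""
    return (carriers @ output > 0).astype(int).tolist()

rng = np.random.default_rng(0)
base = rng.standard_normal((64, 8))        # toy generator: 8-d latent to 64-d "image"
carriers = build_carriers(base, n_bits=16, rng=rng)

user_bits = [int(b) for b in rng.integers(0, 2, size=16)]
user_model = fingerprint_model(base, user_bits, carriers)

sample = generate(user_model, rng.standard_normal(8))
recovered = decode(sample, carriers)
```

The contrast with post-generation watermarking is that nothing here runs after `generate`: the fingerprint lives in the parameters of the distributed copy. A real image model is nonlinear, so the orthogonality shortcut used in this sketch would not apply to it.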

Is there an aspect of your research that you are particularly proud of?

A particularly compelling element of my research is its applicability across the diverse domains of Generative Artificial Intelligence (Gen-AI). While Gen-AI models produce a broad range of outputs, including audio, video, and 3D models, most research on attribution has been limited to images and language. My work, however, is distinguished by its adaptability to these other modalities. A notable achievement was extending my method to audio generative models together with a master’s student working under my direction. This accomplishment highlights the versatility of my research and its significance in setting new standards for ensuring accountability and traceability across the multifaceted uses of Gen-AI technologies.

What are your plans for building on your PhD research – what aspects would you like to investigate next?

Building upon my PhD research, I plan to transition from solely focusing on reactive methods for assigning responsibility for content generated by Gen-AI, such as fingerprinting, to adopting a more proactive approach. While current fingerprinting methods are crucial, they primarily address issues post-factum, after societal harm has been inflicted by malicious users. This situation underscores the necessity for strategies that are not only reactive but also proactive in preventing such misuse in the first place.

Specifically, my focus will be on machine unlearning. The current state of Gen-AI allows malicious actors to generate content without the necessary permissions. This situation urgently calls for the development of methods that can judiciously refine Gen-AI’s knowledge base, removing sensitive content while preserving overall performance.

What made you want to study AI?

My journey towards studying AI was a gradual evolution from my background in Industrial Engineering, where my focus up to my master’s degree was on optimization to solve industry-related problems. The pivotal moment came during a conversation with my friend and collaborator, Kyle Min (Intel Labs). He opened my eyes to the fascinating complexities in computer vision, especially how deep learning models mirror human perception. This conversation sparked my interest in the field, as it gave me a new perspective on how AI could intersect with and enhance my previous expertise in optimization. It was this blend of optimization knowledge and the groundbreaking potential of AI in fields like computer vision that ultimately steered me towards pursuing AI as my research focus.

What advice would you give to someone thinking of doing a PhD in the field?

Embarking on a PhD, especially in a field as demanding as AI, is a journey that often extends beyond five years and is filled with its own unique challenges. One of the most important pieces of advice I can offer is to have a clear understanding of your dream or goal. This clarity is crucial because, during the course of your PhD, you will likely face periods of self-doubt and criticism. It is common to experience feelings akin to imposter syndrome, questioning your knowledge and abilities, and to find yourself comparing your progress with that of other scholars, colleagues, or friends who have taken different paths.

Despite these challenges, if your dream aligns with your research, the PhD journey can be immensely rewarding. It is not just about academic or professional growth; it’s also a period of significant personal development. You learn to master your mindset, control your emotions, and gain a deeper understanding of life and your place in it. If your passion for research is driven by a dream that resonates with your personal and professional aspirations, then a PhD can be a profoundly fulfilling experience, replete with both challenges and triumphs.

Could you tell us an interesting (non AI-related) fact about you?

Outside of my academic pursuits, I have found a unique way to balance the intense mental demands of a PhD journey: Brazilian Jiu-Jitsu (BJJ). Pursuing a doctorate involves constant mental engagement, often stretching right up to bedtime, which can quickly escalate into extreme stress or even burnout. Recognizing the need for a counterbalance, I took up BJJ in my first year of the PhD program. This form of martial arts offers a complete departure from my research activities. During training, I immerse myself in a world entirely different from my academic work, which allows me to momentarily set aside my research thoughts. This “research-off” period has been crucial in relieving stress, maintaining mental well-being, and ensuring a smoother transition to restful sleep. BJJ has not just been a physical activity for me, but a therapeutic escape, integral to my overall PhD experience.

About Changhoon

Changhoon Kim is nearing the completion of his Ph.D. in Computer Engineering at Arizona State University, under the supervision of Professor Yezhou Yang. His primary research is centered on the creation of trustworthy and responsible machine learning systems. He has devoted recent years to the development of user-attribution methods for generative models, an indispensable area of research in the age of AI-generated hyper-realistic content. His research extends to various modalities, including image, audio, video, and multi-modal generative models.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI