AIhub.org

Interview with Elizabeth Ondula: Applied reinforcement learning

The AAAI/SIGAI Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. This year, 30 students have been selected for this programme, and we’ll be hearing from them over the course of the next few months. In this interview, we meet Elizabeth Ondula and find out about her work applying reinforcement learning in different domains.

Where are you studying for your PhD and what’s the topic of your research?

I’m currently pursuing my PhD in Computer Science at the University of Southern California in Los Angeles, a city I’d describe as diverse in culture and history. Here, I’ve had the opportunity to cross paths with brilliant artists, writers, scientists, engineers, musicians, and actors, all contributing to a dynamic environment that enriches my PhD experience.

I’m a member of the Autonomous Networks Research Group, advised by Professor Bhaskar Krishnamachari. My focus is applied reinforcement learning; more specifically, for the past three years I have been applying it to public health. This includes stochastic epidemic modeling, developing a simulation environment, and evaluating reinforcement learning algorithms. My other research interests include understanding decision processes in large language model (LLM)-based multi-agent systems and applying graph neural networks to autonomous exploration.

Could you give us an overview of the research you’ve carried out so far during your PhD?

In my first and second years, I explored how to apply reinforcement learning to find sustainable configurations of farming land that maximize crop yield while minimizing soil degradation.

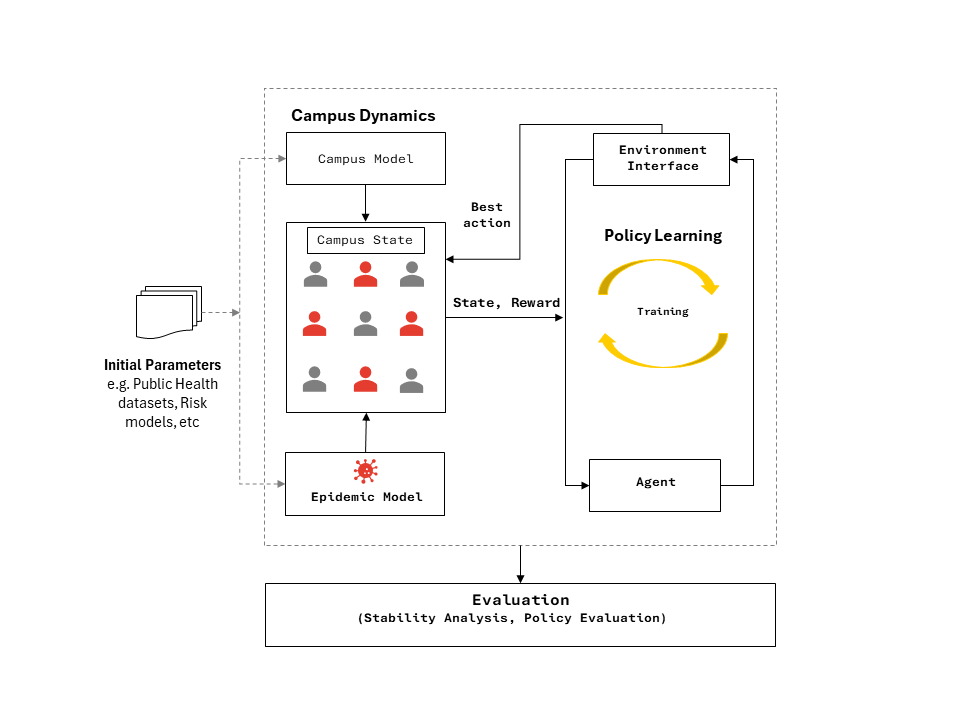

Since my third year, I have been working on applying reinforcement learning to develop and evaluate public health policies and decisions, especially in epidemic scenarios. The resulting artifact is a simulation tool for exploring and refining policies attuned to diverse epidemic dynamics. The current scope of the research is the transmission of infectious diseases, with an emphasis on person-to-person spread, whether through indirect contact or the more insidious airborne route.

I have also collaborated with other researchers and fellow students on understanding how sentiment affects deliberative opinions in multi-agent systems using LLMs, and on applying graph neural networks in robot exploration.

Could you tell us more about the epidemic modelling? Did this project come about because of the COVID pandemic?

The idea of a stochastic epidemic model is that it is supposed to reflect a real-world epidemic scenario. We explore the different policies that an AI agent comes up with, and the idea is to understand how to deal with an epidemic. We do a lot of evaluation of the policies because we want to understand how the model is making the decisions it makes, and whether those decisions are safe.

This research was motivated by my experience during the peak of the pandemic. It was a challenging time: I lost my dad and was unable to travel for his burial. The travel restrictions in place made me think about why these public policies are static, and how they could be made more adaptable to evolving conditions. My research addresses this question, including figuring out safety protocols that could apply in various spaces such as educational institutions, workplaces and transportation. I also look into how to evaluate the AI systems that generate such policies and protocols.

The main work is around having the AI agent figure out, for example, how many students could be allowed in a campus class while an epidemic is going on: how many students could safely be let back without compromising the safety of the community. So, could we come up with policies that are dynamic and can be adapted to different situations? Our work looked specifically at occupancy levels for classrooms, but it could be extended to figuring out capacities for hospitals, or travel restrictions.
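The interview does not describe the tool’s internals, so the following is only a minimal sketch of the general idea, assuming a toy stochastic SIR (susceptible–infected–recovered) model of a single class. The class name `CampusEpidemicEnv`, the occupancy levels, and the parameters `beta`, `gamma` and `infection_weight` are all hypothetical. Tabular Q-learning stands in for whatever reinforcement learning algorithms the actual tool evaluates: each day the agent picks a classroom occupancy level and is rewarded for in-person attendance but penalized for new infections.

```python
import math
import random

# Hypothetical occupancy levels the agent can choose (fraction of class capacity).
ACTIONS = [0.0, 0.25, 0.5, 0.75, 1.0]


class CampusEpidemicEnv:
    """Toy stochastic SIR model of one class (illustrative assumption only)."""

    def __init__(self, n=100, initial_infected=5, beta=0.8, gamma=0.1,
                 infection_weight=5.0, horizon=30, seed=0):
        self.n, self.i0 = n, initial_infected
        self.beta, self.gamma = beta, gamma          # hypothetical rates
        self.infection_weight = infection_weight     # hypothetical safety penalty
        self.horizon = horizon                       # episode length in days
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.s, self.i, self.r = self.n - self.i0, self.i0, 0
        self.t = 0
        return self.observe()

    def observe(self):
        # Bucket the infected count (width 5, capped at 10) to keep the Q-table small.
        return min(self.i // 5, 10)

    def binomial(self, trials, p):
        # Stochastic draw: each of `trials` individuals succeeds with probability p.
        return sum(self.rng.random() < p for _ in range(trials))

    def step(self, occupancy):
        # Infection risk per susceptible grows with occupancy and prevalence.
        p_inf = 1.0 - math.exp(-self.beta * occupancy * self.i / self.n)
        new_inf = self.binomial(self.s, p_inf)
        new_rec = self.binomial(self.i, self.gamma)
        self.s -= new_inf
        self.i += new_inf - new_rec
        self.r += new_rec
        self.t += 1
        # Reward attendance, penalize new infections (weights are assumptions).
        reward = occupancy - self.infection_weight * new_inf / self.n
        done = self.t >= self.horizon
        return self.observe(), reward, done, new_inf


def train_q_learning(env, episodes=500, alpha=0.1, discount=0.95,
                     epsilon=0.1, seed=1):
    """Epsilon-greedy tabular Q-learning over the bucketed infection state."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(11)]
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda k: q[state][k])
            nxt, reward, done, _ = env.step(ACTIONS[a])
            target = reward if done else reward + discount * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def greedy_policy(q, state):
    """Occupancy level with the highest learned value in this state."""
    return ACTIONS[max(range(len(ACTIONS)), key=lambda k: q[state][k])]


if __name__ == "__main__":
    q_table = train_q_learning(CampusEpidemicEnv(seed=42))
    for bucket in (0, 2, 6):
        print("infected bucket", bucket, "-> occupancy", greedy_policy(q_table, bucket))
```

The learned table maps each infection level to an occupancy decision, which is the kind of dynamic, state-dependent policy described above, as opposed to a fixed rule. Evaluating whether such a policy is safe would mean rolling it out over many random seeds and inspecting the resulting infection curves.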

Architecture of the tool applied to campus scenarios.


Could you tell us more about the project on sustainable configurations of crops?

Prior to my work on epidemic policies, I was applying RL to find sustainable configurations of farming land: basically, which configurations would maximize yield while minimizing soil degradation. My specific interest was whether we could come up with a sustainable configuration of crops that would let us avoid pesticides or fertilizer. The inspiration was what is known as the “three sisters” cropping system, where corn, beans and squash are grown together. The corn acts as a support for the climbing beans, the beans add nutrients, and the squash helps maintain moisture in the soil. It was exploratory research, trying to find different combinations of crops that worked in that way and asking whether, using those combinations, we could maximize yield and minimize the effect on the environment. This was a very ambitious project and was where I first started exploring how RL could be applied to different domains. RL has mostly been applied to robotics or games, so in my work I tried to focus more on applications with social impact.

What are your plans for building on the research so far during your PhD?

I am currently working towards open-sourcing my tools, in the hope that they could foster collaboration among different stakeholders. For example, the epidemic simulation tool mentioned above could bridge the gap between epidemiologists, AI researchers, policymakers and administrators.

So far, I’ve been working on the use case of a campus setting and looking at airborne transmission. Could the same approach, or framework, also be applied to other types of epidemics, like dengue fever? That is something else I’m exploring. Other use cases I’m thinking about are hospitals, for example how staff decide to move patients if there is an outbreak of a virus.

What was it that made you want to study AI?

Firstly, I was just driven by curiosity about developing intelligent tools and software that are capable of performing tasks autonomously. My ultimate goal is to have tools that can assist in decision-making processes and address challenges that are faced in our society. I’m interested in how AI tools could enhance our day-to-day lives. Personally, I’d like to be able to offload some of the decisions I have to make repetitively. The push to get into a PhD program came from being inspired by people in the field. Prior to starting my PhD, I was working as a software engineer at IBM Research and there I saw many different scientists, and that’s what I wanted to become. Just seeing what other people were able to achieve and how they were thinking, that gave me the push to get into the field.

Do you have any advice for anyone who’s thinking about doing a PhD in the field?

I would say to not be afraid to take a chance, or to feel intimidated by what already exists. In my view, AI is a very diverse field and it’s always evolving, and in need of diverse perspectives. So, the more people coming from different backgrounds (whether that’s a different field, or a different community), the better. This can help make the field stronger. There will also always be a lot of problems to address, and AI can help, but it can only help if there are enough people working on those problems.

Could you tell us an interesting non-AI related fact about you?

I really enjoy social gatherings, including going to parties or just being out with people. I also just started writing poetry, and I signed up for a poetry class over the summer which I’m looking forward to attending.

Are there any books, talks, or other resources / sources of inspiration that have been particularly helpful to you during your PhD?

As well as my mentors and advisor, my friends and community, I’ve found the following resources helpful during my PhD:

- Books

- Science and Method, Henri Poincaré

“If the scientist had an infinity of time at his disposal, it would be sufficient to say to him, ‘Look, and look carefully’. But since he has not time to look at everything, and above all to look carefully, and since it is better not to look at all than to look carelessly, he is forced to make a selection.”

- Ikigai: The Japanese Secret to a Long and Happy Life, H García and F Miralles (2017)

- Thinking in Systems: A Primer, Donella H. Meadows (2008)

- The Poetry and Music of Science: Comparing Creativity in Science and Art, Tom McLeish (2019)

- Good vs Good: Why the 8 Great Goods Are Behind Every Good (and Bad) Decision, John C Beck

- Lean In, Sheryl Sandberg

- Talks

- The danger of a single story | TED, Chimamanda Ngozi Adichie

- Know your inner saboteurs: Shirzad Chamine at TEDxStanford

- 2008 ACM A.M. Turing Award Lecture: The Power of Abstraction, Barbara Liskov

- Other resources

About Elizabeth Ondula


Elizabeth is an Electrical Engineer from the Technical University of Kenya and is currently a PhD student in Computer Science at USC. She is a member of the Autonomous Networks Research Group and co-organizes a bi-weekly reinforcement learning group, SUITERS-RL. Prior to academia, she was a Software Engineer at IBM Research in Kenya, Head of Product Development at Brave Venture Labs, and Co-lead of Hardware Research at iHub Nairobi. Outside academia and engineering, she enjoys music, poetry, fine arts, photography, journaling, and outdoor activities.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI