ΑΙhub.org

Interview with Sherry Yang: Learning interactive real-world simulators

Sherry Yang, Yilun Du, Kamyar Ghasemipour, Jonathan Tompson, Leslie Kaelbling, Dale Schuurmans and Pieter Abbeel won an outstanding paper award at ICLR2024 for their work Learning Interactive Real-World Simulators. In the paper, they introduce a universal simulator (called UniSim) which takes image and text input to train a robot simulator. We spoke to Sherry about this work, some of the challenges, and potential applications.

Could you just give us a quick definition of a universal simulator and then outline some of the interesting applications for such a simulator?

There are two components – there is the universal component and then there is a simulator component. Looking at the simulator component first – typically when people build a simulator, they do this based on an understanding of the real world, using physics equations. Researchers will build a simulator to study how things work, such as how cars move, for example. One observation is that when we have very good simulators, we can actually train very good agents. AlphaGo is a very successful example of a perfect simulator – we know the rules of the game of Go, which we can code up and then AlphaGo agents directly interact with the rules, play a game, and then figure out whether the game was a win or a loss. Because the simulator is perfect, any algorithms people develop in the simulator actually transfer to the real world.

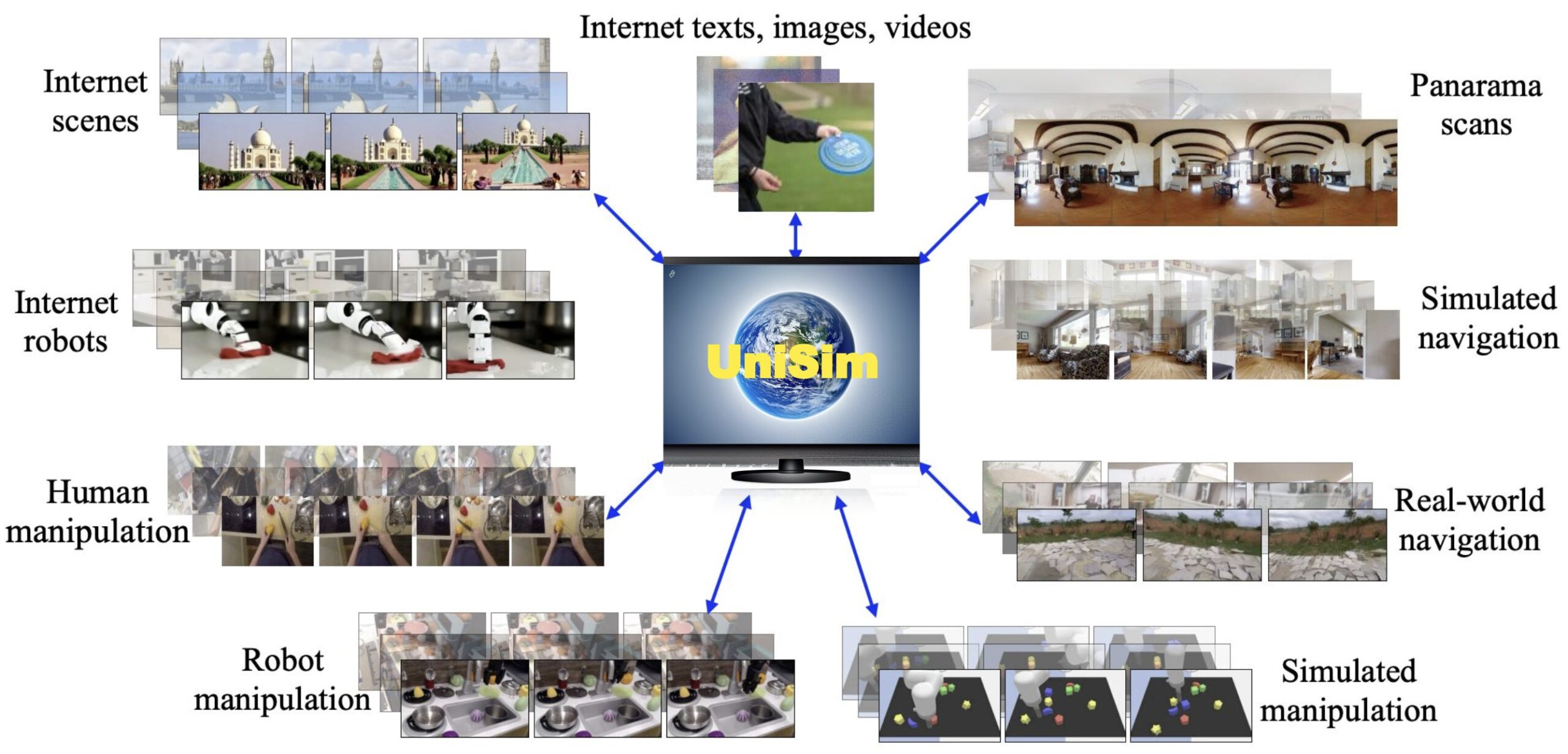

Now, the issue is that for other simulators that try to model the real-world (going beyond games with perfect rules), it’s really hard to write a simulator using code that comprehensively represents all of the real-world interactions. This is where the universal part comes in and is what we are trying to achieve with our model UniSim. UniSim is, in some sense, more of a visual universal simulator. It takes an image as an input, and some action specified by natural language (such as “move forward”), and generates a video that corresponds to executing that action from the starting image. The video it generates is a simulation of what would have happened if that person moved forward, for example. Because the model is trained on a lot of real-world videos, it really reflects real-world motion. That’s where the word “universal” comes in – different kinds of physics interactions can be characterized just using image input plus language to describe the action. However, I think we have to be a little careful, because there are definitely things that cannot be simulated by UniSim right now.

The universal simulator is trained on data including objects, scenes, human activities, motion in navigation and manipulation, panorama scans, and simulations and renderings.

The universal simulator is trained on data including objects, scenes, human activities, motion in navigation and manipulation, panorama scans, and simulations and renderings.

What are the main achievements of the paper that go beyond what has come before?

We were really the first people to explore this universal interface of video and language. In our previous work, we introduced UniPi. UniPi was inspired by the fact that different robots have different morphologies as a result of different action spaces. Oftentimes the datasets collected by different robots or datasets between robots and humans can’t really be jointly used to train policies. The idea behind UniPi was to treat video as a policy. When we condition on some initial frame and some language description, we generate this video, and then we can extract low-level controls just by looking at what’s different between the videos. Usually, it’s some kind of action that happened between the frames, such as how the robot arm moved. In that sense we’re using the video space, or pixel space, as a unified space to characterize actions from different robots.

In this paper (where we present UniSim) we’re trying to learn a dynamics model, taking the current state and action, and trying to predict the next state. So, when these actions are language, we’re trying to predict the effect of executing this language. In some sense, it is more like a simulator.

We also have some follow-up work, where we use the simulator to visualize what happens. So, there are quite a few related works. But I would say UniSim is the most general in a sense; it’s also the largest in terms of the scale of the data and model.

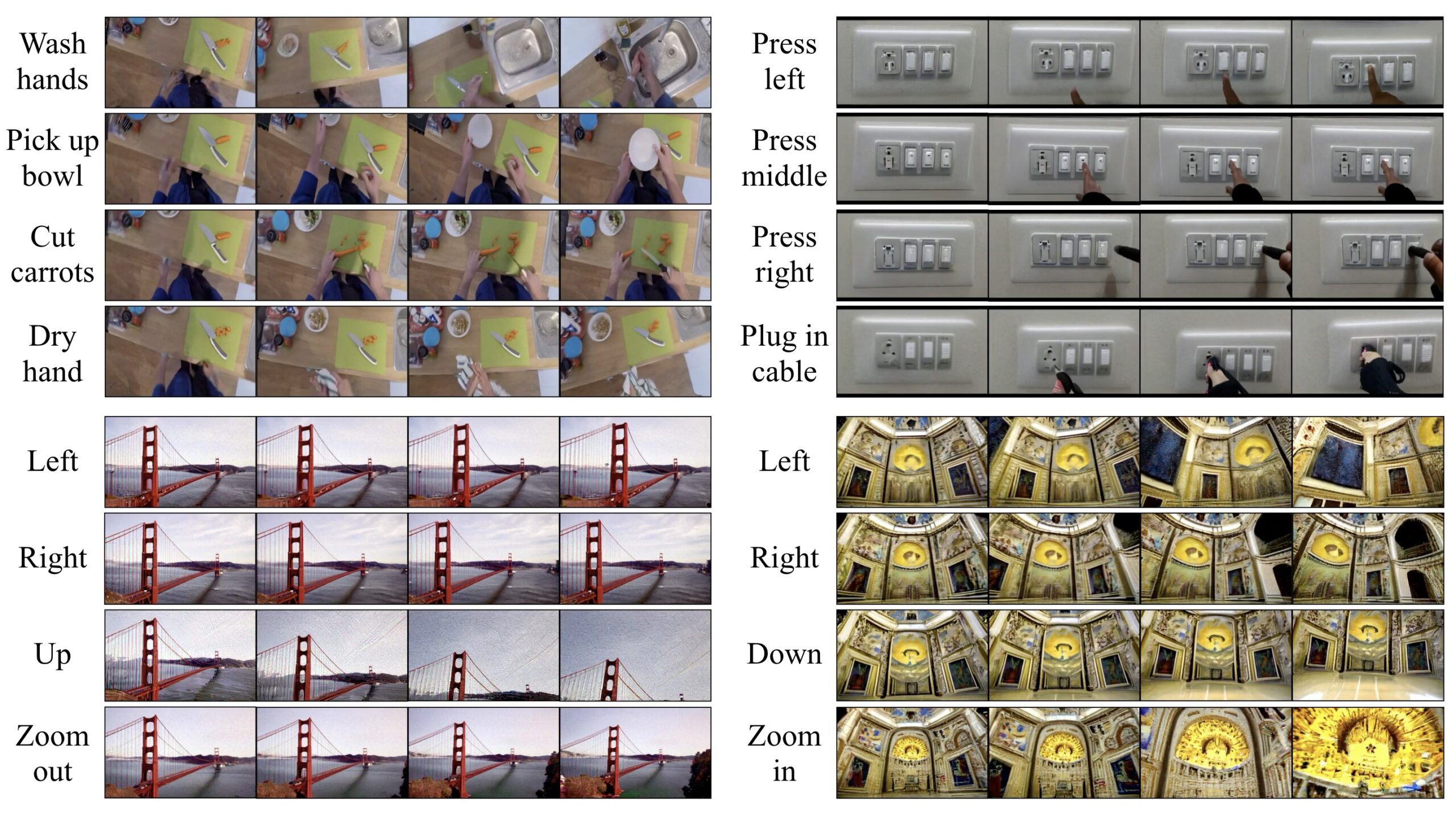

Example simulations. UniSim can support manipulation actions, such as “cut carrots”, “wash hands”, and “pickup bowl”, from the same initial frame (top left), and other navigation actions.

Example simulations. UniSim can support manipulation actions, such as “cut carrots”, “wash hands”, and “pickup bowl”, from the same initial frame (top left), and other navigation actions.

You’ve got a GitHub demo page which I think really helps to show people how the simulator works. Have you got any favourite demo examples that people should really go and check out?

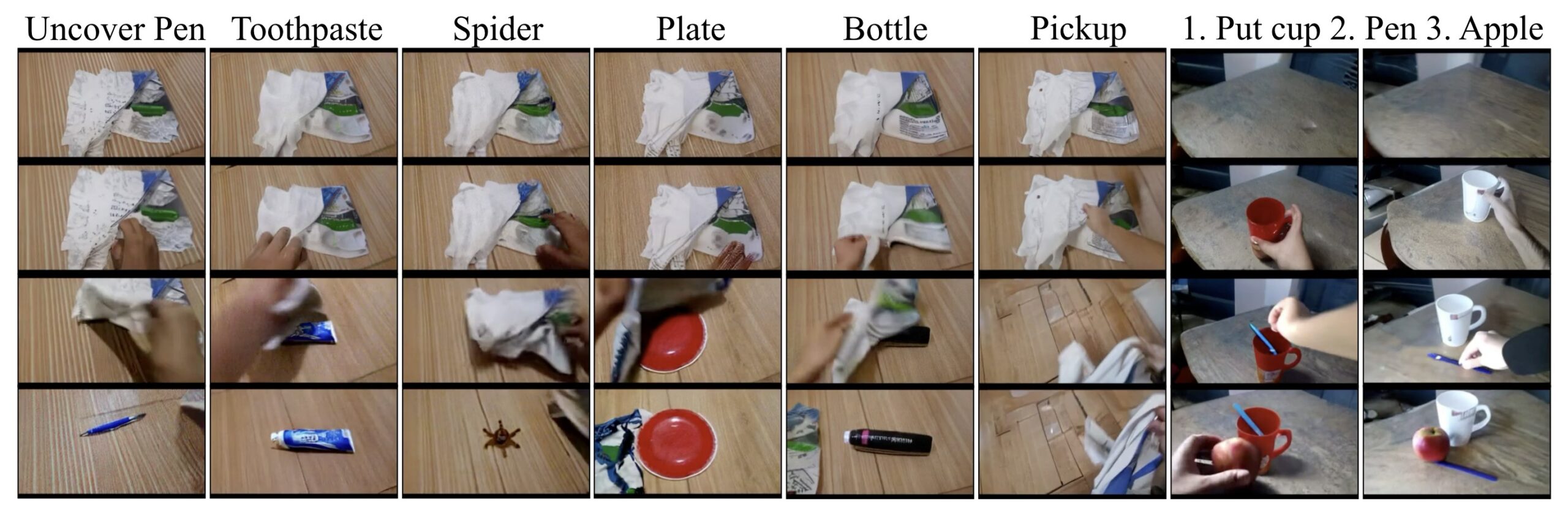

I think one thing that really impressed me was when there was a napkin on the table. When we uncover the napkin, we use natural language to specify what object is revealed. And I think it’s interesting because it’s like the model really understands some physics behind this activity. Because if someone says “uncover a car” they’re just going to get a very unrealistic video because a car cannot be covered by a napkin. So maybe they will get a toy car, like a kids’ toy.

The second step of the simulator is interesting, when someone starts to interact with the objects (like picking up toothpaste or pinching a pen). If you look at the distance between the two fingers, the model is realistically fitting the size of the object. And we’re not doing any explicit training for this. Everything is data-driven – it’s a maximum likelihood objective. As a result, we can actually get these very realistic interactions that are representative of the real world, which is very impressive.

Left: text is used to specify the object being revealed by suffixing “uncovering” with the object name. Right: it is only necessary to specify “put cup” or “put pen”, and cups and pens of different colors are sampled as a result of the stochastic sampling process during video generation.

Left: text is used to specify the object being revealed by suffixing “uncovering” with the object name. Right: it is only necessary to specify “put cup” or “put pen”, and cups and pens of different colors are sampled as a result of the stochastic sampling process during video generation.

You mention in the paper that the lack of data was one of the roadblocks to developing a simulator, but you’ve managed to overcome that somewhat in your work. How did you do that?

So, for these GitHub demos, the image input was some image from the pre-training data. Ideally, we want someone to upload the image of the room that they’re in and to be able to start interacting with it. And I think the model can do that to a certain extent, but not as well as people would imagine. And I think the reason is that there are just not enough text-video pairs available to train the model. If we think about how much data a language model is pre-trained on, it’s pretty much the entire text from the entire Internet. For video models, because we’re training a text condition video generation model, we have to have paired or labeled videos with text and this is a more manual process with a lot of these datasets curated by humans (for example, to label what the content of the video is). We’re currently working on having more capable visual language models, like GPT for Vision or Gemini 1.5, automatically produce captions so that we can use these to train text-to-video models. I think it’s still more challenging than pre-training with text only because of the lack of paired text-video data.

I think this work really shows what’s possible. A lot of the generated videos, if you look at them, still hallucinate, so there’s still a lot of work to be done, but it really opens up the possibilities.

Were there any other particular challenges you recall when trying to develop the simulator?

I think one thing that the model struggles a lot with is memory because video takes up a lot of memory. For large language models, people can have these really long context strings and pretty much capture everything in the context. However, for videos, if we want the simulator to really be simulating the real world, then we need it to have some kind of “memory”. For example, if I put a cup inside a drawer and close it, then, if I open the drawer three days later, the cup should still be there. But that means that we need three days of video data as input to the model so that it can remember that the cup is in the drawer. That is not really possible with the current infrastructure in terms of handling really long video input. So as a result, the current version of our work only conditions on a few frames as opposed to a more comprehensive history. As a result, there are some issues if some objects are blocked by other objects – when you move the front object away and it’s not super clear what the object being blocked was because the model doesn’t have that memory. So, that’s one of the challenges.

Another challenge is the inference speed – because we’re using a diffusion model it’s relatively slow to generate these videos. It could take up to a few minutes to generate just a few seconds of interactions, for example. However, I’m not too worried about the sampling speed because people are always working to make things faster in diffusion models whilst preserving the quality of the generated samples.

I guess there are also a lot of challenges associated with evaluation and knowing that the videos that we generated are realistic. Right now, it’s pretty much assessed by human eye. I think it’s good in the sense that at least video is a representation that is more interpretable, similar to text. However, in some other sense, because we have to rely on humans to say whether the videos are realistic, then it’s not super scalable. I think an interesting direction would be to have ways to automatically evaluate whether the generated video is realistic. One approach, for example, is to use embodied AI to turn these generated videos into real world actions and see if those executed actions actually result in the same video as the generated video. Essentially, we have two worlds – the physical world and the simulated world – and we’re trying to align these two worlds.

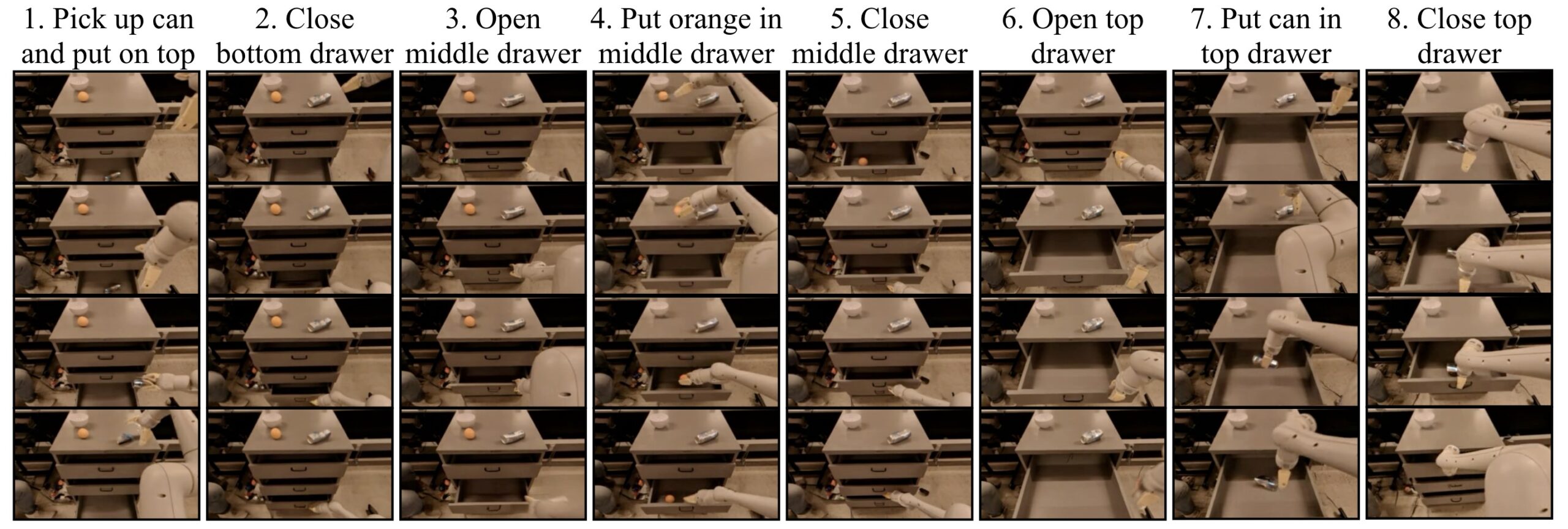

An example of a long-horizon simulation. The simulated interactions maintain temporal consistency across long-horizon interactions, correctly preserving objects and locations.

An example of a long-horizon simulation. The simulated interactions maintain temporal consistency across long-horizon interactions, correctly preserving objects and locations.

What is it you’re most excited about for the future of this project and what the simulator could potentially do?

One potential application would be in generating customized “how to” videos. When it comes to learning how to do a particular task, text is not the only modality that people consume – we watch a lot of videos. For example, learning how to put furniture together we can do by watching a video demonstration. One limitation in just watching existing videos on the Internet is that maybe the furniture in the video is a slightly different version. I think there would be a lot of value in being able to use our simulator to create customized “how to” videos, similarly to how people use ChatGPT to answer their text-based questions. I think the current UniSim model is not good enough to do that because it’s not great at generating really long horizon videos while having a sense of consistency, because of the memory issue I was talking about.

For this application, even if the test task is not successful, the video generation model can learn from the mistakes and try to do the task again. I think in some sense, video is really a media that connects what a person wants to do at a high level, which is usually represented by text, to how things are done (for example how much to move left, how much to go right). And I think this work bridges this gap between really high-level user language commands to very low-level executions and automating this pipeline where we can have robots continue to collect data according to plans generated by the model and further improve.

I’m also really excited about applications in science and engineering. I think there are a lot of domains where recovering the exact dynamics model is difficult, like fluid dynamics. It’s really difficult to understand the mathematical equations to model these very difficult interactions. For a lot of these applications, maybe the goal is not to recover the exact mathematical formula for how the physics works, but rather to have some knowledge about the dynamics and the effect of control inputs or actions.

In our follow up work, we used video as the new language for real-world decision making. We showed we can even simulate atom movements under electron microscopes using just next frame prediction conditioned on control inputs. So, I think the implications for science and engineering are quite broad.

About Sherry Yang

|

Sherry is a final year PhD student at UC Berkeley advised by Pieter Abbeel and a senior research scientist at Google DeepMind. Her research aims to develop machine learning models with internet-scale knowledge to make better-than-human decisions. To this end, she has developed techniques for generative modeling and representation learning from large-scale vision, language, and structured data, as well as decision making algorithms in imitation learning, planning, and reinforcement learning. Sherry initiated and led the Foundation Models for Decision Making workshop at NeurIPS 2022 and 2023, bringing together research communities in vision, language, planning, and reinforcement learning to solve complex decision making tasks at scale. Before her current role, Sherry received her Bachelor’s degree and Master’s degree from MIT advised by Patrick Winston and Julian Shun. |

Find out more

- The paper: Learning Interactive Real-World Simulators, Sherry Yang, Yilun Du, Kamyar Ghasemipour, Jonathan Tompson, Leslie Kaelbling, Dale Schuurmans and Pieter Abbeel.

- The GitHub page: UniSim: Learning Interactive Real-World Simulators

tags: ICLR2024