AIhub.org

Bridging the gap between user expectations and AI capabilities: Introducing the AI-DEC design tool

The challenge of AI integration in the workplace

AI systems are increasingly integrated into everyday workplaces, but that integration has not always been as smooth or successful as anticipated. A significant reason is the gap between what users (workers) expect from AI systems and what those systems can actually do. This gap often leads to user dissatisfaction and poor adoption, which highlights the need for design approaches that align user needs with AI functionality.

Understanding dual perspectives

To address these design challenges, it is crucial to understand two perspectives: the user’s and the AI system’s. From the user’s side, designers need to understand users’ information and interaction needs, routines, and skills. From the AI system’s side, designers need to understand its data inputs, capabilities, and limitations. By merging these perspectives, we can create AI designs that are more user-centered and capable of delivering meaningful value.

The AI-DEC: A card-based design tool for user-centered AI explanations

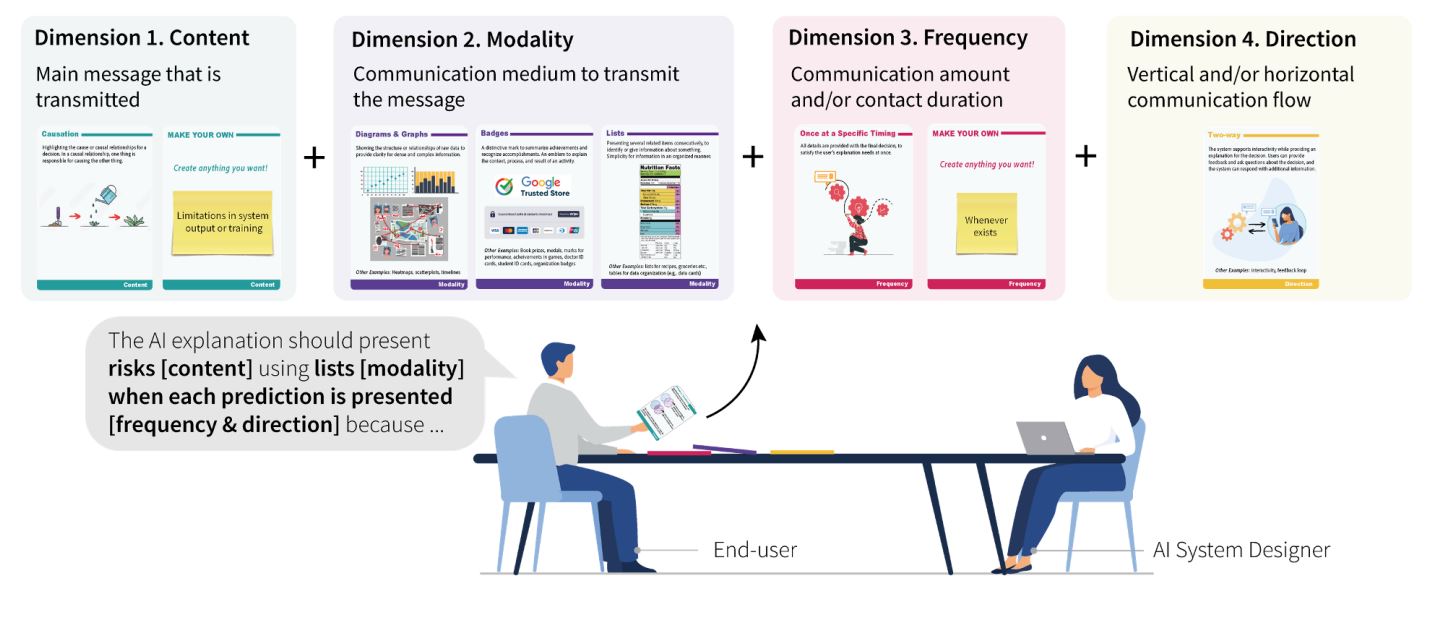

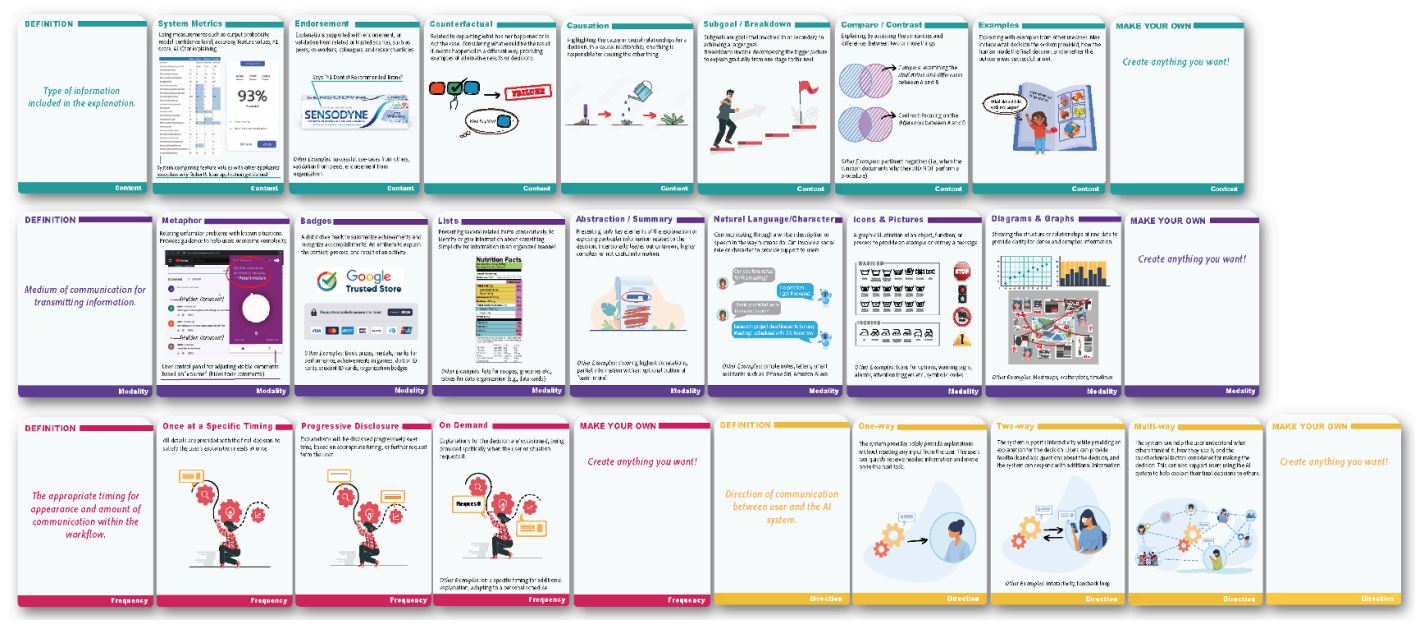

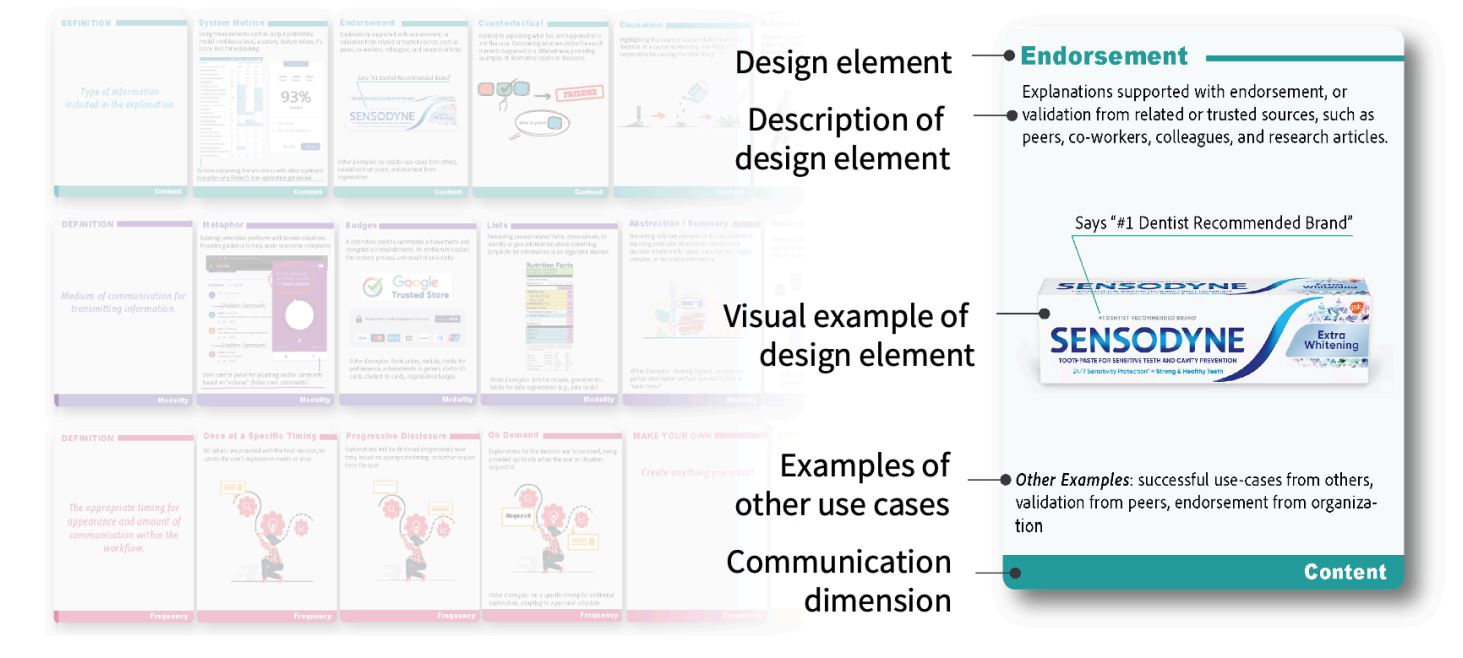

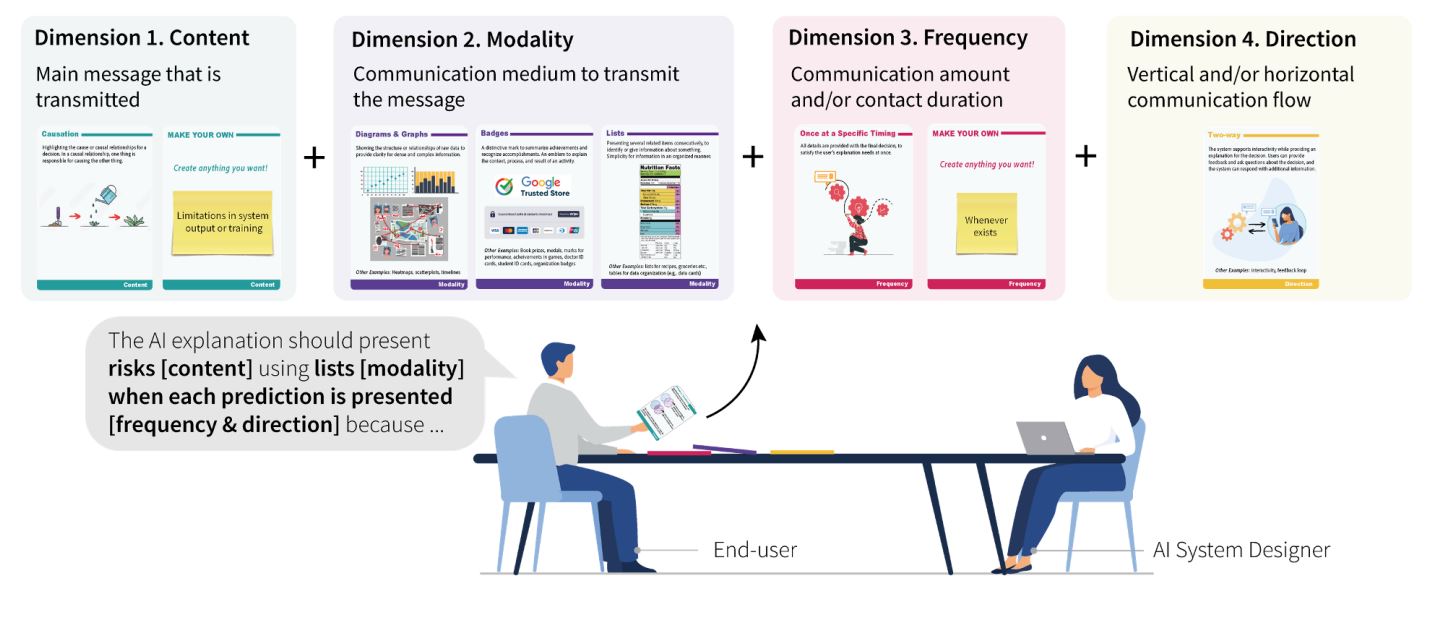

Our team developed the AI-DEC, a participatory design tool to bridge this gap. The AI-DEC is a card-based design method that enables users and AI systems to communicate their perspectives and collaboratively build AI explanations. Each card in the AI-DEC represents a design element with descriptions and example use cases, organized into four critical dimensions for explanation design: content, modality, frequency, and direction.

The four dimensions of the AI-DEC

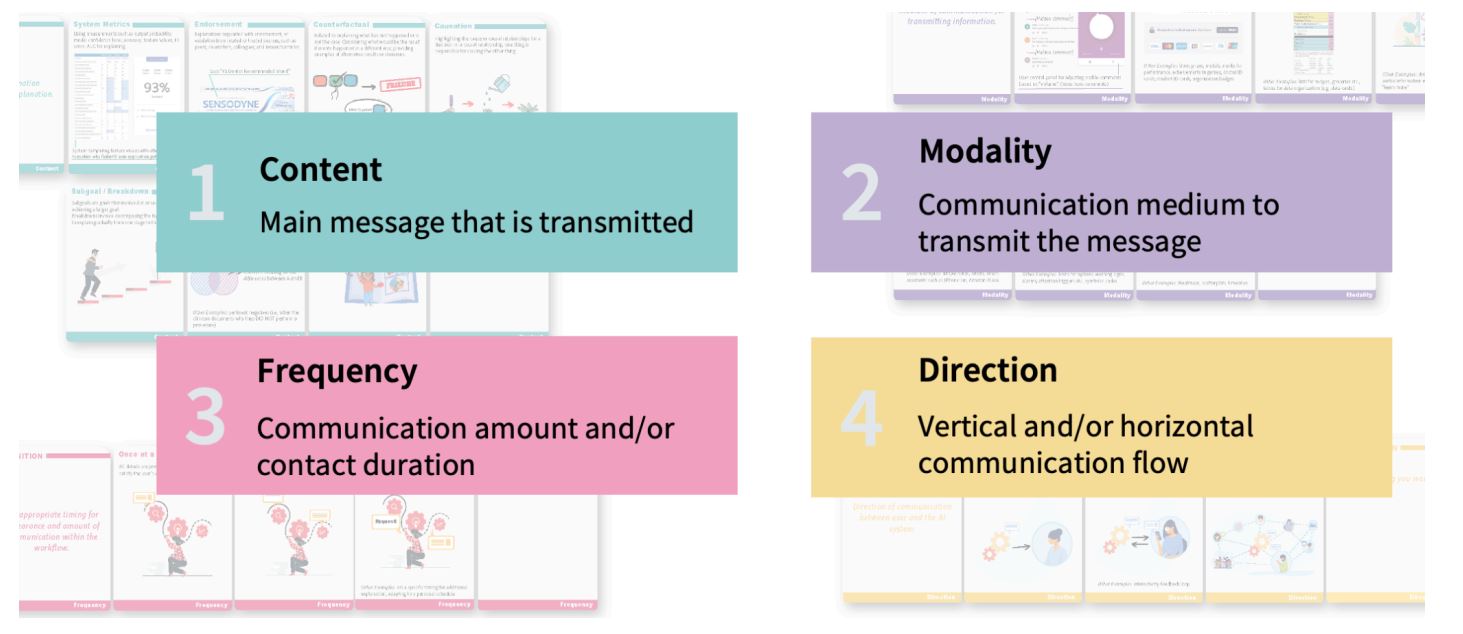

- Content: The main message being transmitted.

- Modality: The communication medium used to transmit the message.

- Frequency: The amount and duration of communication.

- Direction: The vertical and horizontal flow of communication within an organization.

These dimensions are derived from a communication strategy model by Mohr and Nevin (1990), which shows that effective communication strategies can improve group outcomes such as coordination, satisfaction, commitment, and performance.

Practical application and effectiveness

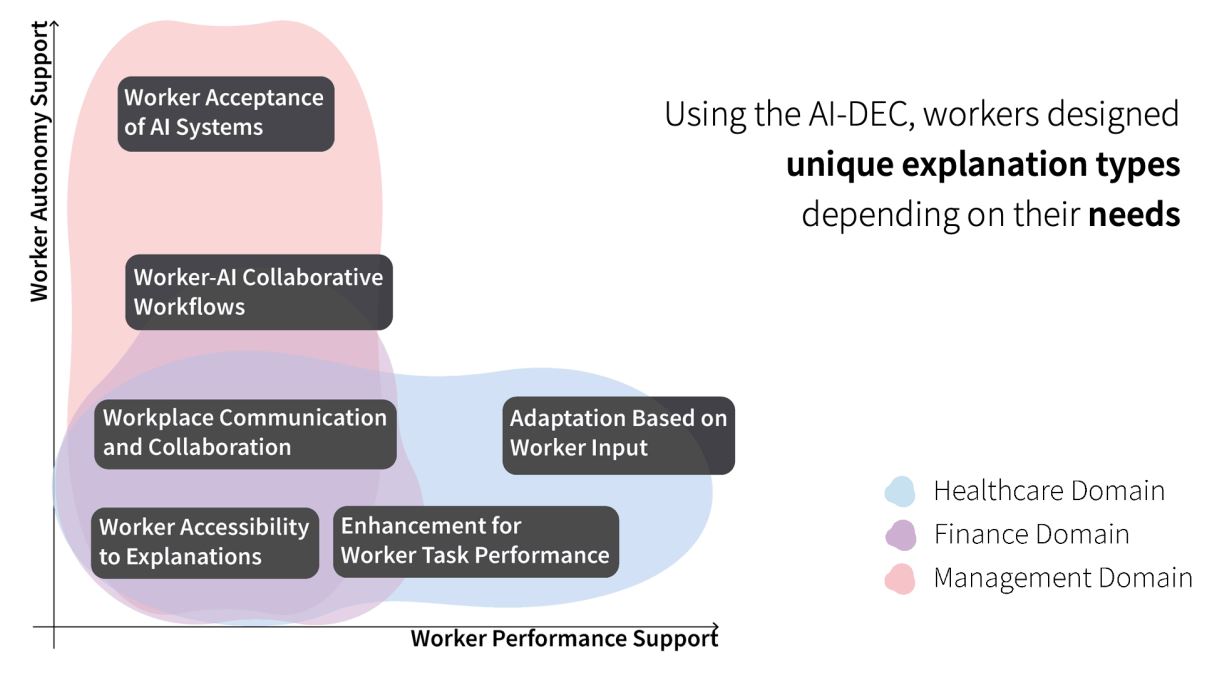

To evaluate the AI-DEC’s effectiveness, we conducted co-design sessions with 16 workers from the healthcare, finance, and management domains. These workers used AI systems for decision-making tasks such as supporting diagnoses, making loan decisions, and creating worker schedules. During the sessions, workers designed AI explanations tailored to their own needs, resulting in diverse explanation designs across domains.

Key themes and findings

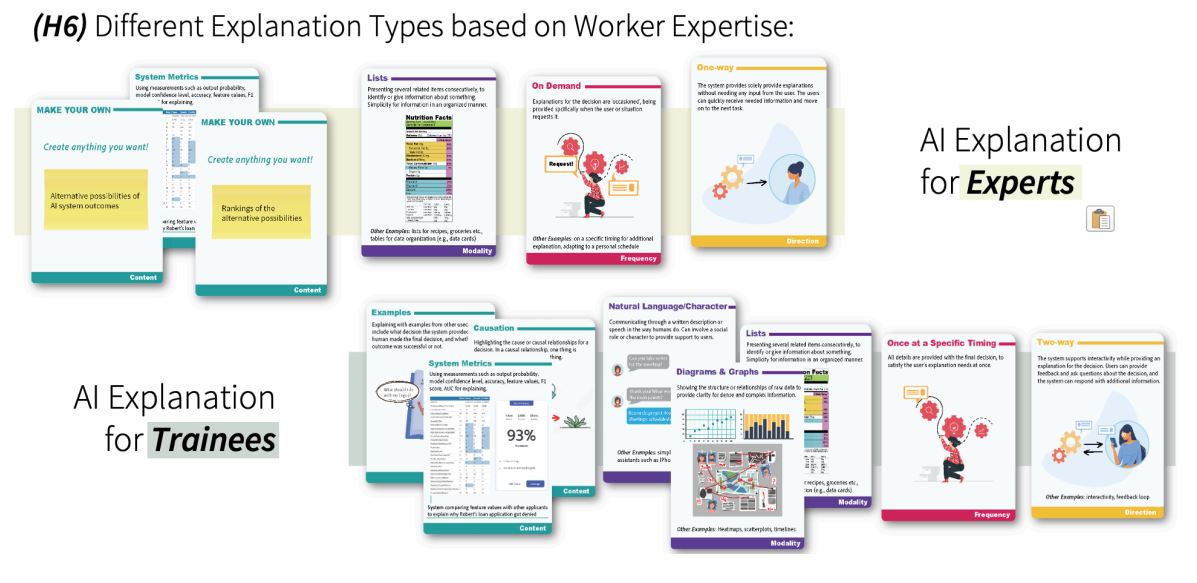

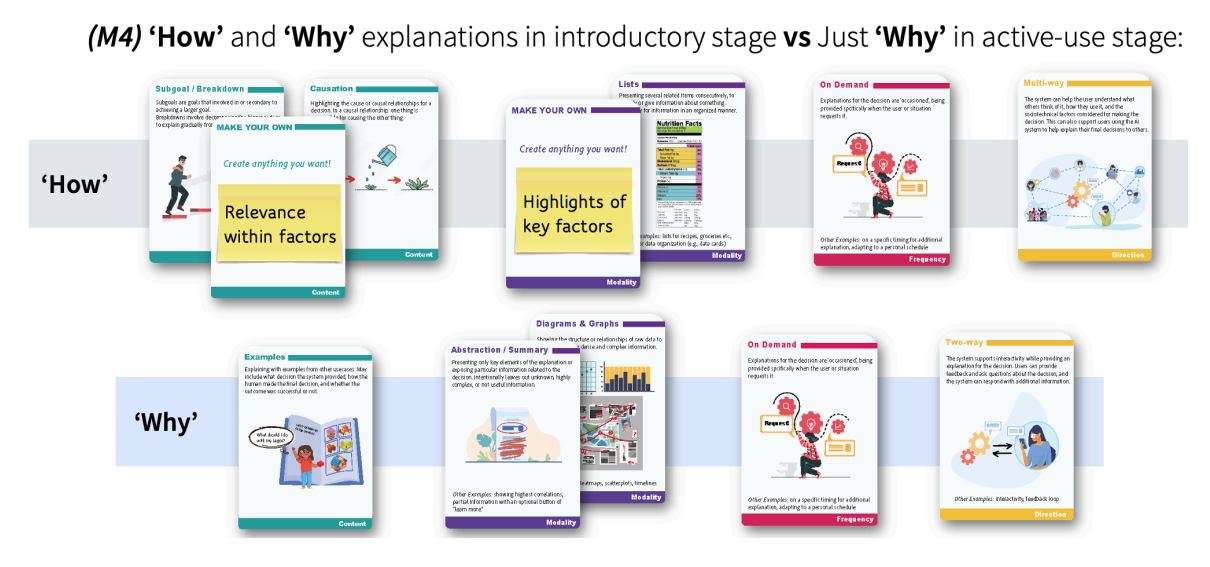

During the co-design sessions, workers used the AI-DEC to design different types of explanations depending on their information and interaction needs. Two examples:

1. Adaptation Based on Worker Input: Healthcare workers designed AI explanations that adapt to worker feedback, tailoring the explanation to each worker’s level of expertise.

2. Worker Acceptance of AI Systems: In the management domain, workers designed explanations to address resistance towards AI systems by providing detailed explanations during the introductory stage and more concise explanations in the active-use stage.

Implications for AI design

We believe the AI-DEC is most effective when designers need to understand user needs and AI capabilities, prototype human-AI interactions, and collaborate with users and AI engineers. It facilitates communication between AI designers and users, resulting in prototypes that are more user-centered and context-specific.

Moreover, we envision the AI-DEC as a tool for UX designers to enhance their understanding of technical AI capabilities, enabling them to design more effective user experiences.

Future applications and takeaways

The AI-DEC can be tailored to the needs of designers, users, or organizations in different application contexts, and it can be used to design continuous interaction experiences, not just one-off explanations. We envision the AI-DEC enabling effective user involvement in the AI design process, something many current AI design processes struggle to achieve.

By bridging the gap between user expectations and AI capabilities, the AI-DEC holds promise for successful AI integration across application settings. Its participatory approach helps align user and AI perspectives, leading to more effective and user-centered AI systems.

Read the work in full

The AI-DEC: A Card-based Design Method for User-centered AI Explanations, Christine P Lee, Min Kyung Lee, Bilge Mutlu.