AIhub.org

Geometric deep learning for protein sequence design

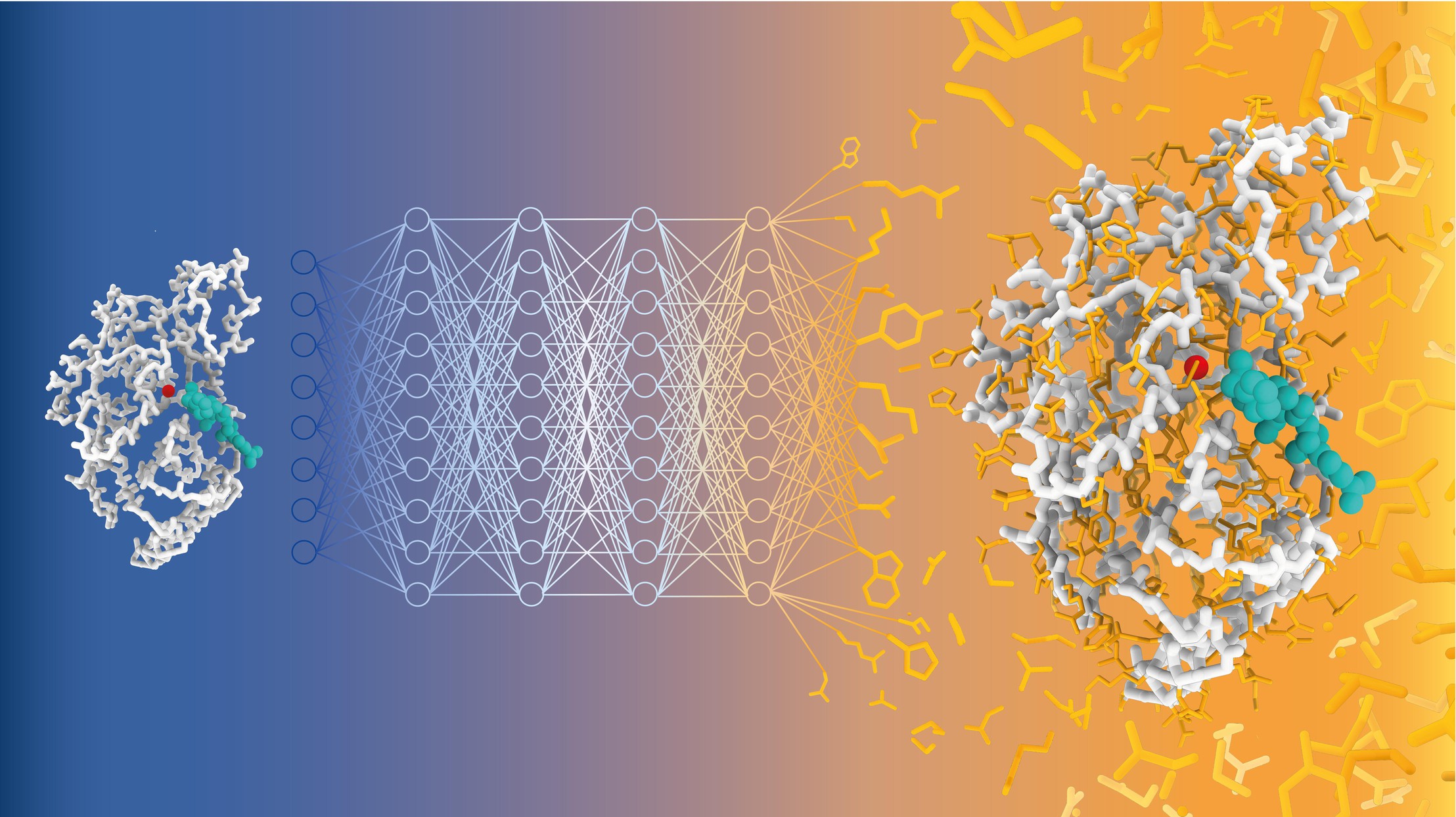

Schematic representation of sequence prediction with CARBonAra. The geometric transformer samples the sequence space of the beta-lactamase TEM-1 enzyme (in grey) complexed with a natural substrate (in cyan) to produce new well-folded and active enzymes. Credit: Alexandra Banbanaste (EPFL).

By Nik Papageorgiou

Designing proteins that can perform specific functions involves understanding and manipulating their sequences and structures. This task is crucial for developing targeted treatments for diseases and creating enzymes for industrial applications.

One of the grand challenges in protein engineering is designing proteins de novo, meaning from scratch, to tailor their properties for specific tasks. This has profound implications for biology, medicine, and materials science. For instance, engineered proteins can target diseases with high precision, offering a competitive alternative to traditional small molecule-based drugs.

Additionally, custom-designed enzymes, which act as natural catalysts, can facilitate reactions that are rare or non-existent in nature. This capability is particularly valuable in the pharmaceutical industry for synthesizing complex drug molecules and in environmental technology for breaking down pollutants or plastics more efficiently.

A team of scientists led by Matteo Dal Peraro at EPFL has now developed CARBonAra (Context-aware Amino acid Recovery from Backbone Atoms and heteroatoms), an AI-driven model that predicts protein sequences while taking into account the constraints imposed by different molecular environments. CARBonAra was trained on a dataset of approximately 370,000 subunits from the Protein Data Bank (PDB), with an additional 100,000 for validation and 70,000 for testing.

CARBonAra builds on the architecture of the Protein Structure Transformer (PeSTo) framework – also developed by Lucien Krapp in Dal Peraro’s group. It uses geometric transformers, which are deep learning models that process spatial relationships between points, such as atomic coordinates, to learn and predict complex structures.
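To make "spatial relationships between points" concrete, here is a minimal sketch of the kind of geometric quantities that can be derived from atomic coordinates alone: pairwise distances and nearest neighbours. It is an illustration only, not the PeSTo or CARBonAra implementation; the function names and the toy coordinates are assumptions made for this example.

```python
import math


def pairwise_distances(coords):
    """Return the symmetric matrix of Euclidean distances between 3D points."""
    n = len(coords)
    return [[math.dist(coords[i], coords[j]) for j in range(n)] for i in range(n)]


def nearest_neighbors(coords, k):
    """For each point, return the indices of its k closest other points."""
    dists = pairwise_distances(coords)
    neighbors = []
    for i, row in enumerate(dists):
        # Rank every other point by its distance to point i.
        others = [j for j in range(len(coords)) if j != i]
        others.sort(key=lambda j: row[j])
        neighbors.append(others[:k])
    return neighbors


# Toy coordinates (in angstroms) for four hypothetical atoms.
atoms = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 4.0, 0.0), (1.5, 4.0, 3.0)]

distance_matrix = pairwise_distances(atoms)
closest = nearest_neighbors(atoms, k=1)
```

Because only coordinates go in, the same computation applies whether the atoms belong to a protein, a nucleic acid, a lipid or an ion.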

CARBonAra can predict amino acid sequences from backbone scaffolds, the structural frameworks of protein molecules. One of its standout features is its context awareness, which shows most clearly in how it improves sequence recovery rates: the percentage of positions in a protein sequence at which the predicted amino acid matches a known reference sequence.
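The recovery rate described above can be sketched in a few lines. This is a minimal illustration that assumes the predicted and reference sequences are already aligned and of equal length; the function name and the two short sequences are made up for the example and do not come from the paper.

```python
def sequence_recovery(predicted, reference):
    """Percentage of positions where the predicted residue matches the reference."""
    if len(predicted) != len(reference):
        raise ValueError("sequences must have the same length")
    if not reference:
        raise ValueError("sequences must not be empty")
    # Count position-by-position matches between the two sequences.
    matches = sum(p == r for p, r in zip(predicted, reference))
    return 100.0 * matches / len(reference)


# Hypothetical 10-residue sequences that differ at two positions.
reference = "MKTAYIAKQR"
predicted = "MKSAYLAKQR"

recovery = sequence_recovery(predicted, reference)  # 8 of 10 positions match
```

A design that differs from the wild type at about half of its positions would score roughly 50% against the wild-type sequence on this measure.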

CARBonAra significantly improves recovery rates when it includes molecular “contexts”, such as protein interfaces with other proteins, nucleic acids, lipids or ions. “This is because the model is trained with all sorts of molecules and relies only on atomic coordinates, so that it can handle not only proteins,” explains Dal Peraro. This feature in turn enhances the model’s predictive power and applicability in real-life, complex biological systems.

The model not only performs well in synthetic benchmarks but was also experimentally validated. The researchers used CARBonAra to design new variants of the TEM-1 β-lactamase enzyme, which is involved in the development of antimicrobial resistance. Some of the predicted sequences, differing by approximately 50% from the wild-type sequence, folded correctly and preserved some catalytic activity at high temperatures, at which the wild-type enzyme is already inactive.

The flexibility and accuracy of CARBonAra could open new avenues for protein engineering. Its ability to take into account complex molecular environments has the potential to make it a useful tool for designing proteins with specific functions, enhancing future drug discovery campaigns. In addition, CARBonAra’s success in enzyme engineering demonstrates its potential for industrial applications and scientific research.

Read the work in full

Context-aware geometric deep learning for protein sequence design, Lucien F. Krapp, Fernando A. Meireles, Luciano A. Abriata, Jean Devillard, Sarah Vacle, Maria J. Marcaida & Matteo Dal Peraro, Nature Communications (2024).