AIhub.org

Using deep learning to help distinguish dark matter from cosmic noise

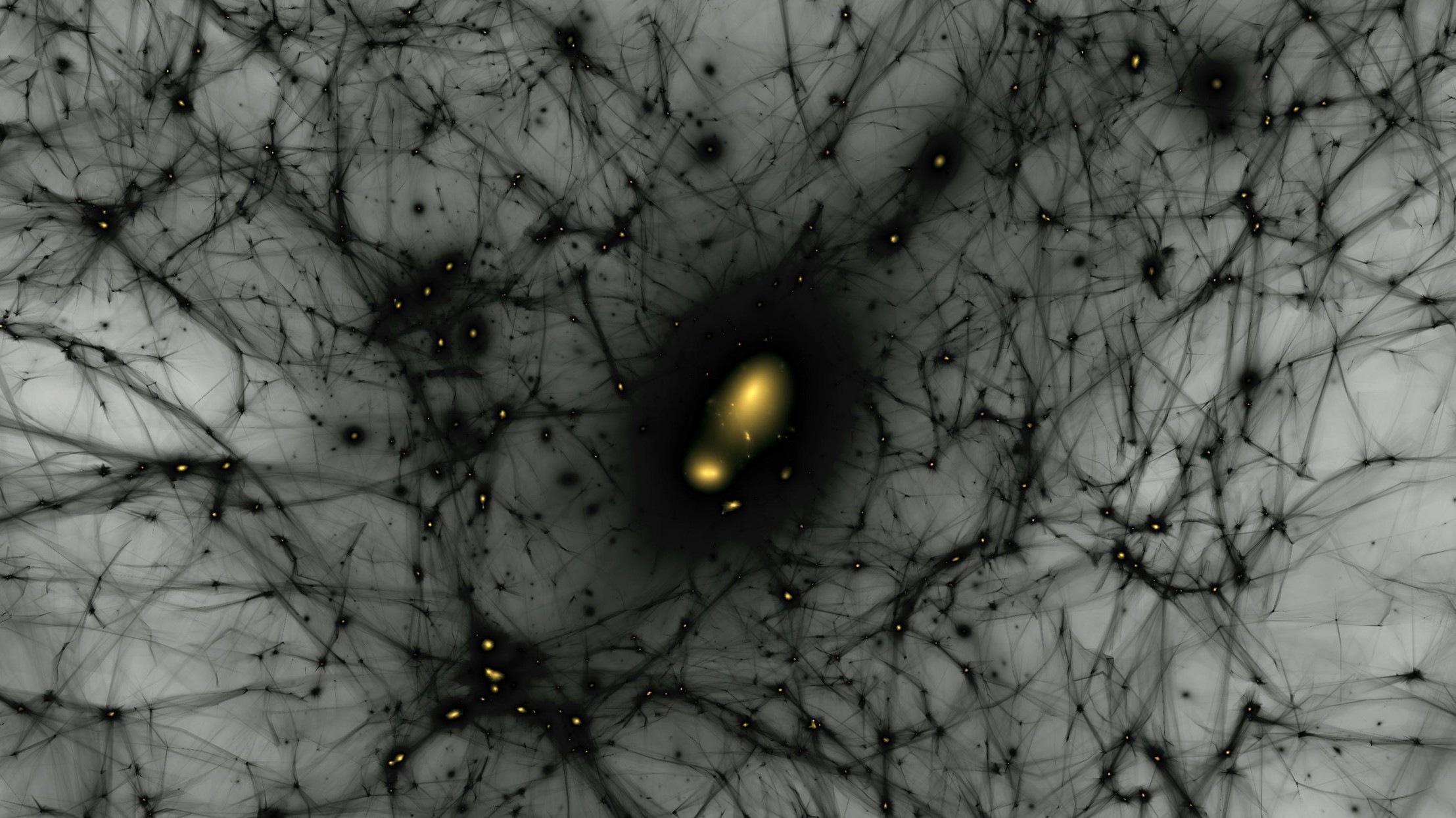

Still image from simulation of the formation of dark matter structures from the early universe to today. Gravity makes dark matter clump into dense halos, indicated by bright patches, where galaxies form. In this simulation, a halo like the one that hosts the Milky Way forms and a smaller halo resembling the Large Magellanic Cloud falls toward it. SLAC and Stanford researchers, working with collaborators from the Dark Energy Survey, have used simulations like these to better understand the connection between dark matter and galaxy formation. Credit: Ralf Kaehler/Ethan Nadler/SLAC National Accelerator Laboratory.

By Nik Papageorgiou

Dark matter is the invisible substance holding the universe together – or so we think. It makes up around 85% of all matter and around 27% of the universe’s total mass-energy content, but since we can’t see it directly, we have to study it through its gravitational effects on galaxies and other cosmic structures. Despite decades of research, the true nature of dark matter remains one of science’s most elusive questions.

According to a leading theory, dark matter might be a type of particle that barely interacts with anything else, except through gravity. But some scientists believe these particles could occasionally interact with each other, a phenomenon known as self-interaction. Detecting such interactions would offer crucial clues about dark matter’s properties.

However, distinguishing the subtle signs of dark matter self-interactions from other cosmic effects, such as feedback from active galactic nuclei (AGN) – the actively feeding supermassive black holes at the centers of galaxies – has been a major challenge. AGN feedback, the energy these black holes release into their surroundings, can push matter around in ways similar to the effects of self-interacting dark matter, making it difficult to tell the two apart.

Astronomer David Harvey at EPFL’s Laboratory of Astrophysics has developed a deep-learning algorithm that can help untangle these complex signals. His machine-learning method is designed to differentiate between the effects of dark matter self-interactions and those of AGN feedback by analyzing images of galaxy clusters – vast collections of galaxies bound together by gravity. The work promises to enhance the precision of dark matter studies.

Harvey trained a convolutional neural network (CNN) with images from the BAHAMAS-SIDM project, which models galaxy clusters under different dark matter and AGN feedback scenarios. After being fed thousands of simulated galaxy cluster images, the CNN learned to distinguish between the signals caused by dark matter self-interactions and those caused by AGN feedback.
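
To make the training idea concrete, here is a minimal sketch of a convolutional classifier trained by gradient descent in plain NumPy. It is not Harvey's network or data: the images are hypothetical toy maps (a narrow versus a broad Gaussian blob with pixel noise) standing in for two classes of simulated clusters, and the layer sizes, learning rate and helper names (`make_image`, `forward`, `train`) are illustrative assumptions. The network has one convolutional layer with ReLU, global average pooling and a single logistic output, trained on binary cross-entropy with hand-written backpropagation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Toy sketch only: hypothetical images and hyperparameters, not the paper's setup.
rng = np.random.default_rng(0)
SIZE, K, KS = 16, 8, 3  # image side, number of filters, kernel side


def make_image(label):
    """Toy 'cluster map': narrow Gaussian blob (class 0) or broad one (class 1)."""
    sigma = 1.5 if label == 0 else 4.0
    yy, xx = np.mgrid[0:SIZE, 0:SIZE]
    cy, cx = SIZE / 2 - 0.5 + rng.uniform(-1.5, 1.5, size=2)
    img = np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2))
    return img + rng.normal(0.0, 0.05, size=img.shape)  # add pixel noise


def make_dataset(n):
    labels = np.arange(n) % 2  # balanced classes
    images = np.stack([make_image(label) for label in labels])
    return images, labels.astype(float)


def forward(X, params):
    W, b, v, c = params
    # Every 3x3 patch of every image, flattened: (N, SIZE-2, SIZE-2, 9)
    patches = sliding_window_view(X, (KS, KS), axis=(1, 2))
    patches = patches.reshape(*patches.shape[:3], KS * KS)
    z = patches @ W.T + b              # convolution (CNN-style cross-correlation)
    a = np.maximum(z, 0.0)             # ReLU
    pooled = a.mean(axis=(1, 2))       # global average pooling -> (N, K)
    logits = pooled @ v + c            # dense layer -> one logit per image
    p = 1.0 / (1.0 + np.exp(-logits))  # sigmoid: P(class 1)
    return p, (patches, z, pooled)


def train(X, y, steps=400, lr=0.5):
    W = rng.normal(0.0, 0.3, size=(K, KS * KS))
    b = np.full(K, 0.1)
    v = rng.normal(0.0, 0.1, size=K)
    c = 0.0
    losses = []
    for _ in range(steps):
        p, (patches, z, pooled) = forward(X, (W, b, v, c))
        eps = 1e-9
        losses.append(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))
        # Backpropagate the mean binary cross-entropy through each layer
        dlogits = (p - y) / len(y)
        dv, dc = pooled.T @ dlogits, dlogits.sum()
        dpooled = np.outer(dlogits, v)
        n_pos = z.shape[1] * z.shape[2]  # positions averaged by the pooling
        dz = (dpooled[:, None, None, :] / n_pos) * (z > 0)
        dW = np.einsum("nijk,nijp->kp", dz, patches)
        db = dz.sum(axis=(0, 1, 2))
        W, b, v, c = W - lr * dW, b - lr * db, v - lr * dv, c - lr * dc
    return (W, b, v, c), losses


X_train, y_train = make_dataset(200)
X_test, y_test = make_dataset(100)
params, losses = train(X_train, y_train)
p_test, _ = forward(X_test, params)
accuracy = np.mean((p_test > 0.5) == (y_test == 1))
print(f"training loss {losses[0]:.3f} -> {losses[-1]:.3f}; test accuracy {accuracy:.2f}")
```

Global average pooling keeps the sketch short by collapsing each filter's response map to one number. A real pipeline would use a deep-learning framework with automatic differentiation rather than hand-derived gradients, and real cluster maps are far less cleanly separated than these toy blobs.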

Among the various CNN architectures tested, the most complex, an established design known as Inception, also proved to be the most accurate. The model was trained on two primary dark matter scenarios with different levels of self-interaction, and validated on additional models, including a more complex, velocity-dependent dark matter model.
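
The article does not spell out the network's layers. For readers unfamiliar with the name, a block in the style of the original Inception (GoogLeNet) design runs several convolutions of different sizes in parallel on the same input, uses 1x1 convolutions to cut the channel count before the larger kernels, and stacks all branch outputs along the channel axis, so the network can pick up structure on several spatial scales at once. The NumPy sketch below shows the forward pass of one such block with random, untrained weights; the channel counts and function names (`conv2d_same`, `max_pool_same`, `make_params`, `inception_block`) are hypothetical, and this is not the configuration used in the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(1)


def conv2d_same(x, w):
    """Stride-1, 'same'-padded convolution (CNN-style cross-correlation).

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    pad = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, w)


def max_pool_same(x, k=3):
    """Stride-1 k x k max pooling, padded with -inf so H and W are unchanged."""
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), constant_values=-np.inf)
    return sliding_window_view(xp, (k, k), axis=(1, 2)).max(axis=(-2, -1))


def relu(x):
    return np.maximum(x, 0.0)


def make_params(c_in, c1=8, c3_reduce=4, c3=8, c5_reduce=2, c5=4, c_pool=4):
    """Random, untrained weights for one block; channel counts are illustrative."""
    def init(c_out, c_inp, k):
        return rng.normal(0.0, 0.1, size=(c_out, c_inp, k, k))
    return {
        "1x1": init(c1, c_in, 1),
        "3x3_reduce": init(c3_reduce, c_in, 1),
        "3x3": init(c3, c3_reduce, 3),
        "5x5_reduce": init(c5_reduce, c_in, 1),
        "5x5": init(c5, c5_reduce, 5),
        "pool_proj": init(c_pool, c_in, 1),
    }


def inception_block(x, params):
    """Four parallel branches on the same input, concatenated along channels."""
    b1 = relu(conv2d_same(x, params["1x1"]))
    b3 = relu(conv2d_same(relu(conv2d_same(x, params["3x3_reduce"])), params["3x3"]))
    b5 = relu(conv2d_same(relu(conv2d_same(x, params["5x5_reduce"])), params["5x5"]))
    bp = relu(conv2d_same(max_pool_same(x), params["pool_proj"]))
    return np.concatenate([b1, b3, b5, bp], axis=0)


cluster_map = rng.normal(size=(1, 32, 32))  # hypothetical single-channel input map
out = inception_block(cluster_map, make_params(c_in=1))
print(out.shape)  # (24, 32, 32): 8 + 8 + 4 + 4 channels
```

Because every branch preserves the spatial size, the outputs can be concatenated directly; a full network stacks many such blocks, interleaved with downsampling, before a final classification layer.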

Inception achieved an accuracy of 80% under ideal conditions, correctly identifying in most cases whether galaxy clusters were influenced by self-interacting dark matter or AGN feedback. It maintained this high performance even when Harvey added realistic observational noise that mimics the kind of data expected from telescopes such as Euclid.
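
Accuracy here is the fraction of clusters assigned to the correct scenario, so 80% means four out of five. A robustness test of the kind described can be sketched as: add noise to each test map, rerun the classifier, and recompute the accuracy at several noise levels. In the sketch below the classifier is a deliberately simple, hypothetical stand-in (a central-flux concentration threshold, not a CNN), the maps are toy Gaussian blobs, and the noise is plain Gaussian pixel noise rather than a realistic Euclid-like model; only the evaluation loop is the point.

```python
import numpy as np

# Toy sketch: hypothetical maps, stand-in classifier and simple Gaussian noise.
rng = np.random.default_rng(2)
SIZE = 16


def make_image(label):
    """Noise-free toy map: narrow Gaussian blob (class 0) or broad one (class 1)."""
    sigma = 1.5 if label == 0 else 4.0
    yy, xx = np.mgrid[0:SIZE, 0:SIZE]
    cy, cx = SIZE / 2 - 0.5 + rng.uniform(-1.5, 1.5, size=2)
    return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma**2))


def classify(img, threshold=0.25):
    """Hypothetical stand-in classifier (not a CNN): predicts class 1 when
    the central 4x4 pixels hold less than `threshold` of the total flux."""
    centre = img[6:10, 6:10].sum()
    return int(centre / img.sum() < threshold)


def accuracy_at_noise(noise_sigma, n=200):
    """Fraction of toy maps classified correctly after adding Gaussian pixel noise."""
    labels = np.arange(n) % 2
    correct = 0
    for label in labels:
        noisy = make_image(label) + rng.normal(0.0, noise_sigma, size=(SIZE, SIZE))
        correct += classify(noisy) == label
    return correct / n


noise_levels = [0.0, 0.02, 0.1, 0.5, 2.0]
accuracies = [accuracy_at_noise(s) for s in noise_levels]
for s, acc in zip(noise_levels, accuracies):
    print(f"noise sigma {s:>4}: accuracy {acc:.2f}")
```

Sweeping the noise level rather than testing a single value shows how gracefully a classifier degrades, which is the property that matters when moving from simulations to real survey data.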

In other words, Inception, and this approach more generally, could prove very useful for analyzing the massive amounts of data we collect from space, making it a promising tool for future dark matter research.

Read the research in full

A deep-learning algorithm to disentangle self-interacting dark matter and AGN feedback models, David Harvey, 2024.