AIhub.org

Interview with Kunpeng Xu: Kernel representation learning for time series

In this interview series, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. The Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. In the first of our interviews with the 2025 cohort, we meet Kunpeng (Chris) Xu and find out more about his research and future plans.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

Hi! I’m Kunpeng (Chris). I am a final-year Ph.D. student at the ProspectUs-Lab, Université de Sherbrooke, Canada, where I have been working with Professor Shengrui Wang and Professor Lifei Chen since 2021.

My research spans time series analysis, kernel learning, and self-representation learning, with a focus on forecasting, pattern mining, concept drift adaptation, and interpretability in sequential data. I explore data-driven kernel representation learning to develop more adaptive and expressive models for complex time series, while also investigating subspace learning and its applications in AI. I am also interested in AI4Science, particularly in understanding regime shifts in atmospheric and oceanic systems within environmental ecology, as well as phase transitions in physics.

Could you give us an overview of the research you’ve carried out during your PhD?

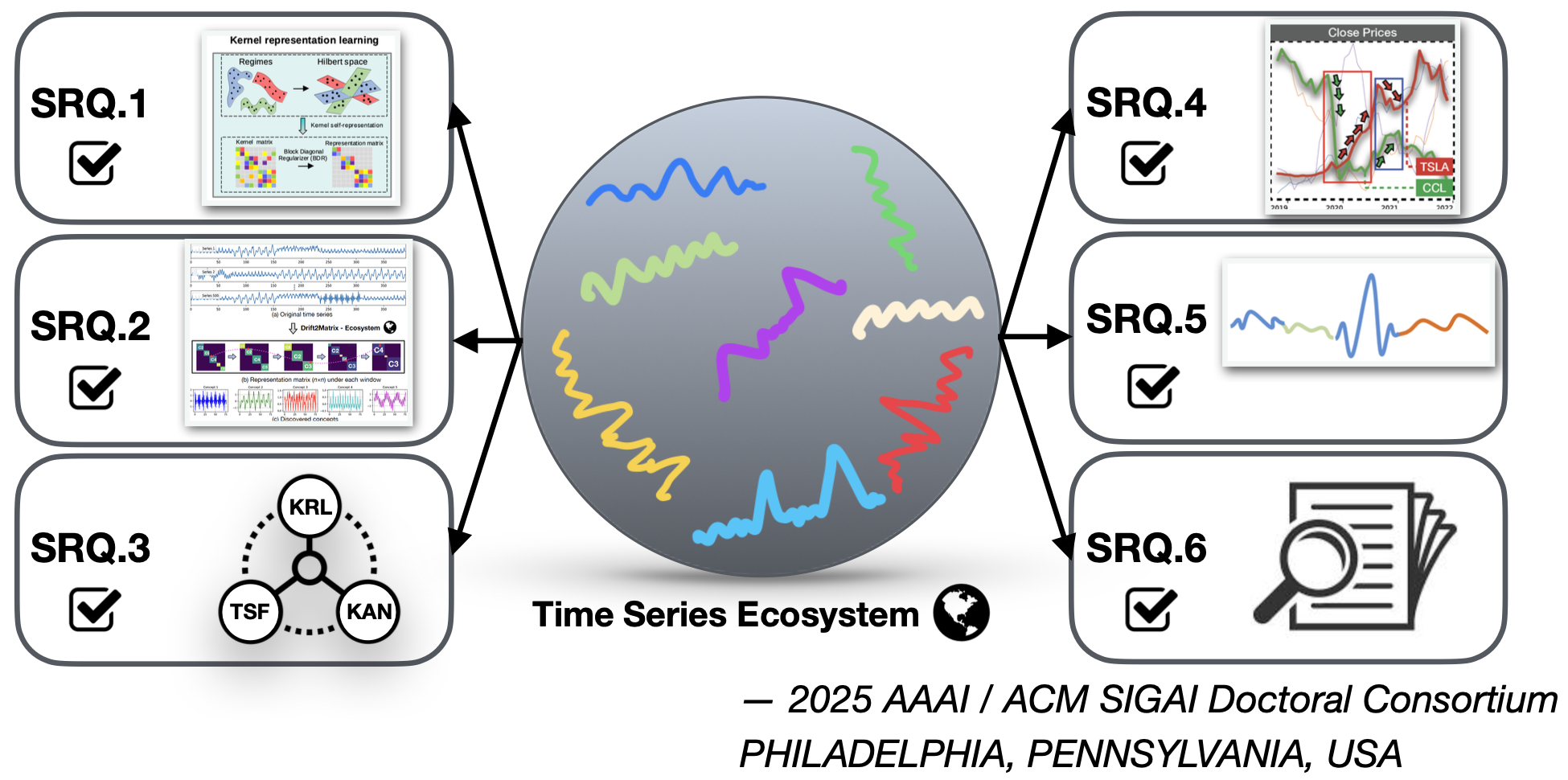

During my PhD, I have explored various aspects of Kernel Representation Learning (KRL) for time series, progressing from foundational research to more complex and dynamic applications.

In my first year, I focused on self-representation learning and multiple kernel learning, applying them to multi-modal categorical sequences, where each modality was modeled using a separate kernel. This work demonstrated the power of kernel-based methods in capturing structured dependencies across different modalities.
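To make "one kernel per modality" concrete, here is a minimal Python sketch of the standard multiple kernel learning setup. Each modality gets its own kernel, and the kernels are combined as a convex sum K = beta_1 K_1 + ... + beta_M K_M. This illustrates the general idea under stated assumptions and is not the method from Xu's papers. The cosine-normalized 1-spectrum (symbol-count) kernel, the helper names, the made-up data, and the fixed weights are all hypothetical. A real MKL method would learn the weights from data.

```python
from collections import Counter
from math import sqrt

def spectrum_kernel(a, b):
    """Cosine-normalized 1-spectrum kernel: inner product of symbol-count vectors."""
    ca, cb = Counter(a), Counter(b)
    dot = sum(ca[s] * cb[s] for s in ca)
    norm = sqrt(sum(v * v for v in ca.values()) * sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0

def combined_kernel(x, y, weights):
    """Hypothetical MKL combination: sum over modalities m of beta_m * k(x[m], y[m])."""
    assert all(w >= 0 for w in weights) and abs(sum(weights) - 1.0) < 1e-9
    return sum(w * spectrum_kernel(xm, ym) for w, xm, ym in zip(weights, x, y))

def gram_matrix(samples, weights):
    """Pairwise combined-kernel values for a list of multi-modal samples."""
    return [[combined_kernel(x, y, weights) for y in samples] for x in samples]

# Each sample holds two categorical sequences (two modalities); the data is made up.
samples = [
    (["login", "view", "view", "buy"], ["mobile", "mobile"]),
    (["login", "view", "logout"], ["desktop", "mobile"]),
    (["view", "buy", "buy"], ["mobile"]),
]
G = gram_matrix(samples, weights=[0.6, 0.4])
for row in G:
    print([round(v, 3) for v in row])
```

Each per-modality kernel is cosine-normalized and the weights sum to one. As a result, every sample has self-similarity 1, and the combined Gram matrix remains positive semidefinite, because a non-negative combination of valid kernels is still a valid kernel.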

As I continued my research, I wanted to make these models more adaptive – could we learn the kernel itself rather than predefining it? This question led me to explore data-driven kernel learning, where the model directly learns the kernel from the data rather than relying on manually chosen functions. This shift significantly improved the adaptability and generalization of kernel-based methods.
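One generic way to let the data shape the similarity, rather than fixing it by hand, is kernel self-representation. In this approach, each sample is reconstructed from the others in feature space by solving min_Z ||phi(X) - phi(X) Z||_F^2 + lam * ||Z||_F^2. The closed-form solution is Z = (K + lam * I)^(-1) K, and the coefficient matrix Z then serves as a data-learned affinity. The sketch below is a standard construction from the subspace-clustering literature and is not necessarily the approach taken in Xu's work. The RBF base kernel, gamma, lam, the toy data, and all helper names are illustrative assumptions.

```python
import math

def rbf_gram(X, gamma):
    """Base kernel (an assumption): K[i][j] = exp(-gamma * ||x_i - x_j||^2)."""
    return [[math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y))) for y in X]
            for x in X]

def solve(A, B):
    """Solve A Z = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [row[n:] for row in M]

def self_representation_affinity(X, gamma=1.0, lam=0.1):
    """Z = (K + lam*I)^-1 K, symmetrized into a non-negative affinity matrix."""
    K = rbf_gram(X, gamma)
    n = len(K)
    A = [[K[i][j] + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
    Z = solve(A, K)
    return [[(abs(Z[i][j]) + abs(Z[j][i])) / 2 for j in range(n)] for i in range(n)]

# Two well-separated groups of 2-D points (made-up data).
X = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
W = self_representation_affinity(X)
for row in W:
    print([round(v, 3) for v in row])
```

On this toy data, the learned affinity is high within each group and near zero across groups. The block structure is therefore carried by Z, which is fitted to the data, rather than being imposed by hand.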

In my third year, I extended kernel representation learning to high-dimensional and dynamically evolving time series. I developed a framework that models multiple time series as an evolving ecosystem, allowing us to track nonlinear relationships and structural changes over time. This approach has been particularly effective in domains such as finance and healthcare, where detecting patterns and adapting to concept drift is crucial. Through kernel-induced representation learning, my research addresses challenges in dynamic environments, concept drift adaptation, and regime-switching modeling, ultimately improving both predictive accuracy and interpretability in real-world applications.

Now, in my final year, I will present my doctoral research, "Kernel Representation Learning for Time Sequence: Algorithm, Theory, and Applications", at the 2025 AAAI/SIGAI Doctoral Consortium. There, I will share my work on the theoretical foundations, algorithmic advances, and practical applications of KRL in time series analysis. (See the figure above.)

Is there an aspect of your research that has been particularly interesting?

One of the most fascinating aspects of my research has been modeling multiple time series as an evolving ecosystem using Kernel Representation Learning (KRL). Traditional time series models often assume static relationships between variables, but in real-world scenarios – such as financial markets, healthcare monitoring, and environmental systems – these relationships are constantly shifting due to external factors and internal dependencies.

By treating time series as an evolving ecosystem, my research allows us to track how dependencies change over time, adapt to concept drift, and uncover hidden structures that are not easily captured by traditional models. This approach has been particularly exciting because it provides a new perspective on dynamic environments, enabling models to automatically learn adaptive representations and capture nonlinear relationships between sequences in a principled way.
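The sketch below shows one simple way to track changing dependencies in kernel terms. It computes the Hilbert-Schmidt Independence Criterion (HSIC), a standard kernel measure of nonlinear dependence, over sliding windows of two series. This is an illustration built on several assumptions, not the ecosystem framework itself. The RBF kernels, the gamma values, the window and step sizes, the synthetic data, and the helper names are all hypothetical.

```python
import math

def rbf_gram_1d(v, gamma):
    """RBF kernel matrix for a window of scalar observations."""
    return [[math.exp(-gamma * (a - b) ** 2) for b in v] for a in v]

def hsic(x, y, gamma_x=1.0, gamma_y=1.0):
    """Biased HSIC estimate tr(K H L H) / (n - 1)^2, where H is the centering matrix."""
    n = len(x)
    K = rbf_gram_1d(x, gamma_x)
    L = rbf_gram_1d(y, gamma_y)
    means = [sum(row) / n for row in K]  # K is symmetric: row means equal column means
    grand = sum(means) / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            # (H K H)[i][j] is K double-centered; tr(H K H L) equals tr(K H L H).
            total += (K[i][j] - means[i] - means[j] + grand) * L[i][j]
    return total / (n - 1) ** 2

def sliding_hsic(x, y, window, step):
    """HSIC between x and y on each window [s, s + window)."""
    return [hsic(x[s:s + window], y[s:s + window])
            for s in range(0, len(x) - window + 1, step)]

# Synthetic regime shift: y depends nonlinearly on x until t = 40, then stays flat.
xs = [2.0 * math.sin(0.3 * t) for t in range(80)]
ys = [v ** 2 if t < 40 else 0.0 for t, v in enumerate(xs)]
scores = sliding_hsic(xs, ys, window=20, step=10)
print([round(s, 4) for s in scores])
```

In this synthetic example, y follows x**2 for the first 40 steps and then stays flat. The windowed HSIC is clearly positive early on and drops to essentially zero once the dependency disappears. A linear correlation would miss part of this relationship, since x**2 is not a linear function of x.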

Additionally, bridging kernel methods with deep learning approaches – such as transformers, diffusion models, and Kolmogorov-Arnold Networks (KAN) – has been a particularly rewarding challenge. It has opened up new ways to combine mathematical rigor with the flexibility of modern deep learning, leading to models that are not only highly predictive but also interpretable – a crucial factor in domains like finance and healthcare where decision-making needs to be transparent and reliable.

I understand that you spent a year as a visiting scholar at an autonomous driving research institute. Could you talk a bit about that experience?

During my PhD, I had the opportunity to work as a visiting scholar at UISEE, a leading autonomous driving research institute whose CEO also served as the Director of Intel Labs China. This experience was particularly enriching, as it allowed me to apply my expertise in sequence modeling and machine learning to real-world decision-making problems in self-driving systems.

I still remember the moment I got my first interview call – I was playing basketball when my phone suddenly rang. The conversation lasted a long time, as we discussed my research and how it could be applied to motion planning and decision-making in autonomous driving systems. When I finally got the news that I had passed the interview and was offered the highest recognition available for an intern – a visiting scholar position – I was so excited. It was a great validation of my work and an exciting step into a new application domain.

At UISEE, my work primarily focused on lane-change decision-making for autonomous vehicles. The challenge was to develop models capable of effectively predicting and adapting to complex and uncertain driving environments. Unlike traditional time series problems, autonomous driving involves real-time multi-agent interactions, where models must not only predict future states but also make optimal decisions under uncertainty. I explored reinforcement learning and sequence modeling techniques to improve decision robustness, particularly in scenarios where vehicles needed to navigate dynamic traffic conditions and interact unpredictably with human drivers. As part of this research, I published a paper and contributed to a patent application, which provided me with valuable experience and deeper insights into the practical challenges of applying AI in autonomous systems.

Your next position will be as a postdoc at McGill University. What research will you be focusing on there?

I’m going to be a postdoctoral fellow at McGill University, where I will work with Professor Yue Li and Professor Archer Y. Yang, focusing on representation learning and explainable AI (XAI) for healthcare data. My research will involve developing adaptive and interpretable models for analyzing electronic health records (EHRs), multi-modal patient data, and biomedical signals to improve predictive modeling and decision support in healthcare.

I aim to investigate foundation models for representation learning, focusing on how to design structured, efficient, and interpretable models. This includes studying the expressivity of neural architectures, the stability of learned representations under perturbations, and the role of inductive biases in model generalization. Additionally, I will explore how advances in large language models (LLMs) and generative AI can be integrated into structured healthcare data analysis, particularly in areas such as causal reasoning, uncertainty quantification, and robust inference. By combining mathematical rigor with practical considerations, we hope to develop models that not only improve predictive performance but also provide deeper theoretical insights into the mechanisms of learning and decision-making in complex medical systems.

What advice would you give to someone thinking of doing a PhD in the field?

Pursuing a PhD is a challenging yet rewarding journey that requires passion, resilience, and adaptability. Much like fitness training, the process can often feel tedious, and there will be times when you feel like giving up. Progress may seem slow, and the daily grind of research – reading papers, debugging models, revising manuscripts – can be frustrating. However, just as in fitness, the real results come with consistency and long-term effort. The breakthroughs, the deep understanding, and the ability to push the boundaries of knowledge only emerge after persistent effort over time. Staying motivated, celebrating small wins, and embracing the learning process will help you push through the challenging moments and ultimately make your PhD journey fulfilling.

Could you tell us an interesting (non-AI related) fact about you?

Outside of research, I have a deep passion for sports and fitness. I was a member of the university’s basketball team and also had the honor of winning the Sherbrooke Badminton Championship. I enjoy basketball, swimming, and badminton, and I find that staying active helps me clear my mind and maintain a balanced lifestyle. Beyond competitive sports, fitness is an essential part of my daily routine – I make it a priority to work out every day.

I also love photography, especially capturing landscapes and cityscapes during my travels. Exploring new places through the lens allows me to appreciate details that often go unnoticed, much like uncovering hidden patterns in data.

About Kunpeng


Kunpeng (Chris) Xu is a final-year Ph.D. student in Computer Science at Université de Sherbrooke (UdeS), supervised by Professor Shengrui Wang, and an incoming Postdoctoral Fellow at McGill University, where he will work with Professor Yue Li and Professor Archer Y. Yang. His research focuses on time series analysis, kernel learning, and self-representation learning, with applications in forecasting, concept drift adaptation, and interpretability. He spent a year as a visiting scholar at UISEE, where he worked on reinforcement learning for autonomous driving, and he collaborated with Laplace Insights to study regime switching in financial time series. He has served as a Session Chair at PAKDD 2024 and as a Program Committee (PC) member for top conferences such as NeurIPS, ICLR, ICML, AAAI, IJCAI, ICDM, and KDD. He is also a reviewer for leading journals, including IEEE TKDE, IEEE TNNLS, ESWA, KBS, and PR.
