AIhub.org

AI ring tracks spelled words in American Sign Language

Hyunchul Lim wears the SpellRing. Photo credit: Louis DiPietro

By Louis DiPietro

A Cornell-led research team has developed an artificial intelligence-powered ring equipped with micro-sonar technology that can continuously and in real time track fingerspelling in American Sign Language (ASL).

In its current form, SpellRing could be used to enter text into computers or smartphones via fingerspelling, which is used in ASL to spell out words without corresponding signs, such as proper nouns, names and technical terms. With further development, the device could potentially be used to continuously track entire signed words and sentences.

“Many other technologies that recognize fingerspelling in ASL have not been adopted by the deaf and hard-of-hearing community because the hardware is bulky and impractical,” said Hyunchul Lim, a doctoral student in the field of information science. “We sought to develop a single ring to capture all of the subtle and complex finger movement in ASL.”

Lim is lead author of “SpellRing: Recognizing Continuous Fingerspelling in American Sign Language using a Ring,” which will be presented at the Association for Computing Machinery’s conference on Human Factors in Computing Systems (CHI), April 26-May 1 in Yokohama, Japan.

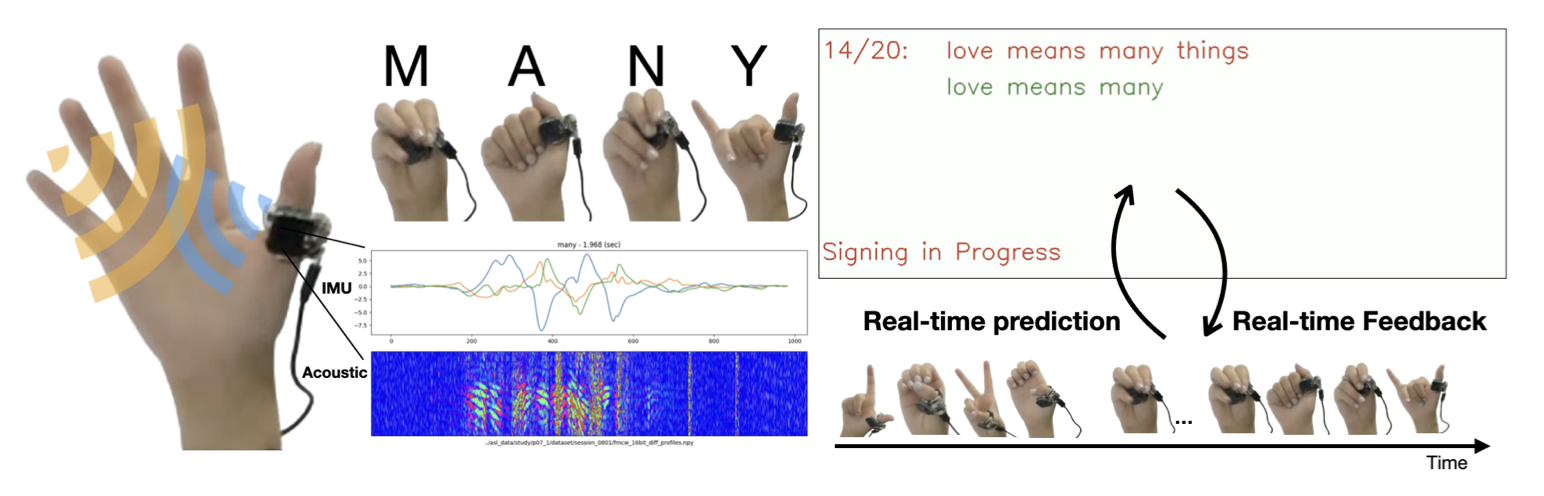

Figure from SpellRing: Recognizing Continuous Fingerspelling in American Sign Language using a Ring showing a schematic of the system.

Developed by Lim and researchers in the Smart Computer Interfaces for Future Interactions (SciFi) Lab, in the Cornell Ann S. Bowers College of Computing and Information Science, SpellRing is worn on the thumb and equipped with a microphone and speaker. Together they send and receive inaudible sound waves that track the wearer’s hand and finger movements, while a mini gyroscope tracks the hand’s motion. These components are housed inside a 3D-printed ring and casing no bigger than a standard U.S. quarter.
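To make the micro-sonar idea more concrete, here is a minimal sketch of active acoustic ranging. It rests on assumptions the article does not confirm: the speaker emits a short frequency sweep (a "chirp") in a high band that most adults hear poorly or not at all (18-22 kHz here, sampled at 48 kHz), and the microphone recording is cross-correlated with that sweep to form an "echo profile" whose peak marks the delay of the strongest reflection. The signal parameters and the function names `make_chirp`, `echo_profile` and `lag_to_distance` are hypothetical and are not SpellRing's actual design. Stacking such profiles frame by frame would yield a 2D array, which is one plausible reading of what the article calls sonar images.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at about 20 °C


def make_chirp(f0, f1, n, fs):
    """Hypothetical transmit signal: linear sweep from f0 to f1 Hz, n samples at fs Hz."""
    duration = n / fs
    rate = (f1 - f0) / duration  # sweep rate in Hz per second
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * rate * t * t))
            for t in (i / fs for i in range(n))]


def echo_profile(tx, rx):
    """Cross-correlate received audio rx with the transmitted chirp tx.

    profile[lag] is large when rx contains a copy of tx delayed by `lag` samples.
    """
    n = len(tx)
    return [sum(tx[i] * rx[lag + i] for i in range(n))
            for lag in range(len(rx) - n + 1)]


def lag_to_distance(lag, fs):
    """Convert an echo delay in samples to reflector distance in metres."""
    round_trip_s = lag / fs
    return SPEED_OF_SOUND * round_trip_s / 2  # sound travels out and back


fs = 48_000
tx = make_chirp(18_000, 22_000, 96, fs)  # 2 ms sweep
delay = 14                               # simulated echo delay in samples
rx = [0.0] * delay + [0.3 * s for s in tx] + [0.0] * 20  # one attenuated echo
profile = echo_profile(tx, rx)
peak = max(range(len(profile)), key=profile.__getitem__)
print(peak, round(lag_to_distance(peak, fs), 3))  # 14 0.05
```

In this toy setup, a 14-sample delay corresponds to a reflector about 5 cm away, roughly the scale of thumb-to-finger distances. A real device would also have to separate the direct speaker-to-microphone path and many overlapping reflections.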

A proprietary deep-learning algorithm then processes the sonar images and predicts the fingerspelled ASL letters in real time, with accuracy similar to that of many existing systems that require more hardware.
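The article does not describe the model beyond calling it a proprietary deep-learning algorithm. For continuous recognition, one common approach in the research literature (an assumption here, not a description of SpellRing) is for a network to output, for every time frame, a probability for each letter plus a "blank" symbol, trained with connectionist temporal classification (CTC). The simplest way to turn those per-frame outputs into text is greedy CTC decoding, sketched below; `BLANK`, `best_path`, `collapse` and `greedy_ctc_decode` are hypothetical names.

```python
BLANK = "_"  # hypothetical "no new letter" symbol used in CTC-style decoding
ALPHABET = [BLANK] + [chr(c) for c in range(ord("A"), ord("Z") + 1)]


def best_path(frame_probs, alphabet=ALPHABET):
    """Pick the most probable symbol in each frame (probabilities index-aligned with alphabet)."""
    return [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]


def collapse(path):
    """CTC rule: merge runs of the same symbol, then drop blanks."""
    letters = []
    prev = None
    for sym in path:
        if sym != prev and sym != BLANK:
            letters.append(sym)
        prev = sym
    return "".join(letters)


def greedy_ctc_decode(frame_probs, alphabet=ALPHABET):
    """Per-frame argmax followed by the CTC collapse rule."""
    return collapse(best_path(frame_probs, alphabet))


# A blank between the two L's keeps them as separate letters.
print(collapse(["H", "E", "E", "L", "L", BLANK, "L", "O", BLANK]))  # HELLO
```

Because held handshapes span many frames, repeated per-frame predictions are merged; only a blank between them lets the decoder emit a genuine double letter.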

The researchers evaluated SpellRing with 20 experienced and novice ASL signers, who naturally and continuously fingerspelled a total of more than 20,000 words of varying lengths. SpellRing’s accuracy ranged from 82% to 92%, depending on the difficulty of the words.
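The article does not say exactly how the 82% to 92% figures were computed. A common metric for continuous letter recognition, assumed here purely for illustration, is letter accuracy: one minus the total edit (Levenshtein) distance between the intended and recognized letter sequences, divided by the total number of intended letters, so that a wrong letter, a dropped letter and an extra letter each count as one error. The function names below are hypothetical.

```python
def edit_distance(ref, hyp):
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty ref prefix
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,              # letter dropped
                           cur[j - 1] + 1,           # extra letter inserted
                           prev[j - 1] + (r != h)))  # substitution, or match at no cost
        prev = cur
    return prev[-1]


def letter_accuracy(refs, hyps):
    """1 - (total edit distance / total reference letters), pooled over all words."""
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    total = sum(len(r) for r in refs)
    return 1 - errors / total


print(round(letter_accuracy(["CORNELL", "SONAR"], ["CORNEL", "SONAR"]), 3))  # 0.917
```

Pooling errors over all words weights long words more heavily than averaging per-word scores would; with many inserted letters, this metric can even drop below zero.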

“There’s always a gap between the technical community who develop tools and the target community who use them,” said Cheng Zhang, assistant professor of information science (Cornell Bowers CIS) and a paper co-author. “We’ve bridged some of that gap. We designed SpellRing for target users who evaluated it.”

Training an AI system to recognize the 26 handshapes, one for each letter of the alphabet, was far from straightforward, researchers said, particularly since signers naturally tweak the form of a letter for efficiency, speed and flow. “The variation between letters can be significant,” said Zhang, who directs the SciFi Lab. “It’s hard to capture that.”

SpellRing builds on a previous iteration from the SciFi Lab called Ring-a-Pose and is the latest in an ongoing line of sonar-equipped smart devices from the lab. Researchers there have previously developed gadgets that track hand poses in virtual reality, upper-body poses in 3D, and gaze and facial expressions, as well as devices for silent speech recognition, among several others.

“While large language models are front and center in the news, machine learning is making it possible to sense the world in new and unexpected ways, as this project and others in the lab are demonstrating,” said co-author François Guimbretière, professor of information science (Cornell Bowers CIS). “This paves the way to more diverse and inclusive access to computational resources.”

“I wanted to help ensure that we took every possible measure to do right by the ASL community,” said co-author Jane Lu, a doctoral student in the field of linguistics whose research focuses on ASL. “Fingerspelling, while nuanced and challenging to track from a technical perspective, comprises but a fraction of ASL and is not representative of ASL as a language. We still have a long way to go in developing comparable devices for full ASL recognition, but it’s an exciting step in the right direction.”

Lim’s future work will include integrating the micro-sonar system into eyeglasses to capture upper body movements and facial expressions, for a more comprehensive ASL translation system.

“Deaf and hard-of-hearing people use more than their hands for ASL. They use facial expressions, upper body movements and head gestures,” said Lim, who completed basic and intermediate ASL courses at Cornell as part of his SpellRing research. “ASL is a very complicated, complex visual language.”

Other co-authors are Nam Anh Dang, Dylan Lee, Tianhong Catherine Yu, Franklin Mingzhe Li, Yiqi Jin, Yan Ma, and Xiaojun Bi.

This research was funded by the National Science Foundation.

Read the work in full

SpellRing: Recognizing Continuous Fingerspelling in American Sign Language using a Ring, Hyunchul Lim, Nam Anh Dang, Dylan Lee, Tianhong Catherine Yu, Jane Lu, Franklin Mingzhe Li, Yiqi Jin, Yan Ma, Xiaojun Bi, François Guimbretière, and Cheng Zhang.