AIhub.org

Review of “Exploring metaphors of AI: visualisations, narratives and perception”

IceMing & Digit / Stochastic Parrots at Work / Licenced by CC-BY 4.0

Better Images of AI and We and AI have been exploring the role of visual and narrative metaphors in shaping our understanding of AI. As part of this, we invited researchers who have been studying the topic in different ways to shed light on how metaphors can contribute to hype specifically. Their work informs a project we are conducting to build an annotated AI metaphor database.

Introduction

From 10th to 12th September 2025, Barcelona hosted an academic gathering at the Universitat Oberta de Catalunya: the first Hype Studies Conference, titled “(Don’t) Believe the Hype!?” Organised by a transnational, collective research group of scholars and practitioners, the conference drew together researchers, activists, artists, journalists, and technology professionals to examine hype as a significant force shaping contemporary society.

Hype Studies is an emerging academic field that analyses how and why excessive expectations form around technologies, ideas, or phenomena, and what effects those expectations have on society, culture, economics, and policy. As the playful brackets around “Don’t” in the conference title suggest – both a warning and an invitation to question that warning – the aim of the conference wasn’t to simply reject hype, but to understand it. The conference approached hype critically by examining it as a phenomenon with real power and consequences that needs to be understood and questioned. The purpose here was to build collective knowledge about hype, develop better and more concrete theories, share empirical findings, and create an interdisciplinary community whilst advancing the field’s scholarship and knowledge.

Deliberately inclusive and flexible in format, the conference invited not only researchers, but also artists and professionals from many different fields, with an interdisciplinary approach. This reflected the reality that hype operates across economic trends, political agendas, media narratives, and technological development, and that no single discipline can fully capture how it works. The call for papers welcomed not only traditional scholarly research but also artistic contributions, workshops, and interactive sessions designed to map and visualise how hype functions and its impact on society. The hybrid mode of delivery further helped to open the conference to as many people as possible.

Whilst participants held differing views on whether hype should be exposed and countered or simply documented and analysed, they shared a conviction that in an age of viral media and algorithmically amplified attention, understanding hype’s mechanisms has become intellectually and practically urgent. It was within this context that the workshop “Exploring Metaphors of AI: Visualisations, Narratives and Perception” took place.

The Session

The proliferation of human-like or magical metaphors to describe AI is dramatically shaping how society perceives it, often creating unrealistic expectations and distorting the understanding of AI. Drawing inspiration from the work of Better Images of AI on how visual representations influence public understanding of AI, the workshop brings together academic research and creative practice with the goal of critically examining descriptions, narratives, and visualisations of AI, reflecting on their impact on society, and developing more accurate language and imagery that improve public understanding and discourse about AI. Contributors include Myra Cheng, analysing a large dataset of crowdsourced metaphors; Leo Lau of Mixed Initiative, presenting counter-representations to dominant AI imagery; Rainer Rehak, critically evaluating prevailing AI narratives; and Anuj Gupta with Yasser Atef, examining metaphors’ role in critical AI literacies in education.

1. Tania Duarte: Better Images of AI

Tania kicked off the session by introducing the participants and presenting Better Images of AI, a project which emerged from observing how repetitive clichés in visual representations of AI prevent people from understanding the real impact of the technology on society and the environment. Images inspired by science fiction, like glowing brains, the Terminator or cyborgs, mislead about what the technology can actually do, obscure the responsibility of those who make it, and disempower the public by creating fear, confusion or misinformation. Better Images of AI aims to create a new repository of images that better capture how AI systems actually work, what they’re used for, and their real-world effects, with more thoughtful, diverse representations that acknowledge AI’s material reality, limitations, and actual functions in everyday life. Images are freely accessible to all users under Creative Commons licences, and all visuals in the library are created by human artists. On the website, a guide titled “The Better Images of AI: A Guide for Users and Creators”, co-authored by Dr Kanta Dihal and Tania Duarte, summarises research about AI imagery. Tania then showed examples of images and invited the audience to contribute to the project.

IceMing & Digit / Stochastic Parrots at Work / Licenced by CC-BY 4.0

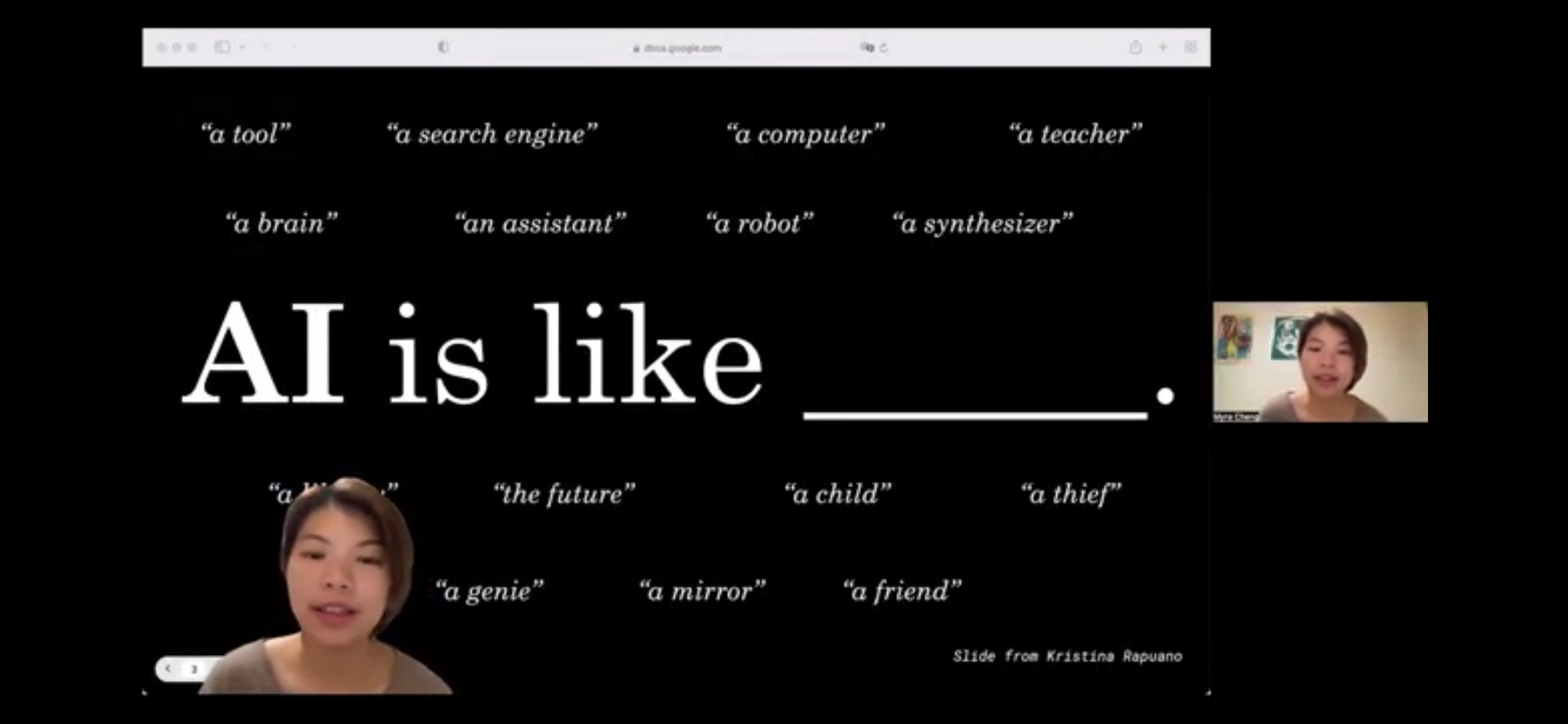

2. Myra Cheng: From tools to thieves: Measuring and understanding public perceptions of AI through crowdsourced metaphors

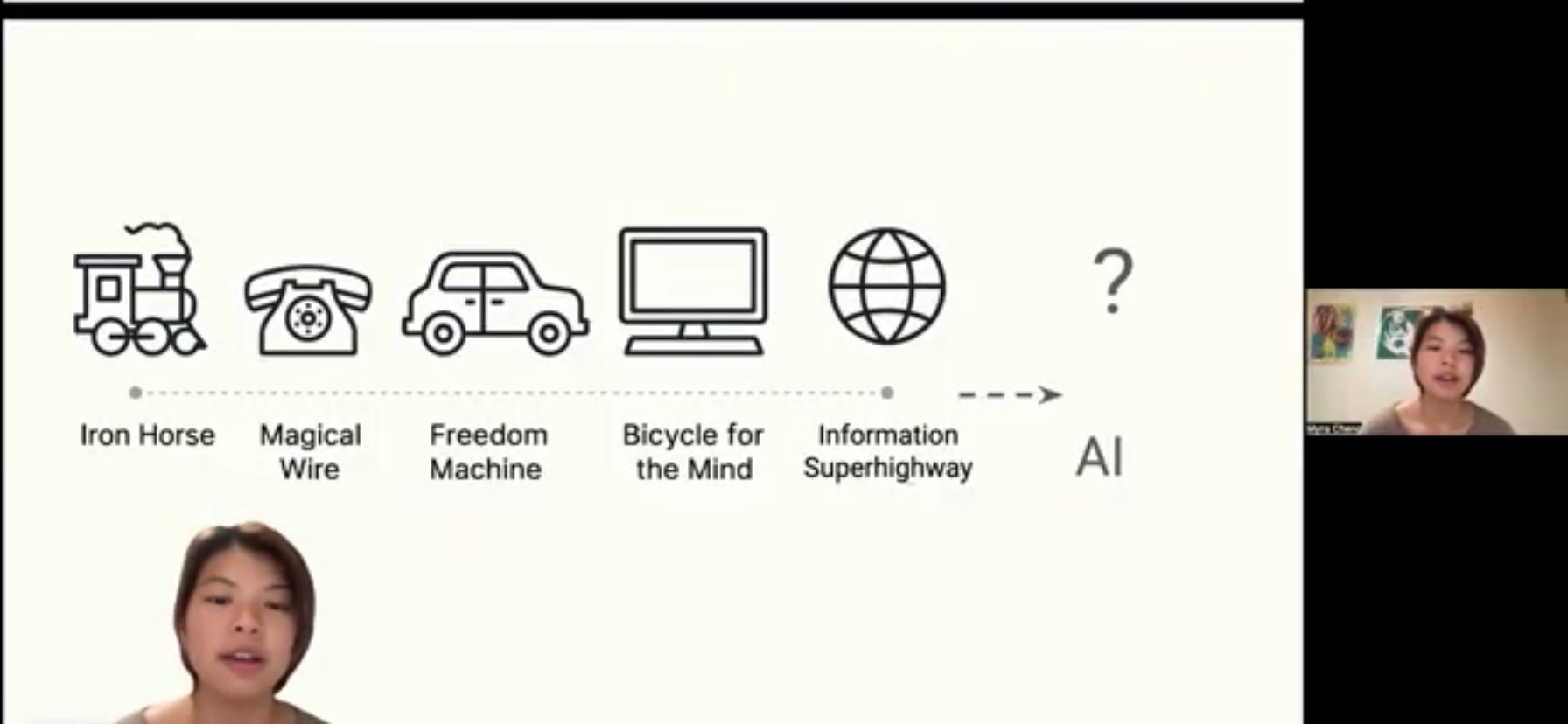

Myra Cheng from Stanford University presented her research on how metaphors reveal public perceptions of AI. She began by observing that metaphors have always been used to understand emerging technologies – trains were called iron horses, electricity was described as a magical wire, and the internet as the web. However, with AI, there is no single dominant metaphor because perceptions range widely, from love to hate.

The study she carried out with her colleagues aimed to understand public perception of AI to grasp its societal impact, recognising that people’s implicit perceptions play a powerful role in shaping interaction with technology.

With that purpose, the researchers asked participants to complete the sentence “AI is like ______”. They collected over 12,000 metaphors over a 12-month period (2023-2024) from a representative sample of the American population. Then they carried out a large-scale analysis to investigate how people’s perceptions of AI changed during this time. Using mixed methods (quantitative clustering and qualitative refinement), they identified 20 dominant metaphors, with tool, brain, and thief being predominant and accounting for about 10% of the metaphors.

The wide range of metaphors included mechanical versus human-like descriptions (tool versus teacher), warm versus cold (friend versus thief), and capable versus not capable (genie versus animal). To capture the impact of the dominant metaphors, the researchers measured three implicit perceptions: anthropomorphism, warmth, and competence.

To measure anthropomorphism, the researchers used AnthroScore, a method developed to score the level of human-likeness in a given text. This works by taking the word “AI” out of the “AI is like” sentences and determining whether the remaining sentence describes a human (e.g., “is a friend”) or an object (e.g., “is a tool”). To measure warmth and competence, they used Fraser’s semantic axis methods.
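
One way to picture a score of this kind is as a log-ratio: once “AI” is removed from the sentence, how much more likely is a human pronoun than an object pronoun to fill the gap? The sketch below is a minimal illustration under that assumption, not the study’s implementation. The function name, the pronoun lists and the probabilities are all hypothetical; in practice the probabilities would come from a language model rather than being typed in by hand.

```python
import math

# Assumed pronoun lists for illustration; the study's actual lists may differ.
HUMAN_PRONOUNS = {"he", "she", "him", "her"}
OBJECT_PRONOUNS = {"it", "its"}


def human_likeness_score(gap_probs: dict[str, float]) -> float:
    """Log-ratio of human-pronoun to object-pronoun probability for the gap.

    gap_probs maps candidate words for the removed "AI" slot to probabilities.
    Positive scores lean human-like, negative scores lean object-like.
    """
    p_human = sum(gap_probs.get(word, 0.0) for word in HUMAN_PRONOUNS)
    p_object = sum(gap_probs.get(word, 0.0) for word in OBJECT_PRONOUNS)
    if p_human <= 0.0 or p_object <= 0.0:
        raise ValueError("need non-zero probability for both pronoun groups")
    return math.log(p_human / p_object)


# Made-up probabilities for "___ is like a friend" and "___ is like a tool".
friend_probs = {"he": 0.30, "she": 0.25, "it": 0.20}
tool_probs = {"he": 0.02, "she": 0.01, "it": 0.60}

print(human_likeness_score(friend_probs) > 0)  # friend leans human-like
print(human_likeness_score(tool_probs) < 0)    # tool leans object-like
```

A log-ratio keeps the score symmetric around zero, which makes it easy to compare metaphors on either side of the human/object divide.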

Next, for each dominant metaphor, the researchers scored anthropomorphism. They found considerable variation in anthropomorphism levels across metaphors. Importantly, anthropomorphism doesn’t necessarily mean positive perceptions – for instance thief is negative, whilst library is positive. After that, they measured warmth and competence for each metaphor. For example: search engine scored high in competence but low in warmth; thief scored low in both warmth and competence; animal scored high in warmth but low in competence; assistant scored high in both warmth and competence. Across all metaphors, they noted that the majority were high in warmth and competence, while there were variations in anthropomorphism.

A crucial finding was the shift in implicit perceptions over time. As the metaphors were collected over a year, the changes revealed that perceptions of AI evolved with the technology. In 2023, when ChatGPT became mainstream, the prevalent metaphors were computer, search engine, and synthesiser – all high in competence but low in warmth, indicating AI was perceived as a high-functioning but cold expert. Fast forward just one year, and these terms had been replaced by metaphors like assistant, friend, and teacher, which are highly warm, human-like, and collaborative. This demonstrates that in just one year, there was an intensification of anthropomorphism and warmth.

These metaphors may reflect, and in turn shape, meaningful differences in how we interact with AI. For instance, people who describe AI as warm and human-like may be more likely to trust and adopt AI. The dominant metaphors and their implicit perceptions may serve as predictors of trust and adoption.

Therefore, the team ran further measurements on the dominant metaphors to see how the implicit perceptions (anthropomorphism, warmth and competence) of AI influence trust and adoption. The results were that conceptualising AI as a helpful human-like entity (friend, teacher, assistant) facilitates trust. Metaphors scoring high on warmth, competence, and anthropomorphism – like God or brain – were the most predictive of trust and willingness to adopt.

The researchers also carried out further analysis that discovered interesting demographic differences. For instance, women and non-binary people trust AI less and view it as less competent and less warm, whilst older people trust AI more and anthropomorphise it more. This is particularly important for investigating how social identities shape human-AI interactions and addressing the effects of AI on vulnerable and marginalised groups. There are lots of concerns about the harm of AI being too anthropomorphic or too warm: people may be misled by the hype, may be over-reliant on AI, or may find it deceptive. The study suggests that these harms affect different groups disproportionately, and some people may be more vulnerable than others. Specific risks include over-trust, over-reliance, and emotional dependence.

Myra concluded by stressing the importance of understanding AI hype by asking ourselves what metaphors are being used by tech companies to market AI models, and how this contributes to the hype. As these metaphors are clearly shaping people’s perceptions, we need new, more adequate metaphors to counteract the potential harms of AI.

New metaphors that may be useful would leverage the level of anthropomorphism so that they are more intuitive and help people with mental models, but also avoid the harms of anthropomorphism, like over-reliance, deception, and emotional dependence. That’s the next direction of research in AI metaphors: investigating what the metaphors are that really help people to understand the harms of AI, and how we can design better metaphors.

Myra Cheng presenting a history of technology metaphors.

3. Rainer Rehak: AI Narrative Breakdown. A Critical Assessment of Power and Promise. ACM FAccT ’25

Rainer Rehak from the Weizenbaum Institute presented his research on different AI narratives and their use cases, analysing them critically through the lenses of a range of disciplines: critical informatics, critical data and algorithm studies, data protection theory, philosophy of mind, sustainability studies, and semiotics.

Rainer observed that there are currently very high expectations of AI, from transforming all areas of health and well-being to increasing the potential for democracy and acting as a game changer for sustainable development. They all fit into a pattern, with some very positive narratives like the resolution of war and the climate crisis (utopia) and some very negative ones like the end of humanity (dystopia). He argued that the way we narratively conceptualise AI shapes the public discourse; therefore, we need to deconstruct these narratives. This is because the meaning of AI is not clear, and this has an impact on politics, policymakers, and economic decisions. In presenting his research, he first provided clarity on the origin of AI, then presented and critically analysed some common AI narratives.

Starting with an introductory background on AI history, Rainer noted that AI narratives were already emerging in the 1950s and 1960s. The term artificial intelligence was coined in 1955 in the proposal for a Dartmouth project with McCarthy and Minsky on computers simulating human thinking. Now it is a field in computer science. Critical work on AI is as old as AI itself: it predates the term and goes back to Turing’s work in the 1950s.

Rainer argued that there are two types of AI: strong AI and weak AI, to which he added a third type that he called Zeitgeist AI. Weak AI is designed for specific tasks (generative AI is in this category, designed to produce images and text). Strong AI or AGI (Artificial General Intelligence) is capable of autonomous learning and abstract thinking. Although there have been many efforts in this direction, to date, we don’t have such an AI. Zeitgeist AI is his proposed term, which indicates the vague way AI is currently used, which can mean anything.

Rainer then undertook an analysis of misleading narratives, focusing on the most prevalent ones, illustrated briefly below.

Decision, Agency and Autonomy: To be able to make decisions, have agency and autonomy, the actor has to have alternatives of choice, responsibility, intention, and an inner model of the world; however, none of these are present in AI systems, which can give different responses but just as a result of randomness.

Knowledge and Truth: These narratives are the result of attributing to AI the ability of communication, which is disproved by the fact that AI linguistically deals with form and not content. GPTs are statistical token transformers which measure the statistical distance of words they’ve been fed, so their final outputs are a representation of formal relations between words. Therefore, there’s no knowledge or ability to lie. The form is the prevalent function, not the content.

Prediction: As statistical systems, AI can detect patterns in a dataset based on the past to predict future outcomes. However, while this works on data depending on physical laws (like weather), it is not accurate in social sciences, like predicting crime, because societal phenomena don’t depend on physical laws. For instance, predictive policing has been done using earthquake models, but earthquake models are based on physical laws, whilst policing is based on social science, rendering predictive policing ineffective.

Neutrality and Apolitical Optimisation: The narrative of AI as neutral is equally unfounded because neutrality is based on data selection, project design and fairness, and all these must be pre-negotiated before applying AI. This also applies to optimisation: it implies negotiation, and the negotiation must be done before applying AI. For example, traffic optimisation: what does optimisation mean? Is it about cars, health, distance, or emissions? All these must be designed and negotiated in advance.

After critiquing the prevalent AI narratives propagated by organisations with political and economic power and the media, Rainer argued that the way we engage with AI is driven by these false narratives, and he therefore illustrated some consequences with concrete use cases.

Summarising Text: AI is largely used for summarising text. This mostly results in humans having to correct the summaries, which is more time-consuming than doing the summaries from scratch. For example, a study run by the Australian government found that the summaries made by AI require more work and not less.

Interaction and Communication: We see chatbots generating unreliable text. For instance, a Canadian airline was held responsible for its chatbot giving passengers bad advice.

Data Quality: The use of AI to improve data quality has resulted in failure. Meta attempted this in 2022 with the model Galactica, which was fed only scientific data, but after three days they had to shut it down because it was generating nonsense.

Economic Impact: This has not materialised, except for the companies that produce microchips and provide AI services.

Long-term Goals: In spite of the plan of Altman in 2019 to generate investment returns from generally intelligent systems, we are still very far away from AGI.

Sustainability: There are a lot of interesting cases ongoing for sustainability, like optimisation of the circular economy, waste separation and disposal, but they are limited in scope. What is definitely increasing instead are the cases of unsustainability, like increased consumption, production, and fakes.

Rainer concluded that we should deconstruct AI narratives and be more aware of the Zeitgeist, as AI is used to refer to many different technologies. We need to ask: What are the problems to be solved? What are the risks and benefits, and for whom? What are the optimal criteria? Currently, AI systems can do a lot of things, but of mediocre quality. We also need to consider the ecological and decolonial issues. AI does not replace the need for societal transformation. We need new guiding principles like digital sufficiency, digital decolonialism, and digital regrowth, and if AI must be used, then only in small models.

4. Anuj Gupta and Yasser Atef: Assistant, Parrot, or Colonizing Loudspeaker? ChatGPT Metaphors for Developing Critical AI Literacies

Anuj from the University of South Florida and Yasser from the American University in Cairo presented their article, written in collaboration with two other colleagues (Anna Mills and Maha Bali), as a result of group work on AI literacy. Their presentation took an engaging, lively interview style, with each taking turns asking the other questions about the paper.

Yasser started the conversation by asking how multiliteracy and critical AI literacy shape the idea of metaphor. Anuj explained that the group was reflecting on the relation between AI hype and the term “critical AI literacy”, noting that two perspectives merged in critical AI literacy: functional AI literacy (how to use these tools) and critical AI literacy (understanding the socio-cultural literacies and how these impact the understanding of AI). They grouped these perspectives under the umbrella of multiliteracy. Their research emerged from an intention to use critical AI literacy as a guiding framework to prevent harm from AI use in education and understand public discourse on AI by focusing on metaphors as discursive phenomena that shape thinking and behaviour. Then their collaboration began.

Yasser observed that metaphors are literary devices and asked how the understanding of metaphor evolved in the paper. Anuj responded that AI metaphors are important because they shape public discourse. As some researchers in the group had linguistic backgrounds, the group started reflecting on Lakoff and Johnson’s work on metaphor and their argument that metaphors are not just linguistic devices but shape our behaviour.

Lakoff and Johnson’s famous example noted that arguments are commonly described using the metaphor of war or fight (making us confrontational, as demonstrated by the degeneration of social media discussions). If we were to describe arguments as a dance instead, our perspective would change, and we would deliberate and discuss more harmoniously. On these theoretical premises, the group set out to explore how AI metaphors change the perception of AI.

Yasser asked what the approach to collaboration was. Anuj responded that, interestingly, as the researchers were based in different countries (USA, Egypt, with one originally from India), they had four distinct vantage points with different life experiences and academic roles, making their collaboration cross-country and cross-language.

The method they used was digital collaborative autoethnography. Autoethnography is a reflection on personal experiences to understand cultural experiences. As this was collaborative, each individual made their own reflection, then they collectively analysed the data. They all collected the metaphors they were exposed to from various sources, including peer-reviewed literature, news, media, blogs, workshops and conversations.

They then contributed to a collaborative Word document, writing about how these metaphors made them feel, think, and how they influenced their experience of AI. Then they collected their reflections into a heuristic map of metaphors of AI to understand their impact.

Yasser then explained that they designed a graph with two axes: one for human-likeness, ranging from human-like metaphors at one extreme to non-human metaphors at the other and animal or plant-like (semi-human) in the middle; and the other for their framework of multiliteracy, indicating functional, critical, and rhetorical approaches to AI.

They noted how some metaphors were very human-like – for instance, Gemini calls itself a helper – whilst others, like stochastic parrot, are more critical of AI.

Then, they plotted the metaphors on the diagram depending on how they related to these two dimensions, for instance, helper is human and functional, whilst stochastic parrot is semi-human and represents a critical view of AI.

Anuj added that the diagram also represents how each metaphor shapes how we use AI: helper means we think AI is unbiased and helpful, and therefore, we are less critical of it and less inclined to verify the information it gives us. On the other hand, parrot means we think AI is a statistical machine, not human-like, and therefore, we are more critical towards it.
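
The two-axis map described above can be read as a small data structure in which each metaphor holds one position on each axis. The sketch below is only an illustration of that idea, not the authors’ diagram: the class and function names are hypothetical, and it records just the two placements mentioned in the session (helper and stochastic parrot).

```python
from dataclasses import dataclass
from enum import Enum


class HumanLikeness(Enum):
    HUMAN = "human-like"
    SEMI_HUMAN = "animal or plant-like"
    NON_HUMAN = "non-human"


class Literacy(Enum):
    FUNCTIONAL = "functional"
    CRITICAL = "critical"
    RHETORICAL = "rhetorical"


@dataclass(frozen=True)
class MetaphorPlacement:
    """One metaphor's position on the two axes of the heuristic map."""
    metaphor: str
    human_likeness: HumanLikeness
    literacy: Literacy


# Only the two placements reported in the session; others are not guessed.
PLACEMENTS = [
    MetaphorPlacement("helper", HumanLikeness.HUMAN, Literacy.FUNCTIONAL),
    MetaphorPlacement("stochastic parrot", HumanLikeness.SEMI_HUMAN, Literacy.CRITICAL),
]


def metaphors_for(literacy: Literacy) -> list[str]:
    """Return the metaphors placed under a given literacy approach."""
    return [p.metaphor for p in PLACEMENTS if p.literacy is literacy]


print(metaphors_for(Literacy.CRITICAL))
```

Treating both axes as categories rather than numeric scales mirrors the description of the map as a heuristic: it groups metaphors for discussion rather than measuring them.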

Yasser observed that it’s crucial to explore AI and accessibility, as people do not question the biases on which AI is built. For instance, there are some risks in the classrooms, as some students are not questioning the biases that these models were trained with – for example, people in Kenya are exploited to work for AI. Additional concerns include inequality in access: students using paid services do not have equal experiences to those using free services, and so there is clear inequity. More research should be done on AI and disability, marginalisation, and more effort to teach students to be more critical, to understand and learn to question AI.

Anuj concluded by reflecting on the impact of their paper and future research. The paper has been downloaded over 6,000 times and will hopefully inspire other researchers to further explore the impact of AI metaphors. This may include using larger datasets to explore their impact on people, involving schools and students, and using well-designed resources on AI like Better Images of AI.

Yasser emphasised that AI should be better embedded in accessibility applications across the world. Currently, Be My Eyes is doing good work to support blind and low vision people to connect for assistance, but not much else is being done in this area. We know, for instance, that AI-generated text poses a lot of risks as it is not always accurate. It would be a positive step forward to make inaccessible websites accessible through AI and to do research that helps remove barriers and not increase marginalisation. AI could be better designed for disability in applications like accessible websites, and research should work to remove bias.

5. Leo Lau: Echo Techno Bio Mytho Casino: New Visual Metaphors of AI

Leo from Mixed Initiative shared his experience of using AI metaphors in creative design. As a designer in the industry working for Goodnotes, a company that uses AI to help with digital note-taking, over the last two years he has designed several AI features and dealt with many metaphors or mental models, with the aim of helping users interact with AI features.

However, he noted that these metaphors were mostly about sparkles, so he decided to engage with colleagues to find less common alternatives for design and marketing purposes. Over the summer, he had a brainstorming session with his team, and they whiteboarded several new metaphors. It was an interesting exercise to stretch the boundaries of tech creativity, and some interesting patterns started to emerge.

Curious to explore further beyond his close circle in the office, Leo worked with Mixed Initiative, a local community in Hong Kong that he founded two years ago, where they debate, research, and create on AI, creativity, and authenticity. As Mixed Initiative often comes across topics that require explaining difficult AI concepts in plain language, their discussion about AI metaphors was highly relevant to the community.

Leo noted that AI is often described as an industry or as a product, but it is also a concept, and so they started discussing and researching this at Mixed Initiative. They also collaborated with Tania Duarte and Better Images of AI on a workshop in London, where they used some techniques and sensory objects to create and explore metaphors.

After all these different approaches to metaphors and discussions with different groups, Leo observed five patterns or clusters of metaphors. Some metaphors are not original, coming from media or other authors, but some are a novel mix of existing ideas, culture, myths, or adaptations from different worldviews. Leo’s goal is to share these metaphors and their imagery to inspire and provoke questions.

The Five Clusters of AI Metaphors were as follows:

- Imperfect, distorted, recursive: This cluster is about metaphors referring to imperfection. For instance, mirrors that always look back or distort, implying they don’t reflect reality as it is – rear mirror, distorted mirror.

- Techno-modernity, omnipresence: These metaphors relate to how technology is being implemented in the world. The most popular metaphors are “AI as the new electricity” or “AI as plastic”, which are built on another metaphor: data as the new oil. AI is data, and data is the fuel that decomposes and is transformed into energy or plastic. “Wheels in progress” represents the image Leo built at the Better Images of AI workshop, depicting a capitalist hand running a computer mouse wheel, a metaphor of the wheel of progress that seems inevitable.

- Growth, nature, life: Metaphors related to biological entities, like animals, chaos, bonsai, dog, typing monkey, and stochastic parrot at work, where a popular AI metaphor used for criticism (the stochastic parrot) is modified here to instead show parrots as chaotic working partners collaborating with humans, so it becomes more positive and optimistic.

- Cosmic, myth, esoteric, monster: One of the most used metaphors is god – all-knowing and powerful – reflecting the rising trend of AI psychosis, where people start imagining something powerful or spiritual coming from interaction with chatbots. Superstitious uses of AI are exemplified by the Ouija board, with many hands moving across the board and channelling guidance. This metaphor hints that multiple humans are involved and all share agency, representing the ones who created the data, the ones who trained the data, and the users – all involved in output and input and all sharing the illusion of agency from an object that is inanimate. Other metaphors include walking hand and parasite.

- Exploration, chances, games: The metaphor new frontier presents the AI industry as highly unregulated and wild, whilst slot machine and playing dice refer to gambling and present LLMs as a game of probability, where one can sometimes lose and sometimes win.

Leo concluded by pointing out that reflecting on alternative AI metaphors was an opportunity to think outside the box, beyond the ordinary mainstream metaphors, because what is important is not picking the right metaphor but allowing deliberation and diversity, so that we can remain flexible and adjust our mental models as the world and technology evolve. The best metaphors, Leo argues, are those that invite dialogue with technology and use inclusive lexicons, which means there are many different ways to represent AI. The goal is to invite imagination and dialogue with rich and diverse imagery.

Leo Lau’s presentation slide.

Discussion and Metaphors from the Audience

The brief discussion afterwards between Leo and Yasser highlighted how academia and industry could collaborate on exploring ethical AI. Key themes included the importance of AI for disability access and the importance of collaboration between industry and academia in research on AI.

During the presentations, participants contributed their own metaphors for AI, engaging with the workshop’s interactive format and adding to the collective exploration of how we conceptualise artificial intelligence.

Some metaphors highlighted AI as a technology of hidden control, like ventriloquist’s doll, speaking with borrowed words while unseen operators pull the strings, or ethics-washing puppet representing the performative ethics of tech companies interested only in pursuing money.

Others captured a sense of growing unease, disempowerment and anxiety, from the overoptimistic parrot, relentlessly positive to the point of being unsettling, to the Chernobyl comparison, illustrating the sense of impending catastrophe, to Sisyphus to represent the pointless effort to manage AI.

Some participants offered scholarly framings: Turkle’s Rorschach test suggests we project onto AI what we want to see; drawing from Astra Taylor, the enclosure of knowledge commons represents corporations privatising collectively-created knowledge; bullshit, building on Frankfurt’s book, describes LLMs’ ability to speak plausibly without caring about truth; Shannon Vallor’s AI mirror reflects our own biases and values back at us.

Conclusion

By bringing together empirical research, critical analysis, creative practice, and collaborative reflection, the session illustrated that the language we use to describe AI is not merely functional but fundamentally shapes technological development, public perception, and societal impact. All contributors demonstrated how most current AI metaphors can harm or distort people’s perception of AI, and pointed out that when tech companies promote metaphorical framing that makes AI seem helpful, intelligent, or human-like, they not only create cycles of hype but also encourage people to trust and adopt AI without questioning its limitations, biases, or real-world impacts.

However, there were also optimistic notes, as all the contributors emphasised the importance of building new metaphors to help people engage with AI in a more balanced, informed, and healthy way. The challenge ahead is to create diverse, accurate, realistic representations of AI, whilst simultaneously equipping people with the critical thinking skills needed to engage thoughtfully with the technology, however it is described.

Within the broader context of the Hype Studies Conference, the workshop highlighted how metaphors of AI contribute to cycles of hype and how critical engagement with these metaphors might enable more grounded, neutral approaches to AI development and deployment.

The Conference presentation with videos embedded is available to view here, on the conference’s repository.

Please reach out to research@weandai.org if you wish to contribute to our metaphor resource.

Author bio

Cinzia is a Senior Fellow of the Higher Education Academy. She has held both academic and educational development roles at universities in Italy, the UK, and the US, exploring the impact of technology on human practices across writing, education and knowledge creation. She has presented and published internationally. Recently, she has focused her research on AI in education, working with UK educational organisations to evaluate AI teaching tools and leading research projects including the creation of AI guidelines for student use. Currently, she investigates human-AI interaction in creative and learning processes, examining their impact on metacognition.

Thanks to Max Fullalove for editing this post.

This article was originally posted on We and AI.