AIhub.org

Researching interdisciplinary methods in computational creativity – interview with Nadia Ady and Faun Rice

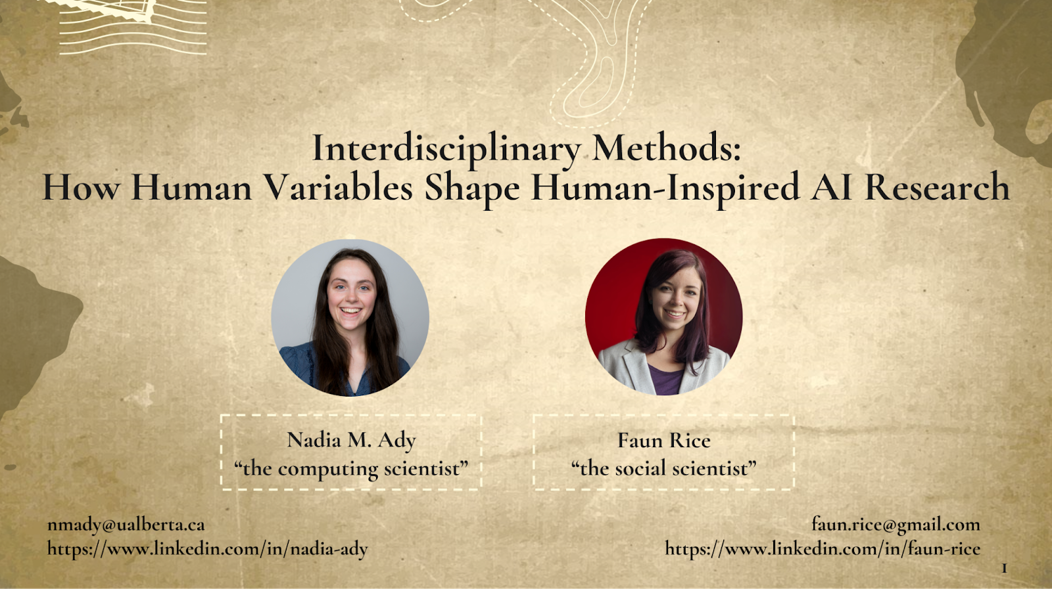

Title slide from Nadia and Faun’s presentation at ICCC’23.

Nadia Ady and Faun Rice are working on a research project exploring where artificial intelligence (AI) researchers find inspiration and ideas about human intelligence and what approaches they use to translate ideas from the disciplines that study human intelligence (e.g. social sciences, psychology, neuroscience) for work in AI. We spoke to Nadia and Faun about the project, what they’ve learnt so far, and how they plan to further develop the work.

Could you start by giving an overview of the research project?

Faun: We are doing a multidisciplinary project – I’m from the social sciences, while Nadia works on artificial intelligence. We’re interviewing other artificial intelligence researchers who work with direct analogs from human psychology and try to translate them for machines in some way. For one, we talk to them about how they find the definitions that they’re working with. Taking Nadia as an example, she works on computational curiosity, so in her case we’d ask her which definition of curiosity she selected and where it came from. We also ask how they read about what they’re studying, what literature they go to – is it psychology, neuroscience, anthropology, sociology? Then we explore what is altered or lost, due to necessity, in the process of translating an idea from other disciplines to computer science. Of course, definitions in different domains have very different structures – we’re looking at the impact of that.

Nadia: One of my largest goals with the project is to develop a greater understanding of how other researchers, like myself, go about this type of work. I’m hopeful that it will provide some guidance to others about how to do this well, and what the societal impacts of this type of work are.

What inspired you to undertake this project?

Nadia: I keep thinking back to a conversation that Faun and I had. I was working on my dissertation, thinking about what curiosity could or should mean when it’s in a machine, and we started talking about what that process was like and we became more generally interested in it.

Could you talk a bit about the interview process? How did you go about picking people to interview, and what was the structure of the interviews?

Faun: We’ve used a snowball sampling approach. Initially we read a lot of work and reached out to people who had published articles that we thought were relevant. This generally meant people who had published an article trying to translate a concept from human psychology (like forgetting) for machines. For the interviews (which were approved by the University of Alberta research ethics board) we use a semi-structured questionnaire. This means that we have themes and a set of questions that we want to hit, but we can rearrange the order based on the flow of natural conversation and we can follow up on things that are interesting. We ask people about how they do literature reviews, how they go about doing their research, what challenges (both social and technical) they might have come across in translating their concept for a machine, and what they think are the biggest implications or applications of their work. We’ve talked to 22 people so far, about half of whom work with computational creativity.

Could you talk about some of the most interesting insights that came out from the interviews?

Nadia: It was interesting to hear about people recognizing what possibilities are missed along the way when you are doing this kind of work. Most people end up taking one path of deciding what a concept means to create a system, but along the way they do notice that they’ve had to make decisions. Hearing people recognize the implications of those decision-making processes is quite interesting.

Faun: One interesting thing, which confirmed what we thought initially, is how difficult it is to do interdisciplinary work. People who’ve chosen an idea in human psychology have to work really hard to do enough reading to understand it properly. Nadia, for example, is doing a dissertation that includes a gigantic literature review of who has described curiosity in what ways over the course of human scholarship, and that’s obviously a huge challenge. I think some of the most salient findings from the project have been what strategies people used to go out to find workable ideas in other literatures – for example, citation analysis, talking to domain experts, or even popular literature. There are just all these different ways that ideas get filtered down. It’s kind of serendipitous based on people’s lives and interests, which is interesting. It really shines a light on the human element of research – everyone’s personal habits, interests and reading patterns are showing up in what we see in the machines of tomorrow.

Are there any other themes that came out in the responses?

Faun: Yes, a few. In work trying to bridge the gap between studies of human and animal brains and machines, there’s a lot of conversation about what level of analysis you are working at. For example, if you’re interested in curiosity, are you working at the neural level or at a conceptual level with cognitive models? And, depending on who you talk to, there’s a bunch of different levels – you could go all the way down to the molecular level and all the way up to the social level. This “levels of analysis” theory is a well-thought-out piece of philosophy of science that a lot of folks in interviews talked about without realizing it, whilst others explicitly cite some of the famous versions of it, like David Marr’s Vision (1982). We get into this in more detail in the paper.

Another interesting thing is this difference between focusing your emphasis on process versus product. If you’re studying computational creativity, for instance, are you interested in the creative process and replicating that for machines, or are you interested in a product that post-hoc you evaluate as creative? This has become really relevant in systems like ChatGPT and other generative AI products. After the fact, people look at the systems’ products and they say “oh wow that’s so creative”, but then there’s another school of researchers who are more interested in process. They want to know what the system is doing or if there is some sign of creative process happening before the product exists.

Nadia: There’s a really interesting history within computational creativity of the moving goalposts concern that we see throughout artificial intelligence. For example, “When computers can play chess then they’ll be intelligent”, “Oh, no, that’s not intelligent anymore now that they can do it”. That happens within creativity as well. “Once they can generate this kind of creative product, they’ll be creative”. “Oh, no, there’s something else in the process that makes humans creative and not machines”. This is an interesting phenomenon that’s well-known within computational creativity.

You mentioned ChatGPT. Have the latest iterations of generative AI models changed the narrative within the field?

Nadia: Yes, I think so. Maybe there is a bit of new thought around what creativity means, and whether there is anything further to study when these systems are generating things that are so impressive. I think generally the answer is yes. The field totally believes that there is more to learn but it’s a very different direction. Where do we incorporate these kinds of systems if we want to generate more creative products? I think that there’s still a large amount of interest in studying the creative process as well. What does it mean for a process to be creative? I don’t think these statistical decision-making devices have people convinced. You either have to clearly see that the mechanisms of the human brain are exactly doing that, and probably still be very disappointed, or find a different way of generating something creative and see that it matches better for people to really feel like the question is answered.

Faun: A lot of our data collection and interviews happened before November 2022, so before the latest iterations of these generative AI models were released. We’re following a method called grounded theory where you have iterative rounds of data collection and analysis. The idea is that you talk to the first round of people, collect early themes, and then return to collect more primary data and see how your emerging theory changes and evolves. Our findings are likely to change significantly because there is so much happening in the real world to take into account, including generative AI.

What are the next steps for the project?

Faun: We want to continue to reach out to further interviewees. We’d like to expand and talk to more researchers working with other concepts that are analogs from human psychology. Our aim is to produce a publication that looks at interdisciplinary methodologies being used in AI more broadly, beyond just the creativity space. We’d also like to do a bit more knowledge translation and write some more general science pieces.

Interested in participating in this project?

Faun and Nadia are actively looking for more interviewees who are AI researchers working with analogs from human psychology. If you are interested in contributing, by talking about your own research, you can get in touch with Faun and Nadia at faun.rice@gmail.com or nmady@ualberta.ca.

Find out more

Paper:

- Interdisciplinary Methods in Computational Creativity: How Human Variables Shape Human-Inspired AI Research, Nadia M. Ady and Faun Rice.

About the authors

Nadia Ady (she/they) is a PhD Candidate at the University of Alberta in the Department of Computing Science. Nadia’s current research interests are in artificial intelligence, specifically in the possibilities for imbuing machines with traits of creativity and curiosity.

Faun Rice (she/her) is a social researcher and writer based in Vancouver, BC. Trained in anthropology and sociology at the University of Alberta, Faun began her career as a language revitalization researcher and then a museum researcher. Today Faun works for a non-profit science, technology, and society research group, studying the intersection of labour and emerging technologies.