AIhub.org

Exploring layers in deep learning models: interview with Mara Graziani

Mara Graziani and colleagues Laura O’Mahony, An-Phi Nguyen, Henning Müller and Vincent Andrearczyk are researching deep learning models and the representations they learn. In this interview, Mara tells us about the team’s proposed framework for concept discovery.

What is the topic of the research in your paper?

Our paper Uncovering Unique Concept Vectors through Latent Space Decomposition focuses on understanding how representations are organized by intermediate layers of complex deep learning models. The latent space of a layer can be interpreted as a vector space spanned by individual neuron directions. In our work, we identify a new basis that aligns with the variance of the training data. Specifically, we decompose the latents of the training data into singular values and singular vectors. We demonstrate that starting from the singular vectors makes it easier to isolate regions of the space that correspond to high-level concepts, such as object parts, textures and shapes, that have a unique semantic meaning.
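As an illustration only (this is not code from the paper), the change of basis described above can be sketched with NumPy. The function name `singular_basis`, the random toy activations, and the choice to mean-centre the latents before decomposing them are all assumptions made for this example.

```python
import numpy as np

def singular_basis(latents):
    """Return singular values and right singular vectors of centred latents.

    latents: array of shape (n_samples, n_neurons), one row per training input.
    Mean-centring is an assumption of this sketch, not a detail from the paper.
    """
    centred = latents - latents.mean(axis=0, keepdims=True)
    # Rows of vt are orthonormal directions in neuron space, ordered by
    # decreasing singular value (that is, by decreasing explained variance).
    _, singular_values, vt = np.linalg.svd(centred, full_matrices=False)
    return singular_values, vt

rng = np.random.default_rng(0)
# Toy stand-in for a layer's activations: 200 inputs, 8 neurons, with most of
# the variance placed along the first two neuron axes.
scales = np.array([5.0, 3.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0])
latents = rng.normal(size=(200, 8)) * scales

singular_values, vt = singular_basis(latents)
# Coordinates of every input expressed in the new, variance-aligned basis.
projected = (latents - latents.mean(axis=0)) @ vt.T
```

Each row of `vt` is a candidate direction in the layer's latent space, and `projected` holds the training inputs expressed along those directions instead of along individual neurons.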

Could you tell us about the implications of your research and why it is an interesting area for study?

The deep learning models that are currently the de facto standard in several applications are still perceived as black boxes. There is no clear reasoning process behind their predictions. In this context, distilling the complex representations learned by these models into high-level concepts has great potential for positive impact. If we were able to express the model’s reasoning in terms of semantically meaningful features such as object parts, shapes and textures, then users’ interaction with, and reliance on, these systems would become easier to regulate, fostering a more controlled and user-friendly engagement.

Some methods that attribute model outcomes to high-level concepts already exist, but most of them rely on user-defined queries about the concepts. This is a limitation because user-defined queries introduce an inherent bias, namely experimenter bias (Rosenthal and Fode, 1963). Users tend to frame queries that align with their existing knowledge and expectations, inadvertently disregarding other potentially pertinent variables. Moreover, exhaustive coverage of all possible concepts is infeasible, and in some domains, such as biology or chemistry, the concepts may even be unclear or difficult to define.

Could you explain your methodology?

We propose a comprehensive framework for concept discovery that can be applied across multiple architectures, tasks, and data types. Our approach automatically discovers concept vectors by decomposing a layer’s latent space into singular values and singular vectors. By analyzing the latent space along these new axes (which are aligned with the training data variance), we identify vectors that correspond to semantically unique and distinguishable concepts.

The method consists of three separate steps. In the first step, we apply singular value decomposition to the layer’s response to the entire training dataset. In the second step, we evaluate the sensitivity of the output function along the directions of the singular vectors, and we use this as a novel estimate of each singular vector’s importance to the output values. For example, if the model were classifying different inputs, we would obtain a sensitivity-based ranking of the singular vectors for each class. The third and last step involves refining the singular vector directions so that they point to unique concept representations.
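The second step can be sketched on a toy example. The code below is a rough illustration, not the authors' implementation: it approximates sensitivity with central finite differences, and the names `rank_directions` and `toy_head`, the step size `eps`, and the synthetic data are all hypothetical. The third step (refining the directions) is not shown.

```python
import numpy as np

def rank_directions(latents, output_fn, class_index, eps=1e-3):
    """Rank singular vectors by how strongly one class output reacts to them."""
    centred = latents - latents.mean(axis=0, keepdims=True)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    scores = []
    for direction in vt:
        # Central finite difference of the class output along this direction,
        # averaged in absolute value over all inputs (an assumed estimator).
        plus = output_fn(latents + eps * direction)[:, class_index]
        minus = output_fn(latents - eps * direction)[:, class_index]
        scores.append(np.mean(np.abs(plus - minus)) / (2 * eps))
    scores = np.array(scores)
    order = np.argsort(scores)[::-1]  # most sensitive direction first
    return vt, scores, order

rng = np.random.default_rng(0)
# Toy latents: 300 inputs, 6 neurons, with decreasing variance per neuron.
latents = rng.normal(size=(300, 6)) * np.array([4.0, 3.0, 2.0, 1.0, 1.0, 1.0])

# Hypothetical two-class head in which class 1 depends only on neuron 2.
weights = np.zeros((6, 2))
weights[2, 1] = 1.0

def toy_head(h):
    return np.tanh(h @ weights)

vt, scores, order = rank_directions(latents, toy_head, class_index=1)
top_direction = vt[order[0]]
```

In this toy setup the top-ranked singular vector is the one that lines up with neuron 2, the only neuron that the class 1 output depends on. Calling `rank_directions` once per `class_index` would give a separate ranking for each class.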

What were your main findings?

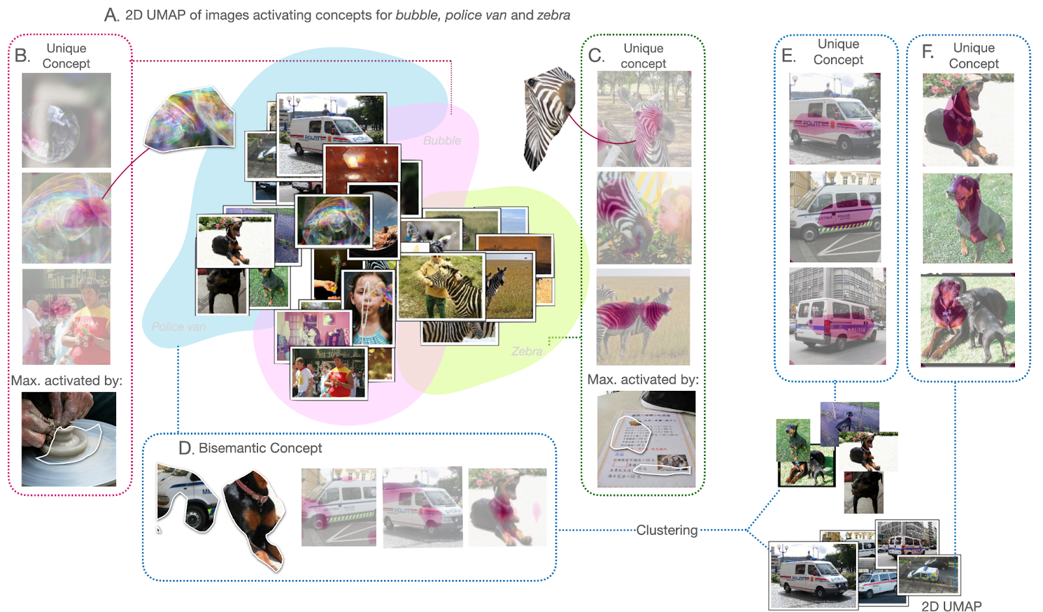

By applying our method to vision models such as Inception v3, we were able to automatically identify high-level concepts associated with natural image categories. Interestingly, these concepts confirm existing findings in interpretability research on vision models. For images of the class zebra, we identified the black and white striped patterns that are typical of zebra coats. Similarly, we identified graphical features and tires associated with police vans. Concepts for the class bubble focus on glossy reflections and round shapes, and emerge at different scales. Some examples of the identified concepts are shown in the accompanying image.

What further work are you planning in this area?

Providing reliable and intuitive explanations for deep learning models is a major challenge in high-risk applications. With models reaching superhuman performance on tasks that humans cannot solve, the automatic discovery of what they learn internally is a promising research direction that could help uncover new hypotheses for scientific discovery. Particularly in fields such as healthcare, biology and the chemical sciences, this method could foster the identification of novel patterns. This will be the focus of our future work in this area.

Read the research in full

Uncovering Unique Concept Vectors through Latent Space Decomposition, Mara Graziani, Laura O’Mahony, An-Phi Nguyen, Henning Müller, Vincent Andrearczyk

About Mara

Mara Graziani, PhD, is a postdoctoral researcher at IBM Research Europe and at HES-SO Valais. She holds a PhD in Computer Science from the University of Geneva and an MPhil in Machine Learning from the University of Cambridge (UK). Her research focuses on developing interpretable deep learning methods to support and accelerate scientific discovery. Her dissertation was awarded the IEEE Technical Committee on Computational Life Sciences PhD Thesis Award in 2021.