ΑΙhub.org

Interview with Christopher Chandler: closed-loop robot reactive planning

In their paper Model Checking for Closed-Loop Robot Reactive Planning, Christopher Chandler, Bernd Porr, Alice Miller and Giulia Lafratta show how model checking can be used to create multi-step plans for a differential drive wheeled robot so that it can avoid immediate danger. In this interview, Christopher tells us about model checking and how it is used in the context of autonomous robotic systems. We also find out more about the team’s approach and the implementation of their method.

What is the topic of the research in your paper and why is it an interesting area for study?

Two major barriers for widespread adoption of autonomous driving are safety and public trust. While trust is notoriously difficult to define, it is generally accepted that transparency is essential for human interactions with safety-critical autonomous systems. In the context of autonomous driving, feedback on the reasons for vehicle actions can help end-users calibrate trust to the capabilities of the vehicle and promote appropriate reliance on automated driving assistance systems. Transparency in the form of explanations is also important for external structural assurance of autonomous driving technologies. Developers, technicians, and regulators all have transparency needs in this context but at different levels of granularity to promote governance and appropriate oversight. For trustworthy decision-making in autonomous driving, methods should be transparent in principle to build trust into end-user interactions with autonomous vehicles and the supporting autonomous driving ecosystem.

At present, the state-of-the-art in motion planning for autonomous driving is dominated by black box end-to-end learning systems which we argue is inappropriate for safe vehicle reasoning. These models are either trained offline on curated datasets or online using simulated data, so while predictions can be fast, out of sample inputs can lead to risky or unexpected behaviours. There are transparent planning methods which do provide guarantees of safety, such as control barrier functions, however these often focus on optimal trajectory generation which is a non-trivial task requiring significant computational resources for timely vehicle actions and in general drives up the vehicle operating cost. We therefore focus on vehicle decision-making, which is transparent, safe, reliable and computationally efficient, using a resource-constrained robotic platform as an initial test case for experimentation.

What is model checking and how is this technique generally used in autonomous robotic systems?

Model checking is a widely used technique for automatically verifying reactive systems. It is based on a precise mathematical and unambiguous model of possible system behaviour. To verify that the model meets specified requirements (usually expressed in temporal logic), all possible executions are checked systematically. If an execution failing the specification is found, the execution path which caused the violation is returned. Model checking has previously been successfully applied to a variety of different systems. It has been used to ensure the safety and reliability of safety-critical systems like flight control, space-craft controllers, and satellite positioning systems. It has also been successfully applied to many aspects of software verification, e.g., for industrial systems and operating systems.

In autonomous robotic systems, model checking has generally been used for static and runtime verification and has been proposed for controller strategy synthesis. Static verification is an offline technique for the analysis of systems which typically involves constructing a model of the robotic agent and its environment to verify that the system model satisfies desired properties, while runtime verification is an online technique for monitoring the actual execution of a system with respect to a desired specification. Controller synthesis is related to static verification, except that rather than simply verifying that the system satisfies a set of properties, a control strategy satisfying a given specification is derived for the system and subsequently returned to the robot for actual execution.

Could you talk about your approach?

Our approach reflects the response of simple biological systems (or agents) which can safely navigate through an unseen environment responding in real-time to sensory inputs. On sensing an unexpected input (e.g., an obstacle) the agent responds by performing an action to change its state. This action takes the form of a motor output which results in a change to the environment, which is in turn sensed by the agent and the loop repeats. Behaviour is egocentric and reactive—the agent is only concerned with its immediate environment and only deviates from its course (or resting state) when necessary.

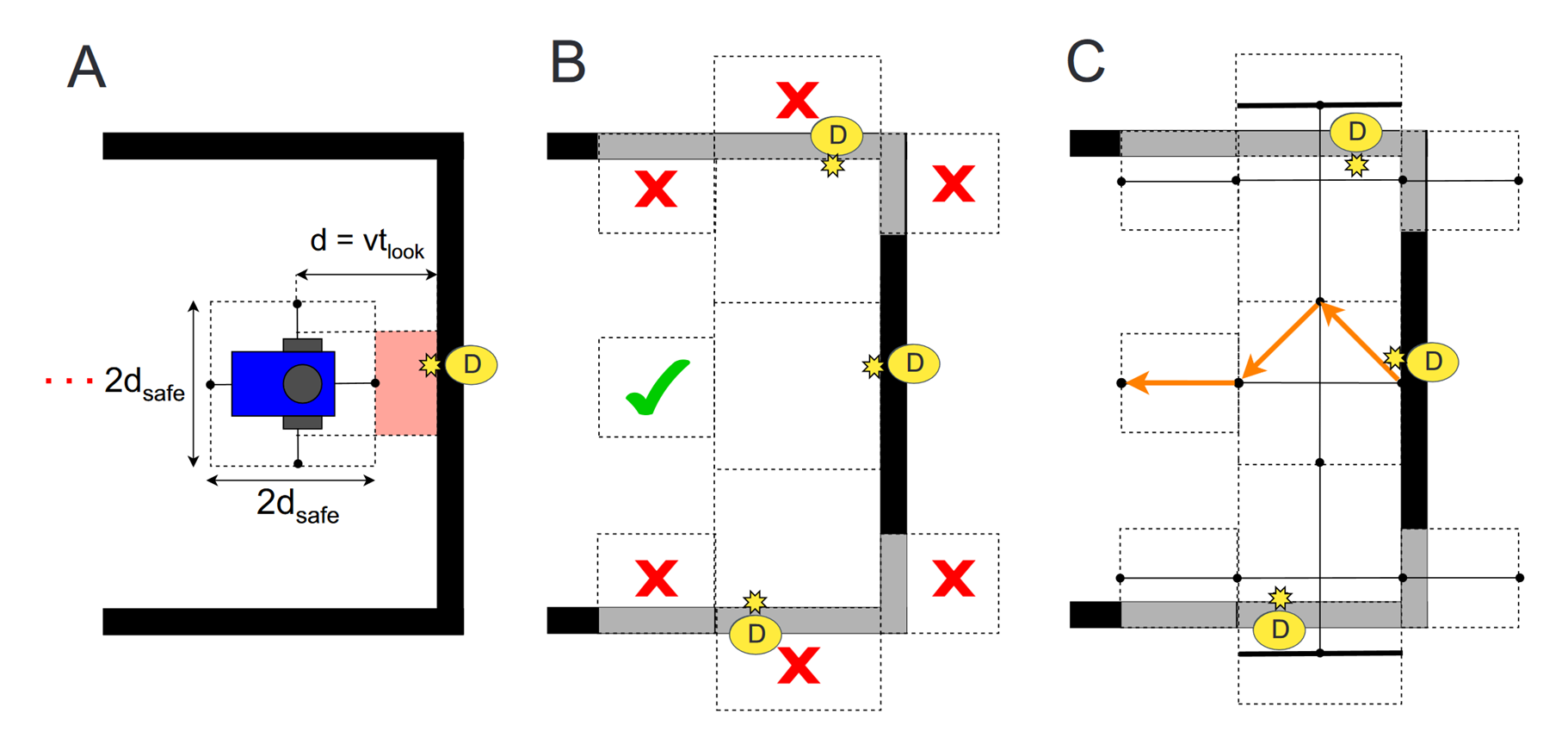

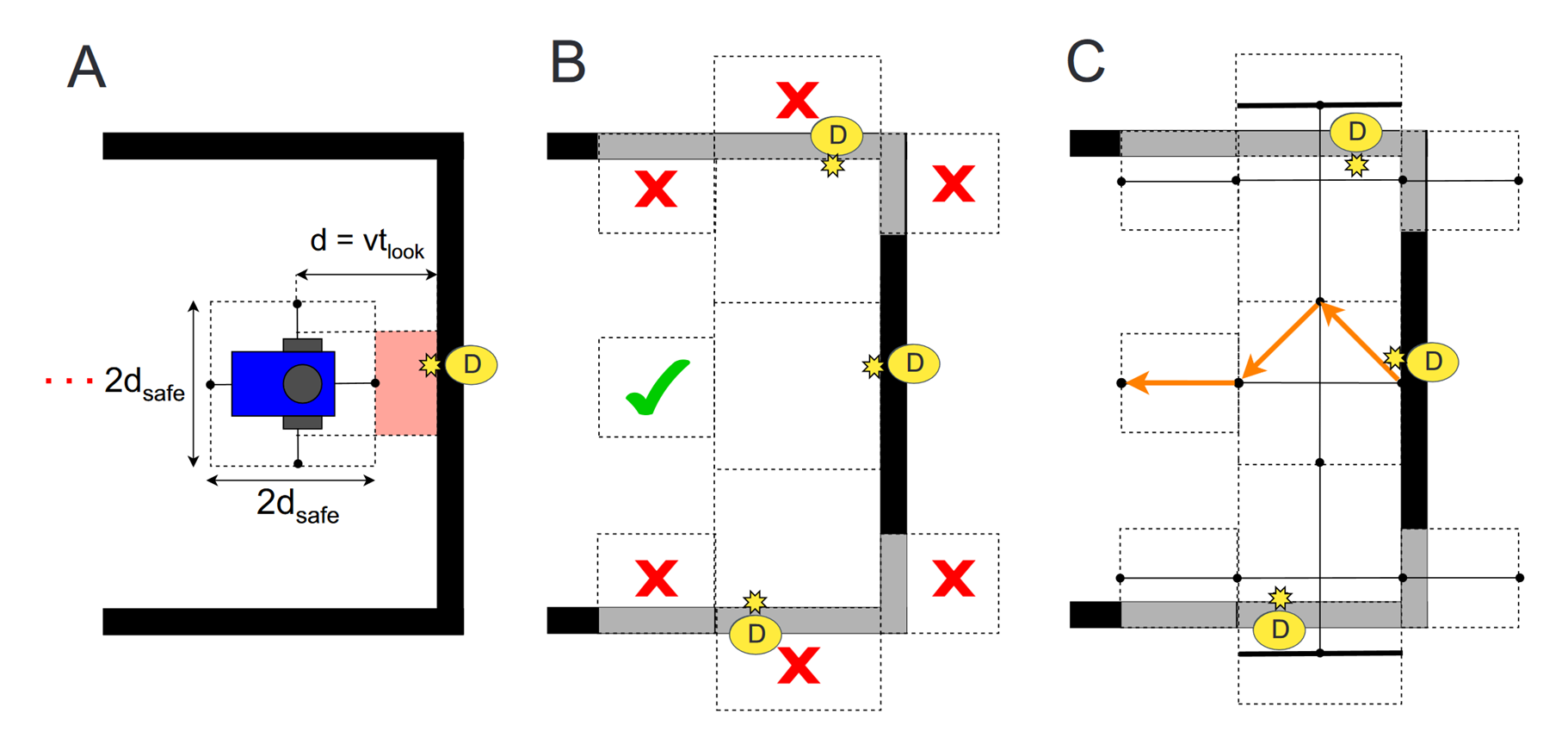

Example of planning sequence for a cul-de-sac scenario.

Example of planning sequence for a cul-de-sac scenario.

We use trees of temporary control systems (i.e., motion primitives) which have an attentional focus on disturbances encountered in the environment and self-destruct when that focus is gone, so we don’t focus on optimal trajectories which keeps the method computationally lightweight. Any trajectory which successfully counteracts disturbances in the environment is considered valid. Model checking is used to generate a chain of control systems for local obstacle avoidance in real-time. To guarantee safety, we define an egocentric safe zone around the robot which is always respected, and transparency is ensured by discrete decisions with clear success and failure criteria for control tasks.

How did you go about implementing the method, and what were your main findings?

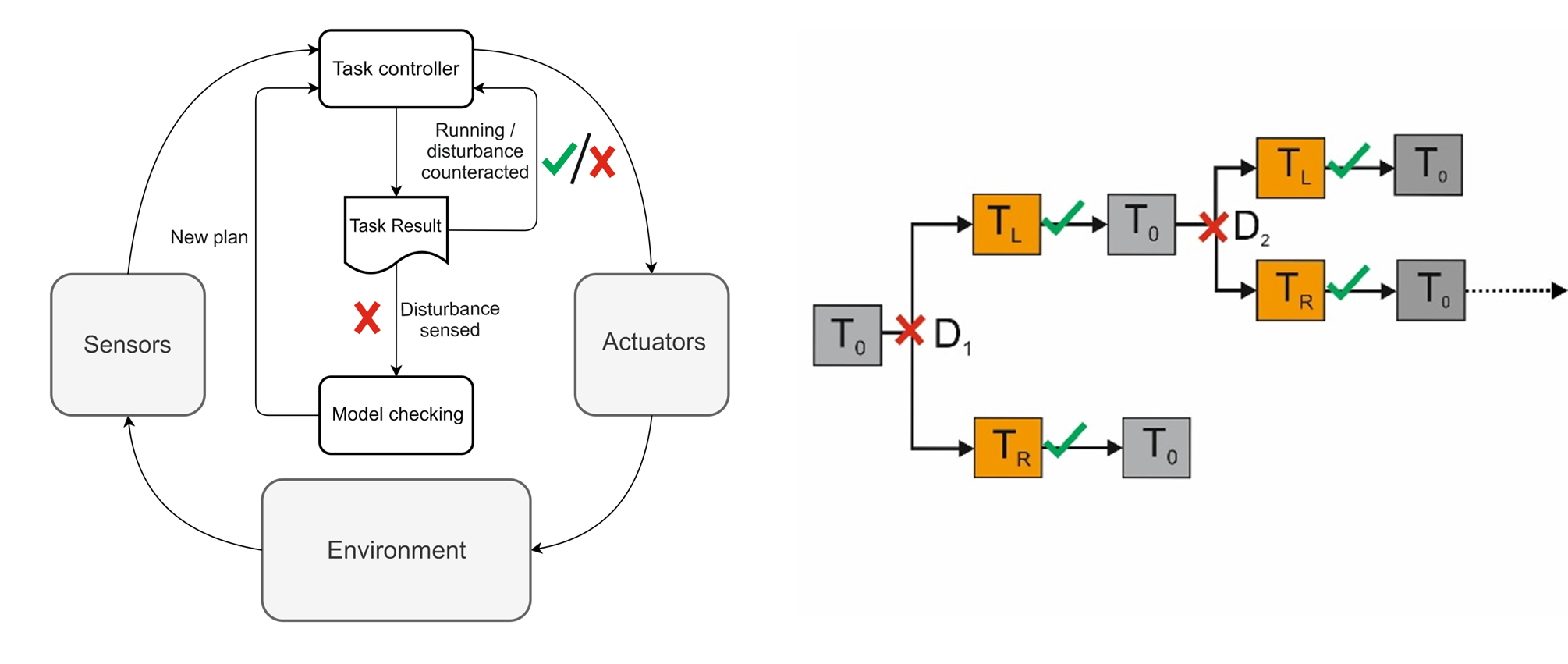

We implemented our method on an autonomous robotic agent using a closed-loop architecture, designed to reflect a reasoning tree for all possible chains of temporary control systems. Each iteration of the control loop, a task result is returned indicating whether the current control task is successful or has failed. For example, if the robot is in its resting state (driving in a straight line) and infers that it will encounter a disturbance on its present course, the task fails and planning via model checking is initiated. The plan is a path in the tree of possible temporary control system chains and the chain is a strategy for solving the local obstacle avoidance problem based on immediate sensor data. We used minimal sensor data pre-processing and kept all decision-making algorithms linear to ensure a processing latency less than 100 milliseconds for better than human performance in real scenarios. While our results in the paper are preliminary, they indicate that our method can generate more efficient trajectories compared to basic reactive control within a processing latency of less than 11 milliseconds.

Architecture of system reflecting the reasoning tree of temporary control systems with clear success and failure criteria for tasks.

What are the implications of your approach?

Our approach demonstrates that it is possible to use model checking for hard decisions in real-time using no pre-computed data. While in its early stages, one benefit of our method is that decision-making is fast and based on up-to-date information from the surrounding environment, so actions are responsive to imminent disturbances and predictable. As mentioned, this is crucial for reliability in high-speed driving environments to avoid crashes with other vehicles. Moreover, as the method uses clear success and failure criteria for tasks it is transparent and explainable in principle to various stakeholders. While our method has limitations, we believe our approach shows promise as an avenue for the development of trustworthy decision-making in the context of autonomous driving.

Do you have plans to further develop this work?

The major limitation of our approach in the paper is that it is not target driven. One of the most complicated tasks for autonomous driving is overtaking, so our next focus will be on overtaking manoeuvres, the focus of the original proof-of-concept which motivated this work. A member of our research group has already successfully achieved this using a different approach involving physics modelling on the gaming engine Box2D and the A* algorithm, also implemented in real-time on an autonomous agent. Extension of work in the paper will take a different approach using abstraction of sensor data. The next step will then likely be to incorporate uncertainty to handle stochasticity in the environment.

About Christopher

|

Christopher Chandler is a PhD candidate affiliated with the UKRI Centre for Doctoral Training in Socially Intelligent Artificial Intelligence at the University of Glasgow. Background is in data science and model checking with a research focus is on trustworthy reasoning for autonomous driving. |

Read the research in full

Model Checking for Closed-Loop Robot Reactive Planning, Christopher Chandler, Bernd Porr, Alice Miller, Giulia Lafratta.