AIhub.org

The machine learning victories at the 2024 Nobel Prize Awards and how to explain them

Image credit: Osama Shukir Muhammed Amin FRCP(Glasg). CC BY-SA 4.0.

By Anna Demming

Machine learning using apparently different architectures bagged the 2024 Nobel Prizes for Physics and Chemistry. Anna Demming reports on what the prizes were awarded for and how finding connections between the two approaches to machine learning may help towards explaining how “black box” algorithms reach their conclusions.

Few saw it coming when, on 8th October 2024, the Nobel Committee awarded the 2024 Nobel Prize for Physics to John Hopfield for his Hopfield networks and Geoffrey Hinton for his Boltzmann machines, seminal developments towards machine learning that have statistical physics at their heart. The next day machine learning, albeit using a different architecture, bagged half of the Nobel Prize for Chemistry as well, with the award going to Demis Hassabis and John Jumper for the development of an algorithm that predicts protein folding conformations. The other half of the Chemistry Nobel was awarded to David Baker for successfully building new proteins.

While the AI takeover at this year’s Nobel announcements for Physics and Chemistry came as a surprise to most, there has been keen interest in how these apparently different approaches to machine learning might actually reduce to the same thing, revealing new ways of extracting some fundamental explainability from the generative AI algorithms that have so far been considered effectively “black boxes”. The “transformer architectures” behind the likes of ChatGPT and AlphaFold are incredibly powerful but offer little explanation as to how they reach their solutions, so people have resorted to querying the algorithms and adding to them in order to extract information that might offer some insights. “This is a much more conceptual understanding of what’s going on,” says Dmitry Krotov, now a researcher at IBM Research in Cambridge, Massachusetts, who, working alongside John Hopfield, took some of the first steps that help bring the two types of machine learning algorithm together.

Collective phenomena

Hopfield networks brought to neural networks some of the mathematical toolbox long applied to extract “collective phenomena” from vast numbers of essentially identical parts, such as atoms in a gas or atomic spins in magnetic materials. Although there may be too many particles to track each individually, properties like temperature and magnetisation can be extracted using statistical physics. Hopfield showed that, similarly, a useful phenomenon he described as “associative memory” could be constructed from large numbers of artificial neurons by defining an “energy” that describes the network of neurons and then seeking its minimum. The energy is determined by connections between neurons, which store information about patterns. Thus the network can retrieve the memorised patterns by minimising that energy, just as stable conformations of atomic spins might be found in a magnetic material [1]. Starting from a partial or distorted input, as the energy of the network is minimised the pattern gets closer to the one that was memorised, just as when recalling a word or someone’s name we might first run through similar-sounding words or names.
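To make the idea of retrieval by energy minimisation concrete, here is a minimal sketch of a classic Hopfield network in plain Python. It assumes the standard textbook formulation (neurons taking values +1 or -1, Hebbian weights, one-neuron-at-a-time updates); the function names and the two toy patterns are illustrative choices, not taken from the article or from Hopfield's paper.

```python
def train_hebbian(patterns):
    """Build symmetric connection weights from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Network energy E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, max_sweeps=10):
    """Update one neuron at a time until no neuron wants to change."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two toy patterns (hypothetical data) stored in an 8-neuron network.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hebbian(stored)

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with one neuron flipped
recovered = recall(weights, noisy)

print(recovered == stored[0])  # the stored pattern is recovered
print(energy(weights, noisy), "->", energy(weights, recovered))
```

Each update can only lower the energy or leave it unchanged, which is why the state settles into a minimum rather than wandering indefinitely.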

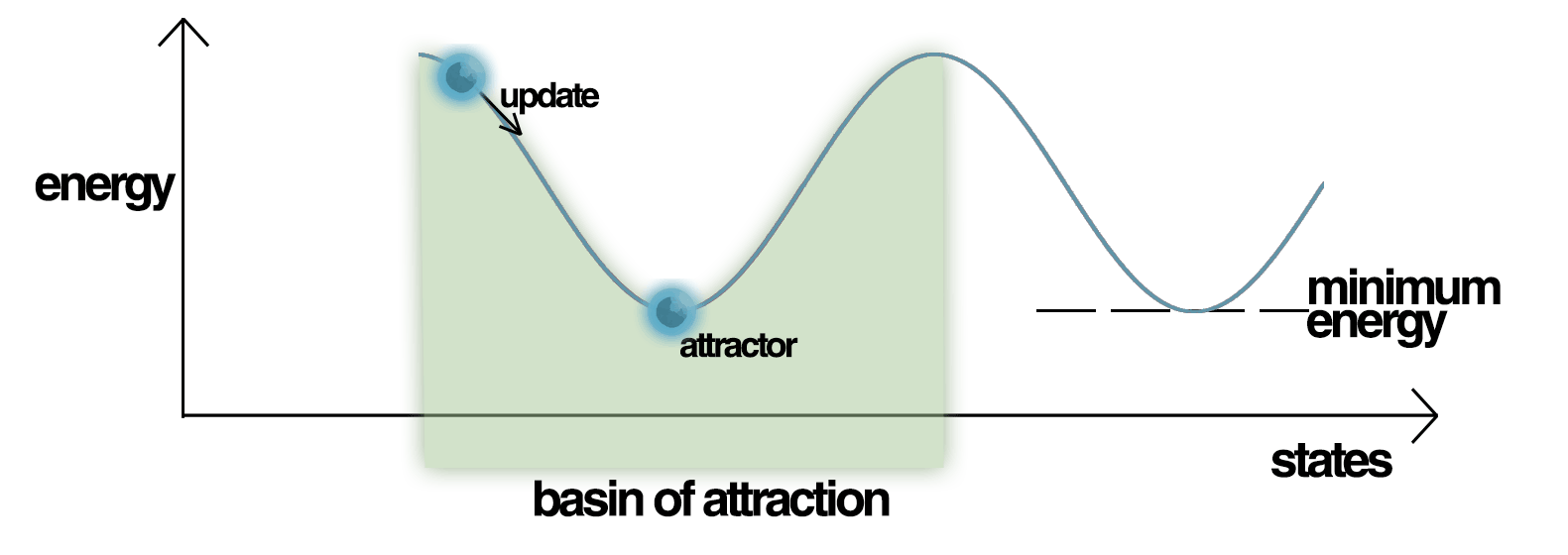

These Hopfield networks proved a seminal step in progressing AI algorithms, enabling a kind of pattern recognition from multiple stored patterns. However, it turned out that the number of patterns that could be stored was fundamentally limited due to what are known as “local” minima. You can imagine a ball rolling down a hill – it will reach the bottom of the hill fine so long as there are no dips for it to get stuck in en route. Algorithms based on Hopfield networks were prone to getting stuck in such dips or undesirable local minima, until Hopfield and Krotov put their heads together to find a way around it. Krotov describes himself as “incredibly lucky” that his research interests aligned so well with Hopfield’s. “He’s just such a smart and genuine person, and he has been in the field for many years,” he tells Real World Data Science. “He just knows things that no one else in the world knows.” Together they worked out they could address the problem of local minima by modifying the “activation function”.

Figure 2: Energy Landscape of a Hopfield Network, highlighting the current state of the network (up the hill), an attractor state to which it will eventually converge, a minimum energy level and a basin of attraction shaded in green. Note how the update of the Hopfield Network is always going down in Energy. Credit: Mrazvan22/wikimedia.

In a Hopfield network all the neurons are connected to all the other neurons. Originally, however, the algorithm only considered interactions between two neurons at a time, i.e. the interaction between neuron 1 and neuron 2, neuron 1 and neuron 3, and neuron 2 and neuron 3, but not the interactions among all three together. By including such “higher order” interactions between more than two neurons, Krotov and Hopfield found they made the basins of attraction for the true minimum energy states deeper. You can think of it a little like the ball rolling down a steeper hill so that it picks up more momentum along the slope of the main hill and is less prone to falling into little dips en route. This way Krotov and Hopfield increased the memory of Hopfield networks in what they called the Dense Associative Memory, which they described in 2016 [2]. Long before then, however, Geoffrey Hinton had found a different tack to follow to increase the power of this kind of neural network.
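The effect of higher-order interactions can be illustrated with a small sketch. It assumes the energy takes the form E = -Σ F(pattern · state) with F(x) = xⁿ, the polynomial form used in the Dense Associative Memory paper, where n = 2 corresponds to pairwise interactions and larger n couples more neurons at once. The function names and the three deliberately similar toy patterns are hypothetical, chosen so that the pairwise network cannot hold the first pattern while the n = 4 network can.

```python
def dense_update(patterns, state, i, power):
    """Return the value of neuron i (+1 or -1) that gives the lower energy.

    Energy: E = -sum over stored patterns of (pattern . state) ** power.
    """
    total = 0
    for p in patterns:
        rest = sum(p[j] * state[j] for j in range(len(state)) if j != i)
        # Energy advantage of setting neuron i to +1 rather than -1.
        total += (rest + p[i]) ** power - (rest - p[i]) ** power
    return 1 if total >= 0 else -1


def dense_recall(patterns, state, power, max_sweeps=20):
    """Update neurons one at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            new_value = dense_update(patterns, state, i, power)
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Three strongly overlapping toy patterns (hypothetical data).
stored = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [-1, -1, 1, 1, 1, 1, 1, 1],
    [-1, 1, -1, 1, 1, 1, 1, 1],
]
noisy = [1, 1, 1, 1, 1, 1, 1, -1]  # first pattern with the last neuron flipped

# Pairwise interactions: even the exact stored pattern is not stable.
print(dense_recall(stored, stored[0], power=2) == stored[0])  # False

# Fourth-order interactions: the pattern is stable and recovered from noise.
print(dense_recall(stored, stored[0], power=4) == stored[0])  # True
print(dense_recall(stored, noisy, power=4) == stored[0])      # True
```

Raising the power makes the best-matching pattern dominate the sum, which is one way to picture the deeper basins of attraction described above.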

Generative AI

Geoffrey Hinton showed that by defining some neurons as a hidden layer and some as a visible layer (a Boltzmann machine [3]) and limiting the connections so that neurons are only connected with neurons in other layers (a restricted Boltzmann machine [4]), finding the most likely configurations of the network would generate patterns with meaningful similarities to those it was trained on – a type of generative AI. This and many other contributions by Geoffrey Hinton also proved incredibly useful in the progress of machine learning. However, the generative AI algorithms grabbing headlines today have actually been devised using a “transformer” architecture, which differs from Hopfield networks and Boltzmann machines, or so it seemed initially.

Transformer algorithms first emerged as a type of language model and were defined by a characteristic termed “attention”. “They say that each word represents a token, and essentially the task of attention is to learn long-range correlations between those tokens,” Krotov explains using the word “bank” as an example. Whether the word means the edge of a river or a financial institution can only be ascertained from the context in which it appears. “You learn these long-range correlations, and that allows you to contextualize and understand the meaning of every word.” The approach was first reported in 2017 in a paper titled “Attention is all you need” [5] by researchers at Google Brain and Google Research.
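The “bank” example can be sketched with a stripped-down version of attention. This is a toy under stated assumptions: the two-dimensional word vectors are invented for illustration, and the queries, keys and values are the raw vectors themselves, whereas a real transformer first passes them through learned projections and uses many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Contextualise each token vector as a weighted mix of all tokens.

    Simplification: queries, keys and values are the raw vectors; a real
    transformer would apply three learned linear projections first.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, vectors))
                        for c in range(dim)])
    return outputs


# Made-up embeddings: axis 0 ~ "water-related", axis 1 ~ "finance-related".
embedding = {"river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}

bank_by_river = self_attention([embedding["river"], embedding["bank"]])[1]
bank_by_money = self_attention([embedding["money"], embedding["bank"]])[1]

print(bank_by_river)  # leans towards the "water" axis
print(bank_by_money)  # leans towards the "finance" axis
```

The same ambiguous vector for “bank” ends up in a different place depending on its neighbours, which is the contextualisation Krotov describes.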

It was not long before people figured out that the approach would enable powerful algorithms for tasks beyond language manipulation. Among them were Demis Hassabis and John Jumper at DeepMind, who were working on an algorithm that could predict the folding conformations of proteins. The algorithm they landed on in 2020 – AlphaFold2 – was capable of protein conformation prediction with 90% accuracy, way ahead of any other algorithm at the time, including DeepMind’s previous attempt, AlphaFold, which, although streaks ahead of the field when it was developed in 2018, still only achieved an accuracy of 60%. It was for the extraordinary predictive powers for protein conformations achieved by AlphaFold2 that Hassabis and Jumper were awarded half the 2024 Nobel Prize for Chemistry.

Connecting the dots

Transformer architectures are undoubtedly hugely powerful, but how they operate can seem something of a dark art: although computer scientists know how they are programmed, even they cannot tell how they reach their conclusions in operation. Instead they query the algorithm and add to it to try to get some pointers as to what the trail of logic might have been. Here Hopfield networks have an advantage because people can hope to get a grasp on what energy minima they are converging to, and that way get a handle on their working out. However, in their paper “Hopfield networks is all you need” [6], researchers in Austria and Norway showed that the activation function, which Hopfield and Krotov had modified to make Hopfield networks store more memories, can also link them to transformer architectures – essentially, if the function is exponential, the two can reduce to the same thing.
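A short sketch may help show why an exponential function leads to attention. It assumes the continuous-state retrieval rule from the “Hopfield networks is all you need” paper, in which the new state is a softmax-weighted average of the stored patterns. The function names, the toy patterns and the value of `beta` (a sharpness parameter) are illustrative assumptions.

```python
import math


def softmax(scores):
    """Exponentiate and normalise: this is where the exponential enters."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hopfield_update(stored, state, beta):
    """One retrieval step: a softmax-weighted average of the stored patterns.

    Read as attention: `state` is the query, and the stored patterns act
    as both keys and values. With beta = 1 / sqrt(dimension) this has the
    same form as scaled dot-product attention without learned projections.
    """
    scores = [beta * sum(p * s for p, s in zip(pattern, state))
              for pattern in stored]
    weights = softmax(scores)
    new_state = [sum(w * pattern[c] for w, pattern in zip(weights, stored))
                 for c in range(len(state))]
    return new_state, weights


# Two toy memories and a corrupted version of the first (hypothetical data).
stored = [[1.0, 1.0, -1.0, -1.0], [-1.0, 1.0, 1.0, -1.0]]
query = [1.0, 1.0, -1.0, 0.2]

retrieved, weights = hopfield_update(stored, query, beta=2.0)
print(weights)    # almost all weight on the first memory
print(retrieved)  # close to the first stored pattern after a single step
```

In this picture each stored pattern is a memory pulling the query towards itself, and the attention weights say how strongly each one pulls.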

“We think about attention as learning long-range correlations, and this dense associative memory interpretation of attention tells you that each word creates a basin of attraction,” Krotov explains. “Essentially, the contextualization of the unknown word happens through the attraction to these different memories,” he adds. “That kind of lens of thinking about transformers through the prism of energy landscapes – it’s opened up this whole new world where you can think about what transformers are doing computationally, and how they perform that computation.”

“I think it’s great that the power of these tools is being recognised for the impact that they can have in accelerating innovation in new ways,” says Janet Bastiman, RSS Data Science and AI Section Chair and Chief Data Scientist at financial crimes compliance solutions company Napier AI, as she comments on the Nobel Prize awards. Bastiman’s most recent work has been on adding explanation to networks. She notes how the paper “Hopfield networks is all you need” highlights “the difference that layers can have on the final outcomes for specific tasks and a clear need for understanding some of the principles of the layers of networks in order to validate results and be aware of potential difficulties and ‘best’ scenarios for different use cases.”

Krotov also points out that since Hopfield networks are rooted in neurobiological interpretations, it helps to find “neurobiological ways of interpreting their computation” for transformer algorithms too. As such the vein Hopfield and Hinton tapped into with their seminal advances is proving ever richer in what Krotov describes as “the emerging field of the physics of neural computation”.

References

1. Hopfield J J Neural networks and physical systems with emergent collective computational abilities PNAS 79 2554-2558 (1982)

2. Krotov D and Hopfield J J Dense Associative Memory for Pattern Recognition NeurIPS (2016)

3. Ackley D H, Hinton G E and Sejnowski T J A learning algorithm for Boltzmann machines Cognitive Science 9 147-169 (1985)

4. Salakhutdinov R, Mnih A and Hinton G Restricted Boltzmann machines for collaborative filtering ICML ’07: Proceedings of the 24th International Conference on Machine Learning 791-798 (2007)

5. Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, Kaiser Ł and Polosukhin I Attention is all you need NeurIPS (2017)

6. Ramsauer H, Schäfl B, Lehner J, Seidl P, Widrich M, Adler T, Gruber L, Holzleitner M, Pavlović M, Sandve G K, Greiff V, Kreil D, Kopp M, Klambauer G, Brandstetter J and Hochreiter S Hopfield Networks is All You Need arXiv (2020)

About the author

Anna Demming is a freelance science writer and editor based in Bristol, UK. She has a PhD from King’s College London in physics, specifically nanophotonics and how light interacts with the very small, and has been an editor for Nature Publishing Group (now Springer Nature), IOP Publishing and New Scientist. Other publications she contributes to include The Observer, New Scientist, Scientific American, Physics World and Chemistry World.

This article is republished under a Creative Commons Attribution 4.0 (CC BY 4.0) International licence.