AIhub.org

How to benefit from AI without losing your human self – a fireside chat from IEEE Computational Intelligence Society

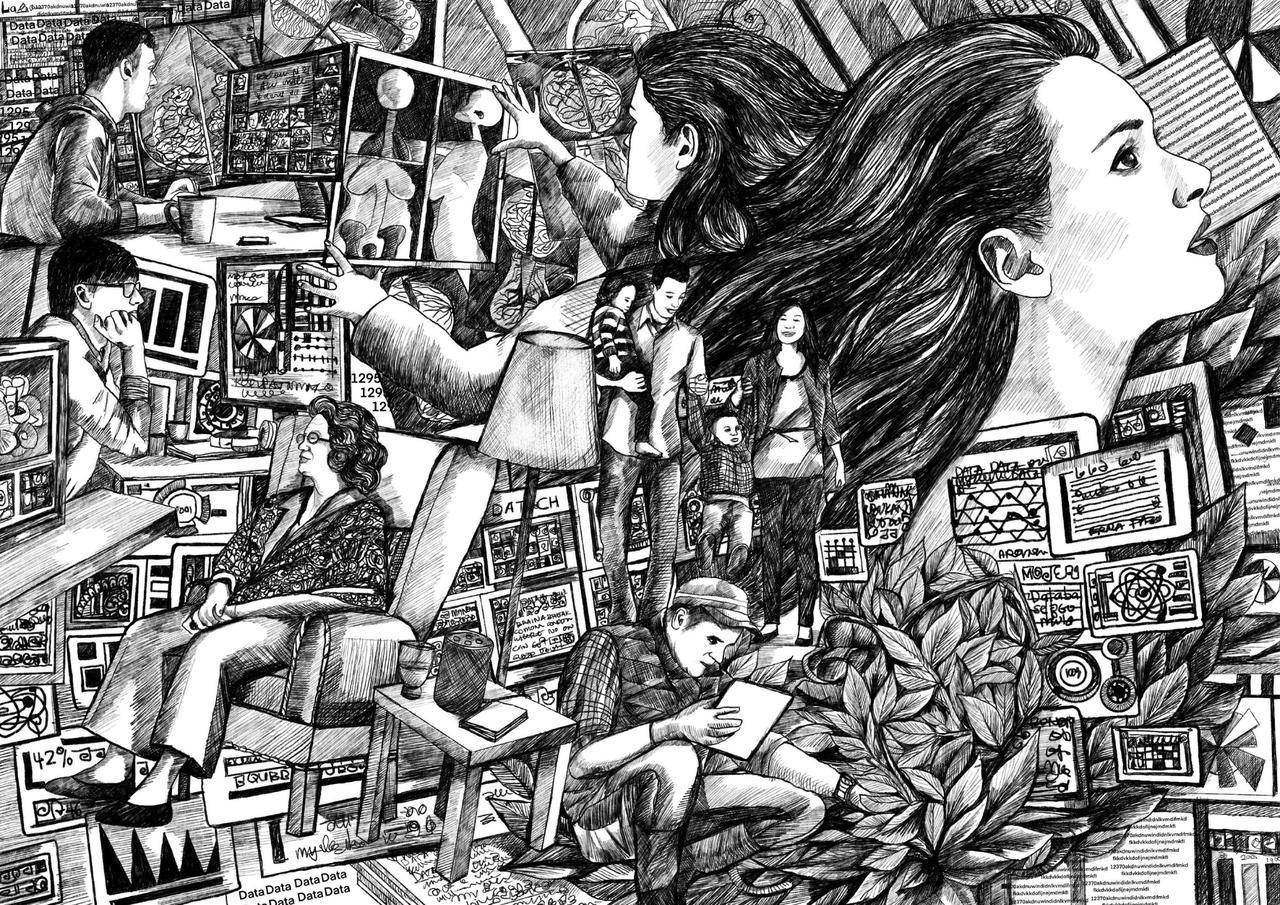

Ariyana Ahmad & The Bigger Picture / Better Images of AI / AI is Everywhere / Licenced by CC-BY 4.0

In this fireside chat from IEEE Computational Intelligence Society, Tayo Obafemi-Ajayi (Missouri State University) asks Hava T. Siegelmann (University of Massachusetts, Amherst) about how to benefit from AI without losing your human self.

You can watch the chat in full below:

IEEE Computational Intelligence Society
