AIhub.org

Half of UK novelists believe AI is likely to replace their work entirely

By Fred Lewsey

A new report involving hundreds of literary creatives from across the UK fiction publishing industry reveals widespread fears over copyright violation, lost income, and the future of the art form, as generative AI tools and LLM-authored books flood the market.

Just over half (51%) of published novelists in the UK say that artificial intelligence is likely to end up entirely replacing their work as fiction writers, a new report from the University of Cambridge has found.

Nearly three in five (59%) novelists say they know their work has been used to train AI large language models (LLMs) without permission or payment.

Over a third (39%) of novelists say their income has already taken a hit from generative AI, for example through the loss of other work that makes novel writing financially possible. Most novelists (85%) expect their future income to be driven down by AI.

In new research for Cambridge’s Minderoo Centre for Technology and Democracy (MCTD), Dr Clementine Collett surveyed 258 published novelists earlier this year, as well as 74 industry insiders – from commissioning editors to literary agents – to gauge how AI is viewed and used in the world of British fiction.*

Genre authors are considered the most vulnerable to displacement by AI, according to the report, with two-thirds (66%) of all those surveyed listing romance authors as “extremely threatened”, followed closely by writers of thrillers (61%) and crime (60%).

Despite this, overall sentiment in UK fiction is not anti-AI, with 80% of respondents agreeing that AI offers benefits to parts of society. In fact, a third of novelists (33%) use AI in their writing process, mainly for “non-creative” tasks such as information search.

However, the report outlines profound concerns from the cornerstone of a publishing industry that contributes £11bn a year to the UK economy and exports more books than any other country in the world.

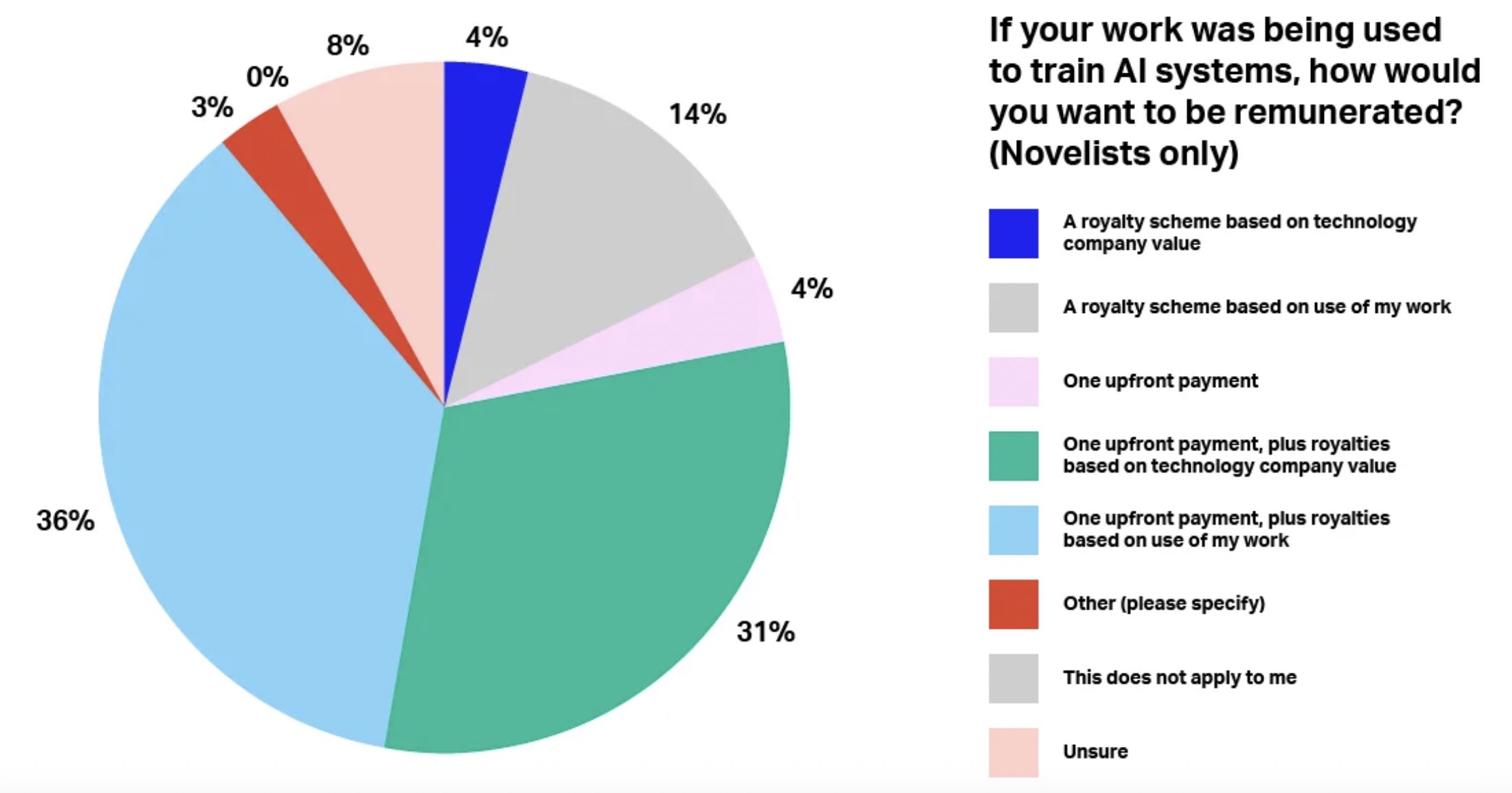

Literary creatives feel that copyright laws have not been respected or enforced since the emergence of generative AI. They call for informed consent and fair remuneration for the use of their work, along with transparency from big tech companies and support from the UK government in securing it.

Many warn of a potential loss of originality in fiction, as well as a fraying of trust between writers and readers if AI use is not disclosed. Some novelists worry that suspicions of AI use could damage their reputation.

“There is widespread concern from novelists that generative AI trained on vast amounts of fiction will undermine the value of writing and compete with human novelists,” said Dr Clementine Collett, BRAID UK Research Fellow at Cambridge’s MCTD and author of the report, published in partnership with the Institute for the Future of Work.

“Many novelists felt uncertain there will be an appetite for complex, long-form writing in years to come.”

“Novels contribute more than we can imagine to our society, culture, and to the lives of individuals. Novels are a core part of the creative industries, and the basis for countless films, television shows, and videogames,” said Collett.

Tech companies have the fiction market firmly in their sights. Generative AI tools such as Sudowrite and Novelcrafter can be used to brainstorm and edit novels, while Qyx AI Book Creator or Squibler can be used to draft full-length books. Platforms such as Spines use AI to assist with publishing processes from cover designs to distribution.

“The brutal irony is that the generative AI tools affecting novelists are likely trained on millions of pirated novels scraped from shadow libraries without the consent or remuneration of authors,” said Collett.

“The novel is a precious and vital form of creativity that is worth fighting for”

– Dr Clementine Collett

Along with surveying a total of 332 literary creatives, who participated on condition of anonymity, Collett conducted focus groups and interviews around the country, and convened a forum in Cambridge with novelists and publishers.**

Many novelists reported lost income due to AI. Some feel the market is increasingly flooded with AI-generated books, with which they are forced to compete. Others say they have found books under their name on Amazon that they haven’t written.

Some novelists also spoke of online reviews with telltale signs of AI, such as jumbled names and characters, that give their books bad ratings and jeopardise future sales.

“Most authors do not earn enough from novels alone and rely on income streams such as freelance copywriting or translation which are rapidly drying up due to generative AI,” said Collett.***

Some literary creatives envisioned a dystopian two-tier market emerging, in which the human-written novel becomes a “luxury item” while mass-produced AI-generated fiction is cheap or free.

When it came to working practices, some participants considered AI valuable for speeding up repetitive or routine tasks, but saw little to no role for it in the creative work itself.

Almost all novelists (97%) were “extremely negative” about AI writing whole novels, and most (87%) were extremely negative about it writing even short sections. The task novelists felt least negative about was using AI to source general facts or information (30% extremely negative), and around 20% of novelists said they use AI for this purpose.

Around 8% of novelists said they use AI for editing text written without AI. However, many find editing to be a deeply creative process, and would never want AI involved. Almost half (43%) of novelists felt “extremely negative” about using AI for editing text.

Forum participant Kevin Duffy, founder of Bluemoose Books, the publisher behind novels such as The Gallows Pole and Leonard and Hungry Paul – both now major BBC TV dramas – is on record in the report saying:

“[W]e are an AI free publisher, and we will have a stamp on the cover. And then up to the public to decide whether they want to buy that book or not. But let’s tell the public what AI is doing.” Many respondents echoed this sentiment.

The research found widespread backlash against a “rights reservation” copyright model as proposed by the UK government last year, which would let AI firms mine text unless authors explicitly opted out.

Some 83% of all respondents say this would be negative for the publishing industry, and 93% of novelists said they would ‘probably’ or ‘definitely’ opt out of their work being used to train AI models if an opt-out model were implemented.

The vast majority (86%) of all literary creatives preferred an ‘opt-in’ principle: rights-holders grant permission before AI scrapes any work and are paid accordingly. The most popular option was for AI licensing to be handled collectively by an industry body, such as a writers’ union or society, with nearly half of novelists (48%) selecting this approach.

“Our creative industries are not expendable collateral damage in the race to develop AI. They are national treasures worth defending. This report shows us how,” said Prof Gina Neff, Executive Director of the Minderoo Centre for Technology and Democracy.

Some novelists worry AI will disrupt the “magic” of the creative process. Stephen May, writer of acclaimed historical novels such as Sell Us the Rope, expressed anxiety over AI taking the required “friction” and “pain” out of a first draft, diminishing the final product.

“Novelists, publishers, and agents alike said the core purpose of the novel is to explore and convey human complexity,” said Collett. “Many spoke about increased use of AI putting this at risk, as AI cannot understand what it means to be human.”

Authors fear AI may weaken the deep human connection between writers and readers at a time when reading is already at historically low levels, particularly among the next generation: only a third of UK children say they enjoy reading in their free time.

Many novelists want to see more AI-free creative writing on the school curriculum, as well as government-backed initiatives to find new voices from underrepresented groups, to counter the risk of “homogeneity” in fiction brought about by generative AI.

The research reveals a sector-wide belief that AI could lead to ever blander, more formulaic fiction that exacerbates stereotypes, as the models regurgitate centuries of existing text.

Some suggest the AI era may see a boom in “experimental” fiction as writers work to prove they are human and to push their artistry further than AI can.

“Novelists are clearly calling for policy and regulation that forces AI companies to be transparent about training data, as this would help with the enforcement of copyright law,” added Collett.

“Copyright law must continue to be reviewed and might need reform to further protect creatives. It is only fair that writers are asked permission and paid for use of their work.”

In response to the findings, Tracy Chevalier, best-selling novelist and author of Girl with a Pearl Earring and The Glassmaker, said:

“I worry that a book industry driven mainly by profit will be tempted to use AI more and more to generate books. If it is cheaper to produce novels using AI (no advance or royalties to pay to authors, quicker production, retainment of copyright), publishers will almost inevitably choose to publish them. And if they are priced cheaper than ‘human made’ books, readers are likely to buy them, the way we buy machine-made jumpers rather than the more expensive hand-knitted ones.

“Is this inevitable, though? The use of AI isn’t just the responsibility of authors, it’s also crucial that publishers and readers understand their role in this. It starts with all of us understanding and cherishing the intrinsic value of work that is human made – the hand-knitted jumper, the novel written by a human – and making choices that are not only driven by making or saving money.”

* Of the published novelists involved in the survey, 90% are published “traditionally” through publishing houses, while 10% are self-published.

** This consisted of 32 literary agents for fiction (9%), 258 published novelists (78%), and 42 professionals who work in fiction publishing (13%). The survey took place between February and May 2025.

*** The median income for an author in the UK in 2022 was just £7,000, far lower than the minimum wage. [Thomas, A., Battisti, M., & Kretschmer, M. (2022). UK Authors’ Earnings and Contracts 2022.]

Full quote from Kevin Duffy as featured in the report: “For a small independent publisher of literary fiction like Bluemoose Books, our only stand is to say we don’t want any part of this, we are AI free, and we are an AI free publisher, and we will have a stamp on the cover. And then up to the public to decide whether they want to buy that book or not. But let’s tell the public what AI is doing. It’s got brilliant capacity to do fantastic things in other avenues, but for the creative industries and for literary fiction in particular, it is very limited.”