AIhub.org

A summary of the keynotes at AAMAS

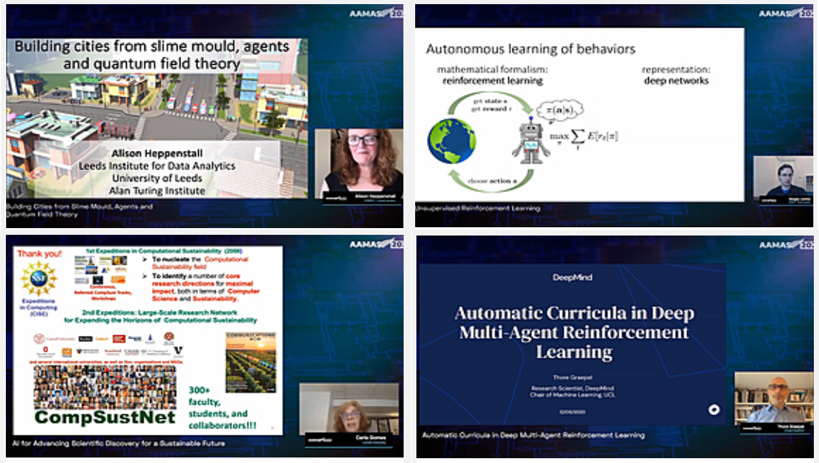

The International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS) was held virtually on 9-13 May. Videos of the talks are now publicly available, as are the sessions from the various workshops. In this post we summarise the four keynotes.

Building cities from slime mould, agents and quantum field theory

Alison Heppenstall, Leeds Institute for Data Analytics, University of Leeds, Alan Turing Institute

Alison is interested in how cities work. She builds spatial agent-based models (ABMs) to study how people move around and how their behaviour plays out in space and time. These models pose a number of challenges, and they need to be highly robust if policy makers are to adopt them.

So, why should we be interested in modelling cities? A large proportion of the world’s population lives in cities, and urbanisation is increasing rapidly. This fast change presents problems: ideally, expanding cities should be equitable, with all residents having access to energy, education, transport and healthcare. If researchers could create “digital twins” of cities to test the effects of changes to, for example, transport infrastructure, that would be a very attractive option for policy makers.

At the heart of this research is data. There are all kinds of data that researchers use in city modelling, including traditional datasets and those that are crowdsourced: maps, census data, mobile phone data, social media data, pollution records, to name but a few. The challenge is to bring it all together, and this is where machine learning techniques come in.

Towards real-time city simulation

Alison mentioned that there is a big push in the UK to create digital representations of our cities. If these models are to be effective and reliable, one of the big challenges is reducing the uncertainty in ABMs. For this, her team uses data assimilation, in which the model is continually refined using real-time data. Creating a picture of an entire city is incredibly complex, so the team began by modelling a crowd in a train station. This article describes the team’s methodology.
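Data assimilation can be implemented in several ways, and the talk summary does not say which variant the team uses. As a minimal sketch, assuming a bootstrap particle filter (one common choice for ABMs), the code below tracks a single hypothetical pedestrian walking along a corridor. Each particle is one copy of the model state; at every step the particles are moved forward, weighted by how well they match a noisy observation, and resampled. The pedestrian, speeds and noise levels are illustrative assumptions, not the team's train-station model.

```python
import math
import random
from typing import List, Tuple

# A particle is one guess at the hidden state of a hypothetical pedestrian: (position, speed).
Particle = Tuple[float, float]


def step(p: Particle, rng: random.Random, noise: float = 0.1) -> Particle:
    """Forecast: move one particle forward one time step, with process noise on its speed."""
    pos, speed = p
    speed = max(0.0, speed + rng.gauss(0.0, noise))
    return (pos + speed, speed)


def likelihood(p: Particle, observed_pos: float, obs_sd: float) -> float:
    """Unnormalised Gaussian likelihood of a noisy position observation given a particle."""
    z = (p[0] - observed_pos) / obs_sd
    return math.exp(-0.5 * z * z)


def assimilate(particles: List[Particle], observed_pos: float, obs_sd: float,
               rng: random.Random) -> List[Particle]:
    """One particle-filter cycle: forecast, weight by the observation, resample."""
    forecast = [step(p, rng) for p in particles]
    weights = [likelihood(p, observed_pos, obs_sd) for p in forecast]
    if sum(weights) == 0.0:
        # Every particle is wildly inconsistent with the data; keep the forecast unweighted.
        return forecast
    return rng.choices(forecast, weights=weights, k=len(forecast))


def mean_position(particles: List[Particle]) -> float:
    return sum(p[0] for p in particles) / len(particles)


def run_demo(steps: int = 20, n_particles: int = 500, obs_sd: float = 0.5,
             seed: int = 0) -> float:
    """Track a 'true' pedestrian walking at speed 1.0 and return the final position error."""
    rng = random.Random(seed)
    true_pos, true_speed = 0.0, 1.0
    # The model starts out unsure of the pedestrian's speed.
    particles = [(0.0, rng.uniform(0.0, 2.0)) for _ in range(n_particles)]
    for _ in range(steps):
        true_pos += true_speed
        observation = true_pos + rng.gauss(0.0, obs_sd)  # simulated noisy sensor reading
        particles = assimilate(particles, observation, obs_sd, rng)
    return abs(mean_position(particles) - true_pos)


print(f"final position error: {run_demo():.3f}")
```

Because the particles initially disagree about the pedestrian's speed, the model on its own would drift; each observation pulls the ensemble back towards reality, which is the core idea of refining a model with real-time data.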

Alison then moved on to quantum field theory, an area that is all about uncertainty, and described how her colleague Daniel Tang has been building it into ABMs. He used the concept of creation and annihilation operators and demonstrated the promise of this technique using a predator-prey model. The agents in this example all have discrete states, so the next phase of this work will be to move to continuous states. The approach also needs to be extended to more complex real-world examples.
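For readers who have not met creation and annihilation operators outside physics, here is the standard Doi-Peliti way of writing a stochastic predator-prey model with them. This is textbook background under assumed reactions (prey birth A → 2A at rate λ, predation A + B → 2B at rate β, predator death B → ∅ at rate μ); it is not necessarily the formulation Daniel Tang uses. Here m and n count prey and predators, and P(m, n, t) is the probability of that state.

```latex
\begin{aligned}
a^\dagger |m,n\rangle &= |m+1,n\rangle, & a\,|m,n\rangle &= m\,|m-1,n\rangle,\\
b^\dagger |m,n\rangle &= |m,n+1\rangle, & b\,|m,n\rangle &= n\,|m,n-1\rangle,\\
|\psi(t)\rangle &= \sum_{m,n} P(m,n,t)\,|m,n\rangle, & \frac{d}{dt}|\psi(t)\rangle &= H\,|\psi(t)\rangle,\\
H &= \lambda\,\bigl(a^{\dagger 2}a - a^\dagger a\bigr)
   + \beta\,\bigl(b^{\dagger 2}a\,b - a^\dagger a\,b^\dagger b\bigr)
   + \mu\,\bigl(b - b^\dagger b\bigr).
\end{aligned}
```

Each bracket pairs a term that performs a reaction (creating and annihilating individuals at a rate proportional to how many can take part) with a diagonal term that removes probability from the current state, so total probability is conserved. Applying H to |ψ⟩ and collecting coefficients of |m,n⟩ recovers the usual master equation.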

In terms of future work, the researchers are planning to use slime mould (Physarum polycephalum) as an experimental “lab” for testing their city models.

You can watch Alison’s talk here.

Unsupervised reinforcement learning

Sergey Levine, UC Berkeley

Sergey began by describing how we can formalise the problem of learning behaviours. This can be done by combining a mathematical formalism for decision making with a deep-learning representation of the system. The model consists of an environment and an agent: the agent interacts with the environment and makes decisions, and the environment responds by transitioning to new states and providing rewards. The agent’s goal is to find a policy that maximises the total expected sum of rewards, so it aims to maximise reward over the long run, not just at the current step. Marrying this mathematical formalism with deep learning works well in areas such as robotic manipulation, autonomous navigation and robot motion.
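To make "maximising reward over the long run" concrete, here is a small tabular sketch (no deep learning) of value iteration on a hypothetical three-state problem. The states, actions, rewards and the discount factor gamma are illustrative assumptions; the summary does not mention discounting, which is added here because it keeps values finite in general.

```python
from typing import Dict, Tuple

# Hypothetical deterministic toy problem: TRANSITIONS[state][action] = (next_state, reward).
TRANSITIONS: Dict[str, Dict[str, Tuple[str, float]]] = {
    "start": {"grab": ("done", 1.0), "wait": ("near", 0.0)},
    "near": {"collect": ("done", 10.0)},
    "done": {},  # terminal: no actions, no further reward
}


def q_value(values: Dict[str, float], outcome: Tuple[str, float], gamma: float) -> float:
    """Immediate reward plus the discounted value of the state the action leads to."""
    next_state, reward = outcome
    return reward + gamma * values[next_state]


def value_iteration(transitions, gamma: float = 0.9, sweeps: int = 50) -> Dict[str, float]:
    """Repeatedly apply the Bellman optimality backup until the values settle."""
    values = {s: 0.0 for s in transitions}
    for _ in range(sweeps):
        for s, actions in transitions.items():
            if actions:
                values[s] = max(q_value(values, out, gamma) for out in actions.values())
    return values


def greedy_policy(transitions, values: Dict[str, float], gamma: float = 0.9) -> Dict[str, str]:
    """In each non-terminal state, pick the action with the highest long-term value."""
    return {
        s: max(actions, key=lambda a: q_value(values, actions[a], gamma))
        for s, actions in transitions.items()
        if actions
    }


values = value_iteration(TRANSITIONS)
policy = greedy_policy(TRANSITIONS, values)
print(policy)  # {'start': 'wait', 'near': 'collect'}
```

An agent that maximised only its immediate reward would grab the 1.0; value iteration prefers to wait, because the later reward of 10.0 is still worth 9.0 after discounting.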

However, reinforcement learning is fundamentally a supervised learning paradigm, because a human chooses the reward. The question Sergey posed is: can we do unsupervised reinforcement learning? This means removing the hand-designed reward function and instead providing an objective that the agent can optimise on its own. In his talk Sergey used examples to show how to derive a principled objective for unsupervised reinforcement learning, how to derive unsupervised deep reinforcement learning algorithms, and how to turn those into unsupervised meta-reinforcement learning algorithms.

One of the topics that Sergey touched on was maintaining homeostasis in an agent. As an introduction, he cited the example of a cheetah. In real life, a cheetah that just sits around doing nothing will not stay in a stable equilibrium (as it would if it were modelled in a typical reinforcement learning set-up): it could starve, for example, or be chased by another animal. Essentially, agents in realistic environments don’t need to seek out surprise – surprising things happen on their own. The real challenge in these settings is maintaining homeostasis. Sergey and his team study this type of setting, training agents to maximise homeostasis and avoid unexpected situations.
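One way to turn "avoid unexpected situations" into an objective without a hand-designed reward is to reward the agent for reaching states that are likely under a model of the states it has already seen. The sketch below assumes a diagonal Gaussian density fitted online; the class and function names are hypothetical, and this illustrates the general idea rather than the code used by Sergey's team.

```python
import math
from typing import Sequence


class RunningGaussian:
    """Hypothetical density model: diagonal Gaussian fitted online (Welford's algorithm)."""

    def __init__(self, dim: int, min_var: float = 1e-3):
        self.n = 0
        self.mean = [0.0] * dim
        self.m2 = [0.0] * dim  # running sum of squared deviations per dimension
        self.min_var = min_var  # variance floor so the density never collapses to a spike

    def update(self, state: Sequence[float]) -> None:
        self.n += 1
        for i, x in enumerate(state):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (x - self.mean[i])

    def log_prob(self, state: Sequence[float]) -> float:
        total = 0.0
        for i, x in enumerate(state):
            var = max(self.m2[i] / self.n, self.min_var) if self.n > 0 else 1.0
            total += -0.5 * (math.log(2 * math.pi * var) + (x - self.mean[i]) ** 2 / var)
        return total


def surprise_minimising_reward(model: RunningGaussian, state: Sequence[float]) -> float:
    """Intrinsic reward: log-likelihood of the new state under the model of past states."""
    reward = model.log_prob(state)
    model.update(state)  # the new state becomes part of what counts as "familiar"
    return reward


model = RunningGaussian(dim=1)
for s in [0.0, 0.1, -0.1, 0.05]:
    model.update([s])
print(model.log_prob([0.0]) > model.log_prob([5.0]))  # True: familiar states score higher
```

An agent maximising this reward is pushed towards policies that keep its states predictable, such as the cheetah finding food rather than drifting into starvation.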

You can watch Sergey’s talk here.

AI for advancing scientific discovery for a sustainable future

Carla Gomes, Cornell University

Carla started by defining computational sustainability: an interdisciplinary field that seeks to develop computational methods for sustainable development. There are numerous sustainability challenges to which computational methods could be applied, and in her talk Carla described three of her team’s projects as examples.

Impact of hydropower dam placement in the Amazon basin on ecosystem services

Around 200 dams have already been built in the Amazon basin, and at least another 300 are planned or proposed. Although these dams are important for meeting energy needs, they can have a devastating effect on ecosystems. From a computational point of view this is a multi-objective optimisation problem. In particular, the team computed the so-called Pareto frontier: the set of solutions that cannot be improved on one objective without worsening another. The goal is to find the best balance between hydropower energy gained and ecological impact. The team published their work in this area last year and you can read it here.
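The sketch below illustrates the Pareto frontier idea on a handful of hypothetical dams, each with an illustrative energy gain and ecological impact. It brute-forces every subset of dams, which is only feasible for a few sites (hundreds of candidates would give far too many subsets to enumerate), and it assumes that impacts simply add up, which real river networks do not. It is not the team's algorithm.

```python
from itertools import combinations
from typing import List, Tuple

# Hypothetical candidate dams: (name, energy gained, ecological impact). Illustrative numbers only.
DAMS = [("A", 5.0, 3.0), ("B", 3.0, 1.0), ("C", 4.0, 4.0), ("D", 2.0, 0.5)]

Portfolio = Tuple[Tuple[str, ...], float, float]  # (dams built, total energy, total impact)


def all_portfolios(dams) -> List[Portfolio]:
    """Enumerate every subset of dams, assuming energy and impact are additive."""
    result = []
    for k in range(len(dams) + 1):
        for subset in combinations(dams, k):
            names = tuple(d[0] for d in subset)
            energy = sum((d[1] for d in subset), 0.0)
            impact = sum((d[2] for d in subset), 0.0)
            result.append((names, energy, impact))
    return result


def dominates(q: Portfolio, p: Portfolio) -> bool:
    """q dominates p: at least as much energy, at most as much impact, strictly better in one."""
    return q[1] >= p[1] and q[2] <= p[2] and (q[1] > p[1] or q[2] < p[2])


def pareto_frontier(points: List[Portfolio]) -> List[Portfolio]:
    """Keep the portfolios that no other portfolio dominates, sorted by impact."""
    frontier = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(frontier, key=lambda p: (p[2], p[1]))


for names, energy, impact in pareto_frontier(all_portfolios(DAMS)):
    print(f"{names or ('none',)}: energy={energy}, impact={impact}")
```

Building dam C alone, for instance, never appears on the frontier: building B and D together yields more energy for less impact, so a decision maker only needs to choose among the non-dominated options.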

Species distributions

Carla focussed on a citizen science project called eBird, in which more than 300,000 volunteers have made over 300 million bird observations. The team combined this data with adaptive spatio-temporal machine learning models and substantial computing power. As a result, they were able to relate environmental predictors to observed patterns and model habitat preferences in very fine detail. This is very important for conservation, as it makes it possible to track the birds’ migration patterns. Carla and her team have produced models for over 400 species of bird.
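The summary does not detail the eBird models. As a generic stand-in for "relating environmental predictors to observed patterns", here is a minimal logistic regression fitted by gradient descent to hypothetical survey data: each site has two made-up predictors (forest cover and a scaled temperature) and a record of whether the species was detected. The real models are adaptive spatio-temporal ensembles and are far more elaborate.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X: List[Sequence[float]], y: List[int], lr: float = 0.5,
                 epochs: int = 5000) -> Tuple[List[float], float]:
    """Fit P(detected | x) = sigmoid(w.x + b) by batch gradient descent on the log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical survey sites: (forest cover fraction, scaled temperature) and detection (1/0).
SITES = [(0.9, 0.2), (0.8, 0.4), (0.7, 0.1), (0.2, 0.3), (0.1, 0.5), (0.3, 0.2)]
DETECTED = [1, 1, 1, 0, 0, 0]

w, b = fit_logistic(SITES, DETECTED)
print(f"weights={w}, bias={b:.2f}")
print(f"P(detected | forested site) = {predict(w, b, (0.85, 0.3)):.2f}")
print(f"P(detected | open site)     = {predict(w, b, (0.15, 0.3)):.2f}")
```

The fitted weights are a crude "habitat preference": a positive forest-cover weight says the species is more often detected where there is more forest.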

Inferring crystal structures for materials discovery for clean energy

The final section of Carla’s talk concerned the discovery of clean energy materials, primarily for use in fuel cells and as solar fuels. The team collaborated with materials scientists with the aim of finding new, stable materials. Experiments involved producing mixtures of three metals; the goal was to use the X-ray diffraction (XRD) patterns of these new materials to determine their composition and crystal structure. Carla and her team developed a method that combines convolutive non-negative matrix factorization with symbolic AI. It enables much faster analysis of XRD data than was previously possible, allowing many more metal mixtures to be analysed within a given time-frame.
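To show the basic idea behind the matrix factorisation part, here is plain non-negative matrix factorisation with the Lee-Seung multiplicative updates, which factor a non-negative matrix V into non-negative W and H so that V ≈ WH. In an XRD setting one could read the rows of V as measured diffraction patterns, the rows of H as patterns of pure phases and W as how much of each phase every sample contains; that reading is an illustrative assumption. This sketch is not convolutive (it cannot account for shifting peaks) and has none of the symbolic constraints the team's method adds; the small matrix is made up.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain-Python matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A: Matrix) -> Matrix:
    return [list(r) for r in zip(*A)]


def frob_error(V: Matrix, W: Matrix, H: Matrix) -> float:
    """Squared Frobenius norm of V - WH."""
    WH = matmul(W, H)
    return sum((v - x) ** 2 for rv, rx in zip(V, WH) for v, x in zip(rv, rx))


def nmf(V: Matrix, rank: int, iters: int = 300, seed: int = 0,
        eps: float = 1e-9) -> Tuple[Matrix, Matrix]:
    """Lee-Seung multiplicative updates for min ||V - WH||^2 subject to W, H >= 0."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    # Strictly positive start so the multiplicative updates can move every entry.
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    H = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), elementwise
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * p / (q + eps) for h, p, q in zip(rh, rp, rq)]
             for rh, rp, rq in zip(H, num, den)]
        # W <- W * (V H^T) / (W H H^T), elementwise
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * p / (q + eps) for w, p, q in zip(rw, rp, rq)]
             for rw, rp, rq in zip(W, num, den)]
    return W, H


# Hypothetical 4x4 non-negative data of rank 2 (rows 3 and 4 are combinations of rows 1 and 2).
V = [[3.0, 0.0, 1.0, 2.0],
     [0.0, 2.0, 1.0, 0.0],
     [3.0, 2.0, 2.0, 2.0],
     [6.0, 0.0, 2.0, 4.0]]
W, H = nmf(V, rank=2)
print(f"reconstruction error: {frob_error(V, W, H):.4f}")
```

Because every update multiplies by a non-negative ratio, W and H stay non-negative throughout, which is what lets the factors be read as physical patterns and amounts.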

You can watch Carla’s talk here.

Automatic curricula in deep multi-agent reinforcement learning

Thore Graepel, Google DeepMind

Thore proposed three reasons why we should pay attention to multi-agent systems:

1) Applications: we live in a multi-agent world. Artificial agents need to take the agency of others into account to succeed.

2) Architectures: artificial agents can be designed as systems composed of multiple sub-agents for robustness, scalability and flexibility.

3) Automatic curricula: human intelligence did not evolve in isolation. Similarly, artificial agents can benefit from interactions and coevolution with other agents.

Thore argued that the most intelligent entity in the world is the global market economy: “if you have enough money, that is where you’ll find the solution to your problem”. That brought him to the question: “Who is really intelligent? Is it the individual, or is it civilisation itself? Or, is it the evolutionary process that got us here?” As the human population has rapidly increased, so has the number and scale of technological advances. This led him to wonder if it is the “collective brain” of humans (i.e. a multi-agent system) that has fuelled these achievements.

The next part of the talk focussed on the AI-by-learning paradigm, whose premise is that human-level AI can be achieved through learning from experience (using data, simulation and real-world interactions). Solving this problem requires resolving three sub-problems: 1) the algorithm problem – designing powerful algorithms that turn experience into policies, 2) the “problem problem” – designing rich learning environments that provide the necessary experience, and 3) the curriculum problem – designing efficient learning curricula that deliver experiences to the learner in the right order.

When it comes to the curriculum problem, the order of learning is very important. Some skills are easier to learn once others have been acquired, and many states are never reached unless certain skills are already present. To generate automatic curricula through multi-agent learning, multiple independent reinforcement learning agents interact in a common environment; for any given agent, the other agents provide a curriculum through their own learning. Find out more about the team’s work on auto-curricula learning in their paper. To demonstrate multi-agent learning in action, Thore presented three case studies: AlphaZero learning to play chess, learning to play Capture-the-Flag, and learning in social dilemmas.
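A toy way to see how other learners create a moving target is fictitious play in rock-paper-scissors: two independent agents each best-respond to the other's history of play. Neither agent is handed a curriculum, yet each one's target keeps changing as the other adapts. The game and the learning rule are illustrative assumptions chosen for brevity; DeepMind's work uses deep reinforcement learning agents in far richer environments.

```python
from typing import List, Tuple

# Row player's payoff in rock-paper-scissors; actions: 0 = rock, 1 = paper, 2 = scissors.
PAYOFF = [
    [0, -1, 1],   # rock loses to paper, beats scissors
    [1, 0, -1],   # paper beats rock, loses to scissors
    [-1, 1, 0],   # scissors loses to rock, beats paper
]


def best_response(opponent_counts: List[int]) -> int:
    """Action with the highest payoff against the opponent's empirical play so far."""
    scores = [sum(PAYOFF[i][j] * opponent_counts[j] for j in range(3)) for i in range(3)]
    return scores.index(max(scores))  # ties go to the lowest-numbered action


def fictitious_play(rounds: int) -> Tuple[List[Tuple[int, int]], List[List[int]]]:
    """Two independent learners, each adapting only to what the other has done."""
    counts = [[0, 0, 0], [0, 0, 0]]  # counts[k][a]: how often agent k has played action a
    history = []
    for _ in range(rounds):
        a0 = best_response(counts[1])
        a1 = best_response(counts[0])
        counts[0][a0] += 1
        counts[1][a1] += 1
        history.append((a0, a1))
    return history, counts


history, counts = fictitious_play(5)
print(history)  # [(0, 0), (1, 1), (1, 1), (1, 1), (2, 2)]
```

With both agents starting from rock, play moves from rock to paper and then to scissors within the first five rounds, as each agent exploits what the other has done so far.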

You can watch Thore’s talk here.