AIhub.org

From bench to bedside: AI for health and care

By Jessica Montgomery, Senior Policy Adviser

Advances in artificial intelligence (AI) technologies over the last five years have generated great excitement, especially in the areas of health and care.

Participants in the Royal Society’s public dialogues (PDF) on machine learning, for example, talked about how AI tools could support doctors, by providing new insights into diagnosis or treatment strategies.

Policymakers have shown similar enthusiasm, with missions and grand challenges setting out to harness the power of AI to improve healthcare outcomes.

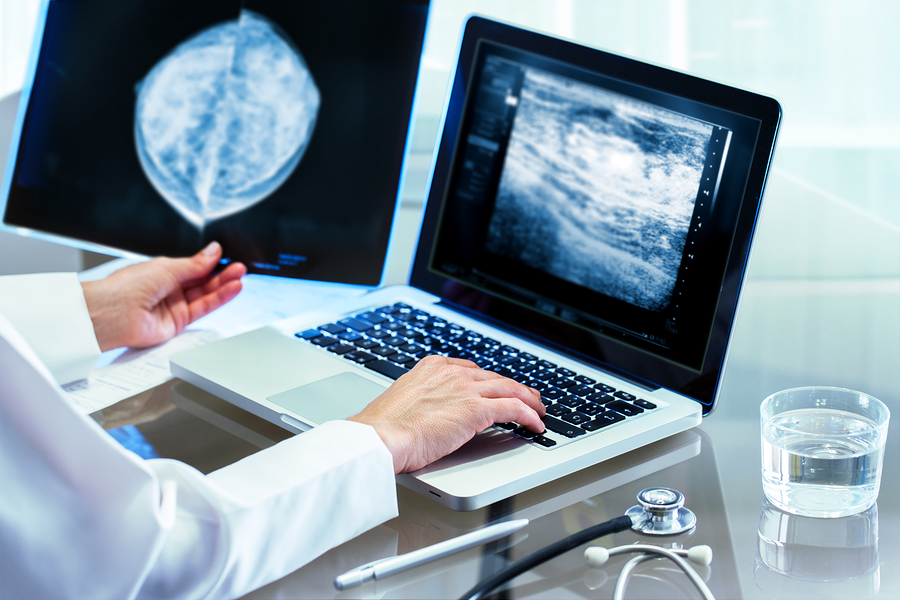

There are already examples of AI being successfully applied to healthcare challenges, especially in the use of image analysis to diagnose diseases like diabetic retinopathy. As AI technologies develop, and new research collaborations progress, the near-term could see:

- new applications of image analysis to detect and diagnose disease;

- AI tools to find efficiencies in the operational management of healthcare facilities, including scheduling appointments, checking systems, and managing waiting times;

- the use of wearables and consumer devices to manage personal wellbeing; and

- the development of natural language processing techniques to analyse electronic health records, improving understanding of drug efficacy.

Developing such tools will rely on collaborations between researchers, clinicians, publics, and policymakers that can drive forward research agendas, while ensuring that the resulting AI tools work well for users across communities. Action in four key areas – data, skills, research, and public dialogue – can help.

Creating an amenable data environment

Today’s AI methods perform well in areas where well-curated data are available for analysis. In healthcare, however, such data aren’t always easy to come by.

While a number of programmes have sought to digitalise healthcare information, the landscape of health data digitalisation and management is complex: there are many different types of health record, at varying stages of digitalisation; different commissioning groups or GPs can have different approaches to data management; and data can be in different formats or of different qualities, making it difficult to join datasets together for analysis.

While many of these challenges persist, a number of initiatives, led by the NHS and others, aim to resolve them and produce a digital NHS where data are linked, interoperable and accessible for care and research.

Supporting healthcare professionals to make use of AI

One hope for the future development of AI is that these systems could create efficiencies or support processes that help address skills shortages in the delivery of health and care. If such systems are going to work well alongside healthcare professionals, they need to be designed with this purpose in mind. In cancer diagnosis, for example, there is a demand for skilled healthcare workers who are able to analyse scans to identify cancerous features. While this is an area where skills are in short supply, and AI systems might be able to meet some of that need, it is not yet clear exactly what functions AI could fulfil, which functions patients would accept AI carrying out, or whether the use of AI could contribute to a broader de-skilling of people in the system.

How these technologies fit into the wider healthcare system is therefore key. Involving people with expertise and experience in healthcare delivery in the process of developing AI is important for the successful application of AI to healthcare challenges. Those working in clinical settings have an understanding of the contexts of use that can improve the design of AI systems and ensure they are deployed effectively.

Developing robust and reproducible AI

As AI moves from being a research domain to one applied at scale, developers and users are looking afresh at the robustness and reproducibility of AI-enabled systems. For example, clinicians will need to be confident that a system for operational management designed for one hospital can be used successfully in another.

Awareness of the importance of reproducible and robust research in AI is growing. While careful study design and use of traditional statistics can help address these concerns, new tools or approaches, as well as cultural changes in the AI research community, may be necessary to develop more robust and reproducible approaches to AI. This might include codes of practice or other methods for clinicians and AI researchers to work together in evaluating the effectiveness of AI-enabled tools.

Building a well-founded public dialogue

A side-effect of the rapid recent advances in AI, and its early successes in healthcare research, has been growing hype about its near-term capabilities. This hype could lead to unrealistic expectations about the abilities of AI systems, the efforts required to develop AI that works in healthcare settings, and the timeline to deployment. It could also skew public debate about the potential of AI technologies, with implications for how confident people feel about their use.

Public dialogues (PDF) on the use of AI in health and care show there is great optimism about the potential of these technologies – to increase efficiency, make diagnosis more accurate, and save time on administrative tasks. These dialogues also show that people’s views about what kinds of data should be shared, with whom, and for what purpose are complicated, and that patterns of trust in data use depend on a range of factors. When considering whether the use of AI is desirable in any given setting, people want to know who is developing the technology, who is benefitting from the use of their data, and who bears the risks.

Building a well-founded public dialogue about AI technologies will be key to continued public confidence in the systems that deploy AI technologies, and to realising the benefits they promise in health and care. If the field is to make further progress in building this dialogue, it will be important for researchers working in AI and in medical sciences to have resources and support to co-design new studies with members of the public.

Recent reviews from a range of organisations (including the Royal Society) have stressed the need for effective and meaningful public and patient engagement, and co-development of AI applications. The challenge now is to create structures or processes that embed meaningful dialogue in the development of AI-enabled innovations.

This blog post draws on discussions at a Royal Society and Academy of Medical Sciences workshop on the application of AI in health and care. You can read the full write-up (PDF) of the workshop on our webpage.