AIhub.org

RoboCup@Work League: Interview with Asad Norouzi

This year, RoboCup will be taking place from 22-28 June as a fully remote event with RoboCup competitions and activities taking place all over the world.

RoboCup@Work is the newest league in RoboCup, targeting the use of robots in work-related scenarios. RoboCup@Work utilizes ideas and concepts from other RoboCup competitions to tackle open research challenges in industrial and service robotics.

We spoke to Asad Norouzi, a member of the executive committee, about the league, how the competition will work, and the changes they’ve made to the event so that it can be held virtually.

Could you tell us a bit about the league and what it involves for the competitors?

The league name is RoboCup@Work and it falls under the category of RoboCup Industrial. We have two leagues as part of RoboCup Industrial: RoboCup@Work and Logistics. Basically, the main objective of the RoboCup@Work league is to create a benchmark for robots that operate within an industrial setting.

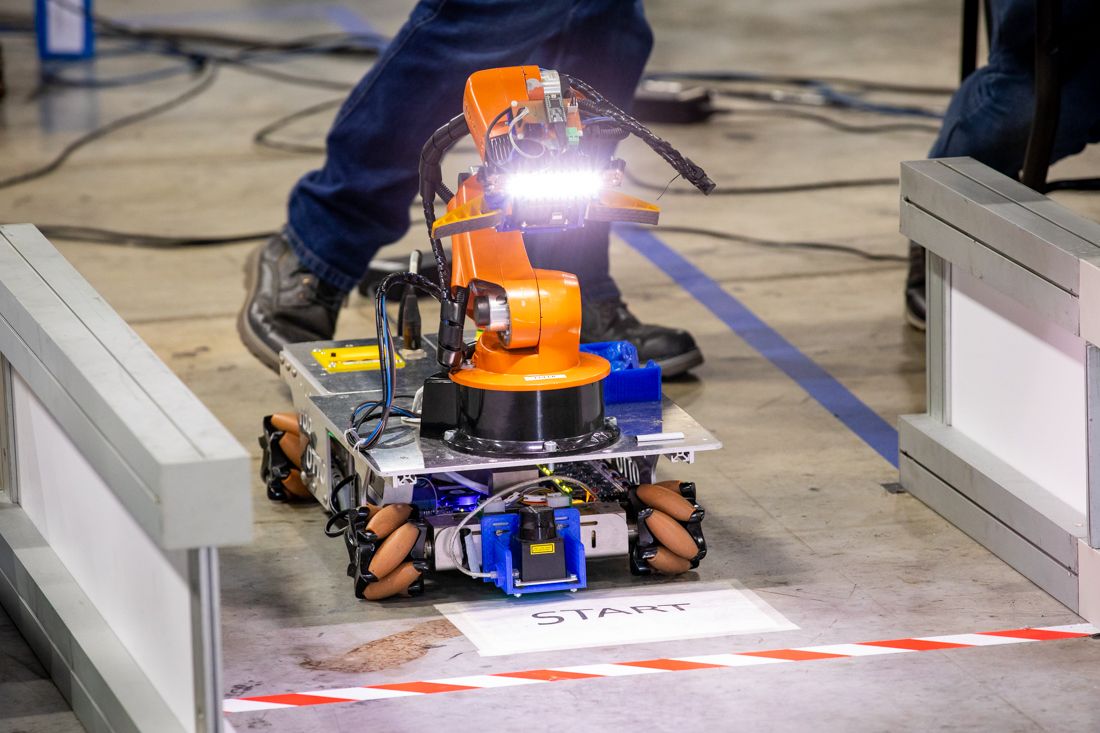

For the competition we have several benchmarking tests where we have an autonomous mobile robot that operates in an arena. This arena simulates an industrial setting, where the robot navigates within this area avoiding obstacles. There are dynamic obstacles; the robot doesn’t know they are there, but it has to dynamically locate them and find an alternative path. Then the robot has to transport objects that are relevant to industry, such as screws and nuts.

The competition involves autonomous navigation, object detection, and object manipulation. Some teams go beyond that and also try to do some advanced learning.

When a robot starts, it receives its task from a central agent (called the Referee Box) and can then plan ahead to find an efficient route. In other words, it has to do some planning: how can it finish more quickly, and in a way that collects more points?
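As a rough illustration of this kind of planning, the sketch below tries every ordering of a small set of transport tasks and keeps the one with the shortest driving distance. It is not any team's actual planner: the station coordinates, the names `STATIONS`, `route_length` and `best_order`, and the assumption that the robot carries one object at a time are all hypothetical.

```python
from itertools import permutations
from math import dist

# Hypothetical station coordinates in metres (not taken from the real arena).
STATIONS = {"START": (0.0, 0.0), "A": (2.0, 0.0), "B": (2.0, 3.0), "C": (0.0, 3.0)}

def route_length(order, tasks):
    """Total distance driven when the tasks are executed in the given order.

    Assumes the robot carries one object at a time: it drives to the
    pick-up station, then straight to the drop-off station.
    """
    position = STATIONS["START"]
    total = 0.0
    for index in order:
        source, destination = tasks[index]
        total += dist(position, STATIONS[source])
        total += dist(STATIONS[source], STATIONS[destination])
        position = STATIONS[destination]
    return total

def best_order(tasks):
    """Try every ordering of the tasks and return the shortest one."""
    return min(permutations(range(len(tasks))),
               key=lambda order: route_length(order, tasks))

# Each task is (pick-up station, drop-off station).
tasks = [("B", "C"), ("A", "B")]
order = best_order(tasks)
print(order, route_length(order, tasks))
```

Trying every ordering is only practical for a handful of tasks; a real planner would also need to weigh the points available for each task against the time remaining in the run.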

How do you award points in the competition?

To start the competition, the Referee Box sends the tasks to the robot. For example, a task might be that the robot has to pick up the biggest screw from Station A and then drop it off at Station C. So the robot has to go to Station A, and if it successfully reaches that station it gets some navigation points.

Once at the correct station, the robot has to detect the object that we want it to pick up (there are multiple objects at the station but it only has to pick up the one that the Referee Box has requested). If this is done correctly, the team is awarded detection points.

The robot should also be able to grab the object properly and place it on its own tray. So, it reaches for the object, picks it up and puts it on its tray. This operation has another set of points available: object manipulation points.

Then, the robot goes to the destination station, and receives more navigation points, if it is able to get to the correct station.

Finally, if it is able to place the object properly on the station, such that the object doesn’t roll over and drop off the station, then the team is awarded placement points.
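The stages described above can be pictured as a simple running tally. The sketch below is only an illustration: the point values and the names `STAGE_POINTS` and `score_run` are hypothetical and are not taken from the RoboCup@Work rule book. It also assumes that a stage only counts if all earlier stages succeeded.

```python
# Hypothetical point values per stage; the real values are set by the rule book.
STAGE_POINTS = {
    "navigate_to_source": 10,
    "detect_object": 10,
    "pick_object": 20,
    "navigate_to_destination": 10,
    "place_object": 20,
}

def score_run(completed_stages):
    """Sum the points for the stages the robot completed, in order.

    Counting stops at the first stage that was not completed, on the
    assumption that later stages depend on earlier ones.
    """
    total = 0
    for stage in STAGE_POINTS:  # dicts preserve insertion order
        if stage not in completed_stages:
            break
        total += STAGE_POINTS[stage]
    return total

# Example: the robot reached Station A and detected the object, but failed to pick it up.
partial = score_run({"navigate_to_source", "detect_object"})
full = score_run(set(STAGE_POINTS))
print(partial, full)
```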

There are also some more advanced tasks.

What do these more advanced tasks involve?

One of these is precision placement. In this task the robot has to place the object on a precision placement table. This table has cavities that have the same shape as the target object. The robot has to place the object exactly on top of the hole so that it can fit inside. If it misses it by just a couple of centimetres, it won’t be able to fit into the hole.

We also have a rotating table challenge. If the Referee Box sends the robot there then it has to pick up an object from this table which rotates at a random speed. Before the competition the technical committee changes the speed of rotation, so the teams can’t plan for a particular speed in advance. The robot has to align, find the object, and work out how to pick it up.

We also have some stations that are more difficult because they are shelves with two levels. Usually when teams try to detect the object, most of them have the camera mounted on the robotic arm, which is typically raised above the station to find the object. However, if it does that for the bottom shelf it will hit the top level. If the robot hits anything at all in the arena then there is a penalty. So, the robot has to look at the shelf from a different perspective. The other challenge is in picking up the object. For the shelf it has to use a different technique than for the standard stations.

Could you tell us more about the obstacles on the course?

These obstacles are quite interesting. We do have some simple obstacles, where we place random boxes on the arena floor after the competition has started. So, the teams don’t know where the obstacles will be located beforehand.

We have another interesting obstacle which is tape. This is stuck on the ground and the robot has to treat it as an obstacle. In an industrial setting there are areas within which movement is restricted, and these areas are designated by tape. The robot has to use its camera to locate the tape and see it as an obstacle. This is a very difficult task.
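One way to picture how boxes and tape can be handled alike is to mark both as blocked cells on a grid map and plan again. The sketch below assumes a 4-connected grid and a plain breadth-first search. The teams' actual navigation software is not described here, and `shortest_path` and the grid layout are hypothetical.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid[r][c] == 1 marks a blocked cell (a box or a taped-off area).
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical 3x3 arena, initially free of obstacles.
grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
before = shortest_path(grid, (0, 0), (0, 2))

# A box or a strip of tape is detected in the middle of the top row:
# mark the cell as blocked and plan again.
grid[0][1] = 1
after = shortest_path(grid, (0, 0), (0, 2))
print(len(before), len(after))
```

The hard part mentioned in the interview, recognising tape with the camera, happens before this step; the sketch only shows what the planner might do once the tape has been detected.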

Have you made any changes to the tasks as the competition has evolved?

In terms of object detection, since last year we’ve made it even more difficult. Previously we had standard surfaces for all the stations. Now, we are introducing arbitrary surfaces where we are going to change the surface by placing, for example, grass, paper, or black tape on it. These things make the object detection more difficult and could fool the robot’s image processing system. The teams have moved towards deep learning to solve this problem.

In previous years, when the Referee Box told the robot to go to Station A, the robot would know the height of the station (e.g. ground level, 5cm, 10cm, or 15cm). Now, we have removed that information. The Referee Box will tell the robot to go to Station A but the robot won’t know the height. It has to use sensors to determine the height.

Do the teams all use the same basic hardware for their robots?

When RoboCup@Work started, one of the sponsors actually provided the robotic base and the arm, but the company stopped production of these robots about five or six years ago. So, we are at the stage where most of the teams are retiring these robots. Some teams have started manufacturing their own mobile base or purchasing from other vendors. A few years ago all the teams had the same robot but now they are becoming more and more different.

Do the teams have the freedom to use any robot they like as long as it carries out the task?

Essentially, yes. In our rule book we do have some constraints regarding the size of the robot, so that it can fit into the arena. Other than that, they can be as creative as they want.

How have you had to adapt the competition this year, for the virtual environment?

This is the second time we’ve run it virtually. The first time was during RoboCup Asia Pacific back in 2020. That event was much simpler because it wasn’t a worldwide version so we had fewer teams involved.

It will be different for this event; we are doing a lot of other things that will make the competition more competitive.

We are one of the few leagues in RoboCup that is going to run the competition in full size. It will be more or less the same as if the teams were physically in the competition. The difference is that, instead of everyone using the same arena, all the teams are going to use their own labs as their arena. We had a lot of discussions in the technical committee about how to make it consistent because we wanted a fair competition for everyone. We asked all the teams what facilities they had at their universities: what size of field, what objects, and what stations. We collected all of that information and, based on that, we designed an arena that every team can use.

How will the competition run virtually?

The teams will receive their task just before it is their turn to compete, just as would happen if they were physically in the arena. Each team has a local Referee Box, and when we send them the task, they can start very soon after that. That means they don’t have time to see what the field looks like or to find out what task the Referee Box has generated. That makes it a fair, autonomous competition.

Something else we need to consider is how to detect collisions. Even when the referees are physically in the arena it’s difficult to see all the angles and spot collisions. We have two methods for checking collisions in this virtual competition. One is that we ask teams to use cameras. We also ask the teams to provide the logs, from which we can see the robot’s run.

Another challenge is with time zones. Because we have teams from different time zones, we tried to find a time slot that would suit all the teams.

Do the teams compete at the same time or do they all have different time slots?

When we announce the time slot, that is for all the teams. They all need to be prepared, but they go in order. Team A runs, then team B, then team C, etc.

When the teams get the task through from the Referee Box, do they have to put the obstacles, such as the tape, in place?

Yes, they will get this information five minutes before they need to start the tasks.

Do you have events running throughout the entire week (22-28 June)?

Yes, we do. There are several tests throughout the competition week. As we move through the week we make it more and more difficult.

Teams are going to collect points for each benchmarking test. All the teams can take part in all of the challenges. At the very end, the three teams with the most points will be ranked 1st, 2nd and 3rd.

There will be live video streams, for the participants and for the spectators.

How long do the runs take?

For the initial tests it’s only about five minutes per run. The more advanced tests take 10 minutes, and the last one, which is the final on the last day, will be a maximum of 20 minutes.

How many teams will be participating this year and where are they from?

This year we have 10 teams. These are:

AutonOHM, Nuremberg Institute of Technology, Germany

b-it-bots, Bonn-Rhein-Sieg University of Applied Sciences, Germany

Democritus Industrial Robotics (DIR), DIR Research Group, Greece

luhbots, Leibniz University of Hanover, Germany

MRL@Work, Qazvin Azad University, Iran

Robo-Erectus@Work, Singapore Polytechnic, Singapore

RoboHub Eindhoven, Fontys University of Applied Sciences, Netherlands

robOTTO, Otto von Guericke University Magdeburg, Germany

SWOT, University of Applied Sciences Würzburg-Schweinfurt, Germany

Team Tyrolics, University of Innsbruck, Austria

The teams consist of roughly 5 to 10 people each.

What kinds of performance improvements have you seen since the league started?

We’ve had big improvements in performance, especially in terms of object detection. Around two years ago, most of the teams moved from conventional image processing to deep learning methods. Some teams have already publicly provided their data to other teams so they can train their models. So, we’ve seen a big jump in terms of object detection accuracy. As I mentioned earlier, we’ve made object detection more difficult and teams are coping with it because they’ve been able to make these advances.

There’s also another problem to solve: because the mobile base has limited battery power and limited space, you can’t have GPUs anywhere you want. So, there is a systems engineering challenge here. You are using deep learning, but you need to provide the computational resources for that. There have been some big improvements in that area too.

We’ve also seen many improvements in terms of autonomous navigation. Most teams are using an open-source package but they are extensively tuning it. Two or three teams have written their own package for autonomous navigation. We are seeing a lot of research in this area.

In short, there have been many significant improvements since the league started.

Find out more

RoboCup 2021 event website.

RoboCup@Work League website.

RoboCup@Work competition schedule.
