AIhub.org

Artificial intelligence helps speed up ecological surveys

Image © NIOZ.

By Sandrine Perroud

Scientists at EPFL, the Royal Netherlands Institute for Sea Research and Wageningen University & Research have developed a new deep-learning model that counts the seals in aerial photos considerably faster than can be done by hand. The method could save valuable time and resources, which can then be used to further study and protect endangered species.

Ecologists have been monitoring seal populations for decades, building up vast libraries of aerial photos in the process. Counting the seals in these photos requires hours of meticulous work to manually identify the animals in each image.

A cross-disciplinary team of researchers including Jeroen Hoekendijk, a PhD student at Wageningen University & Research (WUR) and employed by the Royal Netherlands Institute for Sea Research (NIOZ), and Devis Tuia, an associate professor and head of the Environmental Computational Science and Earth Observation Laboratory at EPFL Valais, has come up with a more efficient approach to counting objects in ecological surveys. In their study, published in Scientific Reports, they use a deep-learning model to count the number of seals in archived photos. Their method could run through 100 images in less than one minute – versus one hour for a human expert.

No labeling needed

“In ecology, the most commonly employed deep-learning models are first trained to detect individual objects, after which the detected objects are counted. This type of model requires extensive annotations of individual objects during training,” says Hoekendijk. However, the method applied by the research team eliminates the need to label individual seals beforehand, dramatically speeding up the procedure since only the total number of animals in the picture is needed. What’s more, their method can be used to count any items or individual animals, and thus potentially help to process not only the new photos, but also those that could not be analyzed for lack of time. This represents decades of photos that could provide important insight into how population size has evolved over time.
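To make the idea of counting by regression concrete, here is a toy sketch in Python. It is not the researchers' model, which is a deep neural network trained on real photos. It assumes synthetic images and a single hand-picked feature (total brightness), and all function names are hypothetical. What it shares with the approach described above is the training signal: each image comes with only its total count, and no individual object is ever labeled.

```python
import random


def make_image(n_objects, size=32, rng=random):
    """Synthetic grayscale image: faint noisy background plus n bright pixels ('animals')."""
    img = [[rng.uniform(0.0, 0.02) for _ in range(size)] for _ in range(size)]
    # Pick distinct pixel positions so objects never overlap.
    for cell in rng.sample(range(size * size), n_objects):
        img[cell // size][cell % size] = 1.0
    return img


def feature(img):
    """One global feature per image: total brightness (assumed stand-in for learned features)."""
    return sum(sum(row) for row in img)


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def train_and_evaluate(seed=0):
    """Fit on image-level counts only, then return the mean absolute error on new images."""
    rng = random.Random(seed)

    # Training set: the only label per image is its total count.
    train_counts = [rng.randint(0, 40) for _ in range(200)]
    train_features = [feature(make_image(c, rng=rng)) for c in train_counts]
    a, b = fit_line(train_features, train_counts)

    # Held-out images: predict the count directly, with no detection step.
    test_counts = [rng.randint(0, 40) for _ in range(50)]
    predictions = [a * feature(make_image(c, rng=rng)) + b for c in test_counts]
    errors = [abs(p - t) for p, t in zip(predictions, test_counts)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    print(f"Mean absolute counting error: {train_and_evaluate():.2f}")
```

A detection-based pipeline would instead need the position of every object in every training image, which is the annotation effort the regression approach avoids.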

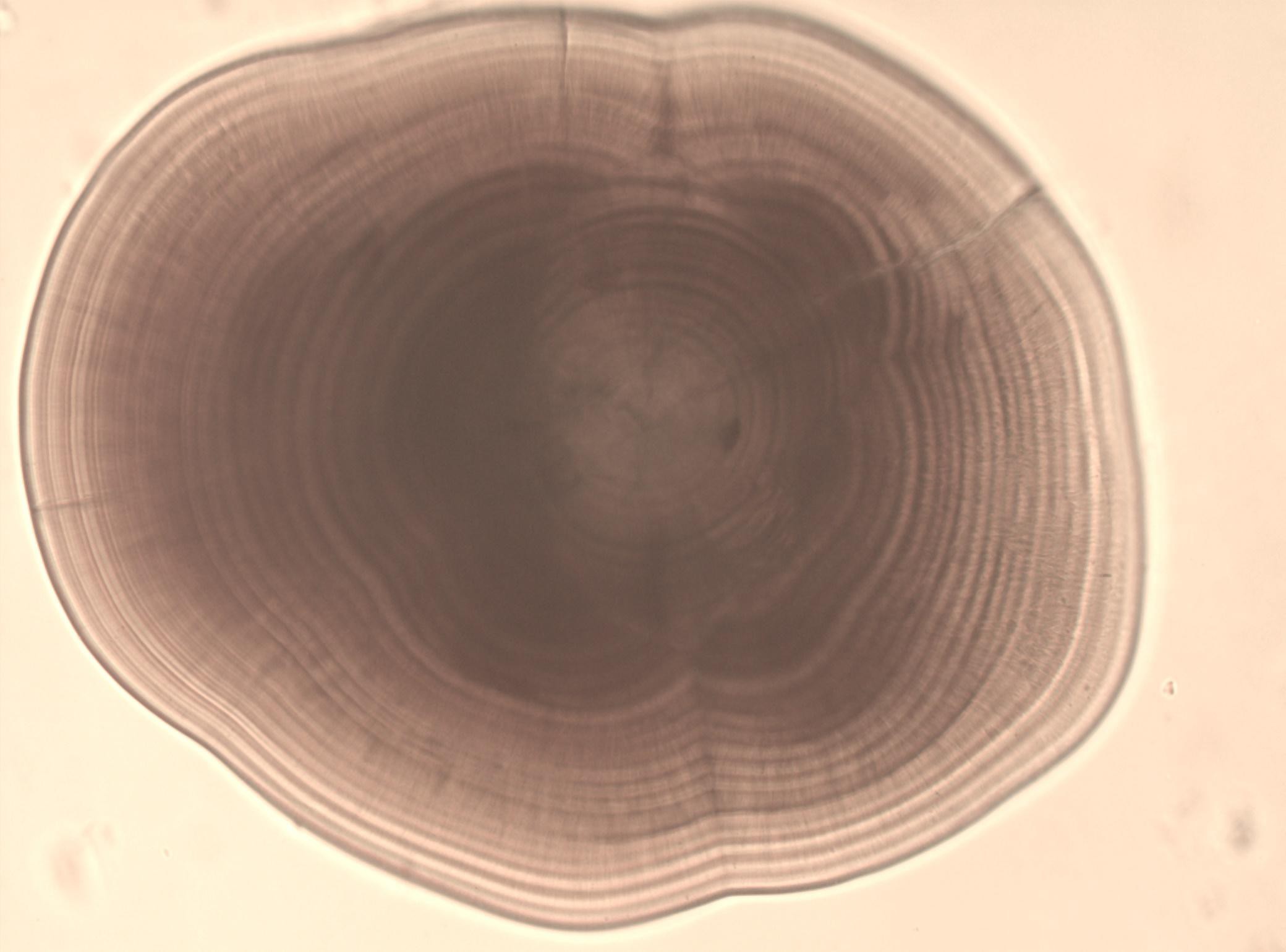

Example of otolith image under the microscope. © NIOZ

From the macroscopic to the microscopic

The way seals appear in aerial photos can vary significantly from one batch to the next, depending on the altitude and angle at which the photo was taken. The research team therefore evaluated robustness to such variation. In addition, to demonstrate the potential of their deep-learning model, the scientists tested their approach on a fundamentally different dataset, of a much smaller scale: images of microscopic growth rings in fishbones called otoliths. These otoliths, or hearing stones, are hard, calcium carbonate structures located directly behind a fish’s brain. The scientists trained their model to count the daily growth rings visible in the images, which are used to estimate the age of the fish. These growth rings are known for being extremely challenging to annotate individually. The research team found that their model had roughly the same margin of error as manual methods, but could work through 100 images in under a minute, whereas it would take three hours for an expert.

Next step

The next step will be to apply similar approaches to satellite images of inaccessible Arctic regions, which are home to several seal populations on the Red List of Threatened Species compiled by the International Union for Conservation of Nature. “We plan to use this approach to study endangered species in this remote part of the world, where temperatures are rising twice as fast as elsewhere on the planet,” says Tuia. “Knowing where the animals concentrate is essential to protect these often-endangered species.”

Read the paper in full

Counting using deep learning regression gives value to ecological surveys

Jeroen PA Hoekendijk, Benjamin Kellenberger, Geert Aarts, Sophie Brasseur, Suzanne SH Poiesz, and Devis Tuia
