ΑΙhub.org

Faithfully reflecting updated information in text: Interview with Robert Logan – #NAACL2022 award winner

Robert Logan, and co-authors Alexandre Passos, Sameer Singh and Ming-Wei Chang, won a best new task award at NAACL 2022 (Annual Conference of the North American Chapter of the Association for Computational Linguistics) for their paper FRUIT: Faithfully Reflecting Updated Information in Text. Here, Robert tells us about their methodology, the main contributions of the paper, and ideas for future work.

What is the topic of the research in your paper?

Our paper introduces the new task of faithfully reflecting updated information in text or FRUIT for short. Given an outdated Wikipedia article and new information about the article’s subject, the goal is to edit the article’s text to be consistent with the new information.

Could you tell us about the implications of your research and why it is an interesting area for study?

Textual knowledge bases such as Wikipedia are essential resources for both humans and machine learning models. However, as these knowledge bases grow, it becomes increasingly difficult to keep the information within them consistent. Every time an entry is changed or added, it could potentially introduce a contradiction with one or more of the other entries. One reason FRUIT is a valuable task is that solving it will produce systems that could help substantially reduce the burden required to maintain consistency in these settings.

In addition, solving the task requires addressing several challenges in modern NLP. First, models cannot perform well solely by relying on their parametric world knowledge. The model must prefer the evidence whenever it contradicts the model’s parametric knowledge, which recent work has shown is difficult for pretrained language models [1] [2]. Furthermore, the generated text needs to be faithful to both the original article and the provided evidence, but prefer claims in the evidence when they invalidate information in the original article. Lastly, the provided evidence can contain both unstructured text and structured objects like tables and lists, so solving the task involves challenging aspects of both multi-document summarization and data-to-text generation.

Based on our results, we’ve also found that FRUIT can be useful for measuring different models’ propensities to hallucinate as most hallucinations are usually easy to detect since they typically introduce new entities or numbers.

Could you explain your methodology?

Our main contributions in this paper were designing and releasing an initial dataset for benchmarking systems on this task and measuring baseline results for several T5 (Text-to-Text Transfer Transformer)-based models .

For data collection, we computed the diff between two snapshots of Wikipedia to collect: 1) the set of updated articles, and 2) evidence from other articles that could justify these updates. We then employed several heuristics to match the evidence to the articles and filter out unwanted data. To ensure that the collected evidence supported all of the edits in our evaluation dataset, we additionally hired human annotators to edit out any unsupported text from the updated article in a way that preserved fluency. Our dataset (FRUIT-Wiki) and the code we used for data collection are publicly available here.

We measured baseline results for several T5-based models and a trivial baseline that generates a verbatim copy of the original article. For the neural models, we investigated a straightforward application of T5 where we concatenated the original article and evidence to form the input and trained the model to decode the entire updated article from scratch. We additionally investigated an alternative approach we call EdiT5 that we trained to instead generate the diff between the original and the updated article and predict which pieces of evidence were used to generate the updates. To evaluate the faithfulness of generations, we introduced a variant of the ROUGE score we call UpdateROUGE that only considers the updated sentences instead of the entire article (this prevents systems that do not update the text from receiving high scores). We additionally introduced metrics that use the output of a NER system to measure the precision and recall of entities in generated vs. ground truth updates, as well as how many “hallucinated” entities appear in the generated updates but not in the original article or provided evidence.

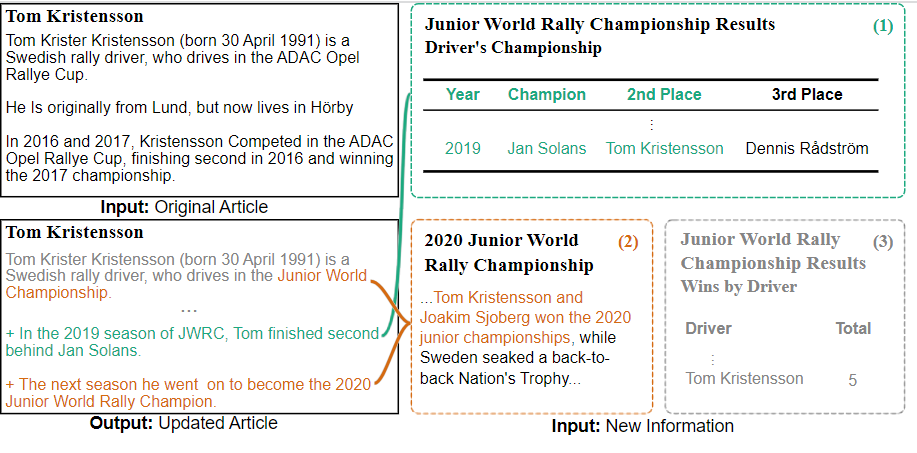

Illustration of the FRUIT task. An outdated original article and relevant new information are provided as inputs, and the goal is to generate the updated article

Illustration of the FRUIT task. An outdated original article and relevant new information are provided as inputs, and the goal is to generate the updated article

What were your main findings?

Regarding our data collection pipeline, we found that it produces high-quality data according to many measures. By comparing the pipeline outputs to the human annotations, we found that our heuristics correctly associate evidence to updates about 85% of the time and that 90% of the mentions in the updated text are supported. Furthermore, we detected a high rank correlation in system performance on the automatically generated vs. human-annotated data (>90% for most metrics), which suggests that researchers that use our pipeline to collect datasets for other time periods and language should be able to estimate the relative performance of different systems without requiring human annotation.

On the modeling side, we were pleasantly surprised at how well our baselines performed. I pretty much jumped out of my seat when I saw how plausible the initial model generations were! In terms of the metrics I mentioned above, we found that the T5-based models typically obtain UpdateROUGE scores in the mid-40s (which is comparable to ROUGE scores for other generation tasks) and that the number of hallucinated entities is typically quite small (1.5 hallucinated tokens per updated article on average). In addition, we found that our proposed EdiT5 approach outperformed the basic approach by about 2-5% (absolute) on all metrics. We also performed qualitative analysis on 100 test instances to better understand the models’ mistakes. We found the EdiT5 model generated ungrounded claims in only 35% of the updated articles. In those articles, most mistakes were due to the model hallucinating numbers and dates (21 out of 35).

Overall, these results suggest that the benchmark dataset and data collection pipeline we’ve compiled for this task will be useful for conducting research on it. They also highlight some areas of improvement for future work to concentrate on.

What further work are you planning in this area?

One area that I’d really like to focus on is improving evaluation for this task. While using ROUGE and NER-based metrics was acceptable for a first pass at measuring faithfulness, these metrics only penalize the model when new n-grams and mentions appear in the generated updates. Meanwhile, there’s been a lot of exciting work on model-based metrics for evaluating faithfulness in other generative tasks (this paper that was published alongside FRUIT at NAACL gives a good overview) that I would be really excited to see applied to FRUIT but will likely need some modification to deal with things like structured inputs and conflicting information in the source article and evidence.

Read the paper in full

FRUIT: Faithfully Reflecting Updated Information in Text

Robert L. Logan IV, Alexandre Passos, Sameer Singh, Ming-Wei Chang.

About Robert Logan

|

Robert L. Logan IV is a research scientist at Dataminr. His research primarily focuses on the interplay between language modeling and information extraction. Prior to joining Dataminr, he was a Ph.D. student at the University of California, Irvine, studying machine learning and natural language processing under Padhraic Smyth and Sameer Singh, and received BAs in Mathematics and Economics from the University of California, Santa Cruz. He has also conducted machine learning research as an intern at Google Research and Diffbot and worked as a research analyst at Prologis. |