ΑΙhub.org

#AAAI20 Turing Award session: Bengio, Hinton and LeCun talk about their research

One of the highlights of the AAAI-20 conference this month was the special event featuring Turing Award winners, Yoshua Bengio (University of Montreal and Mila), Geoffrey E. Hinton (Google, The Vector Institute, and University of Toronto) and Yann LeCun (New York University and Facebook)

The Association for Computing Machinery (ACM) named Bengio, Hinton, and LeCun recipients of the 2018 ACM A.M. Turing Award for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing.

The two-hour AAAI-20 event featured individual talks by each speaker, followed by a panel session. The trio discussed their work, their views on current challenges facing deep learning and where they think it could be heading. You can watch the event in full in the live-streamed video provided by AAAI:

Geoffrey Hinton — Stacked Capsule Autoencoders

First to speak was Geoffrey Hinton and he focussed on stacked capsule encoders and their role in object recognition. There are two approaches when it comes to object recognition. Firstly, good old-fashioned parts models. These provide a sensible modular representation. However, they involve a lot of hand-engineering. The second method is convolutional neural networks (CNNs). They learn everything end-to-end but work in a way that is very different from human perception.

Hinton jokingly aimed the first part of his talk at Yann LeCun declaring he was going to outline the “problems with CNNs and why they are rubbish”. CNNs are designed to cope with translations of objects but they are not so good at dealing with other effects such as rotation or scaling. The ideal scenario is to have neural networks that generalise to new viewpoints as quickly as people do. Computer graphics are also able to quickly generalise. The reason for this is because they use hierarchical models in which the spatial structure is modelled by matrices that represent the transformation from a coordinate frame embedded in the whole to a coordinate frame embedded in each part. As that is just a matrix operation there is a linear relationship between whole and part. Hinton explained that it would be good to make use of this linear relationship to deal with viewpoint in neural networks.

This led Hinton onto the main part of his talk: stacked capsule auto-encoders, and how these could be used to improve the CNN method. He introduced the latest (2019) version of capsules, entertaining the packed crowd with his comment: “forget what you knew about the previous versions, they were all wrong, but this one’s right”. This new version uses unsupervised learning and matrices to represent whole-part relationships. The previous versions, which Hinton mentioned he instinctively felt were wrong, used discriminative learning and part-whole relationships.

The idea of a capsule is to build more structure into neural networks and hope that that extra structure helps you generalise more. A capsule is a group of neurons that learns to represent a familiar shape or part. It consists of a logistic unit that represents whether the shape exists in the current image, a matrix that represents the geometrical relationship between the shape and the camera, and a vector that represents other properties, such as deformation, velocity, colour. You can read the details of stacked capsule auto-encoders in this 2019 work, by Adam Kosiorek, Sara Sabour, Yee Whye Teh and Geoffrey Hinton.

One of the problems the team came up against was how to infer the whole object from the parts. Here they turned to set transformers, which are used in natural language processing.

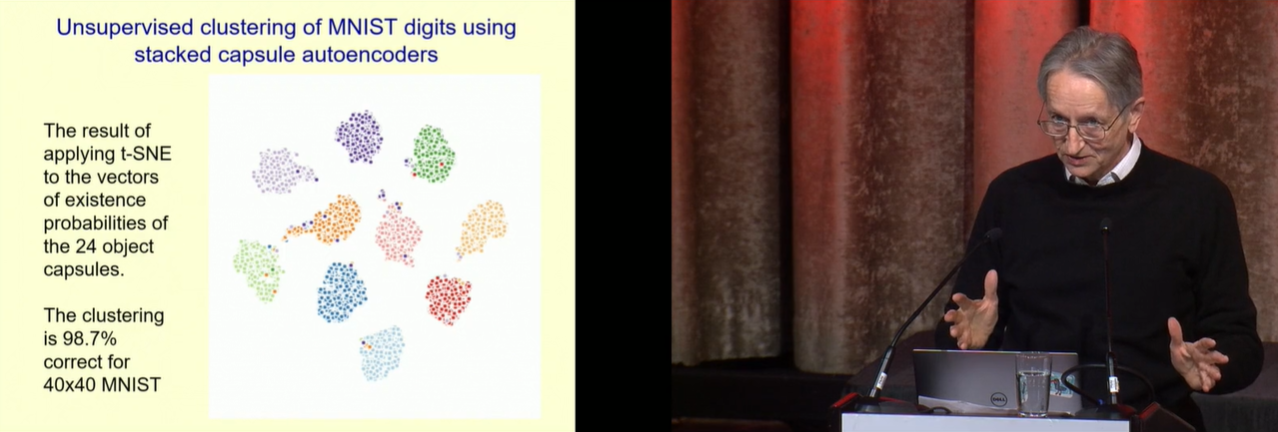

To prove the concept of their model the team tested on the MNIST dataset. Here are the results of unsupervised clustering of these MNIST digits using the stacked capsule auto-encoder method:

Additional work on this project involved dealing with backgrounds and 3d images. Hinton concluded his talk by saying that prior knowledge about coordinate transforms and parse trees is quite easy to put into a simple generative model. Inferring which higher level capsules are present is hard but a set transformer can learn to solve this problem. The complexity of the encoder is irrelevant to the complexity of the model – so if you train a big neural network to do the encoding, success is guaranteed.

Yann LeCun — Self-Supervised Learning

Yann LeCun began his talk with a definition of deep learning. He noticed, from his activity on social media, that there seems to be a confusion about what this really is. His definition is that “deep learning is building a system by assembling parameterized modules into a (possibly dynamic) computation graph, and training it to perform a task by optimising the parameters using a gradient-based method”. LeCun noted that we hear a lot today about the limitations of deep learning, but these are actually limitations of supervised learning.

LeCun explained that supervised learning works really well but it requires a lot of training data. One of the reasons neural network research diminished in the 1990s was because there wasn’t enough data at our disposal to train the models. Supervised learning excels at tasks such as natural language processing and computer vision. He pointed to a very recent example of supervised deep learning where Lample and Charton solved integrals and differential equations with a transformer architecture.

There has been a lot of excitement about reinforcement learning and it works very well for games and simulation. However, it is very slow and requires many trials to train a system to reach performance level. For example, OpenAI’s single-handed Rubik Cube took the equivalent of 10,000 human years’ worth of simulation. For real-world problems reinforcement learning requires too many trials to learn anything.

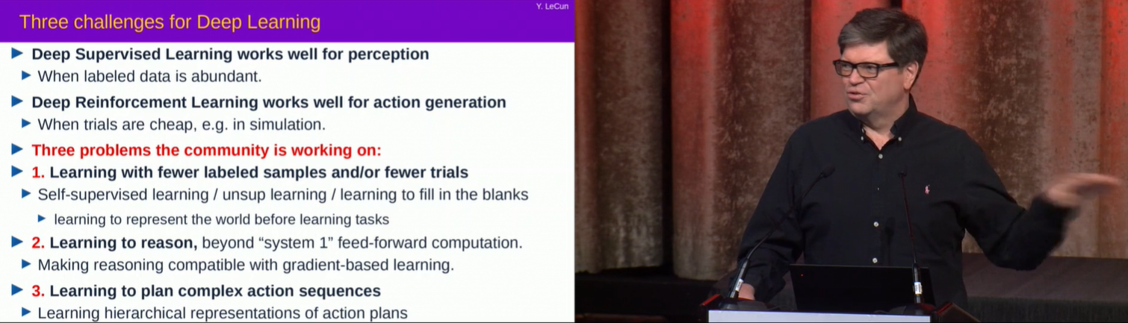

The rest of LeCun’s talk largely addressed the first of his three challenges for deep-learning; using self-supervised learning as a means to learn with fewer samples and trials. Self-supervised learning has a proven track-record in the area of natural language processing. Things become a little more tricky when applying this method to images and videos. It is much more difficult to represent uncertainty and prediction in images and videos than it is in text because the systems are not discrete. We can produce distributions over all the words in the dictionary but we don’t know how to represent distributions over all possible video frames. If you ask a neural network to predict the next frames in a video it will produce a blurry image. That is because it cannot predict exactly what will happen so it shows an average over many possible outcomes. In LeCun’s opinion this is the main issue we need to solve if we are to apply self-supervised learning to a wide variety of problems.

There are a few possible options to solve the problem but LeCun put his money on latent variable energy-based models. These are essentially like a probabilistic model and latent variables allow the system to make multiple predictions. There are a number of ways in which the model can be trained and LeCun gave a brief overview of these. As he noted, using energy minimisation models for inference is far from new as it is used in almost every probabilistic model. As an example, he presented theoretical research on using a latent variable model to train neural networks for autonomous driving.

LeCun concluded by saying that he thought self-supervised learning was the future: “the revolution will not be supervised”.

Yoshia Bengio — Deep Learning for System 2 Processing

Yoshia Bengio’s talk focussed on deep learning for system 2 processing. “System 1” and “System 2” were proposed by Daniel Kahneman as two different ways the brain forms thoughts, and his book “Thinking Fast and Slow” has been somewhat of an inspiration for Bengio. The thesis is that there are two kinds of computation that the brain is doing. The first (system 1) is labelled as intuitive, fast, unconscious and habitual. Current deep learning is very good at these things. The second (system 2) processing allows us to do things that require consciousness, things that take more time to compute, things such as planning and reasoning. This kind of thinking allows humans to deal with very novel situations that are very different from what we’ve been trained on.

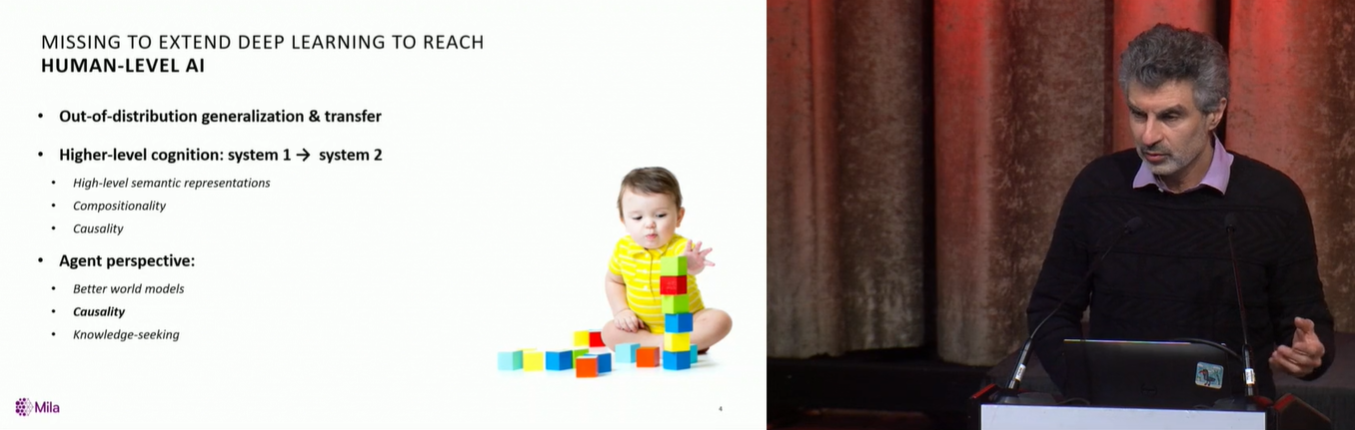

Bengio began his talk by explaining why we need to think about inductive biases and priors, mentioning the “No Free Lunch Theorem”, which tells us that there is no complete AI algorithm to cover all scenarios. Therefore, we need to consider priors for our models, but the question is: how much prior knowledge should we put in? Bengio said: “the kind of priors that evolution has enabled in human brains, some of them are very general and allow us to tackle a wide variety of tasks…deep learning already incorporates many of these priors”. One of his research areas is development of powerful priors that can bring an exponential advantage in sample complexity. The motivation behind this research is the desire to develop deep learning that can reach human level AI.

In 2017 Bengio introduced his concept of a “consciousness prior”, for learning representations of high-level concepts of the kind we manipulate with language. This was one of the priors that he touched on during his talk. Another was the important concept of out-of-distribution (OOD) generalisation – the innate ability that humans (and many animals) have enabling them to deal with new scenarios, containing many agents, without specific training.

Bengio moved on to talk about the link between current system 2 research and previous work in the area (many decades ago) using symbolic AI methods. He explained that we would like the best of both worlds: avoiding the pit-falls of classical AI rule-based symbol manipulation whilst building on the symbolic concepts, such as systematic generalisation. In order to progress researchers will need efficient large-scale learning, a grounding in system 1 and OOD generalisation. Bengio believes that the main building block that will allow us to do these kinds of things in deep learning is attention, a crucial ingredient of conscious processing. Attention allows you to focus on a few elements at a time and can be built into neural networks using transformers.

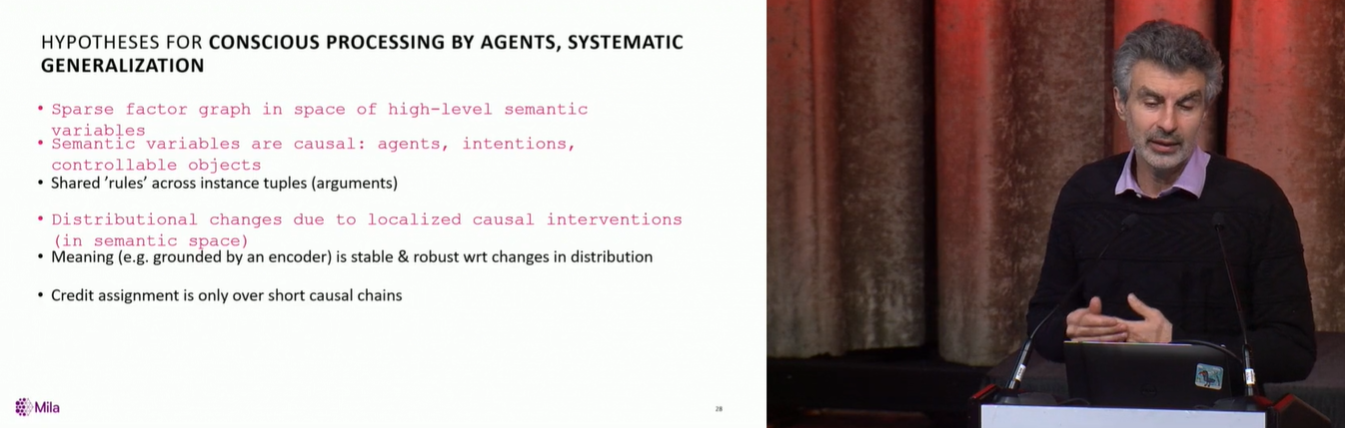

Bengio concluded his talk by reiterating some of the hypotheses (see figure below) that he and his collaborators have been exploring. He believes that these can be incorporated into machine learning systems to implement some of the computational abilities that are associated with system 2.

Further viewing:

This video by ACM provides a very brief history of the development of neural networks and features short interviews with the three prize-winners:

tags: AAAI, AAAI2020