AIhub.org

Using machine learning to identify different types of brain injuries

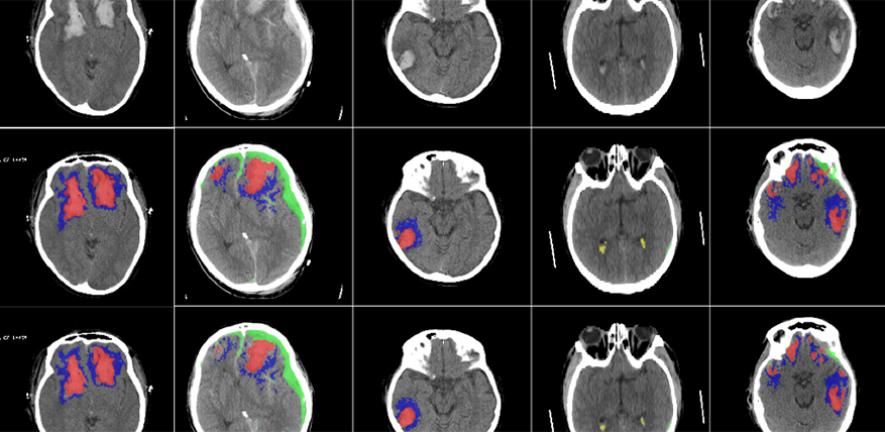

Researchers have developed an algorithm that can detect and identify different types of brain injuries. The team, from the University of Cambridge, Imperial College London and CONICET, have clinically validated and tested their method on large sets of CT scans and found that it could detect, segment, quantify and differentiate between types of brain lesions.

Their results, reported in The Lancet Digital Health, could be useful in large-scale research studies and in developing more personalised treatments for head injuries. With further validation, the method could also help in certain clinical scenarios, such as those where radiological expertise is at a premium.

Head injury is a huge public health burden around the world and affects up to 60 million people each year. It is the leading cause of mortality in young adults. When a patient has had a head injury, they are usually sent for a CT scan to check for blood in or around the brain, and to help determine whether surgery is required.

“CT is an incredibly important diagnostic tool, but it’s rarely used quantitatively,” said co-senior author Professor David Menon, from Cambridge’s Department of Medicine. “Often, much of the rich information available in a CT scan is missed, and as researchers, we know that the type, volume and location of a lesion on the brain are important to patient outcomes.”

Different types of blood in or around the brain can lead to different patient outcomes, and radiologists will often make estimates in order to determine the best course of treatment.

“Detailed assessment of a CT scan with annotations can take hours, especially in patients with more severe injuries,” said co-first author Dr Virginia Newcombe, also from Cambridge’s Department of Medicine. “We wanted to design and develop a tool that could automatically identify and quantify the different types of brain lesions so that we could use it in research and explore its possible use in a hospital setting.”

The researchers used DeepMedic, a three-dimensional convolutional neural network (CNN) with three parallel pathways that process the input at different resolutions. They trained the network on more than 600 different CT scans, showing brain lesions of different sizes and types. They then validated the tool on an existing large dataset of CT scans.
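To make the idea of parallel pathways at different resolutions concrete, here is a minimal Python sketch of how one location in a scan could be sampled at three scales. It is an illustration only, not the DeepMedic implementation: the function names, the patch size, the sampling steps (1, 3 and 5) and the use of strided sampling in place of proper image downsampling are all assumptions made for this example.

```python
def extract_patch(volume, center, size, step):
    """Sample a size x size x size patch around `center` from volume[z][y][x].

    Every `step`-th voxel is taken, so a larger step covers a wider region
    at coarser resolution. Positions outside the scan are padded with 0.0.
    Strided sampling is a simplification (assumption), not true downsampling.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the patch has a centre voxel")
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    half = size // 2
    cz, cy, cx = center
    patch = []
    for dz in range(-half, half + 1):
        plane = []
        for dy in range(-half, half + 1):
            row = []
            for dx in range(-half, half + 1):
                z, y, x = cz + dz * step, cy + dy * step, cx + dx * step
                inside = 0 <= z < depth and 0 <= y < height and 0 <= x < width
                row.append(volume[z][y][x] if inside else 0.0)
            plane.append(row)
        patch.append(plane)
    return patch


def multi_resolution_inputs(volume, center, size=3, steps=(1, 3, 5)):
    """Return one patch per pathway, all centred on the same voxel.

    The default steps are hypothetical values chosen for illustration.
    """
    return [extract_patch(volume, center, size, step) for step in steps]
```

Each pathway receives a patch with the same number of voxels, but the coarser patches cover a wider region around the same centre, so a network fed with all three would see both fine detail and surrounding context.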

The network was able to classify individual parts of each image and tell whether each part was normal or not. This could be useful for future studies of how head injuries progress, since the tool may be more consistent than a human at detecting subtle changes over time.
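Once every part of a scan has a label, quantifying lesions comes down to counting labelled voxels per class and multiplying by the volume of one voxel. The Python sketch below shows that arithmetic, along with the change between two scans of the same patient. The label codes, class names and voxel spacing are hypothetical placeholders, not values from the study.

```python
from collections import Counter


def lesion_volumes_ml(label_map, spacing_mm, class_names):
    """Return the volume in millilitres of each labelled class.

    label_map   -- nested lists label_map[z][y][x] of integer labels
    spacing_mm  -- voxel size along (z, y, x) in millimetres
    class_names -- dict mapping label code to a name (hypothetical codes)
    """
    # 1 mL = 1000 cubic millimetres
    voxel_ml = spacing_mm[0] * spacing_mm[1] * spacing_mm[2] / 1000.0
    counts = Counter(
        label for plane in label_map for row in plane for label in row
    )
    return {
        name: counts.get(label, 0) * voxel_ml
        for label, name in class_names.items()
    }


def volume_change_ml(baseline, follow_up):
    """Return follow-up minus baseline volume for each class in `baseline`."""
    return {name: follow_up[name] - baseline[name] for name in baseline}
```

For example, calling `lesion_volumes_ml` on a baseline and a follow-up label map and passing both results to `volume_change_ml` would give a per-class estimate of how much each lesion type grew or shrank between scans.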

“This tool will allow us to answer research questions we couldn’t answer before,” said Newcombe. “We want to use it on large datasets to understand how much imaging can tell us about the prognosis of patients.”

“We hope it will help us identify which lesions get larger and progress, and understand why they progress so that we can develop more personalised treatment for patients in future,” said Menon.

While the researchers are currently planning to use the CNN for research only, they say that, with proper validation, it could also be used in certain clinical scenarios, such as in resource-limited areas where there are few radiologists.

In addition, the researchers say that it could have a potential use in emergency rooms, helping get patients home sooner. Of all the patients who have a head injury, only between 10 and 15% have a lesion that can be seen on a CT scan. The tool could help identify the patients who need further treatment, so that those without a brain lesion can be sent home, although any clinical use of the tool would need to be thoroughly validated.

The ability to analyse large datasets automatically will also enable the researchers to solve important clinical research questions that have previously been difficult to answer, including the determination of relevant features for prognosis which in turn may help target therapies.

The research was supported in part by the European Union, the European Research Council, the Engineering and Physical Sciences Research Council, Academy of Medical Sciences/The Health Foundation, and the National Institute for Health Research.

Read the research article

Multi-class semantic segmentation and quantification of traumatic brain injury lesions on head CT using deep learning: an algorithm development and multicentre validation study

Miguel Monteiro, Virginia F J Newcombe, Francois Mathieu, Krishma Adatia, Konstantinos Kamnitsas, Enzo Ferrante, Tilak Das, Daniel Whitehouse, Daniel Rueckert, David K Menon, Ben Glocker

The Lancet Digital Health (2020)