AIhub.org

Michael Wooldridge: Talking to the public about AI – #EAAI2021 invited talk

Michael Wooldridge is the winner of the 2021 Educational Advances in Artificial Intelligence (EAAI) Outstanding Educator Award. He gave a plenary talk at AAAI/EAAI in February this year, focussing on lessons he has learnt in communicating AI to the public.

Who speaks for AI?

Michael’s public science journey began in 2014, when the press and social media became awash with stories about AI. He wondered who was going to respond to these often exaggerated narratives and add some nuance to the discussion. It turned out that nobody did, and there was a noticeable absence of expert opinion in the reporting. Concerned about this, Michael organised a panel the following year at the International Joint Conference on Artificial Intelligence (IJCAI) entitled “Who speaks for AI?” His aim for the session was to facilitate a discussion about who should be the voice that responds when the press report grand proclamations about AI. Not expecting it to be the most lively or well-attended of sessions, he was surprised by the turnout and by the heated nature of the debate.

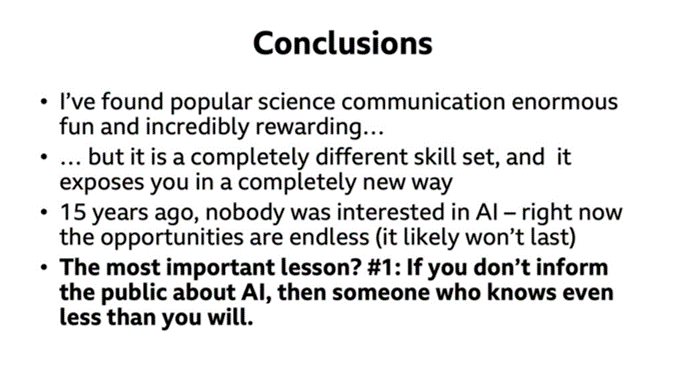

That panel event catalysed his serious involvement in science communication and he has since written two popular science books, sat on UK Government committees, given many radio and TV interviews, and spoken at popular science and literary festivals. Through all of these different media, his aim is to inform the public debate in as balanced and as transparent a way as possible.

Learning lessons from talking about AI

Michael condensed everything he has learnt about talking to non-specialists about AI into 14 lessons. During the talk he illustrated these with examples from personal experience. The first experience he mentioned was turning down an interview with the BBC, assuming that they would find someone else who was more eloquent and informed. It turned out that they didn’t, and this taught him the first lesson: “If you don’t inform the public about AI, then someone who knows even less than you will”.

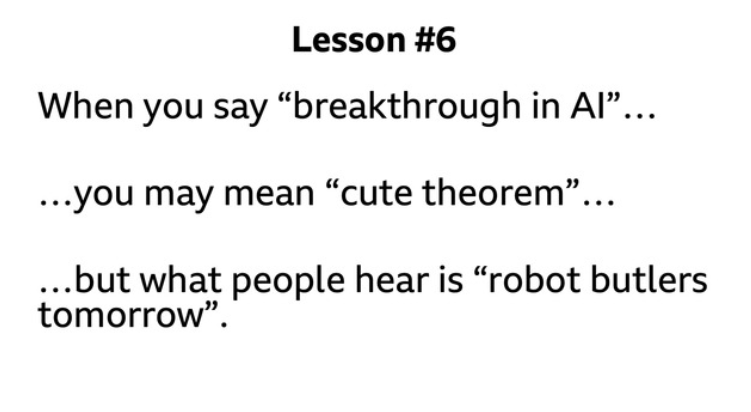

Michael went on to speak about his experiences advising the UK Government. The rise of AI has prompted governments across the world to investigate AI, and to consider national responses. Michael has given evidence at an all-party parliamentary group in the UK, and has been involved in Select Committees. He learnt a number of lessons from these parliamentary sessions. One of these was that you need to keep your message clear and simple. He also highlighted the need to take care with the language you use when talking to non-specialists. This is illustrated by lesson number six in the figure below:

Michael talked about his two popular science books and provided some guidance on writing books for non-specialist audiences. His first book (The Ladybird Expert Guide to AI) was a short-format, illustrated, historical introduction to AI. The challenge was to distil AI into 54 pages, whilst giving a balanced view and making sure there was no hype. However, he still wanted to convey a sense of wonder as to what was possible with AI.

His second book (The Road to Conscious Machines) is more ambitious. It tells the story of what AI is, the ideas that have formed AI (such as symbolic AI, behavioural AI, neural AI), and what AI can and can’t do.

One important lesson he learnt was to remember your key message, and to keep focussed on that. It is vital that the opening chapters of the book are clear and communicate your message. Also, to keep readers interested there must be a clear narrative running through the book.

Since Michael’s journey began, he and many of his colleagues have found themselves in the spotlight, trying to communicate what AI is and what the future holds. In general, he has found it an incredibly rewarding experience. He believes that researchers have a responsibility to step up and communicate the reality of AI today.

Find out more

Who speaks for AI? – following the IJCAI panel discussion (mentioned above), the participants in that debate, and others from the field, wrote about the topic in this article, published in AI Matters.

You can watch the talk in full here.

About Michael Wooldridge

Michael Wooldridge is a Professor of Computer Science and Head of Department of Computer Science at the University of Oxford, and a programme director for AI at the Alan Turing Institute. His research interests are in the use of formal techniques for reasoning about multiagent systems. He is particularly interested in the computational aspects of rational action in systems composed of multiple self-interested computational systems. As well as more than 400 technical articles on AI, he has published two popular science introductions to the field: The Ladybird Expert Guide to AI (2018), and The Road to Conscious Machines (2020).
