AIhub.org

#AAAI2022 invited talk – Cynthia Rudin on interpretable machine learning

In October 2021, Cynthia Rudin was announced as the winner of the AAAI Squirrel AI award. This award recognizes positive impacts of artificial intelligence to protect, enhance, and improve human life in meaningful ways. Cynthia was formally presented with the prize during an award ceremony at the AAAI Conference on Artificial Intelligence, following which she delivered an invited talk.

Cynthia began her talk, and set the scene for her research in interpretable AI, with the story of a project she carried out in New York City, where the goal was to maintain the power grid using machine learning.

Some parts of the grid infrastructure in the city are as old as 140 years, and this inevitably leads to failures in parts of the system. Cynthia and her team were tasked with using historical records of past grid failures to predict future ones. Many of the failures occur underground at manhole locations, and these failures may manifest themselves as fires or explosions. Typically the failure is due to wire degradation, so the ability to predict this and replace wires before a failure occurs would be highly beneficial.

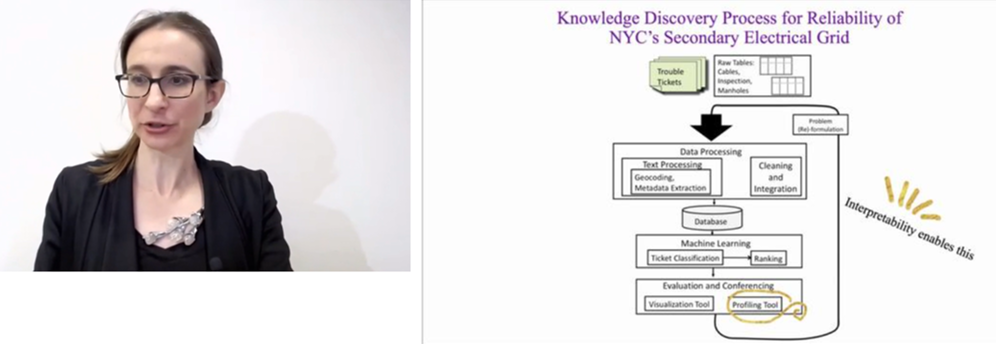

Predicting manhole events – the process. Screenshot from Cynthia Rudin’s talk.

One of the challenges with this project was the data, which took the form of written tickets. It was very difficult to extract the required information from these tickets. In addition, pockets of domain knowledge were spread across different databases. The slide above outlines the process that Cynthia and her team designed. They first had to clean the data and classify what would constitute a “serious event” at a manhole location (e.g. a fire or explosion). Their model then ranked the manholes in order of vulnerability. The final part of the process concerned the design of two tools to show what was going on in the analysis. These tools helped with interpretability and provided the electricity company with report cards that they could act on. A test of their method on unseen data revealed that if the electricity company had acted on the top 10% of the ranked list, they could have reduced the number of manhole events by up to 44% for that time period.
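To make the "top 10% of the ranked list" evaluation concrete, here is a minimal sketch of how such a figure could be computed on held-out data. It is not the team's actual pipeline: the function name, the vulnerability scores, and the event labels are all hypothetical and chosen only for illustration.

```python
def fraction_of_events_captured(scores, had_event, top_fraction=0.10):
    """Share of all events that occurred at the top-ranked locations.

    scores       -- predicted vulnerability per location (higher = more at risk)
    had_event    -- 1 if a serious event occurred there in the test period, else 0
    top_fraction -- portion of the ranked list that would be acted on
    """
    if len(scores) != len(had_event):
        raise ValueError("scores and had_event must have the same length")

    # Rank locations from most to least vulnerable.
    ranked = sorted(zip(scores, had_event), key=lambda pair: pair[0], reverse=True)

    # Number of locations in the top slice (at least one).
    k = max(1, round(len(ranked) * top_fraction))

    total_events = sum(had_event)
    if total_events == 0:
        return 0.0

    captured = sum(event for _, event in ranked[:k])
    return captured / total_events


# Toy data, invented for illustration only.
scores = [0.9, 0.1, 0.4, 0.8, 0.2, 0.3, 0.05, 0.6, 0.15, 0.7]
had_event = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]

print(fraction_of_events_captured(scores, had_event, top_fraction=0.2))
```

A measure like this rewards a model for placing the locations that later fail near the top of the list, which matches how a utility with a limited inspection budget would use the ranking.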

This project shaped how Cynthia thinks about what is important in machine learning problems. During the project she learnt that more powerful machine learning methods were not as effective as having an interpretable process. They tried a range of machine learning models, from the most basic up to the most powerful, and found no performance difference.

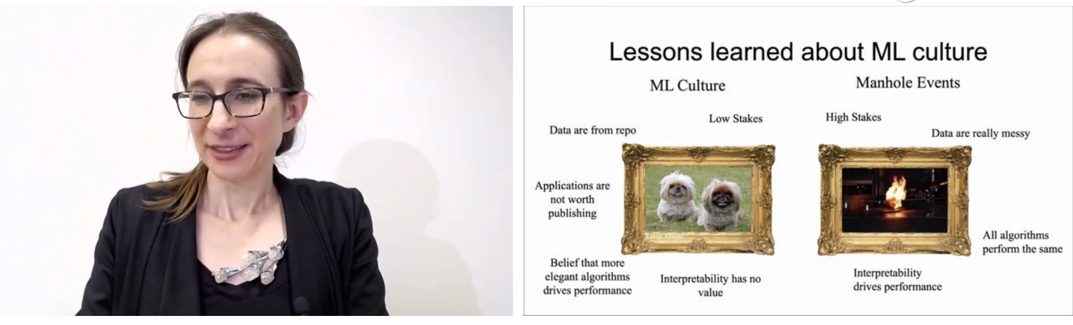

Cynthia spoke about some of the lessons she learnt about machine learning culture at that time. The stakes for the majority of problems tended to be low, and data (which was usually clean) came from repositories. This was quite a contrast to the real-world manhole problem, with its high stakes and messy data. She noted that problems arise when a low-stakes mentality is applied to high-stakes fields, such as parole decisions. Bad decisions are being made because someone typed the wrong number into a black-box model.

Lessons learned. Screenshot from Cynthia Rudin’s talk.

Something else that Cynthia realised was that people’s experiences with machine learning are wildly different depending on what type of problem they are working on. Specifically, raw data are very different from tabular data, and these two data types are like two different worlds of machine learning. For raw data, neural networks are the only technique that works right now. In contrast, with tabular data, all methods perform similarly. That includes very sparse models, such as decision trees or scoring systems.

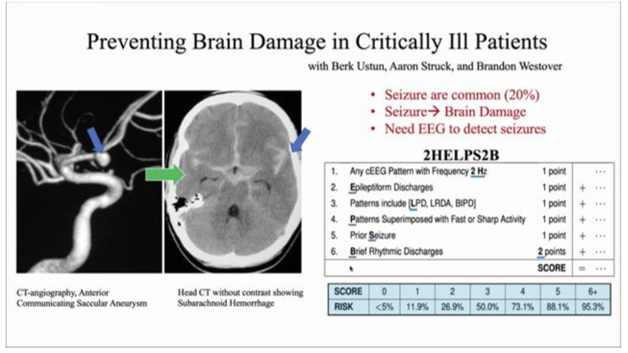

Therefore, working with tabular data gives us the opportunity to create simple models that are easy for users to interpret. An example of such a model is one that Cynthia and her team developed to aid doctors in preventing brain damage in critically ill patients, where EEG measurements are used to detect seizures. Their model, 2HELPS2B, is now widely used. It is a score-based model that is simple for doctors to use, and they can memorise it just by knowing its name. Although the end product is very simple, designing it was not. It was necessary to work out which subset of features works together to give the most effective prediction of seizures. Designing these generalised additive models is a combinatorially hard problem.
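For readers unfamiliar with score-based models, the sketch below shows the general shape of one: a handful of binary findings, each worth a small integer number of points, with the total mapped to a risk estimate. The feature names, point values, and logistic parameters are hypothetical and are not those of 2HELPS2B; the logistic mapping is also an assumption made for illustration.

```python
import math

# Hypothetical findings and point values, invented for illustration.
# These are NOT the 2HELPS2B features or weights.
POINTS = {
    "finding_a": 1,
    "finding_b": 1,
    "finding_c": 2,
}


def total_score(findings):
    """Add up the points for every finding that is present."""
    return sum(points for name, points in POINTS.items() if findings.get(name, False))


def risk_from_score(score, intercept=-2.0, slope=1.0):
    """Map an integer score to a probability with a logistic function.

    The intercept and slope are hypothetical; in practice they would be
    fitted to data together with the choice of features and points.
    """
    return 1.0 / (1.0 + math.exp(-(intercept + slope * score)))


patient = {"finding_a": True, "finding_b": False, "finding_c": True}
score = total_score(patient)
print(score, risk_from_score(score))
```

Using such a model takes only addition and a lookup, which is what makes it practical at the bedside. The hard part described in the talk is the search that produces it: choosing which few features to include and which integer points to assign.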

2HELPS2B model. Screenshot from Cynthia Rudin’s talk.

Cynthia’s lab does a lot of work on sparse generalised additive models. They have designed different models for various medical applications. These include models for ADHD screening, sleep apnea screening, and for the clock drawing test to detect dementia. You can read their latest work on sparse generalised additive models, which was recently published on arXiv.

To close, Cynthia called on the AI community to accept applied AI research more fully into the fold. She suggested application-focused tracks at major conferences as a good starting point.

You can watch the talk in full here.

tags: AAAI, AAAI2022