AIhub.org

AIhub coffee corner: Is AI-generated art devaluing the work of artists?

The AIhub coffee corner captures the musings of AI experts over a short conversation. This month, we tackle the topic of AI-generated art and what this means for artists.

Joining the discussion this time are: Tom Dietterich (Oregon State University), Sabine Hauert (University of Bristol), Sarit Kraus (Bar-Ilan University), Michael Littman (Brown University), Lucy Smith (AIhub), Anna Tahovská (Czech Technical University), and Oskar von Stryk (Technische Universität Darmstadt).

Sabine Hauert: This month our topic is AI-generated art. There are lots of questions relating to the value of the art that’s generated by these AI systems, whether artists should be working with these tools, and whether that devalues the work that they do.

Lucy Smith: I was interested in this case, whereby Shutterstock is now going to sell images created exclusively by OpenAI’s DALL-E 2. They say that they’re going to compensate the artists whose work they used in training the model, but I don’t know how they are going to work out how much each training image has contributed to each created image that they sell. Their model would have to be quite transparent.

Sabine: Do they have a way of reverse engineering that? You put a prompt in the system, it generates an image, and it tells you which training images that output drew on? Do they have that transparency?

Tom Dietterich: It’s a very active area of research, as far as I can tell. [For example: this blog post by Anna Rogers about the problem, and this thread, and this web site show how to search the training corpus to find the relevant supporting training examples. There is a similar effort looking at models of code.] There are definitely methods where they take the generated image, or the internal activations, and they search against the training data and find the most similar ones. So, it’s a post-hoc attribution. Obviously, we want this in general for debugging, at a minimum. If we think about some of these other AI systems, like the ones that write software, when they make a mistake, we want to figure out why. So, it’s a hugely urgent thing to be able to attribute success and failure back to the training data.
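[Editor's illustration: the post-hoc attribution Tom describes, searching the training data for the items most similar to a generated image, can be sketched roughly as a nearest-neighbour lookup over embedding vectors. This is only a minimal sketch under stated assumptions: the embeddings here are random toy vectors, and the function name `top_k_similar` is hypothetical. It is not the method used by Shutterstock, OpenAI, or any of the projects mentioned above.]

```python
import numpy as np


def top_k_similar(query, train_embeddings, k=3):
    """Return indices and cosine similarities of the k training rows closest to `query`.

    Hypothetical helper for illustration; real attribution systems are more involved.
    """
    # Normalise both sides so that a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    t = train_embeddings / np.linalg.norm(train_embeddings, axis=1, keepdims=True)
    sims = t @ q
    # Sort by descending similarity and keep the first k entries.
    order = np.argsort(-sims)[:k]
    return order, sims[order]


# Toy data (an assumption): 100 made-up "training image" embeddings of dimension 16.
rng = np.random.default_rng(0)
train = rng.normal(size=(100, 16))

# Pretend the generated image is a slightly perturbed copy of training item 42.
generated = train[42] + 0.01 * rng.normal(size=16)

idx, scores = top_k_similar(generated, train)
print(idx, scores)
```

In this toy setup the closest match should be the training item the "generated" vector was derived from. In practice the embeddings would come from an image encoder or from the model's internal activations, and a high similarity score only suggests, rather than proves, that a training image influenced the output.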

Sabine: I personally don’t think it devalues the work of the artists. I think people will still buy their work because it’s more than just an image, it’s what the artist put in, the meaning, the talent, the skill, that you buy into. And sometimes that’s what makes the beauty of it. It’s what’s behind the painting, rather than the painting or the image itself. So, I think people will still value that. If anything, we’ll value real humans more. If people can see that art can be generated by AI just a little bit too easily, to a certain extent, they might value the complexity or hard work of handmade things a little bit more. I’d like to think that that’s the case. Now, there are people who work in marketing who generate logos or quick images for publications for newspapers. That can be done, to a certain extent, by other tools, so maybe in that sense it’s not the art that’s devalued, it’s the profession in which people generate specific pieces of work for mass consumption.

Tom: We’re seeing people reporting success using language models as brainstorming tools, either visual or just textual. There’s a guy I follow on Twitter, Aaron Hertzmann, who’s a computational art person and he’s got some interesting blog posts on the topic. Basically, he focuses on the intent of the artist rather than the object – just like Andy Warhol and his pop art. In a Twitter conversation, I suggested that people will go meta on this and produce DALL-E-based things, and someone had already done a DALL-Ean version of Andy Warhol pop art. Human creativity will put layers on top of the layers of the technology. It’s always happened that way, and it’s always been a big disruption. There’s always been a big soul searching about philosophy of art.

Sabine: I wonder how much cherry picking has been going on with these images. My PhD students have been putting their theses in, and the images aren’t that useful. They’ll give you a humanoid image merged with all sorts of funky things, even though we’re not working on humanoids. I think we might be expecting too much of these technologies at this stage, just because there’s been so much cherry picking of the cool images generated, rather than looking at what comes out more broadly.

Michael Littman: My experience with DALL-E 2 is that it is fun and useful, but far from professional. Artists are still needed to create high-quality work. Photographers didn’t eliminate painters.

Tom: But photography did have a big impact on painting.

Michael: True.

Tom: Somebody made a very clever statement on Twitter, that the big revolution will be in hotel room art. We can only improve!

Sarit Kraus: Applied to mass production, this AI-generated art will work. But who would like to put it on their wall? That is a different question.

Michael: I’m decorating my new office in DALL-E 2 images…

Sabine: That’s true, you can completely innovate, right? If you can constantly generate art, then you don’t need a static painting, you can have a dynamic painting. It’s always updating, being retrained, you get new images that come out. So, art might be just very different in how it’s presented and what it looks like.

Sabine: Has anyone seen any beautiful examples of AI art?

Tom: I like the work of Helena Sarin (#NeuralBricolage). She uses LLMs to generate images and then renders them in ceramics.

Oskar von Stryk: I was also looking at the entertaining posts which came out of the use of AI for creating art. In our group we have tried it to create pictures of scenes which we use for training robotic vision in cases where we didn’t have enough training pictures. But this was just a preliminary experiment.

Oskar: Regarding art and AI, in the movie I, Robot, which came out in 2004, there was also a discussion about art. The scene I am referring to contains this quote: Del Spooner (Will Smith’s character): “Can a robot write a symphony? Can a robot turn a canvas into a beautiful masterpiece?” Sonny (the robot): “Can you?” Our discussion today reminded me of this scene. 18 years after the movie came out, it is more relevant than ever.

Sabine: And the “can you?” is a good question, because I cannot! But maybe now I could, with these tools. I feel like I’m creative, I would love to be able to paint, but I can’t. But maybe now I can do something that represents what’s in my head.

Tom: There’s also a lot of research now on interactive editing, linguistically or with a bunch of different tools. Not only for these images, but also for code – people are really taking up using these systems for code. When it doesn’t do exactly what you want, then you need to highlight the lines and say “no, I meant, blah blah blah”. So, by iterating, Sabine, you’ll be able to do much better.

Sabine: I wonder what kids will learn in school, in art class. Hopefully they’ll still do pen and paper paintings but maybe there’s a cool world where you get them excited about technology by doing a DALL-E class in their early-stage art classes.

Anna Tahovská: Is AI-generated art copyrighted?

Michael: I used some images from DALL-E 2 in my book (currently in production). The MIT Press was very confused about how to get permission to use the images. Ultimately, OpenAI made it easy because they aren’t claiming rights to the specific images.

Sabine: I guess the problem is, if you go back to the original and note that there’s a little corner of that image that obviously comes from an artist’s picture, that’s going to be tricky.

Tom: People have pointed out that sometimes DALL-E generates the watermark for the source.

Sabine: Moving on to talk about the artists, many of them were a bit distressed by all of this AI-generated art. Should we do something to protect artists against these technologies?

Tom: Aaron Hertzmann gave a talk at SIGGRAPH, evidently about this question.

Oskar: I think all of this challenges us to think about the tasks that humans can do and can’t do, and what tasks machines can do and can’t do. On the robotic side, robotics is successful only for repetitive, frequent, and non-complex tasks. If you look at deep learning, this is based on expertise which is coded into the training data. In general, any job develops further with the advance of technology. The question is, what would the artist of the future look like, using these tools? Humans can create and bring uniqueness into this, which the algorithms, at least in the foreseeable future, cannot do. I think this is the challenge to be solved.

Sabine: We had a similar conversation with the Olympics, I remember, about robots and humans, and the fact that humans do these amazing sports and push really hard. There’s something similar with artists.

Oskar: What I’m trying to say is that, for me, it is not the algorithm or the robot, or the human, it’s both together and how they might work together in the future.

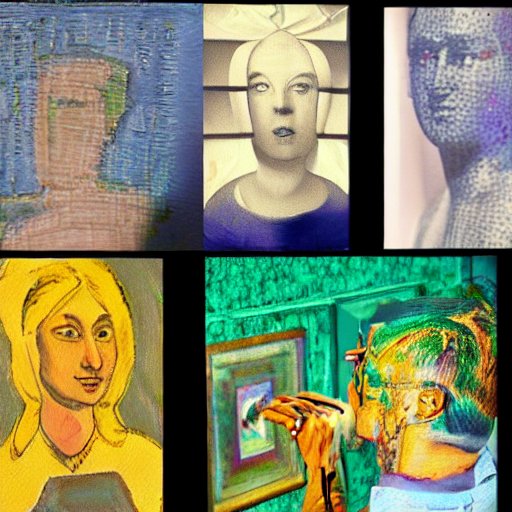

Following our discussion, we used Stable Diffusion and Craiyon (formerly DALL-E Mini) to generate images for the prompt “AI experts discussing AI-generated art”. These are the first images that were generated by each:

Image generated by Craiyon. Prompt: “AI experts discussing AI-generated art”.

Image generated by Stable Diffusion Online. Prompt: “AI experts discussing AI-generated art”.
