AIhub.org

Experimenting with generative AI in the classroom

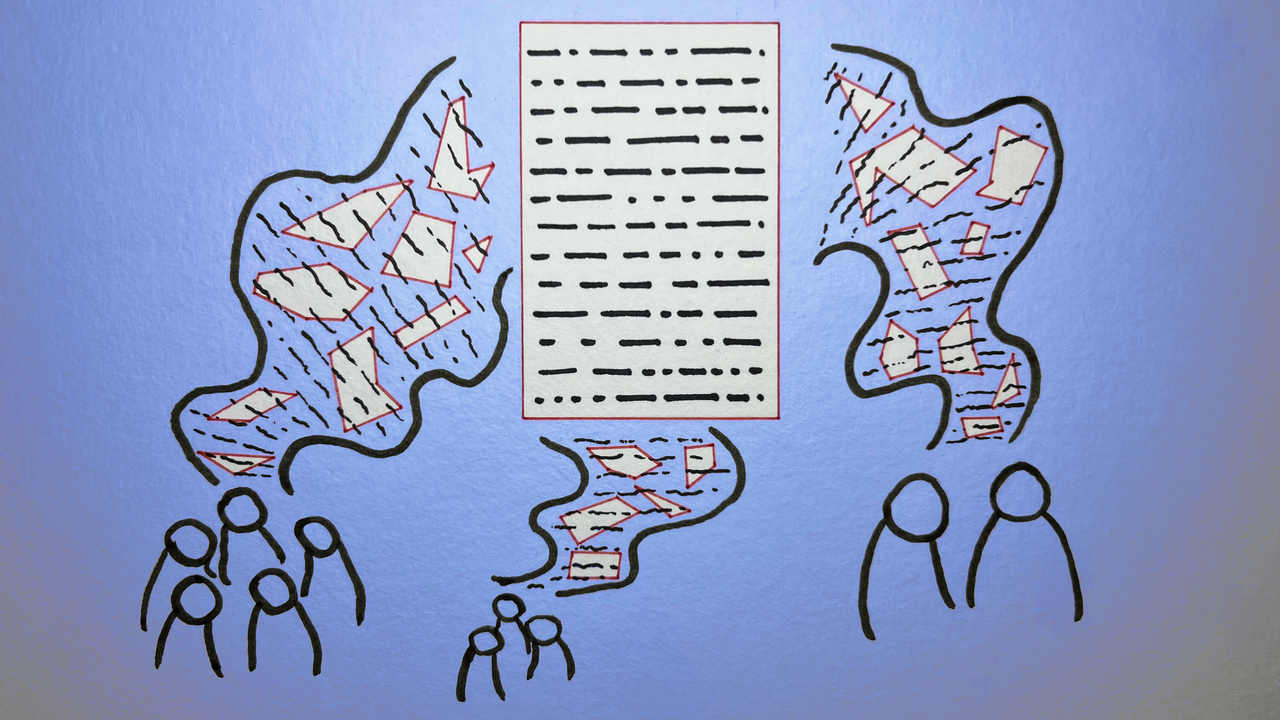

Yasmine Boudiaf & LOTI / Better Images of AI / Data Processing / Licenced by CC-BY 4.0

By Darren McAlmont

As artificial intelligence (AI) challenges us to imagine new ways of doing and being, Dr Marcel O’Gorman, professor of English Language and Literature, embraces emerging technologies and applies them in his classroom teaching.

O’Gorman has published widely about the impacts of technology, and his most recent research focuses on how critical and inclusive design methods might help tackle some of the moral and ethical issues faced by contemporary technoculture.

O’Gorman recently wrapped up teaching a fourth-year undergraduate course on techno-critical writing and design that focused on key issues around responsible innovation, such as algorithmic bias, conflict minerals and the colonial practices of big tech on the global stage. Students applied what they learned by writing and designing projects throughout the course.

“They wrote stories in ChatGPT that tested the AI for gender bias. They generated images in DALL-E 2 that traced a racist history in the AI’s training data,” O’Gorman says. “The point of all this was to provide a way for students to respond critically and creatively to big problems in tech that can leave us all feeling a little helpless.”

Many of the students from the course will cross the stage at the Fall 2023 Convocation, and O’Gorman shares some of the skills the graduating students can leverage as they start their careers. “They have learned how to co-produce knowledge with generative AI, but they have also learned its limitations,” he says.

“Maybe the biggest gain was the collaborative experience the course offered these students. This class was run like an experimental design studio, and the students really built a safe and brave community out of it. It was a joy to watch it all come together,” O’Gorman continues.

The course culminated in the design and production of a mega-zine. In addition, students participated in the Tech Stewardship Practice Program, which equipped them with skills and a credential they can leverage on the job market.

Even though O’Gorman was the visionary behind the course, he emphasizes that the students were the real stars. “Kathleen Ball, Emma Flaxman, Amy Loggan, Karen Ma, Tanishi Naik, Chinye Obiago, Stephanie Powers, Fatima Siddiqui and Monica Terzic all worked collaboratively to put together a publication they should be very proud of,” he says.

“Each class felt like a community get-together, in which everyone had the equal opportunity to share and expand on the newly learned concepts and ideas,” Obiago adds. “It was one of the most enjoyable courses I have taken in my undergraduate career. The readings were very informative and opened my eyes to a lot of issues and concerns within the world of technology from an ethics standpoint.”

Asked about the widely expressed concern that AI technology threatens human jobs, O’Gorman insists that the technology is primarily a threat to genres of writing that are technical and bureaucratic. “What it can’t do is write on a human scale, and there will always be a need for that kind of writing because it builds trust,” he explains.

“Right now, I’m more concerned about the kinds of jobs it’s creating, not the ones it’s replacing. Do my students who love writing want minimum wage jobs training an AI how to write better than them? Probably not. A bigger concern is people in places like Kenya who work as data labellers, getting paid $2 an hour to censor torture and child abuse content from training data.”

For this reason, O’Gorman urges a broader consideration of AI technology, one that looks beyond its threat to human jobs to the colonial impact of these new tools. “As the technology continues to grow, the focus should be not just on labour but on human rights,” he says.

As for what’s next, O’Gorman says that he’s teaching a version of the course at the graduate level and is using generative AI in all his courses, including one in engineering communication. “I strongly believe that it’s possible to be a critical thinker and designer without ‘gumming up the works’ of the innovation process,” O’Gorman says. “We need more innovators who are trained to think critically about the tech they are developing.”