AIhub.org

#RoboCup2024 – daily digest: 21 July

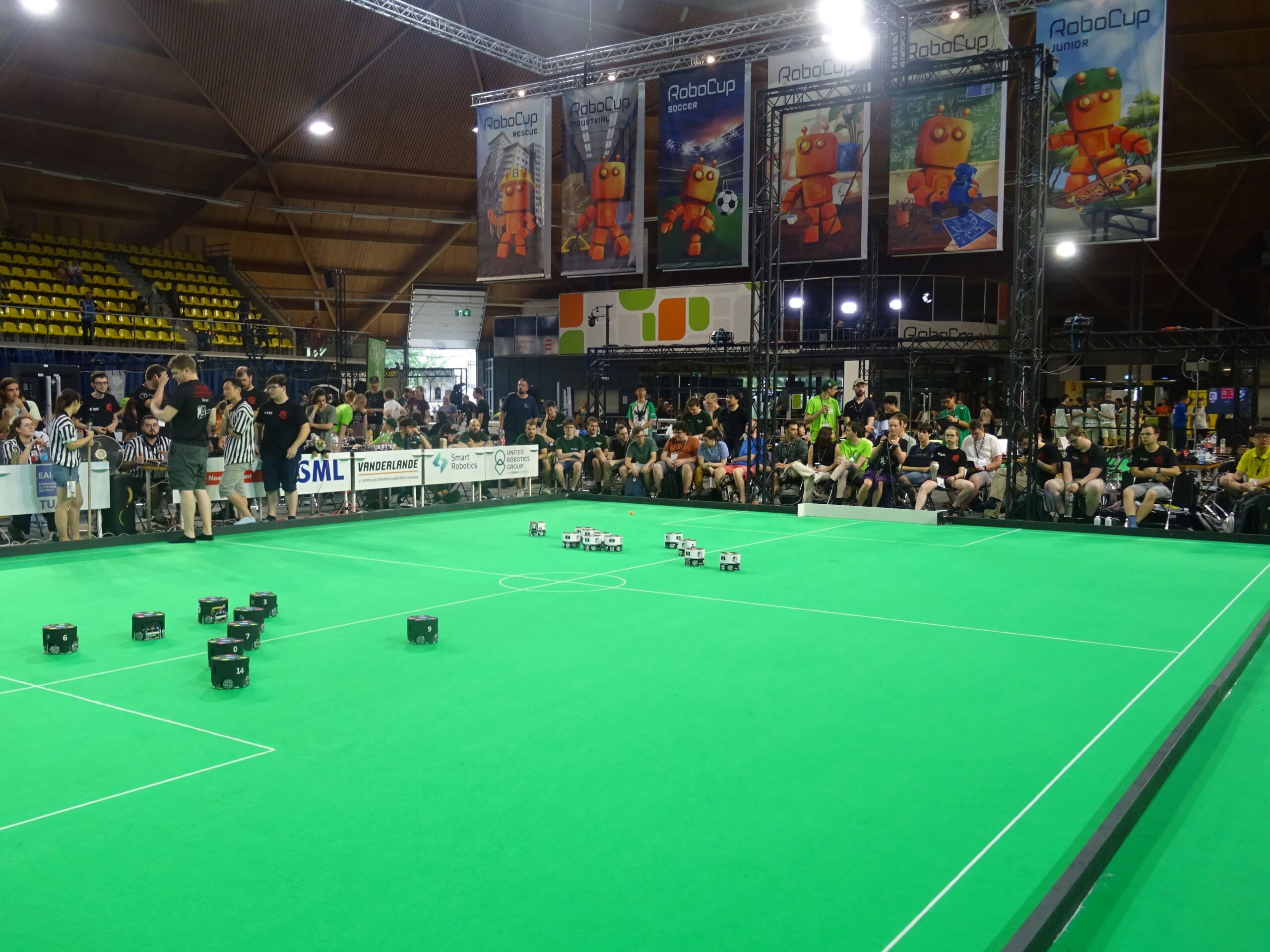

A break in play during a Small Size League match.

Today, 21 July, saw the competitions draw to a close in a thrilling finale. In the third and final of our round-up articles, we provide a flavour of the action from this last day. If you missed them, you can find our first two digests here: 19 July | 20 July.

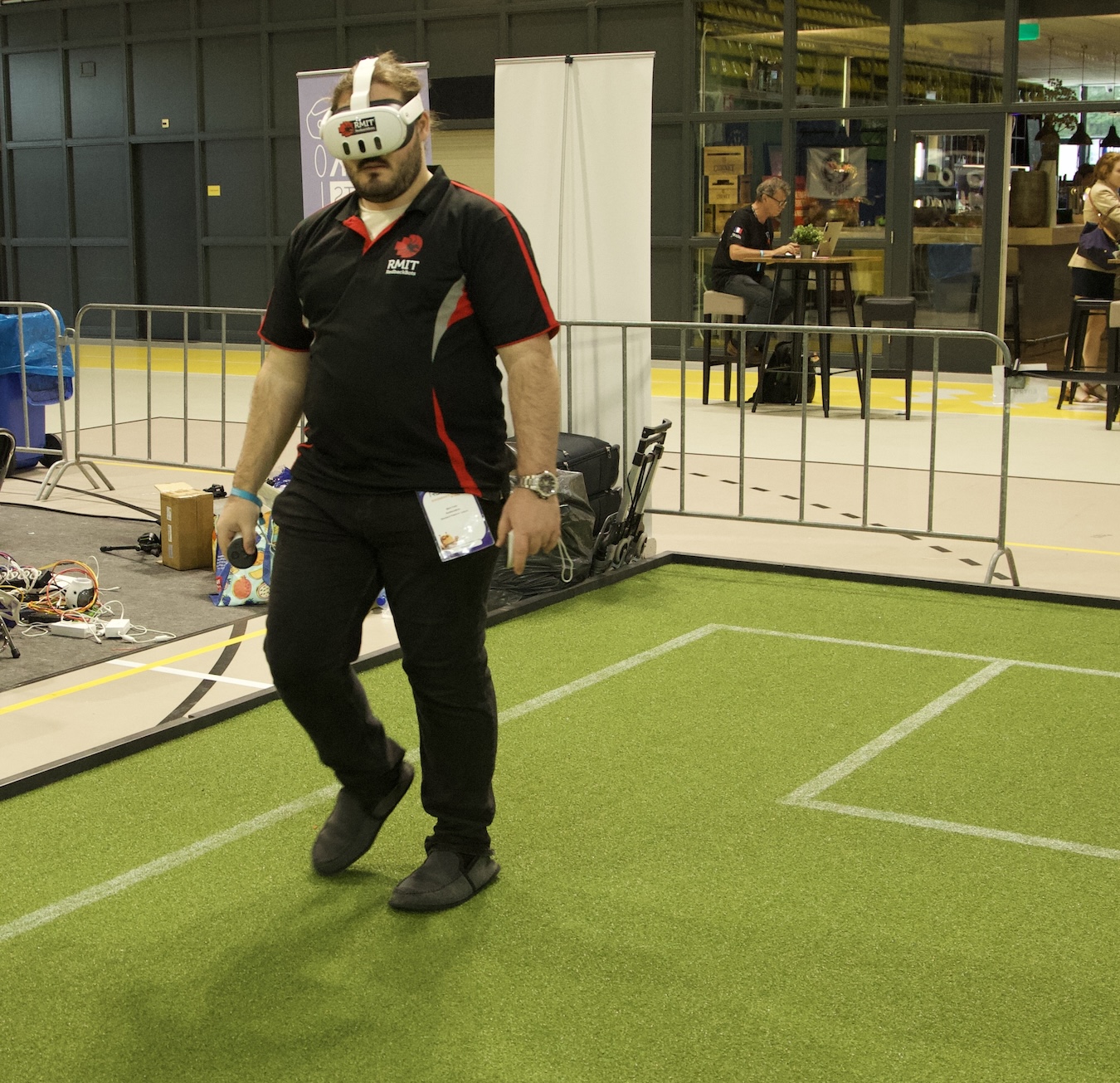

My first port of call this morning was the Standard Platform League, where Dr Timothy Wiley and Tom Ellis from Team RedbackBots, RMIT University, Melbourne, Australia, demonstrated an exciting advancement that is unique to their team. They have developed an augmented reality (AR) system with the aim of enhancing the understanding and explainability of the on-field action.

The RedbackBots travelling team for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Field, Jasper Avice Demay). Photo credit: Dr Timothy Wiley.

Timothy, the academic leader of the team, explained: “What our students proposed at the end of last year’s competition, to make a contribution to the league, was to develop an augmented reality (AR) visualization of what the league calls the team communication monitor. This is a piece of software that gets displayed on the TV screens to the audience and the referee, and it shows you where the robots think they are, information about the game, and where the ball is. We set out to make an AR system of this because we think it’s so much better to view it overlaid on the field. What the AR lets us do is project all of this information live on the field as the robots are moving.”

The team has been demonstrating the system to the league at the event, with very positive feedback. In fact, one of the teams found an error in their software during a game whilst trying out the AR system. Tom said that they’ve received a lot of ideas and suggestions from the other teams for further developments. This is one of the first (if not the first) AR systems to be trialled across the competition, and the first time one has been used in the Standard Platform League. I was lucky enough to get a demo from Tom and it definitely added a new level to the viewing experience. It will be very interesting to see how the system evolves.

Mark Field setting up the Meta Quest 3 to use the augmented reality system. Photo credit: Dr Timothy Wiley.

From the main soccer area I headed to the RoboCupJunior zone, where Rui Baptista, an Executive Committee member, gave me a tour of the arenas and introduced me to some of the teams that have been using machine learning models to assist their robots. RoboCupJunior is a competition for school children, and is split into three leagues: Soccer, Rescue and OnStage.

I first caught up with four teams from the Rescue league. Robots identify “victims” within re-created disaster scenarios, varying in complexity from line-following on a flat surface to negotiating paths through obstacles on uneven terrain. There are three different strands to the league: 1) Rescue Line, where robots follow a black line which leads them to a victim, 2) Rescue Maze, where robots need to investigate a maze and identify victims, 3) Rescue Simulation, which is a simulated version of the maze competition.

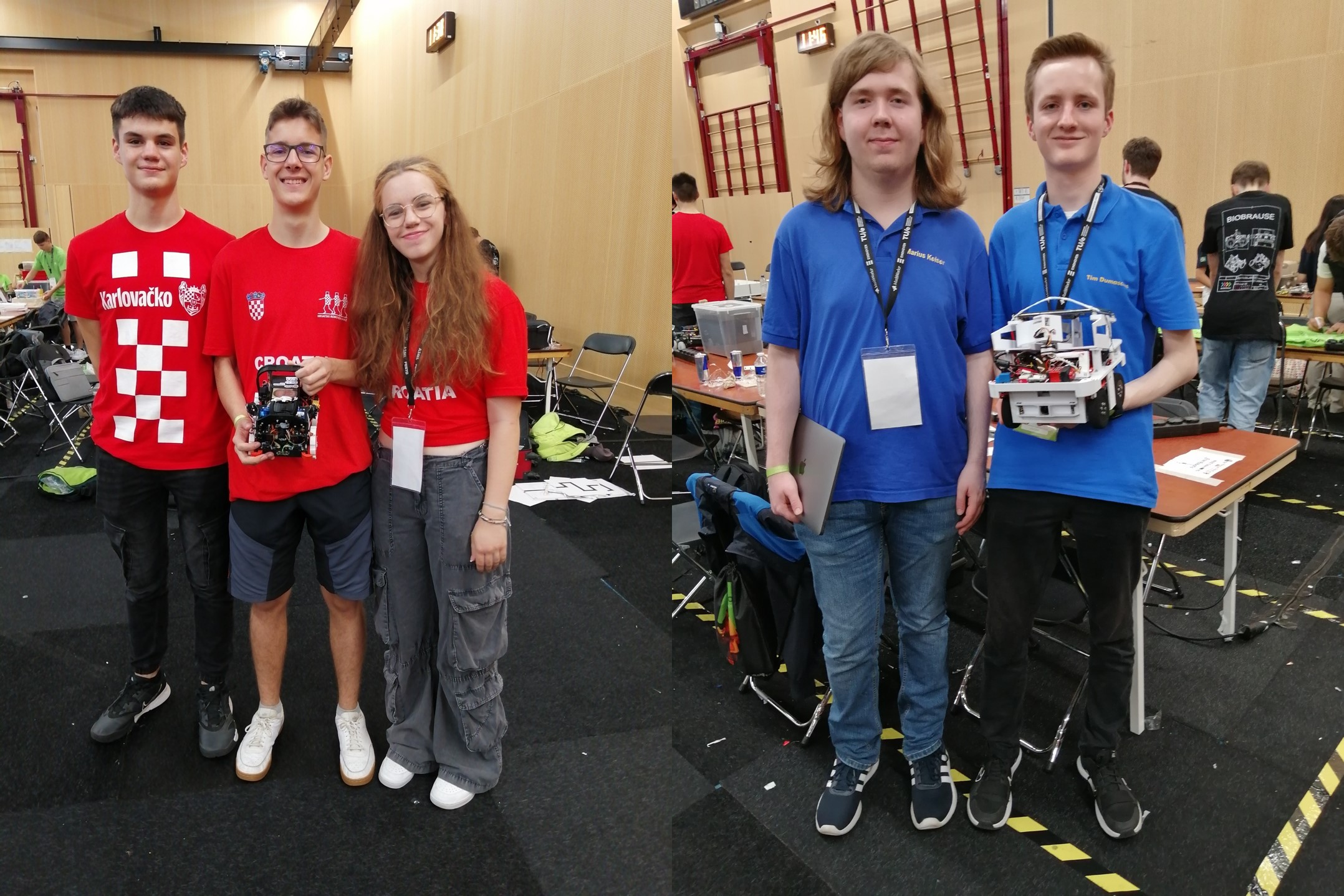

Team Skollska Knijgia, taking part in the Rescue Line, used a YOLO v8 neural network to detect victims in the evacuation zone. They trained the network themselves with about 5000 images. Also competing in the Rescue Line event were Team Overengeniering2. They also used YOLO v8 neural networks, in this case for two elements of their system. They used the first model to detect victims in the evacuation zone and to detect the walls. Their second model is utilized during line following, and allows the robot to detect when the black line (used for the majority of the task) changes to a silver line, which indicates the entrance of the evacuation zone.

Left: Team Skollska Knijgia. Right: Team Overengeniering2.

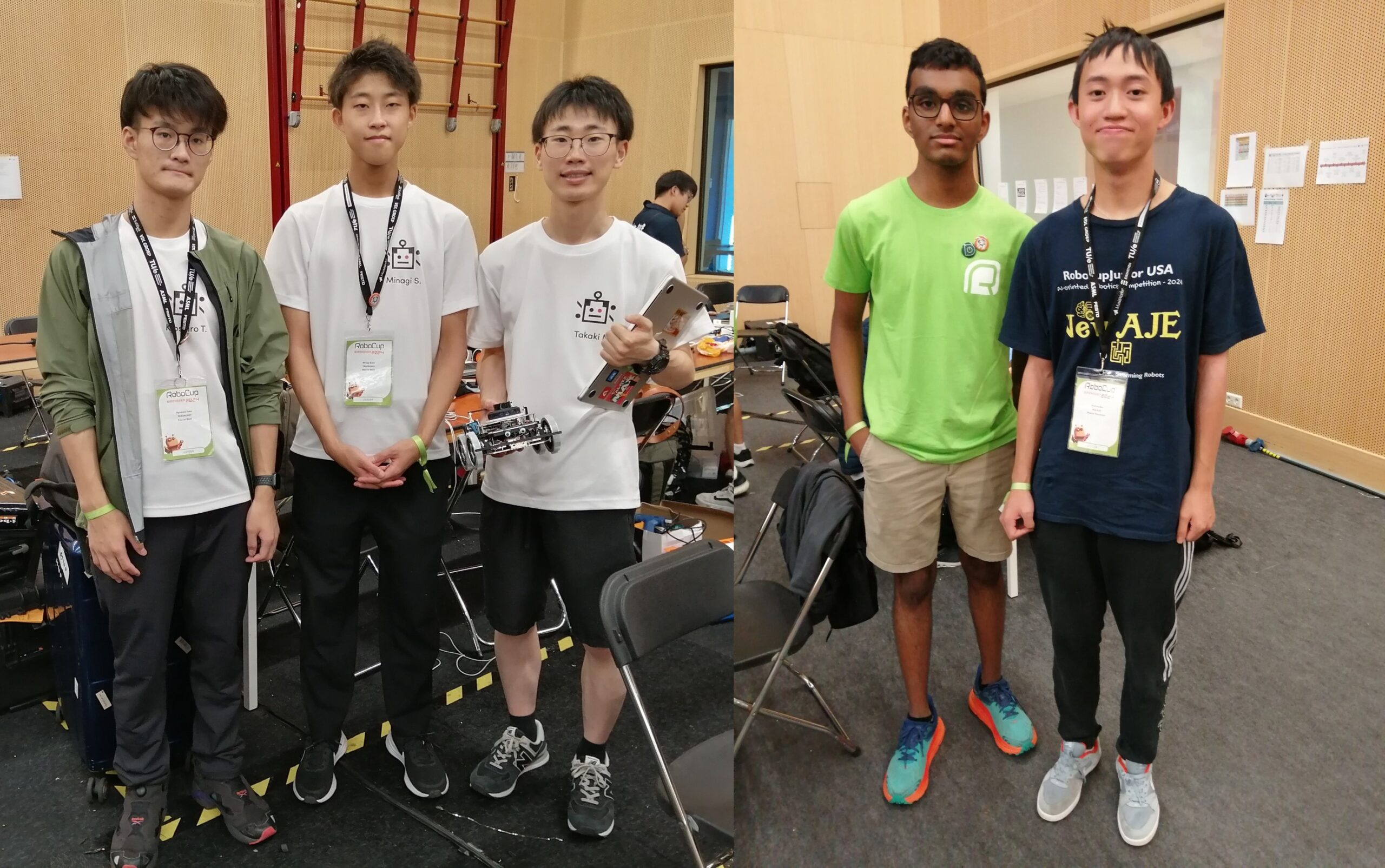

Team Tanorobo! were taking part in the maze competition. They also used a machine learning model for victim detection, training on 3000 photos for each type of victim (these are denoted by different letters in the maze). They also took photos of walls and obstacles, to avoid mis-classification. Team New Aje were taking part in the simulation contest. They used a graphical user interface to train their machine learning model, and to debug their navigation algorithms. They have three different navigation algorithms, with varying computational cost, which they can switch between depending on where the robot is in the maze and how complex that part of the maze is.

Left: Team Tanorobo! Right: Team New Aje.
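
To give a flavour of what switching between navigation algorithms of differing cost could look like, here is a minimal Python sketch. It is not Team New Aje's code: the grid maze, the tier names (`corridor`, `local`, `global`) and the rule of counting exits from the current cell are all assumptions made for illustration. Only the selection logic and the most expensive tier (a breadth-first search) are shown.

```python
from collections import deque

# Hypothetical maze: '#' is a wall, '.' is free space.
MAZE = [
    "#######",
    "#..#..#",
    "#.##.##",
    "#.....#",
    "#######",
]


def free_neighbours(maze, cell):
    """Return the free cells directly north, south, west and east of `cell`."""
    r, c = cell
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [
        (nr, nc)
        for nr, nc in candidates
        if 0 <= nr < len(maze) and 0 <= nc < len(maze[nr]) and maze[nr][nc] == "."
    ]


def pick_planner(maze, cell):
    """Choose a planner tier from the number of exits at the current cell."""
    exits = len(free_neighbours(maze, cell))
    if exits <= 2:
        return "corridor"  # cheapest tier: simply follow the passage
    if exits == 3:
        return "local"     # medium tier: decide at a simple junction
    return "global"        # most expensive tier: search the whole map


def bfs_path(maze, start, goal):
    """Most expensive tier: shortest path found by breadth-first search."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        for nxt in free_neighbours(maze, cell):
            if nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # goal not reachable
```

In this sketch a robot would call `pick_planner` at each step and only pay for `bfs_path` in open areas, keeping the cheaper tiers for corridors and simple junctions.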

I met two of the teams who had recently presented in the OnStage event. Team Medic’s performance was based on a medical scenario, and included two machine learning elements. The first was voice recognition, for communication with the “patient” robots, and the second was image recognition to classify x-rays. Team Jam Session’s robot reads American Sign Language symbols and uses them to play a piano. They used the MediaPipe detection algorithm to find different points on the hand, and random forest classifiers to determine which symbol was being displayed.

Left: Team Medic Bot. Right: Team Jam Session.
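
For readers curious how hand points can be turned into classifier input, the sketch below shows one common preprocessing step. It is an assumption, not something Team Jam Session described: the function name `normalise_landmarks` is hypothetical, and only 2D coordinates are used. MediaPipe Hands reports 21 landmarks per hand with the wrist at index 0, so the sketch shifts every point relative to the wrist and rescales by the hand's size. The resulting feature vector no longer depends on where the hand is in the frame or how close it is to the camera, and could then be passed to a classifier such as a random forest.

```python
import math


def normalise_landmarks(points):
    """Turn (x, y) hand landmarks into a position- and scale-invariant vector.

    `points` is a list of (x, y) tuples with the wrist first, matching the
    landmark order used by MediaPipe Hands.
    """
    wrist_x, wrist_y = points[0]
    # Translate so that the wrist sits at the origin.
    shifted = [(x - wrist_x, y - wrist_y) for x, y in points]
    # Scale by the largest wrist-to-point distance (fall back to 1.0 if all
    # points coincide, to avoid dividing by zero).
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0
    features = []
    for x, y in shifted:
        features.extend((x / scale, y / scale))
    return features
```

Each sign's training examples would be converted this way before fitting the classifier, and the same function applied to live frames at performance time.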

Next stop was the humanoid league where the final match was in progress. The arena was packed to the rafters with crowds eager to see the action.

Standing room only to see the Adult Size Humanoids.

The finals continued with the Middle Size League, with the home team Tech United Eindhoven beating BigHeroX by a convincing 6-1 scoreline. You can watch the livestream of the final day’s action here.

The grand finale featured the winners of the Middle Size League (Tech United Eindhoven) against five RoboCup trustees. The humans ran out 5-2 winners, their superior passing and movement too much for Tech United.
