AIhub.org

Human-AI collaboration in physical tasks

By Riku Arakawa

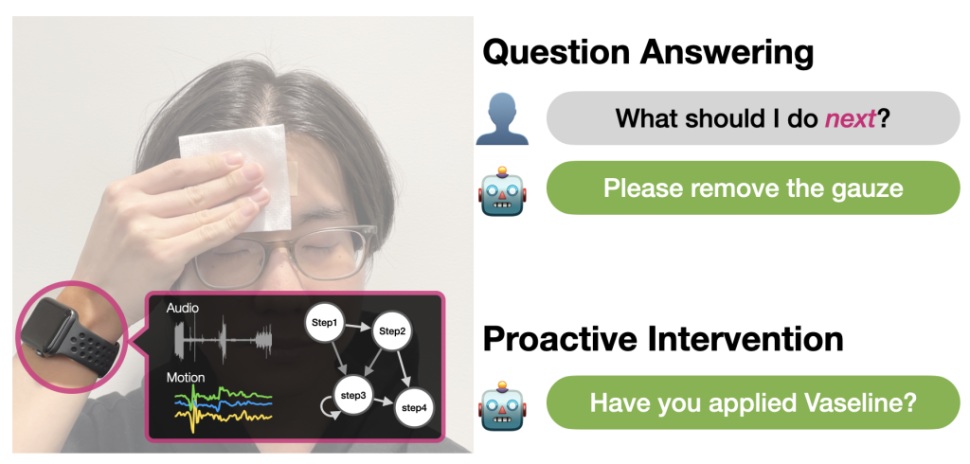

TL;DR: At SmashLab, we’re creating an intelligent assistant that uses the sensors in a smartwatch to support physical tasks such as cooking and DIY. This blog post explores how we use scene understanding that is less intrusive than cameras to enable helpful, context-aware interactions that support users as they carry out tasks in their daily lives.

Thinking about AI assistants for tasks beyond just the digital world? Every day, we perform many tasks, including cooking, crafting, and medical self-care (like the COVID-19 self-test kit), which involve a series of discrete steps. Accurately executing all the steps can be difficult; when we try a new recipe, for example, we might have questions at any step and might make mistakes by skipping important steps or doing them in the wrong order.

This project, Procedural Interaction from Sensing Module (PrISM), aims to support users in executing these kinds of tasks through dialogue-based interactions. By using sensors such as a camera, wearable devices like a smartwatch, and privacy-preserving ambient sensors like a Doppler Radar, an assistant can infer the user’s context (what they are doing within the task) and provide contextually situated help.

To achieve human-like assistance, we must consider many things: How does the agent understand the user’s context? How should it respond to users’ spontaneous questions? When should it decide to intervene proactively? And most importantly, how do human users and AI assistants evolve together through everyday interactions?

While different sensing platforms (e.g., cameras, LiDAR, and Doppler radars) can be used in our framework, we focus on a smartwatch-based assistant in the rest of this post. We chose the smartwatch for its ubiquity, its minimal privacy concerns compared to camera-based systems, and its ability to monitor a user across various daily activities.

Tracking User Actions with Multimodal Sensing

Human Activity Recognition (HAR) is a technique for identifying a user’s activity context from sensor data. For example, a smartwatch has motion and audio sensors that can detect daily activities such as hand washing and chopping vegetables [1]. However, out of the box, state-of-the-art HAR struggles with noisy data and with the less-expressive actions that are often part of daily life tasks.

PrISM-Tracker (IMWUT’22) [2] improves tracking by adding state transition information, that is, how users transition from one step to another and how long they usually spend at each step. The tracker uses an extended version of the Viterbi algorithm [3] to stabilize the frame-by-frame HAR prediction.

As shown in the above figure, PrISM-Tracker improves the accuracy of frame-by-frame tracking. Still, the overall accuracy is around 50-60%, highlighting the challenge of using just a smartwatch to precisely track the procedure state at the frame level. Nevertheless, we can develop helpful interactions out of this imperfect sensing.
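To make the smoothing idea concrete, here is a minimal sketch of a plain Viterbi decoder applied to per-frame classifier outputs. It is an illustration only, not the extended algorithm used in PrISM-Tracker: it uses step-to-step transition probabilities but omits the step-duration information, and the function name `viterbi_smooth`, the toy probabilities, and the two-step task are all assumptions made for this example.

```python
import math

def viterbi_smooth(frame_probs, transition, initial):
    """Most likely step sequence given noisy per-frame step probabilities.

    frame_probs: list of frames, each a list of probabilities over steps.
    transition:  transition[i][j] = P(step j at next frame | step i now).
    initial:     prior probability of each step at the first frame.
    """
    eps = 1e-12  # keeps log() finite for zero probabilities
    n_steps = len(initial)
    log = lambda p: math.log(p + eps)

    # Best log-score of any path ending in each step at the first frame.
    score = [log(initial[s]) + log(frame_probs[0][s]) for s in range(n_steps)]
    backptrs = []
    for probs in frame_probs[1:]:
        new_score, ptr = [], []
        for j in range(n_steps):
            # Best predecessor step i for landing in step j at this frame.
            best_i = max(range(n_steps),
                         key=lambda i: score[i] + log(transition[i][j]))
            ptr.append(best_i)
            new_score.append(score[best_i] + log(transition[best_i][j]) + log(probs[j]))
        score = new_score
        backptrs.append(ptr)

    # Trace back from the best final step.
    state = max(range(n_steps), key=lambda s: score[s])
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    return path[::-1]

# Toy two-step task: step 0 is followed by step 1, and users do not go back.
frames = [[0.8, 0.2], [0.4, 0.6], [0.7, 0.3], [0.3, 0.7], [0.2, 0.8]]
transition = [[0.9, 0.1], [0.0, 1.0]]
print(viterbi_smooth(frames, transition, initial=[1.0, 0.0]))
```

Taking the most likely step in each frame independently would flip back and forth between the two steps on this input, whereas the decoded path changes step only once because the transition matrix makes returning to step 0 implausible.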

Responding to ambiguous user queries

Voice assistants (like Siri and Amazon Alexa), capable of answering user queries during various physical tasks, have shown promise in guiding users through complex procedures. However, users often find it challenging to articulate their queries precisely, especially when unfamiliar with the specific vocabulary. Our PrISM-Q&A (IMWUT’24) [4] can resolve such issues with context derived from PrISM-Tracker.

When a question is posed, the sensed contextual information is supplied to a Large Language Model (LLM) as part of the prompt used to generate a response. This works even for inherently vague questions like “What should I do next with this?” and “Did I miss any step?” In our studies across multiple tasks (cooking, latte-making, and skin care), the assistant answered questions more accurately than existing voice assistants, and participants preferred the experience.
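As a rough illustration of how sensed context might be folded into a prompt, the sketch below formats the tracker's most likely steps alongside the user's question. The prompt wording, the `build_prompt` helper, and the `top_k` parameter are assumptions for this example, not the format used in PrISM-Q&A, and the call to an actual LLM is deliberately left out.

```python
def build_prompt(question, steps, step_probs, top_k=2):
    """Compose an LLM prompt that includes the sensed step context.

    steps:      ordered step names for the task.
    step_probs: tracker's probability that the user is currently on each step.
    top_k:      how many of the most likely steps to expose to the model.
    """
    ranked = sorted(zip(steps, step_probs), key=lambda pair: pair[1], reverse=True)
    context = "\n".join(f"- {name} (confidence {prob:.0%})" for name, prob in ranked[:top_k])
    procedure = "\n".join(f"{i}. {name}" for i, name in enumerate(steps, start=1))
    return (
        "You are assisting a user who is performing a physical task.\n"
        f"Procedure steps:\n{procedure}\n"
        f"Steps the user is most likely performing right now:\n{context}\n"
        f"User question: {question}\n"
        "Answer briefly, and state which step you assume the user is on."
    )

steps = ["wash hands", "cut vegetables", "boil water"]
print(build_prompt("What should I do next with this?", steps, [0.1, 0.7, 0.2]))
```

Passing more than one candidate step, with its confidence, gives the model room to hedge when the tracker is unsure.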

Because PrISM-Tracker can make mistakes, the output of PrISM-Q&A may also be incorrect. Thus, whenever the assistant relies on context information, it first states its current understanding of that context in the response to avoid confusing the user, for instance, “If you are washing your hands, then the next step is cutting vegetables.” This way, users can spot a tracking error and quickly correct it interactively to get the desired answer.
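The response pattern described above can be sketched in a few lines. The `answer_next_step` helper and the step list are hypothetical and only mirror the example sentence; the real assistant generates its wording with an LLM.

```python
def answer_next_step(steps, assumed_index):
    """State the assumed current step before answering, so errors are easy to spot."""
    current = steps[assumed_index]
    if assumed_index + 1 >= len(steps):
        return f"If you are {current}, then you are on the last step."
    return f"If you are {current}, then the next step is {steps[assumed_index + 1]}."

steps = ["washing your hands", "cutting vegetables", "boiling water"]

# The tracker believes the user is on step 0.
print(answer_next_step(steps, assumed_index=0))

# The user replies that they are already cutting vegetables, so the
# assistant answers again from the corrected step.
print(answer_next_step(steps, assumed_index=steps.index("cutting vegetables")))
```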

Intervening with users proactively to prevent errors

Next, we extended the assistant’s capability by incorporating proactive intervention to prevent errors. The technical challenges include noise in the sensing data and uncertainty in user behavior, especially since users are free to vary the order in which they complete steps. To address these challenges, PrISM-Observer (UIST’24) [5] employs a stochastic model that accounts for these uncertainties and determines, in real time, when to deliver a reminder.

Crucially, the assistant does not impose a rigid, predefined step-by-step sequence; instead, it monitors user behavior and intervenes proactively when necessary. This approach balances user autonomy and proactive guidance, enabling individuals to perform essential tasks safely and accurately.
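One simple way to reason about reminder timing under uncertainty is to ask how likely the user is to reach a critical step within the next few time ticks, given the tracker's current belief. The sketch below does this with a small Markov chain. It is not the stochastic model of PrISM-Observer; the function names, the per-tick transition matrix, the horizon, and the threshold are all assumptions chosen for illustration.

```python
def prob_reach_within(belief, transition, target, horizon):
    """Probability that the user enters `target` within `horizon` ticks.

    belief:     tracker's probability of the user being on each step now.
    transition: transition[i][j] = P(step j at next tick | step i now).
    """
    n = len(belief)
    dist = list(belief)
    reached = dist[target]   # mass already on the target step
    dist[target] = 0.0       # treat the target as absorbing
    for _ in range(horizon):
        nxt = [0.0] * n
        for i, mass in enumerate(dist):
            for j in range(n):
                nxt[j] += mass * transition[i][j]
        reached += nxt[target]
        nxt[target] = 0.0
        dist = nxt
    return reached

def should_remind(belief, transition, target, horizon=2, threshold=0.5):
    """Remind once the target step is likely to start within the horizon."""
    return prob_reach_within(belief, transition, target, horizon) >= threshold

# Toy three-step task; step 2 is the one we want to remind the user about.
transition = [[0.5, 0.5, 0.0],
              [0.0, 0.5, 0.5],
              [0.0, 0.0, 1.0]]
print(should_remind([1.0, 0.0, 0.0], transition, target=2))  # user still on step 0
print(should_remind([0.0, 1.0, 0.0], transition, target=2))  # user on step 1
```

Because the decision depends on a belief over steps rather than a single tracked step, the same code works when the tracker is uncertain, for example with a belief of `[0.5, 0.5, 0.0]`.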

Future directions

Our assistant system has just been rolled out, and plenty of future work is still on the horizon.

Minimizing the data collection effort

To train the underlying human activity recognition model on the smartwatch and build a transition graph, we currently need to record 10 to 20 sessions of each task, each annotated with step labels. Employing a zero-shot multimodal activity recognition model and refining step granularity will be essential for scaling the assistant to a wide variety of daily tasks.
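To show what annotated sessions provide, here is a minimal sketch that estimates a transition graph and average step durations from labeled sessions. The input format (a list of `(step_label, duration_seconds)` pairs per session), the `build_transition_graph` name, and the toy data are assumptions for this example rather than the project's actual data format.

```python
from collections import Counter, defaultdict

def build_transition_graph(sessions):
    """Estimate transition probabilities and mean step durations.

    sessions: list of sessions, each an ordered list of
              (step_label, duration_seconds) pairs.
    """
    counts = defaultdict(Counter)
    durations = defaultdict(list)
    for session in sessions:
        for step, seconds in session:
            durations[step].append(seconds)
        # Count each observed step-to-step transition.
        for (prev, _), (nxt, _) in zip(session, session[1:]):
            counts[prev][nxt] += 1

    transitions = {
        prev: {nxt: c / sum(nexts.values()) for nxt, c in nexts.items()}
        for prev, nexts in counts.items()
    }
    mean_duration = {step: sum(v) / len(v) for step, v in durations.items()}
    return transitions, mean_duration

# Two toy sessions in which the user varies the order of the last two steps.
sessions = [
    [("wash", 20), ("chop", 60), ("boil", 300)],
    [("wash", 30), ("boil", 280), ("chop", 50)],
]
transitions, mean_duration = build_transition_graph(sessions)
print(transitions["wash"])
print(mean_duration["wash"])
```

With only a handful of sessions these estimates are coarse, which is one reason the number of recorded sessions matters.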

Co-adaptation of the user and AI assistant

As future work, we’re excited to deploy our assistants in healthcare settings to support everyday care for post-operative skin cancer patients and individuals with dementia.

Mackay [6] introduced the idea of a human-computer partnership, where humans and intelligent agents collaborate to outperform either working alone. Relatedly, reciprocal co-adaptation [7] describes a process in which the user and the system each adapt to and affect the other’s behavior to achieve certain goals. Inspired by these ideas, we’re actively exploring ways to fine-tune our assistant through interactions after deployment. We expect this to help the assistant improve its context understanding and, drawing on mixed-initiative interaction design [8], find a comfortable balance of control between user and assistant.

Conclusion

There are many open questions when it comes to perfecting assistants for physical tasks. Understanding user context accurately during these tasks is particularly challenging due to factors like sensor noise. Through our PrISM project, we aim to overcome these challenges by designing interventions and developing human-AI collaboration strategies. Our goal is to create helpful and reliable interactions, even in the face of imperfect sensing.

Our code and datasets are available on GitHub. We are actively working in this exciting research field. If you are interested, please contact Riku Arakawa (HCII Ph.D. student).

Acknowledgments

The author thanks every collaborator in the project. The development of the PrISM assistant for health applications is in collaboration with University Hospitals of Cleveland Department of Dermatology and Fraunhofer Portugal AICOS.

References

[1] Mollyn, V., Ahuja, K., Verma, D., Harrison, C., & Goel, M. (2022). SAMoSA: Sensing activities with motion and subsampled audio. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 6(3), 1-19.

[2] Arakawa, R., Yakura, H., Mollyn, V., Nie, S., Russell, E., DeMeo, D. P., … & Goel, M. (2023). PrISM-Tracker: A framework for multimodal procedure tracking using wearable sensors and state transition information with user-driven handling of errors and uncertainty. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 6(4), 1-27.

[3] Forney, G. D. (1973). The Viterbi algorithm. Proceedings of the IEEE, 61(3), 268-278.

[4] Arakawa, R., Lehman, J. F., & Goel, M. (2024). PrISM-Q&A: Step-aware voice assistant on a smartwatch enabled by multimodal procedure tracking and large language models. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 8(4), 1-26.

[5] Arakawa, R., Yakura, H., & Goel, M. (2024, October). PrISM-Observer: Intervention agent to help users perform everyday procedures sensed using a smartwatch. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology (pp. 1-16).

[6] Mackay, W. E. (2023, November). Creating human-computer partnerships. In International Conference on Computer-Human Interaction Research and Applications (pp. 3-17). Cham: Springer Nature Switzerland.

[7] Beaudouin-Lafon, M., Bødker, S., & Mackay, W. E. (2021). Generative theories of interaction. ACM Transactions on Computer-Human Interaction (TOCHI), 28(6), 1-54.

[8] Allen, J. E., Guinn, C. I., & Horvitz, E. (1999). Mixed-initiative interaction. IEEE Intelligent Systems and their Applications, 14(5), 14-23.

This article was initially published on the ML@CMU blog and appears here with the author’s permission.
