AIhub.org

Microsoft cuts data centre plans and hikes prices in push to make users carry AI costs

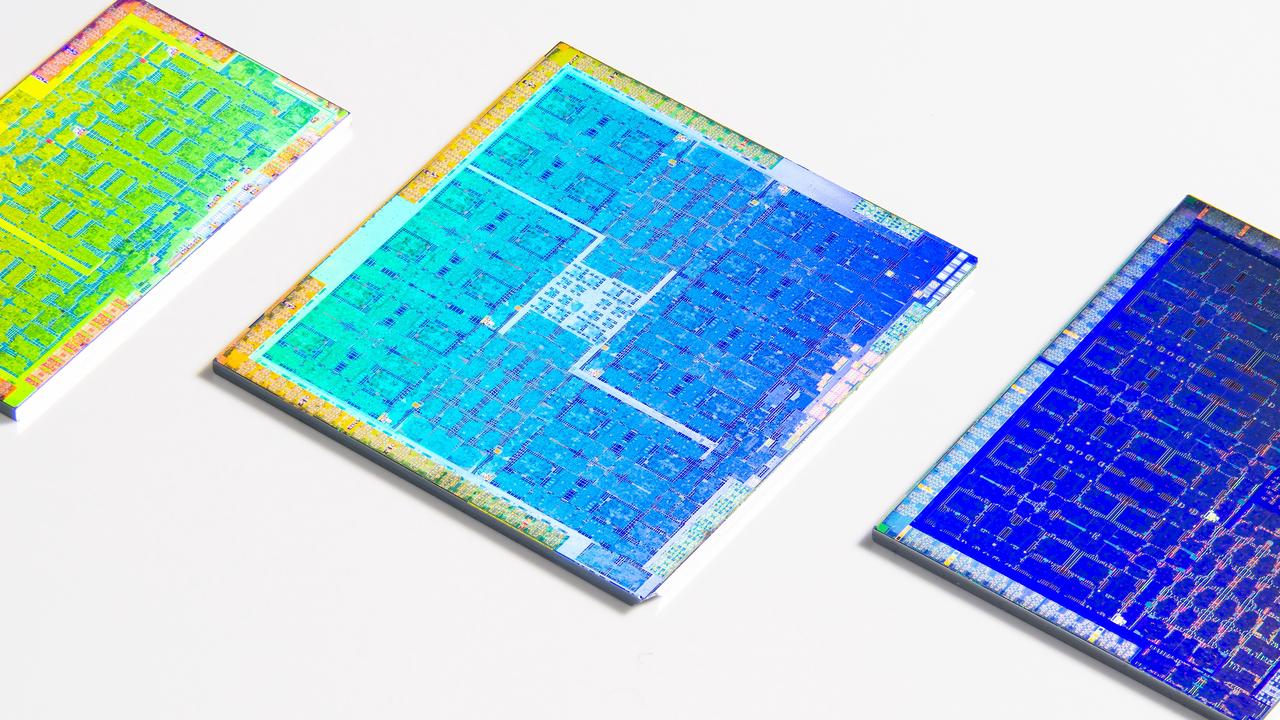

Fritzchens Fritz / Better Images of AI / GPU shot etched 2 / Licenced by CC-BY 4.0

By Kevin Witzenberger, Queensland University of Technology and Michael Richardson, UNSW Sydney

After a year of shoehorning generative AI into its flagship products, Microsoft is trying to recoup the costs by raising prices, putting ads in products, and cancelling data centre leases. Google is making similar moves, adding unavoidable AI features to its Workspace service while increasing prices.

Is the tide finally turning on investments into generative AI? The situation is not quite so simple. Tech companies are fully committed to the new technology – but are struggling to find ways to make people pay for it.

Shifting costs

Last month, Microsoft unceremoniously pulled back on some planned data centre leases. The move came after the company increased subscription prices for its flagship 365 software by up to 45%, and quietly released an ad-supported version of some products.

The tech giant’s CEO, Satya Nadella, also recently suggested AI has so far not produced much value.

Microsoft’s actions may seem odd in the current wave of AI hype, coming amid splashy announcements such as OpenAI’s US$500 billion Stargate data centre project.

But if we look closely, nothing in Microsoft’s decisions indicates a retreat from AI itself. Rather, we are seeing a change in strategy to make AI profitable by shifting the cost in non-obvious ways onto consumers.

The cost of generative AI

Generative AI is expensive. OpenAI, the market leader with a claimed 400 million monthly active users, is burning money.

Last year, OpenAI brought in US$3.7 billion in revenue – but spent almost US$9 billion, for a net loss of around US$5 billion.

Microsoft is OpenAI’s biggest investor and currently provides the company with cloud computing services, so OpenAI’s spending also costs Microsoft.

What makes generative AI so expensive? Human labour aside, two costs are associated with AI models: training (building the model) and inference (using the model).

While training is an (often large) up-front expense, the costs of inference grow with the user base. And the bigger the model, the more it costs to run.
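This cost structure can be sketched in a few lines of Python. The sketch is only illustrative: the function names and parameters are hypothetical, and none of the inputs come from OpenAI's or Microsoft's accounts. It treats training as a fixed cost and inference as a cost that scales with the number of queries.

```python
def total_cost(training_cost, cost_per_query, queries):
    """Up-front training cost plus inference cost that grows with usage."""
    return training_cost + cost_per_query * queries


def break_even_queries(training_cost, cost_per_query, revenue_per_query):
    """Queries needed before revenue covers both costs, or None if it never does."""
    margin = revenue_per_query - cost_per_query
    if margin <= 0:
        # Each query costs more than it earns, so growth only deepens the loss.
        return None
    return training_cost / margin
```

Under these assumptions, a service that earns less per query than the query costs to run never breaks even, no matter how many users it gains.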

Smaller, cheaper alternatives

A single query on OpenAI’s most advanced models can cost up to US$1,000 in compute power alone. In January, OpenAI CEO Sam Altman said even the company’s US$200 per month subscription is not profitable. This signals the company is losing money not only through use of its free models, but also through its paid subscriptions.

Both training and inference typically take place in data centres. Costs are high because the chips needed for both are expensive, and so too are electricity, cooling, and the depreciation of hardware.

To date, much AI progress has been achieved by using more of everything. OpenAI describes its latest upgrade as a “giant, expensive model”. However, there are now plenty of signs this scale-at-all-costs approach might not even be necessary.

Chinese company DeepSeek made waves earlier this year when it revealed it had built models comparable to OpenAI’s flagship products for a tiny fraction of the training cost. Likewise, researchers from Seattle’s Allen Institute for AI (Ai2) and Stanford University claim to have trained a model for as little as US$50.

In short, AI systems developed and delivered by tech giants might not be profitable. The costs of building and running data centres are a big reason why.

What is Microsoft doing?

Having sunk billions into generative AI, Microsoft is trying to find the business model that will make the technology profitable.

Over the past year, the tech giant has integrated the Copilot generative AI chatbot into its products geared towards consumers and businesses.

It is no longer possible to purchase any Microsoft 365 subscription without Copilot. As a result, subscribers are seeing significant price hikes.

As we have seen, running generative AI models in data centres is expensive. So Microsoft is likely seeking ways to do more of the work on users’ own devices – where the user pays for the hardware and its running costs.

A strong clue for this strategy is a small button Microsoft began to put on its devices last year. In the precious real estate of the QWERTY keyboard, Microsoft dedicated a key to Copilot on its PCs and laptops capable of processing AI on the device.

Apple is pursuing a similar strategy. The iPhone manufacturer is not offering most of its AI services in the cloud. Instead, only new devices offer AI capabilities, with on-device processing marketed as a privacy feature that prevents your data travelling elsewhere.

Pushing costs to the edge

There are benefits to the push to do the work of generative AI inference on the computing devices in our pockets, on our desks, or even on smart watches on our wrists (so-called “edge computing”, because it occurs at the “edge” of the network).

It can reduce the energy, resources and waste of data centres, lowering generative AI’s carbon, heat and water footprint. It could also reduce bandwidth demands and increase user privacy.

But there are downsides too. Edge computing shifts computation costs to consumers, driving demand for new devices despite economic and environmental concerns that discourage frequent upgrades. This could intensify with newer, bigger generative AI models.

And there are more problems. Distributed e-waste makes recycling much harder. What’s more, the playing field for users won’t be level if a device dictates how good your AI can be, particularly in educational settings.

And while edge computing may seem more “decentralised”, it may also lead to hardware monopolies. If only a handful of companies control this transition, decentralisation may not be as open as it appears.

As AI infrastructure costs rise and model development evolves, shifting the costs to consumers becomes an appealing strategy for AI companies. While big enterprises such as government departments and universities may manage these costs, many small businesses and individual consumers may struggle.

Kevin Witzenberger, Research Fellow, GenAI Lab, Queensland University of Technology and Michael Richardson, Associate Professor of Media, UNSW Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.