ΑΙhub.org

#ICML2025 outstanding position paper: Interview with Jaeho Kim on addressing the problems with conference reviewing

At this year’s International Conference on Machine Learning (ICML2025), Jaeho Kim, Yunseok Lee and Seulki Lee won an outstanding position paper award for their work “Position: The AI Conference Peer Review Crisis Demands Author Feedback and Reviewer Rewards”. We hear from Jaeho about the problems they were trying to address, and about their proposed author feedback mechanism and reviewer reward system.

Could you say something about the problem that you address in your position paper?

Our position paper addresses the problems plaguing current AI conference peer review systems, while also raising questions about the future direction of peer review.

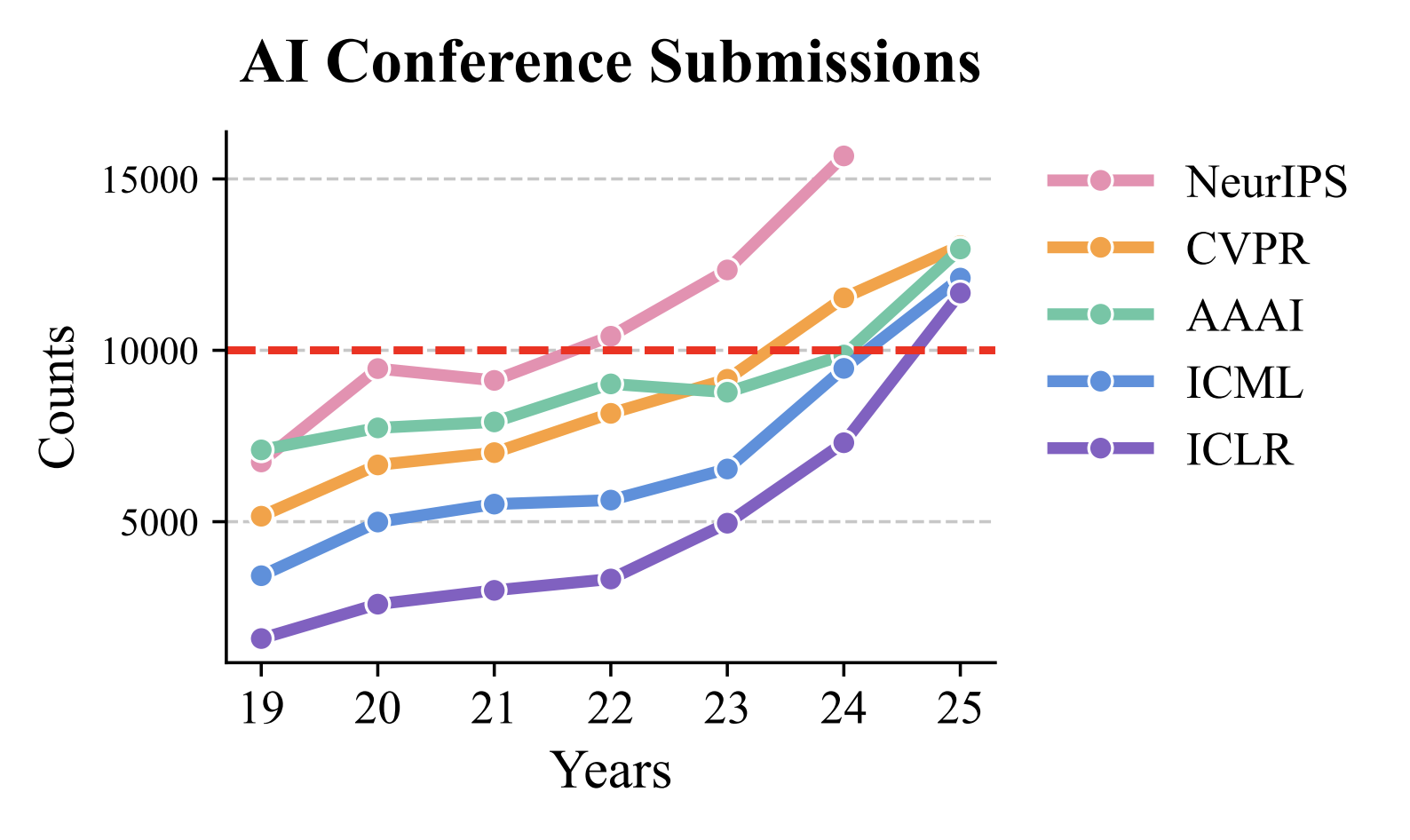

The most pressing problem with the current peer review system in AI conferences is the exponential growth in paper submissions driven by increasing interest in AI. To put numbers on this, NeurIPS received over 30,000 submissions this year, while ICLR saw a 59.8% increase in submissions in just one year. This huge increase has created a fundamental mismatch: while paper submissions grow exponentially, the pool of qualified reviewers has not kept pace.

Submissions to some of the major AI conferences over the past few years.

This imbalance has severe consequences. The majority of papers are no longer receiving adequate review quality, undermining peer review’s essential function as a gatekeeper of scientific knowledge. When the review process fails, inappropriate papers and flawed research can slip through, potentially polluting the scientific record.

Considering AI’s profound societal impact, this breakdown in quality control poses risks that extend far beyond academia. Poor research that enters the scientific discourse can mislead future work, influence policy decisions, and ultimately hinder genuine knowledge advancement. Our position paper focuses on this critical problem and proposes ways to enhance review quality, and thereby improve the dissemination of knowledge.

What do you argue for in the position paper?

Our position paper proposes two major changes to tackle the current peer review crisis: an author feedback mechanism and a reviewer reward system.

First, the author feedback system enables authors to formally evaluate the quality of reviews they receive. This system allows authors to assess reviewers’ comprehension of their work, identify potential signs of LLM-generated content, and establish basic safeguards against unfair, biased, or superficial reviews. Importantly, this isn’t about penalizing reviewers, but rather creating minimal accountability to protect authors from the small minority of reviewers who may not meet professional standards.

Second, our reviewer incentive system provides both immediate and long-term professional value for quality reviewing. For short-term motivation, author evaluation scores determine eligibility for digital badges (such as “Top 10% Reviewer” recognition) that can be displayed on academic profiles like OpenReview and Google Scholar. For long-term career impact, we propose novel metrics like a “reviewer impact score” – essentially an h-index calculated from the subsequent citations of papers a reviewer has evaluated. This treats reviewers as contributors to the papers they help improve and validates their role in advancing scientific knowledge.
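To make the “reviewer impact score” concrete, here is a minimal Python sketch of an h-index computed over the citation counts of the papers a reviewer has evaluated. The function name and the plain list-of-integers input are assumptions made for illustration; the interview does not specify how the score would actually be computed or where citation data would come from.

```python
def reviewer_impact_score(citation_counts):
    """Return the largest h such that at least h reviewed papers
    have at least h citations each (a standard h-index).

    `citation_counts` is a hypothetical input: one citation count
    per paper the reviewer evaluated.
    """
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h
```

For example, a reviewer whose reviewed papers later gathered 10, 8, 5, 4 and 3 citations would have a score of 4 under this sketch, since four of the papers have at least four citations each.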

Could you tell us more about your proposal for this new two-way peer review method?

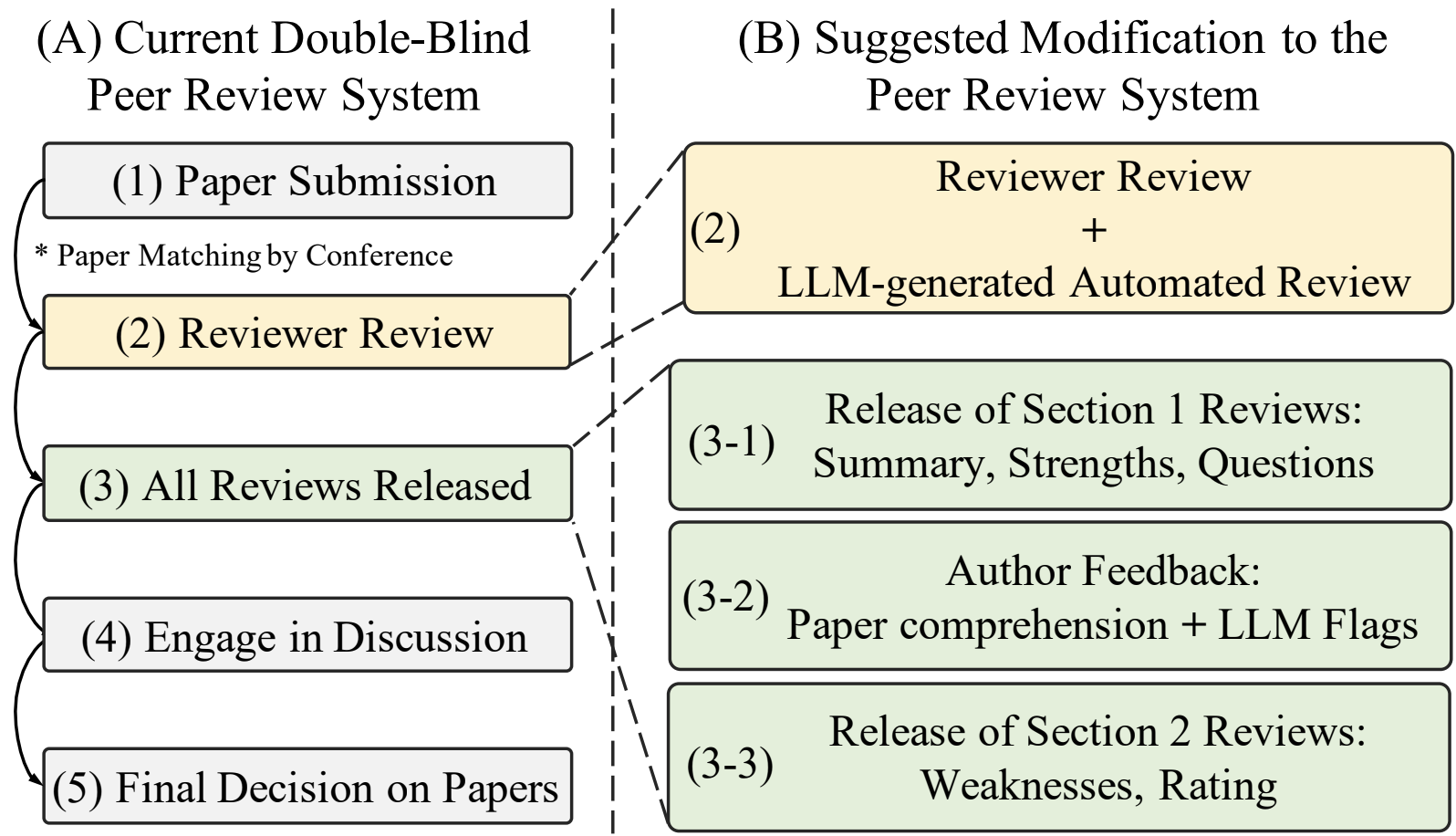

Our proposed two-way peer review system makes one key change to the current process: we split review release into two phases.

The authors’ proposed modification to the peer-review system.

Currently, authors submit papers, reviewers write complete reviews, and all reviews are released at once. In our system, authors first receive only the neutral sections – the summary, strengths, and questions about their paper. Authors then provide feedback on whether reviewers properly understood their work. Only after this feedback do we release the second part containing weaknesses and ratings.
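The two-phase release described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Review` class, its field names, and the integer `author_feedback` score are hypothetical and do not come from the paper or from any conference platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    # Neutral sections, released to authors in phase one.
    summary: str
    strengths: str
    questions: str
    # Critical sections, withheld until the authors give feedback.
    weaknesses: str
    rating: int
    # Hypothetical author feedback score; None until submitted.
    author_feedback: Optional[int] = None


def visible_to_authors(review):
    """Return the review sections the authors may currently see."""
    phase_one = {
        "summary": review.summary,
        "strengths": review.strengths,
        "questions": review.questions,
    }
    if review.author_feedback is None:
        return phase_one
    # Phase two: feedback has been recorded, so release the rest.
    return {**phase_one, "weaknesses": review.weaknesses, "rating": review.rating}
```

The point of the design is that the authors’ feedback is recorded before they have seen the weaknesses or the rating.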

This approach offers three main benefits. First, it’s practical – we don’t need to change existing timelines or review templates. The second phase can be released immediately after the authors give feedback. Second, it protects authors from irresponsible reviews since reviewers know their work will be evaluated. Third, since reviewers typically review multiple papers, we can track their feedback scores to help area chairs identify (ir)responsible reviewers.
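As a rough illustration of the third benefit, the sketch below averages author feedback scores per reviewer and lists reviewers whose average falls below a cutoff. Everything specific here is an assumption for the example: the `(reviewer_id, score)` input format, the function names, the 2.5 threshold, and the minimum of two reviews are not taken from the paper.

```python
from collections import defaultdict
from statistics import mean


def mean_feedback_by_reviewer(feedback):
    """Average the author feedback scores for each reviewer.

    `feedback` is a hypothetical list of (reviewer_id, score) pairs,
    one pair per reviewed paper.
    """
    scores = defaultdict(list)
    for reviewer_id, score in feedback:
        scores[reviewer_id].append(score)
    return {rid: mean(vals) for rid, vals in scores.items()}


def flag_low_scoring(feedback, threshold=2.5, min_reviews=2):
    """List reviewers with enough reviews and a mean score below threshold.

    Both `threshold` and `min_reviews` are illustrative values only.
    """
    counts = defaultdict(int)
    for reviewer_id, _ in feedback:
        counts[reviewer_id] += 1
    means = mean_feedback_by_reviewer(feedback)
    return sorted(
        rid for rid, avg in means.items()
        if counts[rid] >= min_reviews and avg < threshold
    )
```

Requiring a minimum number of reviews before flagging anyone is one way to avoid judging a reviewer on a single, possibly unrepresentative, author response.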

The key insight is that authors know their own work best and can quickly spot when a reviewer hasn’t properly engaged with their paper.

Could you talk about the concrete reward system that you suggest in the paper?

We propose both short-term and long-term rewards to address reviewer motivation, which tends to decline over time even when reviewers start out enthusiastic.

Short-term: Digital badges displayed on reviewers’ academic profiles, awarded based on author feedback scores. The goal is to make reviewer contributions more visible. While some conferences list top reviewers on their websites, these lists are hard to find. Our badges would be prominently displayed on profiles and could even be printed on conference name tags.

Example of a badge that could appear on profiles.

Long-term: Numerical metrics to quantify reviewer impact at AI conferences. We suggest tracking measures like an h-index for reviewed papers. These metrics could be included in academic portfolios, similar to how we currently track publication impact.

The core idea is creating tangible career benefits for reviewers while establishing peer review as a professional academic service that rewards both authors and reviewers.

What do you think could be some of the pros and cons of implementing this system?

The benefits of our system are threefold. First, it is a very practical solution. Our approach doesn’t change current review schedules or review burdens, making it easy to incorporate into existing systems. Second, it encourages reviewers to act more responsibly, knowing their work will be evaluated. We emphasize that most reviewers already act professionally – however, even a small number of irresponsible reviewers can seriously damage the peer review system. Third, with sufficient scale, author feedback scores will make conferences more sustainable. Area chairs will have better information about reviewer quality, enabling them to make more informed decisions about paper acceptance.

However, there is strong potential for gaming by reviewers. Reviewers might optimize for rewards by giving overly positive reviews. Measures to counteract these problems are definitely needed. We are currently exploring solutions to address this issue.

Are there any concluding thoughts you’d like to add about the potential future of conferences and peer review?

One emerging trend we’ve observed is the increasing discussion of LLMs in peer review. While we believe current LLMs have several weaknesses (e.g., prompt injection, shallow reviews), we also think they will eventually surpass humans. When that happens, we will face a fundamental dilemma: if LLMs provide better reviews, why should humans be reviewing? The rapid rise of LLMs caught us unprepared and created chaos, and we cannot afford a repeat. We should start preparing for this question as soon as possible.

About Jaeho

Jaeho Kim is a Postdoctoral Researcher at Korea University with Professor Changhee Lee. He received his Ph.D. from UNIST under the supervision of Professor Seulki Lee. His main research focuses on time series learning, particularly developing foundation models that generate synthetic and human-guided time series data to reduce computational and data costs. He also contributes to improving the peer review process at major AI conferences, with his work recognized by the ICML 2025 Outstanding Position Paper Award.

Read the work in full

Position: The AI Conference Peer Review Crisis Demands Author Feedback and Reviewer Rewards, Jaeho Kim, Yunseok Lee, Seulki Lee.
