AIhub.org

An approach for automatically determining the possible actions in computer game states

Because video game software is very difficult to test thoroughly by hand, it is desirable to have AI agents that can automatically explore different game functionalities. A key requirement of such agents is a model of the player actions, which the agent uses both to determine the set of possible actions in different game states and to perform on the game the action selected by its policy. The game engines in common use today do not offer such a model of actions, so existing work either requires human effort to define the action model manually or imprecisely guesses the possible actions. In our work, we demonstrate that program analysis is an effective solution to this problem: we develop a state-of-the-art analysis of the user input handling logic in games that automatically models game actions as a discrete action space.

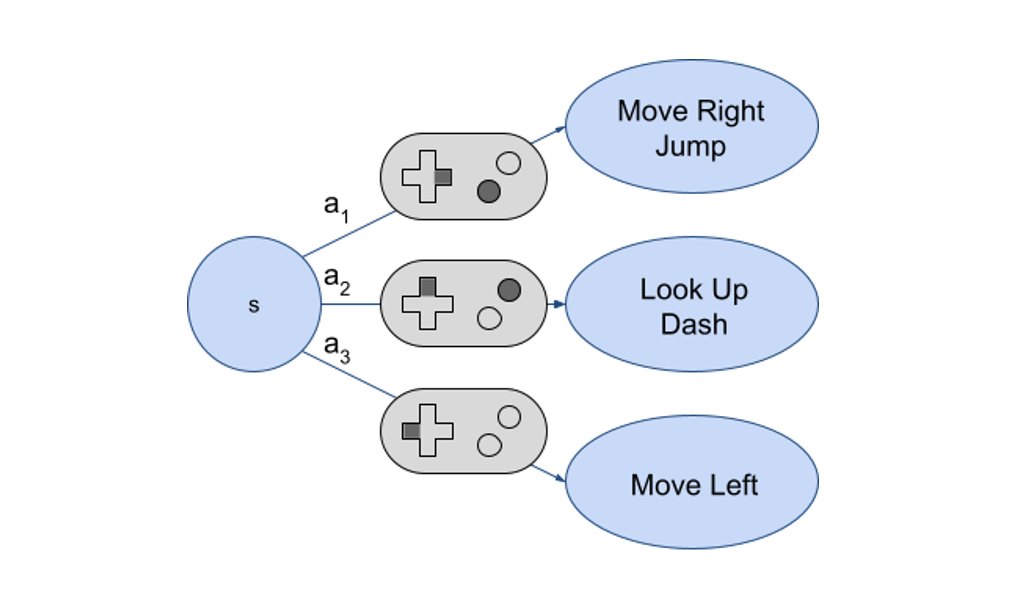

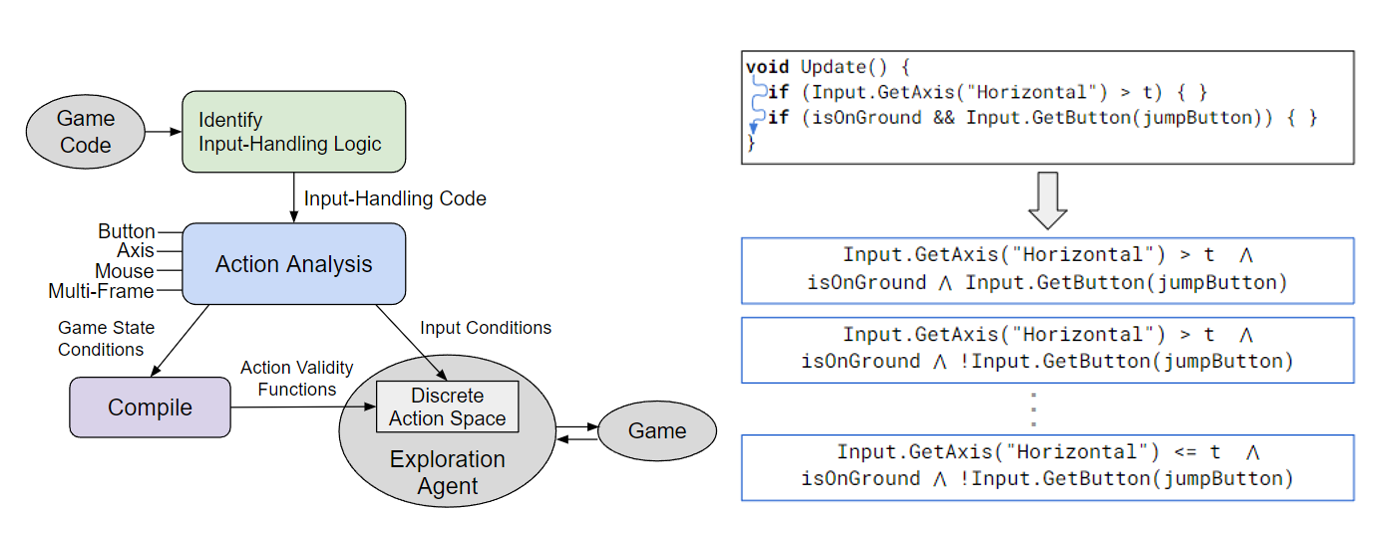

Our key insight is that the possible actions of a game correspond to the different execution paths that can be taken through the user input handling logic in the game's code. Our methodology first uses techniques such as dependency analysis and program slicing to identify the parts of the code responsible for handling user input. Next, a specialized symbolic execution that we designed evaluates the input handling code with symbolic representations of the user input and game state, yielding the set of conditions under which the different game actions occur. These conditions define a discrete action space for the game, in which each action corresponds to a distinct execution path. Finally, we propose efficient analyses for determining the set of valid actions as the agent plays the game, and the set of relevant device inputs to simulate on the game in order to perform a chosen action.
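The pipeline above can be illustrated with a deliberately tiny model. The sketch below is not the authors' implementation (their prototype analyzes Unity game code); it assumes a hypothetical input handler that has already been sliced down and written as a tree of `If` branches over input atoms (device keys) and state atoms (game-state flags). Following both outcomes of every branch, a minimal boolean-only form of symbolic execution, collects one path condition per execution path. An action is then valid in a concrete state when all state literals on its path hold, and the device inputs to simulate are the keys its path requires to be pressed. All names (`Atom`, `If`, `Effect`, `explore`, the keys and flags) are illustrative, and dropping paths that end in a no-op is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Iterator, Tuple, Union

# Hypothetical toy model of sliced input-handling code (not a real engine API).
@dataclass(frozen=True)
class Atom:
    kind: str  # "input" for a device key, "state" for a game-state flag
    name: str

@dataclass(frozen=True)
class If:
    cond: Atom
    then: "Node"
    orelse: "Node"

@dataclass(frozen=True)
class Effect:
    name: str  # game behavior reached at the end of an execution path

Node = Union[If, Effect]
PathCond = Tuple[Tuple[Atom, bool], ...]

def explore(node: Node, path: PathCond = ()) -> Iterator[Tuple[PathCond, str]]:
    """Follow both outcomes of every branch, accumulating a path condition per path."""
    if isinstance(node, Effect):
        yield path, node.name
        return
    for outcome, branch in ((True, node.then), (False, node.orelse)):
        if (node.cond, not outcome) in path:
            continue  # infeasible: this atom was already fixed to the opposite value
        yield from explore(branch, path + ((node.cond, outcome),))

def valid_actions(actions, state):
    """Indices of actions whose state literals all hold in the concrete game state."""
    return [i for i, (cond, _) in enumerate(actions)
            if all(state[a.name] == v for a, v in cond if a.kind == "state")]

def device_inputs(actions, i):
    """Keys to press for action i; input atoms required to be False stay released."""
    cond, _ = actions[i]
    return sorted({a.name for a, v in cond if a.kind == "input" and v})

# Illustrative handler: space jumps when grounded; E opens a door when nearby.
SPACE, E = Atom("input", "space"), Atom("input", "e")
ON_GROUND, NEAR_DOOR = Atom("state", "on_ground"), Atom("state", "near_door")
HANDLER = If(SPACE,
             If(ON_GROUND, Effect("jump"), Effect("idle")),
             If(E, If(NEAR_DOOR, Effect("open_door"), Effect("idle")), Effect("idle")))

# Discrete action space: one action per execution path that does something.
ACTIONS = [(cond, eff) for cond, eff in explore(HANDLER) if eff != "idle"]

state = {"on_ground": True, "near_door": False}
for i in valid_actions(ACTIONS, state):
    print(ACTIONS[i][1], device_inputs(ACTIONS, i))  # prints: jump ['space']
```

With the player on the ground and away from any door, only `jump` is valid in this toy handler, and performing it means pressing `space`; the `open_door` path becomes valid only once the `near_door` flag is true.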

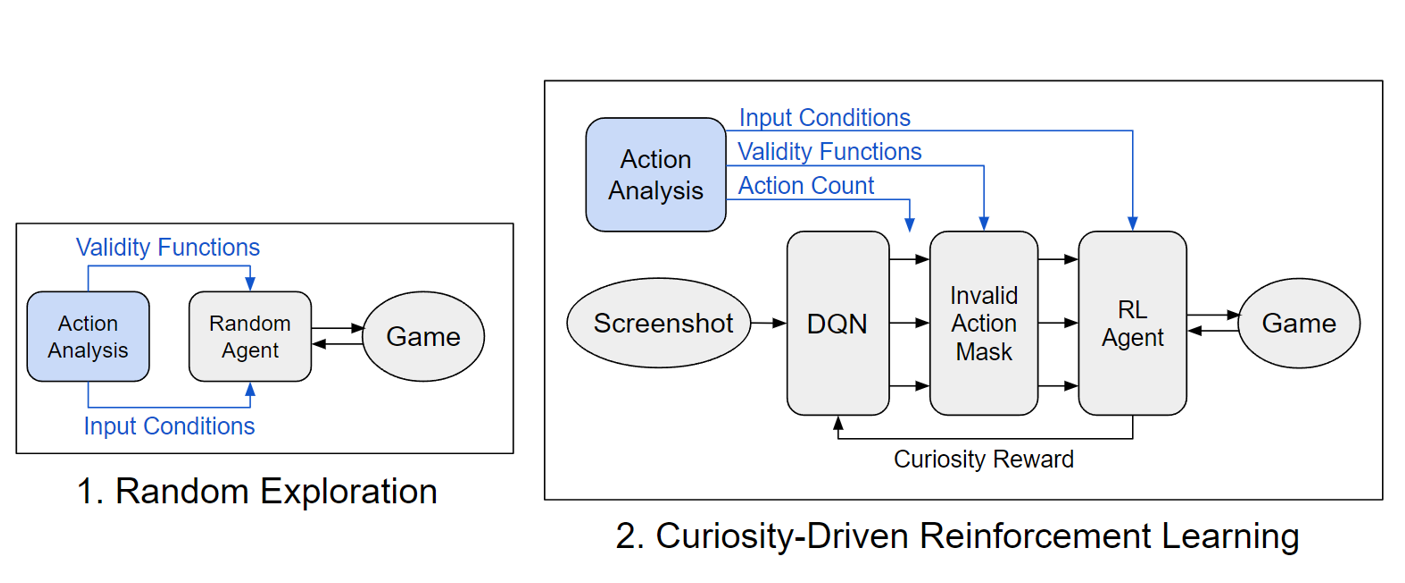

We implemented a prototype of our action analysis for the Unity game engine, then used it to automate the specification of actions for two popular exploration strategies: simple random exploration, in which agents select among the valid actions uniformly at random, and curiosity-driven reinforcement learning, in which agents learn over time to prioritize actions that are more likely to lead to new states. Our key finding was that, for the majority of games in our data set, agents using the actions determined by our analysis achieved exploration performance matching or exceeding that of the ideal case of manually annotated game actions, and on average they performed better. This highlights a key advantage of the automated analysis: because it exhaustively considers all possible execution paths, it often identifies more combinations of valid inputs than human annotation does.
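The random-exploration strategy amounts to a simple loop: at each step, query the valid actions for the current state and pick one uniformly at random. The sketch below assumes a hypothetical 4x4 grid game whose `valid_actions` function stands in for the output of the action analysis, and it uses the number of distinct states visited as a simple proxy for exploration progress. It is an illustration under those assumptions, not the paper's experimental setup.

```python
import random

# Hypothetical toy game: the state is the player's (x, y) cell on a 4x4 grid.
SIZE = 4
MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}

def valid_actions(state):
    """Stand-in for the analysis output: moves that keep the player on the grid."""
    x, y = state
    return [a for a, (dx, dy) in MOVES.items()
            if 0 <= x + dx < SIZE and 0 <= y + dy < SIZE]

def step(state, action):
    """Apply a valid move and return the next state."""
    dx, dy = MOVES[action]
    return (state[0] + dx, state[1] + dy)

def random_exploration(start, steps, seed=0):
    """Pick uniformly among the valid actions at each step; return the visited states."""
    rng = random.Random(seed)
    state, visited = start, {start}
    for _ in range(steps):
        state = step(state, rng.choice(valid_actions(state)))
        visited.add(state)
    return visited

print(len(random_exploration((0, 0), steps=100)), "of", SIZE * SIZE, "states visited")
```

In this toy setting the agent never spends a step on an input the game would ignore, because it only samples from the valid set; a curiosity-driven agent would replace the uniform `rng.choice` with a learned policy over the same action set.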

With the increasing importance of automated testing and analysis techniques for computer games, we believe our work provides a crucial component for deploying next-generation game testing tools based on intelligent agents. However, even with our automated approach to identifying valid actions and their relevant device inputs, exploring large game state spaces remains difficult. Developing novel exploration strategies, refinements, and heuristics for use with our analysis are important next steps toward better game testing agents.

Read the work in full

Automatically Defining Game Action Spaces for Exploration Using Program Analysis, Sasha Volokh, William G.J. Halfond, Proceedings of the Nineteenth AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE2023).

This work won the best student paper award at the Nineteenth AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE2023).
