AIhub.org

Interview with Zahra Ghorrati: developing frameworks for human activity recognition using wearable sensors

In this interview series, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. Zahra Ghorrati is developing frameworks for human activity recognition using wearable sensors. We caught up with Zahra to find out more about this research, the aspects she has found most interesting, and her advice for prospective PhD students.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I am pursuing my PhD at Purdue University, where my dissertation focuses on developing scalable and adaptive deep learning frameworks for human activity recognition (HAR) using wearable sensors. I was drawn to this topic because wearables have the potential to transform fields like healthcare, elderly care, and long-term activity tracking. Unlike video-based recognition, which can raise privacy concerns and requires fixed camera setups, wearables are portable, non-intrusive, and capable of continuous monitoring, making them ideal for capturing activity data in natural, real-world settings.

The central challenge my dissertation addresses is that wearable data is often noisy, inconsistent, and uncertain, with its quality varying according to sensor placement, movement artifacts, and device limitations. My goal is to design deep learning models that are not only computationally efficient and interpretable but also robust to the variability of real-world data. In doing so, I aim to ensure that wearable HAR systems are both practical and trustworthy for deployment outside controlled lab environments.

This research has been supported by the Polytechnic Summer Research Grant at Purdue. Beyond my dissertation work, I contribute to the research community as a reviewer for conferences such as CoDIT, CTDIAC, and IRC, and I have been invited to review for AAAI 2026. I am also involved in community building: I served as Local Organizer and Safety Chair for the 24th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2025), and I am continuing as Safety Chair for AAMAS 2026.

Could you give us an overview of the research you’ve carried out so far during your PhD?

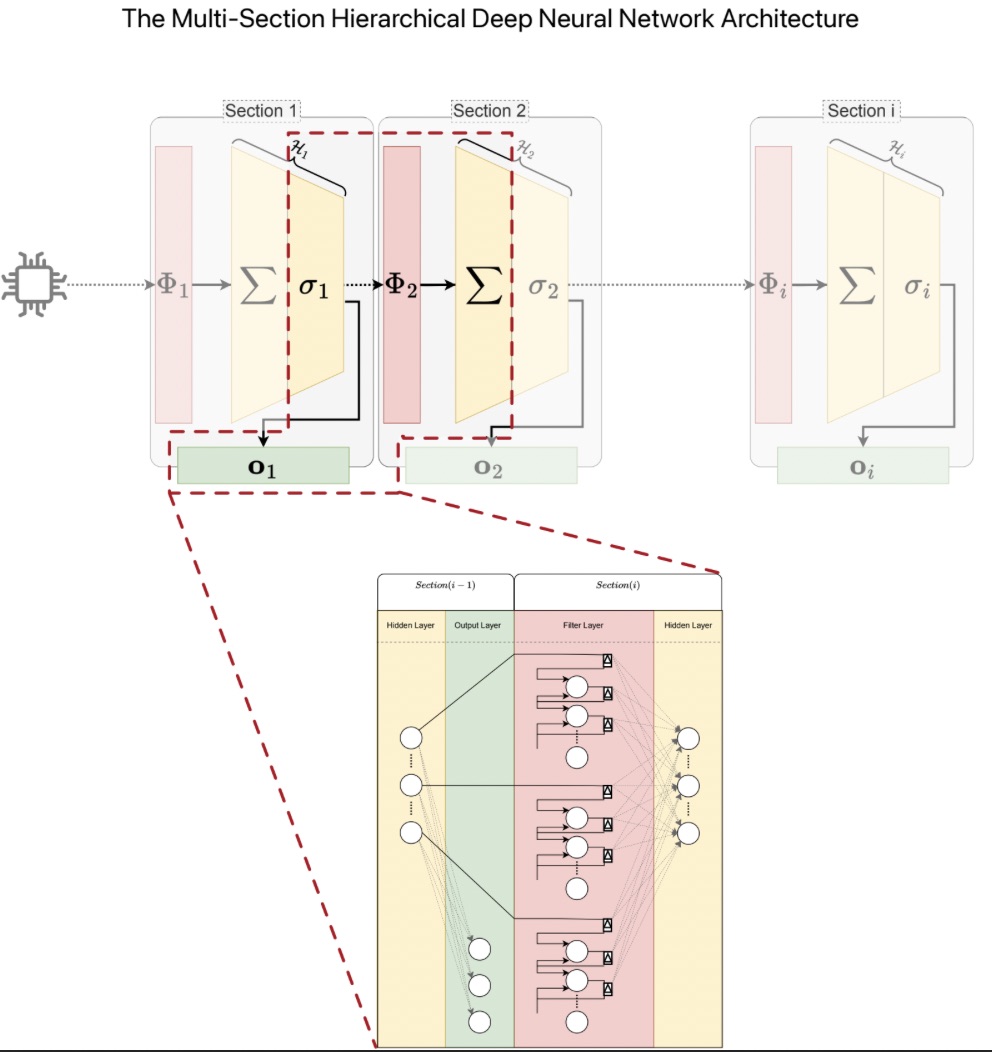

So far, my research has focused on developing a hierarchical fuzzy deep neural network that can adapt to diverse human activity recognition datasets. In my initial work, I explored a hierarchical recognition approach, where simpler activities are detected at earlier levels of the model and more complex activities are recognized at higher levels. To enhance both robustness and interpretability, I integrated fuzzy logic principles into deep learning, allowing the model to better handle uncertainty in real-world sensor data.

A key strength of this model is its simplicity and low computational cost, which makes it particularly well suited for real-time activity recognition on wearable devices. I have rigorously evaluated the framework on multiple benchmark datasets of multivariate time series and systematically compared its performance against state-of-the-art methods, where it has demonstrated both competitive accuracy and improved interpretability.

Is there an aspect of your research that has been particularly interesting?

Yes, what excites me most is discovering how different approaches can make human activity recognition both smarter and more practical. For instance, integrating fuzzy logic has been fascinating, because it allows the model to capture the natural uncertainty and variability of human movement. Instead of forcing rigid classifications, the system can reason in terms of degrees of confidence, making it more interpretable and closer to how humans actually think.
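To make the idea of "degrees of confidence" concrete, here is a minimal sketch of fuzzy membership in Python. It is an illustration only, not the model described in the interview: the function names, the choice of Gaussian membership functions, and the set centers and widths are all assumptions made for this example.

```python
import math


def gaussian_membership(x: float, center: float, width: float) -> float:
    """Return the degree (between 0 and 1) to which x belongs to a fuzzy set."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)


# Hypothetical fuzzy sets over acceleration magnitude (in g).
# The (center, width) values are illustrative, not taken from any dataset.
INTENSITY_SETS = {
    "still": (1.0, 0.05),
    "walking": (1.2, 0.10),
    "running": (1.8, 0.30),
}


def fuzzify(accel_magnitude: float) -> dict[str, float]:
    """Map one sensor reading to a membership degree for every fuzzy set."""
    return {
        label: gaussian_membership(accel_magnitude, center, width)
        for label, (center, width) in INTENSITY_SETS.items()
    }


# A reading of 1.15 g belongs mostly to "walking", but keeps small,
# non-zero degrees for the other sets instead of a hard yes/no label.
memberships = fuzzify(1.15)
best_label = max(memberships, key=memberships.get)
```

Rather than committing to a single class, the reading keeps a graded membership in every set, which is what lets a fuzzy system express uncertainty about borderline movements.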

I also find the hierarchical design of my model particularly interesting. Recognizing simple activities first, and then building toward more complex behaviors, mirrors the way humans often understand actions in layers. This structure not only makes the model efficient but also provides insights into how different activities relate to one another.
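The layered idea can be sketched in a few lines of Python. This is a toy example under stated assumptions, not Zahra's architecture: the two levels, the activity names, and the thresholds are hypothetical, and simple rules stand in for the learned layers of a real model.

```python
from statistics import pstdev


def simple_activity(window: list[float]) -> str:
    """Level 1: label one short window of sensor readings as a simple activity."""
    # Hypothetical rule: a signal that barely varies means the wearer is still.
    return "moving" if pstdev(window) > 0.1 else "still"


def complex_activity(simple_labels: list[str]) -> str:
    """Level 2: combine a sequence of simple labels into a complex behavior."""
    if not simple_labels:
        raise ValueError("at least one simple label is required")
    moving_share = simple_labels.count("moving") / len(simple_labels)
    # Hypothetical thresholds chosen only for illustration.
    if moving_share >= 0.8:
        return "sustained exercise"
    if moving_share <= 0.2:
        return "resting"
    return "intermittent activity"


def recognize(windows: list[list[float]]) -> tuple[list[str], str]:
    """Run both levels: simple activities first, then the complex behavior."""
    labels = [simple_activity(window) for window in windows]
    return labels, complex_activity(labels)


labels, behavior = recognize([
    [1.0, 1.0, 1.01],  # almost constant signal
    [0.5, 1.5, 0.6],   # strongly varying signal
    [1.0, 1.0, 1.0],   # constant signal
])
```

Because the second level only sees the outputs of the first, the intermediate labels remain available for inspection, which is one way a layered design can help with interpretability.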

Beyond methodology, what motivates me is the real-world potential. The fact that these models can run efficiently on wearables means they could eventually support personalized healthcare, elderly care, and long-term activity monitoring in people’s everyday lives. And since the techniques I’m developing apply broadly to time series data, their impact could extend well beyond HAR, into areas like medical diagnostics, IoT monitoring, or even audio recognition. That sense of both depth and versatility is what makes the research especially rewarding for me.

What are your plans for building on your research so far during the PhD – what aspects will you be investigating next?

Moving forward, I plan to further enhance the scalability and adaptability of my framework so that it can effectively handle large-scale datasets and support real-time applications. A major focus will be on improving both the computational efficiency and interpretability of the model, ensuring it is not only powerful but also practical for deployment in real-world scenarios.

While my current research has focused on human activity recognition, I am excited to broaden the scope to the wider domain of time series classification. I see great potential in applying my framework to areas such as sound classification, physiological signal analysis, and other time-dependent domains. This will allow me to demonstrate the generalizability and robustness of my approach across diverse applications where time-based data is critical.

In the longer term, my goal is to develop a unified, scalable model for time series analysis that balances adaptability, interpretability, and efficiency. I hope such a framework can serve as a foundation for advancing not only HAR but also a broad range of healthcare, environmental, and AI-driven applications that require real-time, data-driven decision-making.

What made you want to study AI, and in particular the area of wearables?

My interest in wearables began during my time in Paris, where I was first introduced to the potential of sensor-based monitoring in healthcare. I was immediately drawn to how discreet and non-invasive wearables are compared to video-based methods, especially for applications like elderly care and patient monitoring.

More broadly, I have always been fascinated by AI’s ability to interpret complex data and uncover meaningful patterns that can enhance human well-being. Wearables offered the perfect intersection of my interests, combining cutting-edge AI techniques with practical, real-world impact, which naturally led me to focus my research on this area.

What advice would you give to someone thinking of doing a PhD in the field?

A PhD in AI demands both technical expertise and resilience. My advice would be:

- Stay curious and adaptable, because research directions evolve quickly, and the ability to pivot or explore new ideas is invaluable.

- Investigate combining disciplines. AI benefits greatly from insights in fields like psychology, healthcare, and human-computer interaction.

- Most importantly, choose a problem you are truly passionate about. That passion will sustain you through the inevitable challenges and setbacks of the PhD journey.

Approaching your research with curiosity, openness, and genuine interest can make the PhD not just a challenge, but a deeply rewarding experience.

Could you tell us an interesting (non-AI related) fact about you?

Outside of research, I’m passionate about leadership and community building. As president of the Purdue Tango Club, I grew the group from just 2 students to over 40 active members, organized weekly classes, and hosted large events with internationally recognized instructors. More importantly, I focused on creating a welcoming community where students feel connected and supported. For me, tango is more than dance; it’s a way to bring people together, bridge cultures, and balance the intensity of research with creativity and joy.

I also apply these skills in academic leadership. For example, I serve as Local Organizer and Safety Chair for the AAMAS 2025 and 2026 conferences, which has given me hands-on experience managing events, coordinating teams, and creating inclusive spaces for researchers worldwide.

About Zahra

Zahra Ghorrati is a PhD candidate and teaching assistant at Purdue University, specializing in artificial intelligence and time series classification with applications in human activity recognition. She earned her undergraduate degree in Computer Software Engineering and her master’s degree in Artificial Intelligence. Her research focuses on developing scalable and interpretable fuzzy deep learning models for wearable sensor data. Her work has appeared at leading international conferences and in journals, including AAMAS, PAAMS, FUZZ-IEEE, IEEE Access, System and Applied Soft Computing. She has served as a reviewer for CoDIT, CTDIAC, and IRC, and has been invited to review for AAAI 2026. Zahra also contributes to community building as Local Organizer and Safety Chair for AAMAS 2025 and 2026.

tags: AAAI, AAAI Doctoral Consortium, AAAI2025, ACM SIGAI