AIhub.org

Designing value-aligned autonomous vehicles: from moral dilemmas to conflict-sensitive design

Autonomous systems increasingly face value-laden choices. This blog post introduces the idea of designing “conflict-sensitive” autonomous traffic agents that explicitly recognise, reason about, and act upon competing ethical, legal, and social values. We present the concept of Value-Aligned Operational Design Domains (VODDs) – a framework that embeds stakeholder value hierarchies and contextual handover rules into the design of autonomous decision-making.

When AI must choose between wrong and right

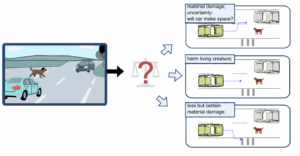

Imagine an autonomous car driving along a quiet suburban road when a dog suddenly runs out in front of it. The system must brake hard and decide, within a fraction of a second, whether to swerve into oncoming traffic (where an oncoming autonomous car might make space), to steer right and hit the roadside barrier, or to continue straight and injure the dog. The first two options risk only material damage; the last harms a living creature.

Each choice is justifiable and involves trade-offs between safety, property and ethical concerns.

However, today’s autonomous systems are not designed to explicitly take such value-laden conflicts into account. They are typically optimised for safety and efficiency – but not necessarily for social acceptance or ethical values.

Our research explores how to make these value tensions explicit and manageable throughout the design and operation of autonomous systems – a perspective we term conflict-sensitive design.

Why value alignment matters for traffic agents

Autonomous traffic agents (ATAs) – such as self-driving cars or delivery robots – are expected to obey traffic rules and ensure safety. However, human drivers do more than just follow rules: they constantly weigh up competing social and ethical values such as courtesy, environmental impact, and urgency.

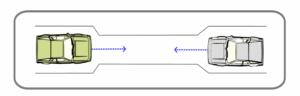

Figure 1: Two ATAs approach a narrowing road – each claiming the right of way.

If autonomous systems are to coexist with human road users, they too must learn to balance such priorities. An ATA that always prioritises efficiency may appear inconsiderate, while one that stops out of excessive caution whenever it is uncertain could itself become a traffic hazard.

This is the essence of value alignment: ensuring that ATAs act in ways consistent with the ethical, legal, and social norms of their operating context.

From moral dilemmas to conflict sensitivity

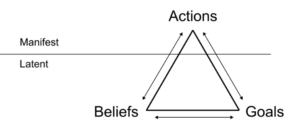

We consider a conflict to be a useful indicator of particularly challenging situations that an ATA must be able to handle. A conflict does not arise simply from incompatible goals; it arises when an agent believes that achieving its own goals may depend on the other agent compromising theirs. This interdependence between agents’ goals and beliefs creates situations in which cooperation, competition, and misconceptions can all play a role.

We build on a formal model from epistemic game theory, developed by Damm et al., that represents each agent’s goals, beliefs, and actions. This allows us to analyse both actual conflicts – where goals truly clash – and believed conflicts – where the tension stems from what one agent thinks about the other.
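The distinction between actual and believed conflicts can be illustrated with a deliberately simplified, set-based toy model. This is not the epistemic game-theoretic formalism of Damm et al.; it assumes that a goal is just the set of outcomes an agent accepts and that each agent holds a single belief about which outcomes the other accepts. The names `Agent`, `actual_conflict` and `believed_conflict` are hypothetical. Using the narrowing road of Figure 1, the sketch produces a believed conflict without an actual one, that is, a misconception.

```python
from dataclasses import dataclass


# Toy set-based model (hypothetical, NOT the formalism of Damm et al.):
# an outcome is a label, and a goal is the set of outcomes an agent accepts.
@dataclass(frozen=True)
class Agent:
    name: str
    goal: frozenset                # outcomes this agent accepts
    belief_about_other: frozenset  # outcomes it thinks the other agent accepts


def actual_conflict(a: Agent, b: Agent) -> bool:
    """Goals truly clash: no outcome is acceptable to both agents."""
    return not (a.goal & b.goal)


def believed_conflict(a: Agent) -> bool:
    """Agent `a` believes its goal can only be met if the other agent gives up its own."""
    return not (a.goal & a.belief_about_other)


# Narrowing-road example (cf. Figure 1): who passes first?
a = Agent("ATA-A", goal=frozenset({"A_first"}),
          belief_about_other=frozenset({"B_first"}))
b = Agent("ATA-B", goal=frozenset({"A_first", "B_first"}),
          belief_about_other=frozenset({"A_first"}))

print(actual_conflict(a, b))  # B would in fact accept letting A pass first
print(believed_conflict(a))   # A wrongly thinks B insists on going first
print(believed_conflict(b))
```

Here the goals are compatible, so there is no actual conflict, yet ATA-A perceives one because of its mistaken belief about ATA-B.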

Rather than avoiding such conflicts, we aim to make systems aware of them—to detect, reason about, and act upon value tensions in a structured way.

Figure 2: A conflict arises from the interplay between agents’ beliefs, incompatible goals, and resulting course of actions.

Value-Aligned Operational Design Domains (VODDs)

Current standards define Operational Design Domains (ODDs) – the conditions (such as road type, weather, or speed range) within which an autonomous vehicle is considered safe to operate. However, these ODDs describe functional boundaries, not ethical or social ones.

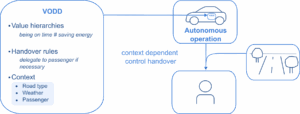

Our proposed Value-Aligned Operational Design Domains (VODDs) extend this concept. A detailed version of this approach is presented in Rakow et al. (2025), and a short version of this work will be published as part of the proceedings of EUMAS 2025.

A VODD specifies not only where and when a system can act autonomously, but also under what value priorities.

For example, when an ATA chauffeurs its passenger to an important meeting, saving fuel may take lower priority than arriving on time. In other situations, such as leisure trips or delivery orders, energy efficiency may be more important.
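To make this concrete, a VODD can be pictured as a data structure. The minimal sketch below assumes that a VODD is a classic ODD (road type, speed limit, weather) extended with a trip-purpose condition and a ranked list of values. All names (`Context`, `VODD`, `active_vodd`) and all concrete bounds are hypothetical and not taken from Rakow et al. (2025). A strict ranking is a deliberate simplification, since value priorities need not form a total order.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Context:
    """Hypothetical snapshot of the current driving situation."""
    road_type: str
    speed_kmh: float
    weather: str
    trip_purpose: str


@dataclass(frozen=True)
class VODD:
    """Illustrative VODD: classic ODD bounds plus context-specific value priorities."""
    name: str
    road_types: frozenset
    max_speed_kmh: float
    weather: frozenset
    trip_purposes: frozenset
    priorities: tuple  # values from highest to lowest priority (a simplification)

    def covers(self, ctx: Context) -> bool:
        # Functional ODD conditions: where and when the ATA may drive autonomously.
        within_odd = (ctx.road_type in self.road_types
                      and ctx.speed_kmh <= self.max_speed_kmh
                      and ctx.weather in self.weather)
        # Value-relevant context: decides which priorities apply.
        return within_odd and ctx.trip_purpose in self.trip_purposes


def active_vodd(vodds: Sequence[VODD], ctx: Context) -> Optional[VODD]:
    """Return the first VODD covering ctx, or None (control should be handed over)."""
    return next((v for v in vodds if v.covers(ctx)), None)


SUBURBAN = frozenset({"suburban"})
FAIR_WEATHER = frozenset({"clear", "rain"})
VODDS = [
    VODD("business", SUBURBAN, 50, FAIR_WEATHER, frozenset({"meeting"}),
         ("safety", "punctuality", "energy")),
    VODD("leisure", SUBURBAN, 50, FAIR_WEATHER, frozenset({"leisure", "delivery"}),
         ("safety", "energy", "punctuality")),
]

for ctx in [Context("suburban", 40, "clear", "meeting"),
            Context("suburban", 40, "rain", "leisure"),
            Context("suburban", 40, "snow", "meeting")]:
    v = active_vodd(VODDS, ctx)
    print(ctx.trip_purpose, ctx.weather, "->", v.priorities if v else "hand over")
```

Leaving every VODD (here, driving in snow) yields `None`, which the sketch treats as the point where control passes to a higher authority; a real system would need a much richer context model.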

The key idea is that value priorities are not universally fixed. Some values can be ordered – for example, safety might always take precedence over comfort – while others vary with context. In some contexts, punctuality may take precedence over saving energy; in others, the opposite holds; and in yet others, the two values are incomparable, with neither taking clear precedence. VODDs capture this flexibility by specifying where and when such orderings apply, and by allowing the ATA to make context-dependent trade-offs or to defer decisions to a higher authority, such as the passenger or a fleet management system.
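Mathematically, such a value hierarchy is a partial order: some pairs of values are ranked, others are not. The sketch below assumes that each context's hierarchy is given as a set of (higher, lower) pairs without cycles, closes it under transitivity, and defers to a higher authority whenever the two values at stake are incomparable. The context names, value names and the `ask_passenger` callback are illustrative assumptions, not part of the published VODD framework.

```python
from itertools import product
from typing import Callable, Optional


def transitive_closure(pairs: set) -> set:
    """Close a set of (higher, lower) pairs under transitivity (assumes no cycles)."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(closure), repeat=2):
            if b == c and (a, d) not in closure:
                closure.add((a, d))
                changed = True
    return closure


def prefer(order: set, v1: str, v2: str) -> Optional[str]:
    """Return the value with precedence, or None if the two are incomparable."""
    if (v1, v2) in order:
        return v1
    if (v2, v1) in order:
        return v2
    return None


def decide(order: set, v1: str, v2: str,
           ask_authority: Callable[[str, str], str]) -> tuple:
    """Resolve autonomously when the order decides; otherwise defer the choice."""
    winner = prefer(order, v1, v2)
    if winner is None:
        return ask_authority(v1, v2), "deferred"
    return winner, "autonomous"


def ask_passenger(v1: str, v2: str) -> str:
    """Hypothetical stand-in for a handover to the passenger or fleet management."""
    return v1


# Hypothetical context-dependent hierarchies; safety > comfort holds everywhere.
ORDERS = {
    "meeting": transitive_closure({("safety", "punctuality"),
                                   ("punctuality", "energy"),
                                   ("safety", "comfort")}),
    "leisure": transitive_closure({("safety", "energy"),
                                   ("energy", "punctuality"),
                                   ("safety", "comfort")}),
    "errand": transitive_closure({("safety", "punctuality"),
                                  ("safety", "energy"),
                                  ("safety", "comfort")}),
}

for trip, order in ORDERS.items():
    print(trip, decide(order, "punctuality", "energy", ask_passenger))
```

In the "errand" context neither punctuality nor energy is ranked above the other, so the decision is deferred rather than made autonomously.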

Figure 3: Value-aligned operational design domains (VODDs) link contextual boundaries with value hierarchies and control handover mechanisms. Over time, these boundaries can evolve as ATAs monitor and learn from conflicts encountered in real-world deployment.

Designing for conflict, not perfection

To operationalise value alignment, we integrate conflict scenarios directly into the design process. As part of requirements analysis, designers are encouraged to ask: Where might value conflicts arise, and which value hierarchies should guide the system’s behaviour?

In our approach, conflict scenarios serve as core design artefacts. They reveal where value-aligned decisions are required and guide developers in refining value hierarchies, system behaviour, and the boundaries of autonomy.
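One way to picture conflict scenarios as design artefacts is as a catalogue that is checked against the value hierarchies during requirements analysis. The following is a minimal, hypothetical sketch and is not taken from the paper: it assumes that each scenario can be reduced to a context and a pair of values in tension, and that hierarchies and handover rules are plain sets. The names `ConflictScenario`, `audit`, `HIERARCHIES` and `HANDOVER_RULES` are illustrative. The check flags every scenario that neither the hierarchy orders nor an explicit handover rule covers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConflictScenario:
    """A design-time conflict scenario: a context and two values in tension."""
    description: str
    context: str
    values_in_tension: tuple


# Hypothetical hierarchies per context, as (higher, lower) pairs that are
# assumed to be transitively closed already.
HIERARCHIES = {
    "meeting": {("safety", "punctuality"), ("safety", "energy"),
                ("punctuality", "energy")},
    "errand": {("safety", "punctuality"), ("safety", "energy")},
}

# Hypothetical explicit handover rules: (context, unordered pair of values).
HANDOVER_RULES = {("errand", frozenset({"energy", "punctuality"}))}


def audit(scenarios, hierarchies, handover_rules):
    """Return scenarios that neither the hierarchy nor a handover rule resolves."""
    gaps = []
    for s in scenarios:
        v1, v2 = s.values_in_tension
        order = hierarchies.get(s.context, set())
        ordered = (v1, v2) in order or (v2, v1) in order
        handed_over = (s.context, frozenset(s.values_in_tension)) in handover_rules
        if not (ordered or handed_over):
            gaps.append(s)
    return gaps


SCENARIOS = [
    ConflictScenario("Running late; the eco-route is slower",
                     "meeting", ("punctuality", "energy")),
    ConflictScenario("Narrowing road; oncoming ATA claims right of way",
                     "errand", ("punctuality", "courtesy")),
    ConflictScenario("Low battery on an errand",
                     "errand", ("energy", "punctuality")),
    ConflictScenario("Dog runs onto the road",
                     "meeting", ("animal welfare", "property")),
]

for gap in audit(SCENARIOS, HIERARCHIES, HANDOVER_RULES):
    print("Unresolved:", gap.description)
```

Each flagged scenario points designers to a decision: refine the hierarchy for that context, add a handover rule, or narrow the boundaries within which the ATA operates autonomously.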

Towards conflict-sensitive autonomy

Our work shifts the perspective from solving moral dilemmas after deployment to anticipating and structuring value-sensitive behaviour during design. By making conflicts visible and systematically integrating them into development, we aim to foster autonomous systems whose decisions are more transparent, context-aware, and aligned with shared human values.

Find out more

This paper was recently presented at EUMAS, the 22nd European Conference on Multi-Agent Systems:

Designing Value-Aligned Traffic Agents through Conflict Sensitivity, Astrid Rakow, Joe Collenette, Maike Schwammberger, Marija Slavkovik, Gleifer Vaz Alves.

Astrid Rakow is a researcher at the German Aerospace Center (DLR), Germany.

Marija Slavkovik is a professor at the University of Bergen, Norway.

Joe Collenette is a researcher at the University of Chester, United Kingdom.

Maike Schwammberger is a professor at Karlsruhe Institute of Technology (KIT), Germany.

Gleifer Vaz Alves is a professor at the Federal University of Technology – Paraná (Universidade Tecnológica Federal do Paraná), Brazil.