AIhub.org

Machine learning to scale up the quantum computer

By Dr Muhammad Usman and Professor Lloyd Hollenberg

Quantum computers are expected to offer tremendous computational power for complex problems – currently intractable even on supercomputers – in the areas of drug design, data science, astronomy and materials chemistry among others.

The high technological and strategic stakes mean major technology companies as well as ambitious start-ups and government-funded research centres are all in the race to build the world’s first universal quantum computer.

Building a quantum computer

In contrast to today’s classical computers, where information is encoded in bits (0 or 1), quantum computers process information stored in quantum bits (qubits). These are hosted by quantum mechanical objects like electrons, the negatively charged particles of an atom.

Quantum states can also be binary and can exist in one of two states, or effectively both at the same time – known as quantum superposition – offering an exponentially larger computational space with an increasing number of qubits.
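To make the scaling concrete, here is a minimal sketch in Python. It relies only on the standard fact that describing n qubits on a classical machine takes 2^n complex amplitudes. The function names and the assumption of 16 bytes per amplitude (two 64-bit floats) are illustrative choices, not something taken from the work described here.

```python
def state_vector_size(num_qubits: int) -> int:
    """Number of complex amplitudes needed to describe num_qubits qubits."""
    return 2 ** num_qubits


def memory_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Classical memory for the full state vector.

    Assumption: each amplitude is stored as two 64-bit floats (16 bytes).
    """
    return state_vector_size(num_qubits) * bytes_per_amplitude


for n in (1, 2, 10, 30, 50):
    gib = memory_bytes(n) / 2 ** 30
    print(f"{n:2d} qubits: {state_vector_size(n)} amplitudes, {gib:.3g} GiB")
```

Each added qubit doubles the number of amplitudes, so under this assumption 30 qubits already require 16 GiB just to write the state down.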

This unique data-crunching power is further boosted by entanglement, another magical property of quantum mechanics where the state of one qubit can dictate the state of another qubit without any physical connection, so that, for example, both read 1 when measured. Einstein called it ‘spooky action at a distance’.
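A toy simulation can illustrate the idea. The sketch below, in plain Python, writes down the textbook two-qubit Bell state and samples measurement outcomes from it. The variable and function names are illustrative, and the sampling only mimics measurement statistics on a classical computer; it is not how a quantum device operates.

```python
import math
import random

# Amplitudes for the basis states |00>, |01>, |10>, |11>.
# This is the Bell state (|00> + |11>) / sqrt(2).
LABELS = ["00", "01", "10", "11"]
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def outcome_probabilities(state):
    """Born rule: probability of each outcome is |amplitude| squared."""
    return [abs(amplitude) ** 2 for amplitude in state]


def measure(state, rng):
    """Sample one joint measurement outcome of both qubits."""
    return rng.choices(LABELS, weights=outcome_probabilities(state), k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure(BELL_STATE, rng) for _ in range(1000)]

# Only "00" and "11" ever occur: the two qubits always agree.
print(sorted(set(samples)))
```

Each qubit on its own looks random, yet the two results always match, which is the correlation the text describes.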

Research groups around the world are pursuing different kinds of qubits, each with its own benefits and limitations. Some qubits offer potential for scalability, while others come with very long coherence times, that is, the time for which quantum information can be robustly stored.

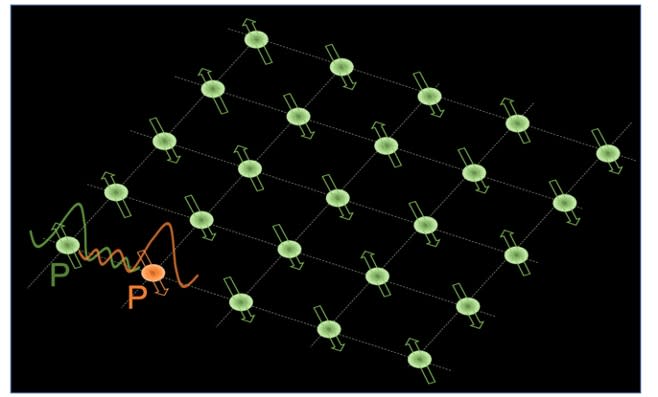

Qubits in silicon are highly promising as they offer both. These qubits are therefore among the front-runners for the design and implementation of a large-scale quantum computer architecture. One way to implement such an architecture in silicon is by placing individual phosphorus atoms on a two-dimensional grid.

The single and two-qubit logical operations are controlled by a grid of nanoelectronic wires, bearing some resemblance to classical logic gates for conventional microelectronic circuits. However, key to this scheme is ultra-precise placement of phosphorus atoms on the silicon grid.

The challenges

However, even with state-of-the-art fabrication technologies, placing phosphorus atoms at precise locations in a silicon lattice is a very challenging task. Small variations (of the order of one atomic lattice site) in their positions are often observed and may have a huge impact on the efficiency of two-qubit operations.

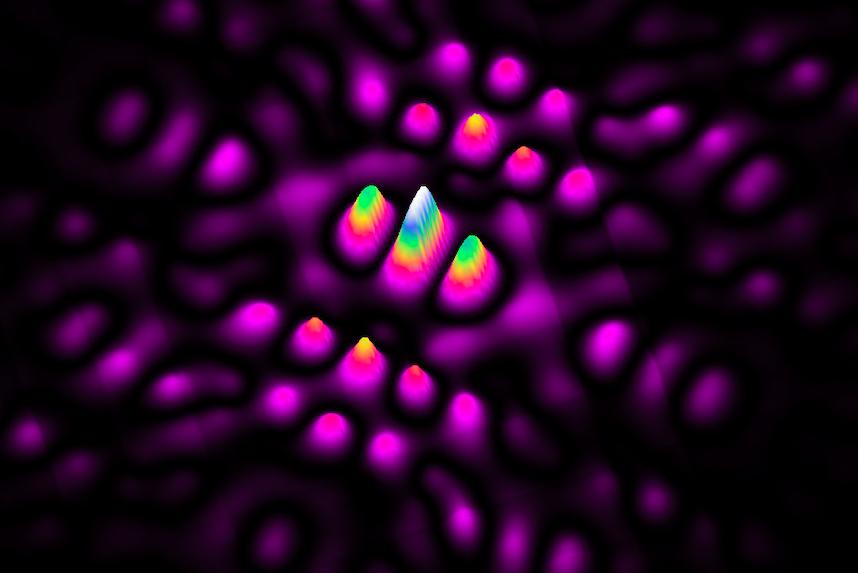

The problem arises because the exchange interaction between the electron qubits bound to phosphorus atoms in silicon depends ultra-sensitively on the positions of those atoms. Exchange interaction is a fundamental quantum mechanical property where two sub-atomic particles such as electrons can interact in real space when their wave functions overlap and make interference patterns, much like two travelling waves interfering on a water surface.

Exchange interaction between electrons on phosphorus atom qubits can be exploited to implement fast two-qubit gates, but any unknown variation can be detrimental to the accuracy of quantum gates. Like logic gates in a conventional computer, the quantum gates are the building blocks of a quantum circuit.

For phosphorus qubits in silicon, even an uncertainty in the location of qubit atoms of the order of one atomic lattice site can alter the corresponding exchange interaction by orders of magnitude, leading to errors in two-qubit gate operations.

Such errors, accumulated over the large-scale architecture, may severely impede the efficiency of a quantum computer, diminishing any quantum advantage expected due to the quantum mechanical properties of qubits.

Finding exact coordinates of qubit atom

In 2016, we worked with researchers from the Centre for Quantum Computation & Communication Technology at the University of New South Wales to develop a technique that could pinpoint exact locations of phosphorus atoms in silicon.

The technique, reported in Nature Nanotechnology, was the first to use computed scanning tunneling microscope (STM) images of phosphorus atom wave functions to pinpoint their spatial locations in silicon.

The images were calculated using a computational framework which allowed electronic calculations to be performed on millions of atoms, utilizing Australia’s national supercomputer facilities at the Pawsey Supercomputing Centre.

These calculations produced maps of electron wave function patterns, where the symmetry, brightness and size of features were directly related to the position of a phosphorus atom in a silicon lattice, around which the electron was bound.

Because each donor atom position led to a distinct map, qubit atom locations could be pinpointed with single-lattice-site precision, an approach known as spatial metrology.
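The logic of matching a measured image to a library of distinct computed maps can be sketched with a toy example. Everything below is hypothetical: the 3x3 patterns, the site labels and the bounded noise are made up for illustration, and the simple nearest-template rule stands in for the far richer comparison of symmetry, brightness and feature size described above.

```python
import random

# Hypothetical "computed maps", one per candidate donor position.
# Real maps are detailed wave function images; these are toy patterns.
TEMPLATES = {
    "site_A": [[0, 1, 0], [1, 1, 1], [0, 1, 0]],
    "site_B": [[1, 0, 1], [0, 1, 0], [1, 0, 1]],
    "site_C": [[1, 1, 0], [1, 0, 0], [0, 0, 0]],
}


def squared_distance(image_a, image_b):
    """Sum of squared pixel differences between two equal-sized images."""
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for x, y in zip(row_a, row_b)
    )


def locate(measured):
    """Return the label of the template closest to the measured image."""
    return min(TEMPLATES, key=lambda label: squared_distance(measured, TEMPLATES[label]))


def add_noise(image, rng, amplitude=0.2):
    """Perturb each pixel by a bounded random amount (toy measurement noise)."""
    return [[v + rng.uniform(-amplitude, amplitude) for v in row] for row in image]


rng = random.Random(42)
noisy_measurement = add_noise(TEMPLATES["site_B"], rng)
print(locate(noisy_measurement))
```

Because the three toy maps differ in many pixels, a small perturbation cannot make one look like another, so the noisy image is still assigned to the position that produced it.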

The technique worked very well at the individual qubit level. However, the next big challenge was to build a framework that could perform this exact spatial pinpointing of atoms at high speed and with minimal human interaction, to cope with the requirements of a universal fault-tolerant quantum computer.

Machine learning

A carefully trained machine learning algorithm can process very large data sets with enormous efficiency.

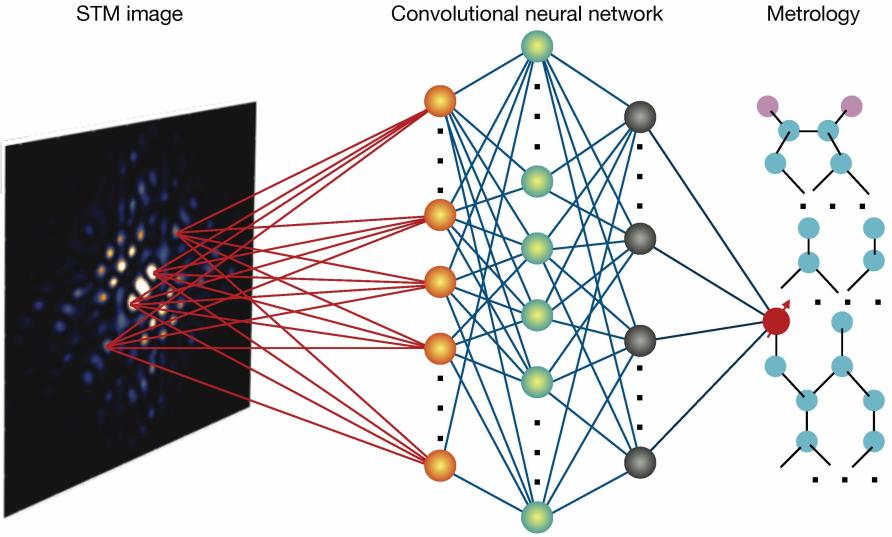

One branch of machine learning, known as convolutional neural networks (CNNs), is an extremely powerful tool for image recognition and classification problems. When a CNN is trained on thousands of sample images, it can precisely recognize previously unseen images (including noisy ones) and classify them.
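The basic operation inside a CNN can be sketched in a few lines. The example below is an illustration in plain Python, not the network used in the work described here: it slides a small filter over a toy image (convolution), keeps only positive responses (ReLU) and downsamples the result (max pooling). The 5x5 image and the hand-picked edge filter are assumptions for illustration; in a real CNN the filter values are learned from the training images.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Toy 5x5 image: dark on the left, bright on the right (a vertical edge).
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]

# Hand-picked filter that responds to dark-to-bright vertical edges.
edge_filter = [[-1.0, 1.0], [-1.0, 1.0]]

feature_map = relu(conv2d_valid(image, edge_filter))
pooled = max_pool_2x2(feature_map)
print(pooled)
```

The pooled output is largest where the edge sits and zero elsewhere. A CNN stacks many such learned filters so that later layers respond to increasingly complex image features, which a final layer then maps to a class label.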

Recognising that the principle underpinning the established spatial metrology of qubit atoms is basically recognising and classifying feature maps of STM images, we decided to train a CNN on the computed STM images. The work is published in the NPJ Computational Materials journal.

The training involved 100,000 STM images and achieved a remarkable learning accuracy of above 99% for the CNN. We then tested the trained CNN on 17,600 test images, which included the blurring and asymmetry noise typically present in realistic environments. The CNN classified the test images with an accuracy of above 98%, confirming that this machine learning-based technique could process qubit measurement data with high throughput, high precision, and minimal human interaction.

This technique also has the potential to scale up to qubits consisting of more than one phosphorus atom, where the number of possible image configurations would increase exponentially. Machine learning-based frameworks could nonetheless readily include any number of possible configurations.

In the coming years, as the number of qubits increases and the size of quantum devices grows, qubit characterisation via manual measurements is likely to be highly challenging and onerous.

This work shows how machine learning techniques such as the one developed here could play a crucial role in this aspect of realising a full-scale fault-tolerant universal quantum computer, the ultimate goal of the global research effort.

This article was first published on Pursuit.