AIhub.org

Navigating the new era of commerce: Exploring the relationship between anthropomorphism in voice assistants and user safety perception

Image created by Guillermo Calahorra-Candao using ChatGPT. Prompt: “create an image of a person conversing with a virtual voice assistant (just like Alexa, Siri, or Google), while simultaneously wondering if the assistant might actually be human”.

Introduction

In an era where technology continuously reshapes our daily interactions, the rise of virtual voice assistants (VAs) like Alexa, Google Home, and Siri represents a significant leap. Originally designed to enhance smartphone usability, these VAs have moved beyond their initial purpose, finding their way into a range of consumer devices and changing the user experience. However, despite this widespread integration, users remain notably reluctant to adopt voice shopping, primarily because of safety concerns. Drawing on our research, this article explores the relationship between anthropomorphism in VAs and users’ perception of safety, with particular emphasis on how it affects the acceptance and use of voice shopping.

The essence of anthropomorphism in voice assistants

The concept of anthropomorphism in technology revolves around endowing non-human entities with human-like attributes. In the context of VAs, this translates to human-like voices and behaviors that resonate with users on a personal level. Our study sheds light on how these human-like characteristics in VAs can significantly influence a user’s sense of safety, thereby affecting their willingness to engage in voice shopping.

Methodology: A deep dive into user perceptions

To examine this relationship, we conducted an online survey across Spain, targeting individuals familiar with VA technology and the concept of voice shopping. Participants interacted with audio clips from popular VAs (Alexa, Google Home, and Siri), and their responses to these interactions provided the data for the study. We analyzed the responses using a combination of exploratory factor analysis, confirmatory factor analysis, and structural equation modeling.

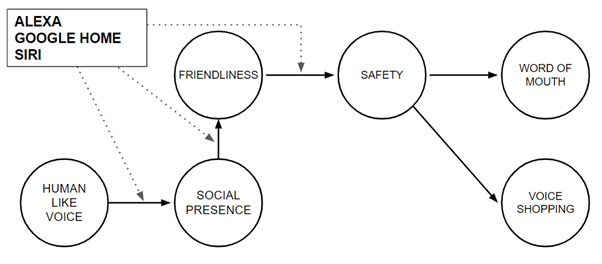

Figure: Proposed model

Findings: The human voice and safety perception

The study revealed several key findings:

- Human-like voice as a trust builder: The presence of a human-like voice in VAs significantly enhances the perception of social presence, making the VA appear more relatable and trustworthy.

- Social presence and friendliness: The increased social presence leads to a stronger perception of friendliness towards the VA.

- Safety in friendliness: This perceived friendliness directly influences users’ safety perceptions, making them feel more secure during interactions with the VA.

- The ripple effect of safety: A heightened sense of safety leads to a greater inclination towards voice shopping and encourages users to spread positive word-of-mouth endorsements.

These findings highlight the pivotal role of perceived safety, shaped by anthropomorphic factors, in driving user acceptance of voice shopping.
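The chain of effects described in the findings above can be written down as a small directed graph. The sketch below is only an illustration of that chain: the construct names are labels chosen here for readability, not the variable names used in the study, and the code traces the hypothesized paths rather than estimating any coefficients.

```python
from collections import defaultdict

# Hypothesized paths, taken from the findings listed above.
# Construct names are illustrative labels, not the study's variable names.
PATHS = [
    ("human_like_voice", "social_presence"),
    ("social_presence", "friendliness"),
    ("friendliness", "perceived_safety"),
    ("perceived_safety", "voice_shopping_intention"),
    ("perceived_safety", "word_of_mouth"),
]


def build_graph(paths):
    """Map each construct to the constructs it directly influences."""
    graph = defaultdict(list)
    for cause, effect in paths:
        graph[cause].append(effect)
    return dict(graph)


def chains_from(graph, start):
    """Return every chain from `start` to a construct with no further effects."""
    effects = graph.get(start, [])
    if not effects:
        return [[start]]
    return [[start] + tail for nxt in effects for tail in chains_from(graph, nxt)]


if __name__ == "__main__":
    for chain in chains_from(build_graph(PATHS), "human_like_voice"):
        print(" -> ".join(chain))
```

Running the script prints two chains, one ending in voice shopping intention and one ending in word-of-mouth. Both pass through perceived safety, which is the mediating role the findings attribute to it.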

Practical implications: Shaping the future of e-commerce

The implications of this study are far-reaching for AI developers, marketers, and e-commerce platforms. The humanization of VAs, particularly through voice, emerges as a crucial factor in bridging the trust gap and enhancing user experience. For e-commerce platforms, understanding and leveraging these insights can lead to more effective strategies in encouraging voice shopping adoption.

Building on the foundations of the study

Delving deeper into the nuances of user interactions with VAs, we find that the human-like voice serves not just as a functional tool but as a bridge to more personalized and comfortable user experiences. As users engage with VAs that speak in a manner akin to human interaction, their comfort with these devices increases, opening the doors to more natural and frequent usage, particularly in the realm of voice shopping.

The human touch in technology: Beyond functionality

In an increasingly digital world, the human touch in technology becomes a powerful tool. The study underlines that voice assistants with human-like voices are not just about technological superiority but about creating a bond with the user. This bond is based on the inherent human tendency to connect with voices that sound familiar and comforting – a reminder of the deep-seated need for human connection, even in interactions with technology.

Safety perception: The bedrock of voice assistant acceptance

Safety perception is a pivotal aspect of our study. In a digital age where privacy concerns are paramount, the feeling of safety while interacting with technology can make or break user acceptance. The research reveals that when VAs exhibit human-like traits, especially in voice, they go beyond the role of mere tools and become trusted assistants. This trust is crucial in the context of voice shopping, where sensitive transactions occur.

Voice shopping: The frontier of e-commerce evolution

Voice shopping, as a frontier in e-commerce evolution, stands at a crucial juncture. The research indicates that the anthropomorphic features in VAs can significantly influence user behavior towards voice shopping. This influence extends to the willingness to try voice shopping, the frequency of use, and the likelihood of recommending the technology to others. In essence, the more users trust their voice assistants, the more likely they are to embrace voice shopping as a regular part of their e-commerce activities.

Another significant finding from the research is the role of word-of-mouth in technology adoption. The study suggests that users who perceive a higher level of safety with their VAs are more inclined to engage in positive word-of-mouth promotion. This behavior is critical in the age of social media and online communities, where user opinions can significantly impact the adoption of new technologies.

Anthropomorphism and personalization: A balancing act

While anthropomorphism in VAs enhances user experience and safety perception, it also introduces the need for a delicate balance between personalization and privacy. As VAs become more human-like, the data they collect and the interactions they engage in raise important questions about user privacy and data security. Future research could explore how to maintain this balance, ensuring that VAs provide personalized experiences without overstepping privacy boundaries.

Concluding thoughts: Embracing the future of voice assistants

Our study provides insights into how anthropomorphism in voice assistants can shape user interaction and the adoption of voice shopping. We hope that this research will help guide the design of future voice assistants.

Reference

The effect of anthropomorphism of virtual voice assistants on perceived safety as an antecedent to voice shopping, Guillermo Calahorra-Candao and María José Martín-de Hoyos.