AIhub.org

How to regularize your regression

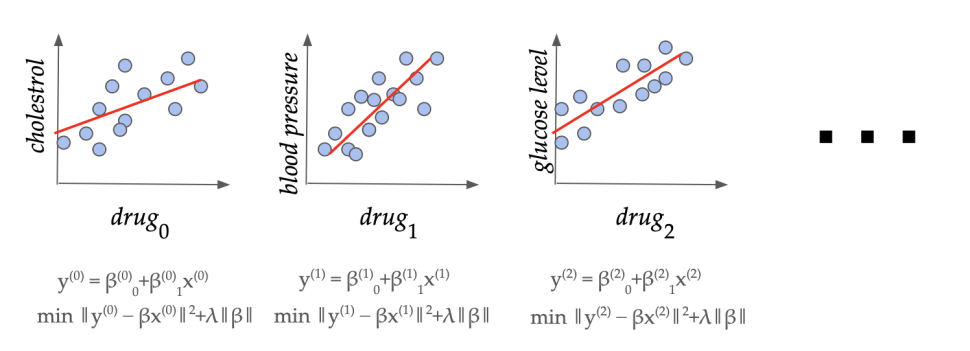

A series of regression instances in a pharmaceutical application. Can we learn how to set the regularization parameter $\lambda$ from similar domain-specific data?

Overview. Perhaps the simplest relation between a real dependent variable $y$ and a vector of features $x$ is a linear model $y = \beta^\top x$. Given some training examples or datapoints consisting of pairs of features and dependent variables $(x_1, y_1), (x_2, y_2), \dots, (x_m, y_m)$, we would like to learn $\beta$ which would give the best prediction $y'$ given features $x'$ of an unseen example. This process of fitting a linear model $\beta$ to the datapoints is called linear regression. This simple yet effective model finds ubiquitous applications, ranging from biological, behavioral, and social sciences to environmental studies and financial forecasting, to make reliable predictions on future data. In ML terminology, linear regression is a supervised learning algorithm with low variance and good generalization properties. It is much less data-hungry than typical deep learning models, and performs well even with small amounts of training data. Further, to avoid overfitting the model to the training data, which reduces the prediction performance on unseen data, one typically uses regularization, which modifies the objective function of the linear model to reduce the impact of outliers and irrelevant features (read on for details).
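As a minimal illustration of fitting and then using a linear model, the NumPy sketch below fits $\beta$ by ordinary (unregularized) least squares with `np.linalg.lstsq` and predicts $y' = \beta^\top x'$ for a new point. The data are synthetic and noiseless, and the helper names `fit_linear` and `predict` are hypothetical, chosen only for this example.

```python
import numpy as np

def fit_linear(X, y):
    """Ordinary least squares: the beta minimizing ||y - X beta||_2^2."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def predict(beta, x_new):
    """Prediction y' = beta^T x' for an unseen feature vector x'."""
    return float(x_new @ beta)

rng = np.random.default_rng(0)
beta_true = np.array([2.0, -1.0, 0.5])
X = rng.normal(size=(20, 3))      # m = 20 datapoints, 3 features each
y = X @ beta_true                  # noiseless linear data, for illustration only
beta_hat = fit_linear(X, y)
x_new = np.array([1.0, 1.0, 1.0])
print(beta_hat, predict(beta_hat, x_new))
```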

The most common method for linear regression is “regularized least squares”, where one finds the $\beta$ which minimizes

$$\sum_{i=1}^{m} \left(y_i - \beta^\top x_i\right)^2 + \lambda \|\beta\|.$$

Here the first term captures the error of $\beta$ on the training set, and the second term is a norm-based penalty to avoid overfitting (e.g. reducing impact of outliers in data). How to set $\lambda$ appropriately in this fundamental method depends on the data domain and is a longstanding open question. In typical modern applications, we have access to several similar datasets $(X^{(1)}, y^{(1)}), (X^{(2)}, y^{(2)}), \dots$ from the same application domain. For example, there are often multiple drug trial studies in a pharmaceutical company for studying the different effects of similar drugs. In this work, we show that we can indeed learn a good domain-specific value of $\lambda$ with strong theoretical guarantees of accuracy on unseen datasets from the same domain, and give bounds on how much data is needed to achieve this.
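To see what the penalty weight does, here is a small NumPy sketch of the squared-L2 case. Using the penalty $\lambda\|\beta\|_2^2$ (Ridge regression) is an assumption, since the text leaves the norm general; it has the closed-form minimizer $\beta = (X^\top X + \lambda I)^{-1} X^\top y$. On synthetic data, the norm of the fitted coefficients shrinks as $\lambda$ grows, and which $\lambda$ is best is exactly the question this post is about.

```python
import numpy as np

def ridge(X, y, lam):
    """Minimize ||y - X beta||_2^2 + lam * ||beta||_2^2 via its closed form."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

rng = np.random.default_rng(1)
X = rng.normal(size=(30, 5))
y = X @ rng.normal(size=5) + 0.1 * rng.normal(size=30)   # synthetic data

for lam in [0.0, 1.0, 10.0, 100.0]:
    beta = ridge(X, y, lam)
    # Larger lam pulls the whole coefficient vector towards zero.
    print(f"lambda={lam:>6}: ||beta||_2 = {np.linalg.norm(beta):.3f}")
```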

As our main result, we show that if the data has $p$ features (i.e., the dimension of feature vector $x_i$ is $p$), then after seeing $O(p/\epsilon^2)$ datasets, we can learn a value of $\lambda$ which has error (averaged over the domain) within $\epsilon$ of the best possible value of $\lambda$ for the domain. We also extend our results to sequential data, binary classification (i.e. $y$ is binary valued) and non-linear regression.

Problem Setup. Linear regression with norm-based regularization penalty is one of the most popular techniques that one encounters in introductory courses to statistics or machine learning. It is widely used for data analysis and feature selection, with numerous applications including medicine, quantitative finance (the linear factor model), climate science, and so on. The regularization penalty is typically a weighted additive term (or terms) of the norms of the learned linear model $\beta$, where the weight is carefully selected by a domain expert. Mathematically, a dataset has dependent variable $y \in \mathbb{R}^m$ consisting of $m$ examples, and predictor variables $X \in \mathbb{R}^{m \times p}$ with $p$ features for each of the $m$ datapoints. The linear regression approach (with squared loss) consists of solving a minimization problem

$$\hat{\beta}_{X,y}(\lambda_1, \lambda_2) = \arg\min_{\beta \in \mathbb{R}^p} \|y - X\beta\|_2^2 + \lambda_1 \|\beta\|_1 + \lambda_2 \|\beta\|_2^2,$$

where the last two terms, $\lambda_1 \|\beta\|_1 + \lambda_2 \|\beta\|_2^2$, form the regularization penalty. Here $\lambda_1, \lambda_2 \ge 0$ are the regularization coefficients penalizing the L1 and L2 norms, respectively, of the learned linear model $\beta$. For general $\lambda_1$ and $\lambda_2$ the above algorithm is popularly known as the Elastic Net, while setting $\lambda_1 = 0$ recovers Ridge regression and setting $\lambda_2 = 0$ corresponds to LASSO. Ridge and LASSO regression are both individually popular methods in practice, and the Elastic Net incorporates the advantages of both.
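The Elastic Net objective has no closed form in general, but it can be minimized by cyclic coordinate descent, a standard solver swapped in here purely for illustration (it is not the tuning method of this work). Each coordinate update has the closed form $\beta_j \leftarrow S(\rho_j, \lambda_1/2) / (\|X_j\|_2^2 + \lambda_2)$, where $X_j$ is the $j$-th column, $\rho_j$ is the inner product of $X_j$ with the residual that excludes feature $j$, and $S(z, t) = \mathrm{sign}(z)\max(|z| - t, 0)$ is soft-thresholding. The function names and the fixed iteration count are assumptions of this sketch.

```python
import numpy as np

def soft_threshold(z, t):
    """S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def elastic_net(X, y, lam1, lam2, n_iter=1000):
    """Cyclic coordinate descent for
    ||y - X beta||_2^2 + lam1 * ||beta||_1 + lam2 * ||beta||_2^2.
    Assumes no all-zero column when lam2 == 0 (else division by zero)."""
    m, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)          # ||X_j||_2^2 for every column j
    r = y - X @ beta                        # full residual y - X beta
    for _ in range(n_iter):
        for j in range(p):
            # X_j^T (residual with feature j's contribution added back)
            rho = X[:, j] @ r + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam1 / 2.0) / (col_sq[j] + lam2)
            r -= X[:, j] * (new - beta[j])  # keep the residual in sync
            beta[j] = new
    return beta

rng = np.random.default_rng(2)
X = rng.normal(size=(40, 4))
y = X @ np.array([1.5, 0.0, -2.0, 0.0]) + 0.1 * rng.normal(size=40)
print(elastic_net(X, y, lam1=5.0, lam2=1.0))
```

Setting `lam1=0` reproduces the Ridge closed form, and any `lam1` of at least $2\max_j |X_j^\top y|$ keeps every coefficient at exactly zero, which is the LASSO sparsity effect taken to its extreme.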

Despite the central role these coefficients play in linear regression, the problem of setting them in a principled way has been a challenging open problem for several decades. In practice, one typically uses “grid search” cross-validation, which involves (1) splitting the dataset into several subsets consisting of training and validation sets, (2) training several models (corresponding to different values of regularization coefficients) on each training set, and (3) comparing the performance of the models on the corresponding validation sets. This approach has several limitations.

- First, this is very computationally intensive, especially with the large datasets that typical modern applications involve, as one needs to train and evaluate the model for a large number of hyperparameter values and training-validation splits. We would like to avoid repeating this cumbersome process for similar applications.

- Second, theoretical guarantees on how well the coefficients learned by this procedure will perform on unseen examples are not known, even when the test data are drawn from the same distribution as the training set.

- Finally, this can only be done for a finite set of hyperparameter values and it is not clear how the selected parameter compares to the best parameter from the continuous domain of coefficients. In particular, the loss as a function of the regularization parameter is not known to be Lipschitz.
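The three grid-search steps described above can be sketched as follows, using Ridge regression to keep the example short. The fold count `k`, the grid, and the helper names are illustrative assumptions. The counter `n_fits` makes the cost explicit: every candidate value is refit on every fold, so the work grows as (grid size) × (number of folds), and the procedure can only ever return a value from the finite grid.

```python
import numpy as np

def ridge(X, y, lam):
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def grid_search_cv(X, y, grid, k=5, seed=0):
    """(1) split into k folds, (2) fit one model per (lambda, fold),
    (3) return the lambda with the lowest mean validation error."""
    m = X.shape[0]
    folds = np.array_split(np.random.default_rng(seed).permutation(m), k)
    n_fits = 0
    mean_err = {}
    for lam in grid:
        errs = []
        for val_idx in folds:
            train_idx = np.setdiff1d(np.arange(m), val_idx)
            beta = ridge(X[train_idx], y[train_idx], lam)
            n_fits += 1
            errs.append(np.mean((y[val_idx] - X[val_idx] @ beta) ** 2))
        mean_err[lam] = float(np.mean(errs))
    best = min(mean_err, key=mean_err.get)
    return best, mean_err, n_fits

rng = np.random.default_rng(3)
X = rng.normal(size=(60, 8))
y = X @ rng.normal(size=8) + 0.5 * rng.normal(size=60)
grid = [0.01, 0.1, 1.0, 10.0, 100.0]
best, errs, n_fits = grid_search_cv(X, y, grid, k=5)
print(best, n_fits)   # n_fits = len(grid) * k model trainings
```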

Our work addresses all three of the above limitations simultaneously in the data-driven setting, which we motivate and describe next.

The importance of regularization

A visualization of the L1 and L2 regularized regressions.

The regularization coefficients $\lambda_1$ and $\lambda_2$ play a crucial role across fields. In machine learning, controlling the norm of model weights $\beta$ implies provable generalization guarantees and prevents over-fitting in practice. In statistical data analysis, their combined use yields parsimonious and interpretable models. In Bayesian statistics they correspond to imposing specific priors on $\beta$ (a Laplace prior for the L1 term and a Gaussian prior for the L2 term). Effectively, $\lambda_2$ regularizes $\beta$ by uniformly shrinking all coefficients, while $\lambda_1$ encourages the model vector to be sparse. This means that while they do yield learning-theoretic and statistical benefits, setting them too high will cause models to under-fit the data. The question of how to set the regularization coefficients becomes even more unclear in the case of the Elastic Net, as one must juggle trade-offs between sparsity, feature correlation, and bias when setting both $\lambda_1$ and $\lambda_2$ simultaneously.
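A quick way to see the different roles of $\lambda_1$ and $\lambda_2$ is the special case of an orthonormal design, $X^\top X = I$ (an assumption made only for this illustration). The problem then decouples across coordinates and the Elastic Net coefficient is $\hat\beta_j = S(z_j, \lambda_1/2)/(1 + \lambda_2)$, where $z = X^\top y$ are the ordinary least-squares coefficients and $S$ is soft-thresholding: $\lambda_2$ scales every coefficient by the same factor, while $\lambda_1$ sets the small ones exactly to zero. The plain-Python sketch below uses made-up values of $z$.

```python
def soft_threshold(z, t):
    # Shrinks z towards zero by t, and returns exactly 0 when |z| <= t.
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def elastic_net_orthonormal(z, lam1, lam2):
    """Elastic Net solution when X has orthonormal columns (X^T X = I);
    z = X^T y are the ordinary least-squares coefficients."""
    return [soft_threshold(zj, lam1 / 2.0) / (1.0 + lam2) for zj in z]

z = [3.0, -0.5, 1.2]
print(elastic_net_orthonormal(z, lam1=0.0, lam2=1.0))  # L2 only: every entry halved
print(elastic_net_orthonormal(z, lam1=2.0, lam2=0.0))  # L1 only: smallest entry zeroed
```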

The data-driven algorithm design paradigm

In many applications, one has access to not just a single dataset, but a large number of similar datasets coming from the same domain. This is increasingly true in the age of big data, as more and more fields record and store data for pattern analysis. For example, a drug company typically conducts a large number of trials for a variety of different drugs. Similarly, a climate scientist monitors several different environmental variables and continuously collects new data. In such a scenario, can we exploit the similarity of the datasets to avoid doing cumbersome cross-validation each time we see a new dataset? This motivates the data-driven algorithm design setting, introduced in the theory of computing community by Gupta and Roughgarden as a tool for design and analysis of algorithms that work well on typical datasets from an application domain (as opposed to worst-case analysis). This approach has been successfully applied to several combinatorial problems including clustering, mixed integer programming, automated mechanism design, and graph-based semi-supervised learning (Balcan, 2020). We show how to apply this analytical paradigm to tuning the regularization parameters in linear regression, extending the scope of its application beyond combinatorial problems [1, 2].

The learning model

A model for studying repeated regression instances from the same domain.

Formally, we model data coming from the same domain as a fixed (but unknown) distribution $\mathcal{D}$ over the problem instances. To capture the well-known cross-validation setting, we consider each problem instance of the form $P = (X_{\mathrm{train}}, y_{\mathrm{train}}, X_{\mathrm{val}}, y_{\mathrm{val}})$. That is, the random process that generates the datasets and the (random or deterministic) process that generates the splits given the data have been combined under $\mathcal{D}$. The goal of the learning process is to take $N$ problem samples generated from the distribution $\mathcal{D}$, and learn regularization coefficients $\hat\lambda = (\hat\lambda_1, \hat\lambda_2)$ that would generalize well over unseen problem instances drawn from $\mathcal{D}$. That is, on an unseen test instance $P' = (X'_{\mathrm{train}}, y'_{\mathrm{train}}, X'_{\mathrm{val}}, y'_{\mathrm{val}})$, we will fit the model $\hat\beta_{X'_{\mathrm{train}}, y'_{\mathrm{train}}}(\hat\lambda)$ using the learned regularization coefficients $\hat\lambda$ on $(X'_{\mathrm{train}}, y'_{\mathrm{train}})$, and evaluate the loss on the set $(X'_{\mathrm{val}}, y'_{\mathrm{val}})$. We seek the value of $\hat\lambda$ that minimizes this loss, in expectation over the draw of the random test sample from $\mathcal{D}$.
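This learning model can be sketched as empirical risk minimization over a finite grid: sample $N$ problem instances, average each candidate's validation loss across them, keep the best, and check it on fresh instances. Everything about the distribution here is hypothetical (a synthetic domain whose datasets share a common coefficient pattern), the penalty is restricted to Ridge ($\lambda_1 = 0$) for brevity, and the grid search is a simplification rather than the algorithm analyzed in the papers.

```python
import numpy as np

def ridge(X, y, lam):
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def sample_instance(rng, p=10, m_train=30, m_val=20):
    """Hypothetical domain D: every dataset shares a common coefficient
    pattern plus a dataset-specific perturbation and observation noise."""
    beta = np.linspace(1.0, -1.0, p) + 0.3 * rng.normal(size=p)
    X = rng.normal(size=(m_train + m_val, p))
    y = X @ beta + rng.normal(size=m_train + m_val)
    return X[:m_train], y[:m_train], X[m_train:], y[m_train:]

def val_loss(instance, lam):
    """Fit on the training split, return mean squared error on the validation split."""
    X_tr, y_tr, X_val, y_val = instance
    beta = ridge(X_tr, y_tr, lam)
    return float(np.mean((y_val - X_val @ beta) ** 2))

def learn_lambda(instances, grid):
    """Grid value with the lowest validation loss averaged over instances."""
    avg = {lam: np.mean([val_loss(P, lam) for P in instances]) for lam in grid}
    return min(avg, key=avg.get), avg

rng = np.random.default_rng(4)
train_instances = [sample_instance(rng) for _ in range(50)]   # N = 50
grid = [0.01, 0.1, 1.0, 3.0, 10.0, 30.0, 100.0]
lam_hat, avg = learn_lambda(train_instances, grid)

# Evaluate the learned value on fresh, unseen instances from the same D.
test_instances = [sample_instance(rng) for _ in range(50)]
print(lam_hat, np.mean([val_loss(P, lam_hat) for P in test_instances]))
```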

How much data do we need?

The model $\hat\beta_{X,y}(\lambda_1, \lambda_2)$ clearly depends on both the dataset $(X, y)$ and the regularization coefficients $(\lambda_1, \lambda_2)$. A key tool in data-driven algorithm design is the analysis of the “dual function”, which is the loss expressed as a function of the parameters, for a fixed problem instance. This is typically easier to analyze than the “primal function” (loss for a fixed parameter, as problem instances are varied) in data-driven algorithm design problems. For Elastic Net regression, the dual is the validation loss on a fixed validation set for models trained with different values of $(\lambda_1, \lambda_2)$ (i.e. a two-parameter function) for a fixed training set. Typically the dual functions in combinatorial problems exhibit a piecewise structure, where the behavior of the loss function can have sharp transitions across the pieces. For example, in clustering this piecewise behavior could correspond to learning a different cluster in each piece. Prior research has shown that if we can bound the complexity of the boundary and piece functions in the dual function, then we can give a sample complexity guarantee, i.e. we can answer the question “how much data is sufficient to learn a good value of the parameter?”

An illustration of the piecewise structure of the Elastic Net dual loss function. Here $r_1$ and $r_2$ are polynomial boundary functions, and $f_{\pm\pm}$ are piece functions which are fixed rational functions given the signs of the boundary functions.

Somewhat surprisingly, we show that the dual loss function exhibits a piecewise structure even in linear regression, a classic continuous optimization problem. Intuitively, the pieces correspond to different subsets of the features being “active”, i.e. having non-zero coefficients in the learned model $\hat\beta_{X,y}(\lambda_1, \lambda_2)$. Specifically, we show that the piece boundaries of the dual function are polynomial functions of bounded degree, and the loss within each piece is a rational function (ratio of two polynomial functions) again of bounded degree. We use this structure to establish a bound on the learning-theoretic complexity of the dual function; more precisely, we bound its pseudo-dimension (a generalization of the VC dimension to real-valued functions).
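The piecewise structure is easy to see in the orthonormal-design special case ($X_{\mathrm{train}}^\top X_{\mathrm{train}} = I$, an assumption for this illustration only). With $z = X_{\mathrm{train}}^\top y_{\mathrm{train}}$, feature $j$ is active exactly when $|z_j| > \lambda_1/2$, so the piece boundaries are the lines $\lambda_1 = 2|z_j|$ in the $(\lambda_1, \lambda_2)$ plane. Within a piece, each active coefficient equals $(z_j - \mathrm{sign}(z_j)\lambda_1/2)/(1 + \lambda_2)$, so the squared validation loss is a polynomial in $\lambda_1$ and $1/(1+\lambda_2)$, i.e. a rational function of the two parameters. The sketch below, with made-up values of $z$, lists the boundaries and the active set in each piece; the general case treated in the papers has more complex polynomial boundaries.

```python
def piece_boundaries(z):
    """Under an orthonormal training design, feature j leaves the active
    set exactly when lam1 crosses 2*|z_j|: these are the piece boundaries."""
    return sorted(2.0 * abs(zj) for zj in z)

def active_set(z, lam1):
    """Indices of features with non-zero Elastic Net coefficients at lam1
    (independent of lam2 in this orthonormal special case)."""
    return tuple(j for j, zj in enumerate(z) if abs(zj) > lam1 / 2.0)

z = [3.0, -0.5, 1.2]   # illustrative OLS coefficients X^T y
print(piece_boundaries(z))
for lam1 in [0.5, 1.5, 3.0, 7.0]:   # one value inside each piece
    print(lam1, active_set(z, lam1))
```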

Theorem. The pseudo-dimension of the Elastic Net dual loss function is $\Theta(p)$, where $p$ is the feature dimension.

The $\Theta$ notation here means we have an upper bound of $O(p)$ as well as a lower bound $\Omega(p)$ on the pseudo-dimension. Roughly speaking, the pseudo-dimension captures the complexity of the function class from a learning perspective, and corresponds to the number of samples needed to guarantee small generalization error (average error on test data). Remarkably, we show an asymptotically tight bound on the pseudo-dimension by establishing an $\Omega(p)$ lower bound, which is technically challenging and needs an explicit construction of a collection of “hard” instances. Tight lower bounds are not known for several typical problems in data-driven algorithm design. Our bound depends only on $p$ (the number of features) and is independent of the number of datapoints $m$. An immediate consequence of our bound is the following sample complexity guarantee:

Theorem. Given any distribution $\mathcal{D}$ (fixed, but unknown), we can learn regularization parameters $\hat\lambda = (\hat\lambda_1, \hat\lambda_2)$ which obtain error within any $\epsilon > 0$ of the best possible parameter with probability $1 - \delta$ using only $O\left(\frac{1}{\epsilon^2}\left(p + \log\frac{1}{\delta}\right)\right)$ problem samples.
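For completeness, here is the standard argument behind the step from a uniform generalization bound to a guarantee of this form. Write $\ell(\lambda)$ for the expected validation loss under $\mathcal{D}$, $\hat\ell_N(\lambda)$ for its average over the $N$ sampled problems, $\hat\lambda$ for the minimizer of $\hat\ell_N$, and $\lambda^*$ for a best parameter (notation introduced here for illustration). The pseudo-dimension bound is what ensures, for bounded losses and $N$ as in the theorem, that $|\hat\ell_N(\lambda) - \ell(\lambda)| \le \epsilon/2$ holds simultaneously for all $\lambda$ with probability at least $1 - \delta$. On that event,

$$\ell(\hat\lambda) \;\le\; \hat\ell_N(\hat\lambda) + \frac{\epsilon}{2} \;\le\; \hat\ell_N(\lambda^*) + \frac{\epsilon}{2} \;\le\; \ell(\lambda^*) + \epsilon,$$

where the middle inequality holds because $\hat\lambda$ minimizes $\hat\ell_N$.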

One way to understand our results is to instantiate them in the cross-validation setting. Consider the commonly used techniques of leave-one-out cross validation (LOOCV) and Monte Carlo cross validation (repeated random train-validation splits, typically independent and in a fixed proportion). Given a dataset of size $m$, LOOCV would require $m$ regression fits, which can be computationally expensive for large datasets. Alternatively, we can consider draws from a distribution $\mathcal{D}_{\mathrm{LOO}}$ which generates problem instances $P$ from a fixed dataset $(X, y)$ by uniformly selecting $j \in \{1, \dots, m\}$ and setting $P = (X_{-j}, y_{-j}, x_j, y_j)$, where $X_{-j}, y_{-j}$ denote the dataset with the $j$-th example removed. Our result now implies that roughly $O(p/\epsilon^2)$ iterations are enough to determine an Elastic Net parameter $\hat\lambda$ with loss within $\epsilon$ (with high probability) of the parameter $\lambda^*$ obtained from running the full LOOCV. Similarly, we can define a distribution $\mathcal{D}_{\mathrm{MC}}$ to capture the Monte Carlo cross validation procedure and determine the number of iterations sufficient to get an $\epsilon$-approximation of the loss achieved by parameter selection with an arbitrarily large number of runs. Thus, in a very precise sense, our results answer the question of how much cross-validation is enough to effectively implement the above techniques.
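The LOOCV instantiation can be sketched as follows: `full_loocv` performs all $m$ leave-one-out fits per candidate, while `sampled_loocv` draws a smaller number of problem instances from $\mathcal{D}_{\mathrm{LOO}}$ (uniform $j$, with replacement) and minimizes the average loss over those. Ridge regression, the finite grid, the synthetic data, and the sample size of 40 are illustrative assumptions; the result above is what indicates how large that sample size needs to be for the Elastic Net.

```python
import numpy as np

def ridge(X, y, lam):
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def loo_loss(X, y, j, lam):
    """Problem instance P = (X_{-j}, y_{-j}, x_j, y_j): fit without point j,
    return the squared error on point j."""
    mask = np.arange(len(y)) != j
    beta = ridge(X[mask], y[mask], lam)
    return float((y[j] - X[j] @ beta) ** 2)

def full_loocv(X, y, grid):
    """Full LOOCV: len(grid) * m fits."""
    avg = {lam: np.mean([loo_loss(X, y, j, lam) for j in range(len(y))])
           for lam in grid}
    return min(avg, key=avg.get), avg

def sampled_loocv(X, y, grid, n_samples, seed=0):
    """Draw n_samples instances from D_LOO (uniform j, with replacement)."""
    js = np.random.default_rng(seed).integers(0, len(y), size=n_samples)
    avg = {lam: np.mean([loo_loss(X, y, j, lam) for j in js]) for lam in grid}
    return min(avg, key=avg.get), avg

rng = np.random.default_rng(5)
X = rng.normal(size=(200, 5))
y = X @ rng.normal(size=5) + rng.normal(size=200)
grid = [0.1, 1.0, 10.0, 100.0]
lam_full, avg_full = full_loocv(X, y, grid)             # 4 * 200 = 800 fits
lam_samp, _ = sampled_loocv(X, y, grid, n_samples=40)   # 4 * 40 = 160 fits
print(lam_full, lam_samp, avg_full[lam_samp] - avg_full[lam_full])
```

The printed gap is the excess full-LOOCV loss of the sampled choice; it is zero whenever both procedures pick the same grid value.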

Sequential data and online learning

A more challenging variant of the problem assumes that the problem instances arrive sequentially, and we need to set the parameter for each instance using only the previously seen instances. We can think of this as a game between an online adversary and the learner, where the adversary wants to make the sequence of problems as hard as possible. Note that we no longer assume that the problem instances are drawn from a fixed distribution, and this setting allows problem instances to depend on previously seen instances, which is often more realistic (even if there is no actual adversary generating worst-case problem sequences). The learner’s goal is to perform as well as the best fixed parameter in hindsight, and the difference is called the “regret” of the learner.

To obtain positive results, we make a mild assumption on the smoothness of the data: we assume that the prediction values $y$ are drawn from a bounded density distribution. This captures a common data pre-processing step of adding a small amount of uniform noise to the data for model stability, e.g. by setting the jitter parameter in the popular Python library scikit-learn. Under this assumption, we show further structure on the dual loss function. Roughly speaking, we show that the locations of the piece boundaries of the dual function across the problem instances do not concentrate in a small region of the parameter space. This in turn implies (using Balcan et al., 2018) the existence of an online learner with average expected regret $O(1/\sqrt{T})$, meaning that we converge to the performance of the best fixed parameter in hindsight as the number of online rounds $T$ increases.
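To make the notion of regret concrete, here is a sketch of the classic exponentially weighted forecaster (Hedge) over a finite grid of $K$ candidate parameters. This is a swapped-in simplification: the result above relies on continuous exponential weights over the parameter space under a dispersion condition (Balcan et al., 2018), whereas here the parameter set is finite, the per-round losses are made up and assumed normalized to $[0, 1]$, and the names are hypothetical. For this finite case, the standard guarantee with step size $\eta = \sqrt{8\ln K / T}$ is regret at most $\sqrt{(T \ln K)/2}$, so the average regret decays as $O(1/\sqrt{T})$.

```python
import math

def hedge(loss_rounds, eta):
    """Exponentially weighted forecaster over K candidates.

    loss_rounds[t][k] in [0, 1] is the loss of candidate k at round t.
    Returns (learner's cumulative expected loss, best fixed candidate's loss).
    """
    K = len(loss_rounds[0])
    cum = [0.0] * K                   # cumulative loss of each candidate so far
    total = 0.0
    for losses in loss_rounds:
        # Weights use only previously seen rounds; shift by the min for stability.
        base = min(cum)
        w = [math.exp(-eta * (c - base)) for c in cum]
        s = sum(w)
        total += sum(wk / s * lk for wk, lk in zip(w, losses))
        cum = [c + lk for c, lk in zip(cum, losses)]
    return total, min(cum)

T, K = 400, 5
# Hypothetical normalized losses: candidate 2 is steady, the others oscillate.
rounds = [[0.3 if k == 2 else 0.5 + 0.4 * math.sin(t + k) for k in range(K)]
          for t in range(T)]
eta = math.sqrt(8 * math.log(K) / T)
alg_loss, best_loss = hedge(rounds, eta)
print("average regret:", (alg_loss - best_loss) / T)
```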

Extension to binary classification, including logistic regression

Linear classifiers are also popular for the task of binary classification, where the $y$ values are now restricted to $-1$ or $+1$. Regularization is also crucial here to learn effective models by avoiding overfitting and selecting important variables. It is particularly common to use logistic regression, where the squared loss above is replaced by the logistic loss function,

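$$\sum_{i=1}^{m} \log\left(1 + e^{-y_i \beta^\top x_i}\right) + \lambda_1 \|\beta\|_1 + \lambda_2 \|\beta\|_2^2.$$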

The exact loss minimization problem is significantly more challenging in this case, and it is correspondingly difficult to analyze the dual loss function. We overcome this challenge by using a proxy dual function which approximates the true loss function, but has a simpler piecewise structure. Roughly speaking, the proxy function considers a fine parameter grid of width $\epsilon$ and approximates the loss function at each point on the grid. Furthermore, it is piecewise linear and known to approximate the true loss function to within an error of $O(\epsilon^2)$ at all points (Rosset, 2004).
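For concreteness, the regularized logistic objective can be evaluated in a numerically stable way with `np.logaddexp`, and in the smooth case $\lambda_1 = 0$ it can be minimized by plain gradient descent. This NumPy sketch is illustrative only: the step size, iteration count, and synthetic labels in $\{-1, +1\}$ are assumptions, and it does not implement the piecewise-linear proxy used in the analysis. At $\beta = 0$ every data term equals $\log 2$, which gives a handy sanity check.

```python
import numpy as np

def logistic_objective(beta, X, y, lam1, lam2):
    """sum_i log(1 + exp(-y_i x_i^T beta)) + lam1*||beta||_1 + lam2*||beta||_2^2,
    with labels y_i in {-1, +1}."""
    margins = y * (X @ beta)
    data_term = np.sum(np.logaddexp(0.0, -margins))   # stable log(1 + e^{-t})
    return data_term + lam1 * np.sum(np.abs(beta)) + lam2 * beta @ beta

def fit_l2_logistic(X, y, lam2, lr=0.01, n_iter=2000):
    """Plain gradient descent for the smooth lam1 = 0 case."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        margins = y * (X @ beta)
        # d/dbeta log(1 + e^{-m_i}) = -y_i x_i / (1 + e^{m_i}), computed stably
        sig = 0.5 * (1.0 - np.tanh(margins / 2.0))    # = 1 / (1 + e^{m_i})
        grad = -(X.T @ (y * sig)) + 2.0 * lam2 * beta
        beta -= lr * grad
    return beta

rng = np.random.default_rng(6)
X = rng.normal(size=(100, 3))
scores = X @ np.array([1.0, -2.0, 0.5]) + 0.5 * rng.normal(size=100)
y = np.where(scores > 0, 1.0, -1.0)
beta0 = np.zeros(3)
beta = fit_l2_logistic(X, y, lam2=1.0)
print(logistic_objective(beta0, X, y, 0.0, 1.0), logistic_objective(beta, X, y, 0.0, 1.0))
```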

Our main result for logistic regression is that the generalization error with $N$ samples drawn from the distribution $\mathcal{D}$ is bounded by $O\left(\sqrt{\frac{m^2 + \log(1/\epsilon)}{N}} + \epsilon^2 + \sqrt{\frac{\log(1/\delta)}{N}}\right)$, with (high) probability $1 - \delta$ over the draw of samples. Here $m$ is the size of the validation set, which is often small or even constant. This bound is not directly comparable to the pseudo-dimension-based bounds above, and since we do not have lower bounds in this setting, the tightness of our results is an interesting open question.

Beyond the linear case: kernel regression

Beyond linear regression. Source: xkcd.

So far, we have assumed that the dependent variable $y$ has a linear dependence on the predictor variables. While this is a great first thing to try in many applications, very often there is a non-linear relationship between the variables. In such cases, linear regression can perform poorly. A common alternative is to use Kernelized Least Squares Regression, where the input $x$ is implicitly mapped to a high (or even infinite) dimensional feature space using the “kernel trick”. As a corollary of our main results, we can show that the pseudo-dimension of the dual loss function in this case is $O(m)$, where $m$ is the size of the training set in a single problem instance. Our results do not make any assumptions on the $m$ samples within a problem instance/dataset; if these samples within problem instances are further assumed to be i.i.d. draws from some data distribution (distinct from the problem distribution $\mathcal{D}$), then well-known results imply that $O(r \log p)$ samples are sufficient to learn the optimal LASSO coefficient ($r$ denotes the number of non-zero coefficients in the optimal regression fit).
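Kernelized least squares with an L2 penalty (kernel ridge regression) has a closed form worth seeing: the fitted function is $f(x) = \sum_i \alpha_i k(x_i, x)$ with $\alpha = (K + \lambda I)^{-1} y$, where $K$ is the $m \times m$ kernel matrix of the training points. The sketch below assumes an RBF kernel with a fixed width for the non-linear example and uses a linear kernel to check agreement with ordinary Ridge regression; these kernel choices and the helper names are assumptions. One informal intuition for why the bound scales with $m$ is that the model has one dual coefficient per training point, whatever the dimension of the implicit feature space.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """k(a, b) = exp(-gamma * ||a - b||^2) (a hypothetical kernel choice)."""
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def linear_kernel(A, B):
    """k(a, b) = a^T b, which recovers ordinary Ridge regression."""
    return A @ B.T

def kernel_ridge_fit(X, y, lam, kernel):
    """Dual coefficients alpha = (K + lam I)^{-1} y, one per training point."""
    K = kernel(X, X)
    return np.linalg.solve(K + lam * np.eye(len(y)), y)

def kernel_ridge_predict(X_train, alpha, X_new, kernel):
    """f(x) = sum_i alpha_i k(x_i, x) for each row x of X_new."""
    return kernel(X_new, X_train) @ alpha

rng = np.random.default_rng(7)
X = rng.uniform(-3, 3, size=(40, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=40)   # non-linear target
alpha = kernel_ridge_fit(X, y, lam=0.1, kernel=rbf_kernel)
X_new = np.array([[0.0], [1.5]])
print(kernel_ridge_predict(X, alpha, X_new, rbf_kernel))
```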

Some final remarks

We consider how to tune the norm-based regularization parameters in linear regression. We pin down the learning-theoretic complexity of the loss function, which may be of independent interest. Our results extend to online learning, linear classification, and kernel regression. A key direction for future research is developing an efficient implementation of the algorithms underlying our approach.

More broadly, regularization is a fundamental technique in machine learning, including deep learning where it can take the form of dropout rates, or parameters in the loss function, with significant impact on the performance of the overall algorithm. Our research opens up the exciting question of tuning learnable parameters even in continuous optimization problems. Finally, our research captures an increasingly typical scenario with the advent of the data era, where one has access to repeated instances of data from the same application domain.

For further details about our cool results and the mathematical machinery we used to derive them, check out our papers linked below!

[1] Balcan, M.-F., Khodak, M., Sharma, D., & Talwalkar, A. (2022). Provably tuning the ElasticNet across instances. Advances in Neural Information Processing Systems, 35.

[2] Balcan, M.-F., Nguyen, A., & Sharma, D. (2023). New Bounds for Hyperparameter Tuning of Regression Problems Across Instances. Advances in Neural Information Processing Systems, 36.

This article was initially published on the ML@CMU blog and appears here with the authors’ permission.
