AIhub.org

Machine learning for atomic-scale simulations: balancing speed and physical laws

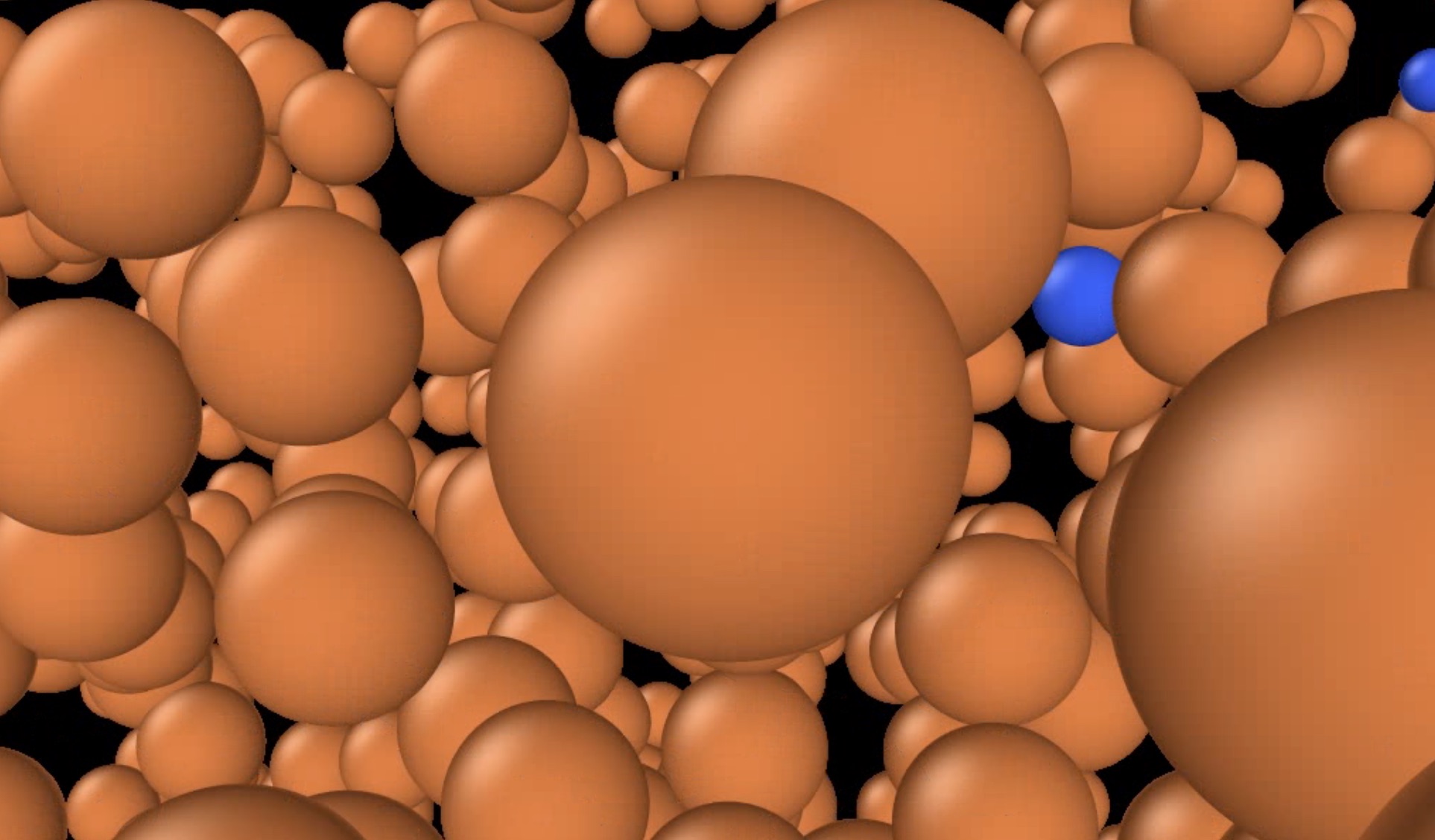

Taken from a simulation of a nitrogen molecule on an iron surface; the simulation explodes when run with non-conservative forces. See below for the full simulation.

When we want to understand how matter behaves, the real action happens at the atomic scale. The heating of water, a chemical reaction in a battery, the way proteins fold in our cells, or how a catalyst converts carbon dioxide into useful fuels: all of these processes are governed by the motions and interactions of atoms.

Atomic-scale simulations give us a way to explore the microscopic behavior of matter, by tracking how atoms move under the laws of quantum mechanics. These simulations have become essential across physics, chemistry, biology, and materials science. They test hypotheses that experiments cannot easily probe and help design new materials before they are synthesized and tested in the lab.

The catch is that accuracy comes at a huge computational cost. Simulating even a few hundred atoms with quantum mechanical calculations can be so expensive that the simulation covers only millionths of a second of real time. That’s not enough to see most interesting processes unfold. To capture chemical reactions, protein dynamics, or long-term materials stability, we would need simulations that run thousands or even millions of times longer.

The role of machine learning

Machine learning (ML) has transformed this picture. Instead of solving the equations of quantum mechanics at every simulation step, we can train ML models to mimic them. These machine-learned interatomic potentials (MLIPs) learn the relationship between the arrangement of atoms and the forces they exert on each other, which ultimately drive their dynamics.

With MLIPs, simulations that once took months on a supercomputer can now be run in days or even hours, often with comparable accuracy. This acceleration has made it possible to explore larger systems and longer timescales than were ever practical with first-principles calculations. But, as is often the case in ML, there is a tension between speed and fidelity. How much of the underlying physics can we safely “shortcut” without breaking the simulation? At the heart of this tension lies the question of whether machine learning models should faithfully enforce physical laws, or whether approximations that break them might be acceptable if the result is faster or more accurate predictions.

The “dark side” of non-conservative forces

One shortcut that has gained popularity is to predict atomic forces directly, rather than computing them as derivatives of an energy with respect to the atomic positions. This avoids a computationally expensive differentiation step and makes models faster to train and run. However, forces computed as derivatives automatically conserve energy, while directly predicted ones do not. In physics, such forces are called non-conservative. And if energy conservation is broken, the entire simulation can fail catastrophically.
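
To make the distinction concrete, here is a minimal sketch on a toy system, not one of the models discussed in the paper. It assumes a hypothetical 2D harmonic energy and a hand-made "direct" force that adds a small rotational error of size `eps`; all function names are illustrative. A conservative force does zero net work around any closed path, so the work around a circle is a simple diagnostic. For the direct force below, the result should be close to 2π·eps.

```python
import math

def energy(x, y):
    """Toy potential energy: a 2D harmonic well (illustrative only)."""
    return 0.5 * (x * x + y * y)

def conservative_force(x, y, h=1e-5):
    """Force as minus the gradient of the energy (central finite differences)."""
    fx = -(energy(x + h, y) - energy(x - h, y)) / (2.0 * h)
    fy = -(energy(x, y + h) - energy(x, y - h)) / (2.0 * h)
    return fx, fy

def direct_force(x, y, eps=0.05):
    """Hypothetical 'directly predicted' force: the true force plus a small
    rotational error of size eps that no energy function can produce."""
    return -x - eps * y, -y + eps * x

def work_around_circle(force, radius=1.0, n=2000):
    """Approximate the work done by `force` along a closed circular path."""
    total = 0.0
    for k in range(n):
        t0 = 2.0 * math.pi * k / n
        t1 = 2.0 * math.pi * (k + 1) / n
        tm = 0.5 * (t0 + t1)  # evaluate the force at the segment midpoint
        fx, fy = force(radius * math.cos(tm), radius * math.sin(tm))
        dx = radius * (math.cos(t1) - math.cos(t0))
        dy = radius * (math.sin(t1) - math.sin(t0))
        total += fx * dx + fy * dy
    return total

if __name__ == "__main__":
    print("conservative:", work_around_circle(conservative_force))
    print("direct:      ", work_around_circle(direct_force))
```

A real MLIP would obtain the conservative force by automatic differentiation of a learned energy rather than by finite differences; the finite-difference gradient here only keeps the sketch dependency-free.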

In the paper “The dark side of the forces: assessing non-conservative force models for atomistic machine learning”, we investigate what happens when these non-conservative forces are used in practice and propose practical and efficient solutions to fix the resulting problems. We find that simulations driven by non-conservative forces can quickly become unstable. Geometry optimizations, which are used to find the most stable atomic structures, may fail to converge. Molecular dynamics runs, which are meant to simulate the motion of atoms, can exhibit runaway heating, with energy drifting at rates that correspond to billions of degrees per second. No real physical system behaves this way, which makes purely non-conservative models unreliable for production use.
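
The runaway heating can be reproduced qualitatively on a toy problem. The sketch below is an illustration under stated assumptions, not the paper's setup: a single particle of unit mass in a 2D harmonic well, integrated with velocity Verlet, where the hypothetical `direct_force` adds a small rotational error `eps` to the exact force. With the conservative force the total energy stays close to its initial value; with the non-conservative one it grows steadily.

```python
def conservative_force(x, y):
    """Exact force of the toy energy E = (x^2 + y^2) / 2."""
    return -x, -y

def direct_force(x, y, eps=0.05):
    """Hypothetical non-conservative force: exact force plus a rotational error."""
    return -x - eps * y, -y + eps * x

def total_energy(x, y, vx, vy):
    """Kinetic plus potential energy for unit mass."""
    return 0.5 * (vx * vx + vy * vy) + 0.5 * (x * x + y * y)

def run_md(force, steps=10000, dt=0.01):
    """Velocity Verlet from a fixed initial state; returns the final total energy."""
    x, y, vx, vy = 1.0, 0.0, 0.0, 0.0
    fx, fy = force(x, y)
    for _ in range(steps):
        vx += 0.5 * dt * fx   # first half kick
        vy += 0.5 * dt * fy
        x += dt * vx          # drift
        y += dt * vy
        fx, fy = force(x, y)  # one force evaluation per step
        vx += 0.5 * dt * fx   # second half kick
        vy += 0.5 * dt * fy
    return total_energy(x, y, vx, vy)

if __name__ == "__main__":
    e0 = total_energy(1.0, 0.0, 0.0, 0.0)
    print("initial energy:     ", e0)
    print("conservative forces:", run_md(conservative_force))
    print("direct forces:      ", run_md(direct_force))
```

In this toy the energy pumped in by the rotational term grows exponentially in time, which is the same mechanism, in miniature, as the unphysical heating described above.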

However, we also identify a promising solution: hybrid models. By pre-training models on direct forces to gain efficiency, and then fine-tuning them with conservative forces, it is possible to recover stability while still enjoying almost all the computational speed-up. Similarly, when using the model to perform simulations, most evaluations can be made with the fast direct forces, using conservative forces only rarely as a correction. In other words, non-conservative forces are not useless — they just need to be combined carefully with physically grounded methods to avoid their “dark side”.
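
One standard way to use conservative forces "only rarely as a correction" is a multiple-time-stepping integrator; whether this matches the authors' exact scheme is an assumption here. In the sketch below, the cheap direct force drives short inner steps, and the difference between the conservative and direct forces is applied as a kick once every `inner_steps` steps. The toy system (unit-mass particle in a 2D harmonic well, rotational error `eps`) and all names are hypothetical.

```python
def conservative_force(x, y):
    """Exact (expensive, in a real model) force of E = (x^2 + y^2) / 2."""
    return -x, -y

def direct_force(x, y, eps=0.05):
    """Hypothetical cheap, non-conservative force prediction."""
    return -x - eps * y, -y + eps * x

def total_energy(x, y, vx, vy):
    return 0.5 * (vx * vx + vy * vy) + 0.5 * (x * x + y * y)

def run_hybrid(outer_steps=1250, inner_steps=8, dt=0.01, correct=True):
    """Multiple time stepping: direct forces every dt, and a correction
    (conservative minus direct) every inner_steps * dt.
    With correct=False this reduces to plain velocity Verlet on direct forces."""
    x, y, vx, vy = 1.0, 0.0, 0.0, 0.0
    outer_dt = inner_steps * dt

    def correction(px, py):
        if not correct:
            return 0.0, 0.0
        cx, cy = conservative_force(px, py)
        dx, dy = direct_force(px, py)
        return cx - dx, cy - dy

    gx, gy = correction(x, y)
    fx, fy = direct_force(x, y)
    for _outer in range(outer_steps):
        vx += 0.5 * outer_dt * gx      # half kick with the slow correction
        vy += 0.5 * outer_dt * gy
        for _inner in range(inner_steps):
            vx += 0.5 * dt * fx        # velocity Verlet on the direct force
            vy += 0.5 * dt * fy
            x += dt * vx
            y += dt * vy
            fx, fy = direct_force(x, y)
            vx += 0.5 * dt * fx
            vy += 0.5 * dt * fy
        gx, gy = correction(x, y)      # one conservative evaluation per outer step
        vx += 0.5 * outer_dt * gx
        vy += 0.5 * outer_dt * gy
    return total_energy(x, y, vx, vy)

if __name__ == "__main__":
    print("direct only:    ", run_hybrid(correct=False))
    print("with correction:", run_hybrid(correct=True))
```

With these settings the conservative force is evaluated once for every eight direct evaluations, yet the energy stays close to its initial value of 0.5, while the uncorrected run drifts far away from it. How large `inner_steps` can be in practice depends on the model and the system, which this toy does not address.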

Lessons and outlook

This work highlights the opportunities and challenges of optimizing the speed of machine learning for atomic-scale simulations. On one hand, shortcuts that ignore physics can lead to spectacular failures: unstable trajectories, unphysical heating, and unreliable predictions. On the other hand, only ML approaches that are physics-aware, at least up to a point, can provide physically correct simulations. The likely path forward is not to sacrifice physics entirely in favor of ML, but to combine the two. Hybrid approaches that merge machine learning efficiency with physical constraints can provide the best of both worlds.

Looking ahead, there are further opportunities to rethink the very framework of molecular dynamics with machine learning. In follow-up work, “FlashMD: long-stride, universal prediction of molecular dynamics”, we explore how ML can be used not just to accelerate force calculations, but to directly predict atomic trajectories over much longer time steps. This approach allows simulations to reach timescales that are otherwise completely out of reach, while still incorporating mechanisms to enforce energy conservation and preserve qualitative physical behavior.

As these methods mature, researchers will be able to simulate larger systems, over longer timescales, and at higher accuracy than ever before. This will accelerate discoveries in energy storage, drug design, catalysis, and countless other areas where atomic-scale insight is key. Machine learning for atomic simulations is not just about speed — it’s about finding the right balance between efficiency and physical truth. By staying grounded in the laws of nature while embracing the flexibility of ML, we can move closer to solving pressing scientific and technological challenges.

tags: ICML, ICML2025