AIhub.org

2025 AAAI / ACM SIGAI Doctoral Consortium interviews compilation

Contributors to the 2025 Doctoral Consortium series. Authors pictured in order of their interview publication date (left to right, top to bottom).

Each year, a small group of PhD students are chosen to participate in the AAAI/SIGAI Doctoral Consortium. This initiative provides an opportunity for the students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. During 2025, we met with some of the students to find out more about their research and the doctoral consortium experience. Here, we collate the interviews.

Interview with Kunpeng Xu: Kernel representation learning for time series

Kunpeng Xu completed his PhD at the Université de Sherbrooke and is now a postdoctoral fellow at McGill University. His research focuses on time series analysis, kernel learning, and self-representation learning, with applications in forecasting, concept drift adaptation, and interpretability. During his PhD, he explored various aspects of kernel representation learning for time series.

Interview with Kayla Boggess: Explainable AI for more accessible and understandable technologies

Kayla Boggess is a PhD student at the University of Virginia. Her research is at the intersection of explainable artificial intelligence, human-in-the-loop cyber-physical systems, and formal methods. She is interested in developing human-focused cyber-physical systems using natural language explanations for various single and multi-agent domains such as search and rescue and autonomous vehicles.

Interview with Tunazzina Islam: Understand microtargeting and activity patterns on social media

Earlier this year, Tunazzina Islam completed her PhD in Computer Science at Purdue University. Her primary research interests lie in computational social science, natural language processing, and social media mining and analysis. During her PhD, she focussed on understanding microtargeting and activity patterns on social media by developing computational approaches and frameworks.

Interview with Lea Demelius: Researching differential privacy

Lea Demelius is a PhD student at the University of Technology Graz in Austria, where she researches differential privacy. She is investigating the trade-offs and synergies that arise between various requirements for trustworthy AI, in particular privacy and fairness, with the goal of advancing the adoption of responsible machine learning models.

Interview with Joseph Marvin Imperial: aligning generative AI with technical standards

Joseph Marvin Imperial is studying for his PhD at the University of Bath, focusing on aligning generative AI with technical standards for regulatory and operational compliance. He has evaluated and benchmarked the current capabilities of GenAI, specifically LLMs, to determine how well they can capture the constraints set out in standards through prompting-based approaches such as in-context learning.

Interview with Amina Mević: Machine learning applied to semiconductor manufacturing

Based at the University of Sarajevo, Amina Mević is carrying out her PhD in collaboration with Infineon Technologies Austria. Her research focuses on developing an explainable multi-output virtual metrology system based on machine learning to predict the physical properties of metal layers in semiconductor manufacturing.

Interview with Onur Boyar: Drug and material design using generative models and Bayesian optimization

Onur Boyar is a PhD candidate at Nagoya University in Japan and a researcher at RIKEN. His work focuses on the design of novel molecules and crystals using generative models, latent space representations, and advanced optimization techniques such as Bayesian optimization. He is contributing to a Japanese project with the aim of building an AI robot that can handle the entire drug discovery and development process.

Interview with Ananya Joshi: Real-time monitoring for healthcare data

Having completed her PhD earlier this year, Ananya Joshi is now an Assistant Professor at Johns Hopkins. In her doctoral research, she developed a human-in-the-loop system and related methods that are used daily by public health domain experts to identify and diagnose data events from large volumes of streaming data.

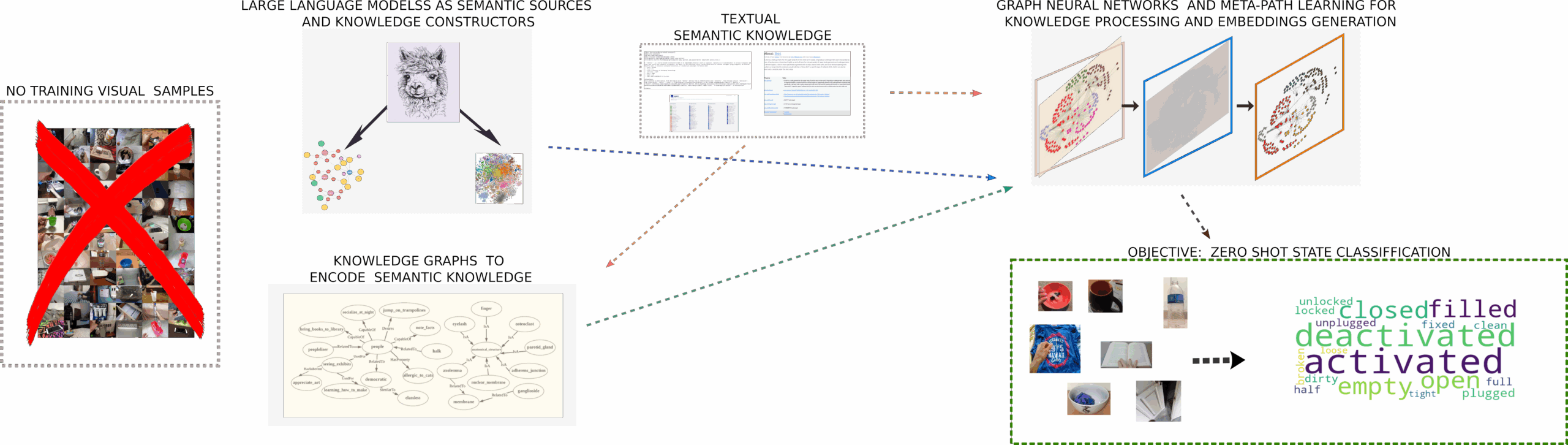

Interview with Filippos Gouidis: Object state classification

Based in Greece, Filippos Gouidis is a postdoctoral researcher at the Foundation for Research and Technology – Hellas (FORTH) in Crete, having completed his PhD at the University of Crete in early 2025. His PhD focused on zero-shot object state classification, and, more generally, his research interests lie in knowledge representation, neurosymbolic integration, and zero-shot learning.

Interview with Debalina Padariya: Privacy-preserving generative models

Debalina Padariya is currently pursuing a PhD at De Montfort University, UK. Her research primarily focuses on privacy-preserving generative models. The aim is to develop a novel privacy-preserving framework that will contribute to practical advances in synthetic data generation across industry and the public sector.

Interview with Amar Halilovic: Explainable AI for robotics

Amar Halilovic is a PhD student at Ulm University in Germany, where he focuses on explainable AI for robotics. His research investigates how robots can generate explanations of their actions in a way that aligns with human preferences and expectations, particularly in navigation tasks.

Interview with Mahammed Kamruzzaman: Understanding and mitigating biases in large language models

Mahammed Kamruzzaman is pursuing his PhD at the University of South Florida. His research focuses on understanding and mitigating biases in large language models (LLMs), particularly how these biases manifest across various sociodemographic and cultural dimensions.

Interview with Kate Candon: Leveraging explicit and implicit feedback in human-robot interactions

Based at Yale University, Kate Candon is a PhD student interested in understanding how we can create interactive agents that help people more effectively. She is particularly interested in enabling robots to better learn from natural human feedback so that they can become better collaborators.

Interview with Aneesh Komanduri: Causality and generative modeling

Aneesh Komanduri is a final-year PhD student at the University of Arkansas. His research lies at the intersection of causal inference, representation learning, and generative modeling, with a broader focus on trustworthiness and explainability in artificial intelligence.

Interview with Shaghayegh (Shirley) Shajarian: Applying generative AI to computer networks

A PhD student at North Carolina A&T State University, Shaghayegh (Shirley) Shajarian is focused on applying generative AI to computer networks. She is developing AI-driven agents that assist with network operations such as log analysis, troubleshooting, and documentation.

Interview with Flávia Carvalhido: Responsible multimodal AI

Flávia Carvalhido is a PhD student at the University of Porto, working on responsible multimodal AI. The goal of her research project is to promote the responsible use of multimodal AI for medical image report generation by applying stress-testing techniques to state-of-the-art models in order to identify their limitations.

Interview with Haimin Hu: Game-theoretic integration of safety, interaction and learning for human-centered autonomy

Haimin Hu is an Assistant Professor of Computer Science at Johns Hopkins University, having completed his PhD this year at Princeton University. His research focuses on the algorithmic foundations of human-centered autonomy. His work aims to ensure autonomous systems are performant, verifiable, and trustworthy when deployed in human-populated spaces.

Interview with Benyamin Tabarsi: Computing education and generative AI

A PhD student at North Carolina State University, Benyamin Tabarsi is investigating computing education and generative AI. He is focused on developing intelligent support systems for computer science students and instructors. He leads the development of MerryQuery, an AI assistant that provides tailored support for students and gives instructors enhanced insights and control.

Interview with Yezi Liu: Trustworthy and efficient machine learning

Yezi Liu is a PhD student at the University of California, Irvine. Her research focuses on trustworthy and efficient machine learning, spanning fairness, privacy, interpretability, and scalability in both graph neural networks and large language models. She has developed methods for fairness, privacy-preserving learning, dynamic GNN explainability, and graph condensation.

Interview with Zahra Ghorrati: developing frameworks for human activity recognition using wearable sensors

Zahra Ghorrati is pursuing her PhD at Purdue University, where her dissertation focuses on developing scalable and adaptive deep learning frameworks for human activity recognition (HAR) using wearable sensors. Her goal is to design deep learning models that are not only computationally efficient and interpretable but also robust to the variability of real-world data.

Interview with Janice Anta Zebaze: using AI to address energy supply challenges

Janice Anta Zebaze is studying for her PhD at the University of Yaounde I in Cameroon, with a focus on renewable energy systems, tribology, and artificial intelligence. The aim of her research is to address energy supply challenges in developing countries by leveraging AI to evaluate resource availability and optimize energy systems.
