ΑΙhub.org

Navigating to objects in the real world

Empirical study: We evaluated three approaches for robots to navigate to objects in six visually diverse homes.

TLDR: Semantic navigation is necessary to deploy mobile robots in uncontrolled environments like our homes, schools, and hospitals. Many learning-based approaches have been proposed in response to the lack of semantic understanding of the classical pipeline for spatial navigation. But learned visual navigation policies have predominantly been evaluated in simulation. How well do different classes of methods work on a robot? We present a large-scale empirical study of semantic visual navigation methods comparing representative methods from classical, modular, and end-to-end learning approaches. We evaluate policies across six homes with no prior experience, maps, or instrumentation. We find that modular learning works well in the real world, attaining a 90% success rate. In contrast, end-to-end learning does not, dropping from 77% simulation to 23% real-world success rate due to a large image domain gap between simulation and reality. For practitioners, we show that modular learning is a reliable approach to navigate to objects: modularity and abstraction in policy design enable Sim-to-Real transfer. For researchers, we identify two key issues that prevent today’s simulators from being reliable evaluation benchmarks — (A) a large Sim-to-Real gap in images and (B) a disconnect between simulation and real-world error modes.

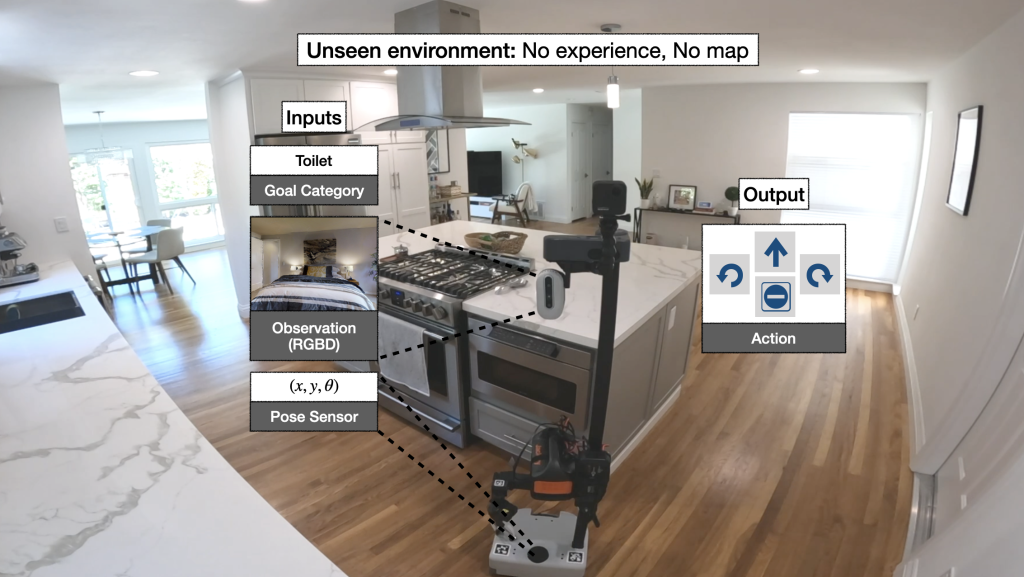

Object goal navigation

We instantiate semantic navigation with the Object Goal navigation task [Anderson 2018], where a robot starts in a completely unseen environment and is asked to find an instance of an object category, let’s say a toilet. The robot has access to only a first-person RGB and depth camera and a pose sensor (computed with LiDAR-based SLAM).

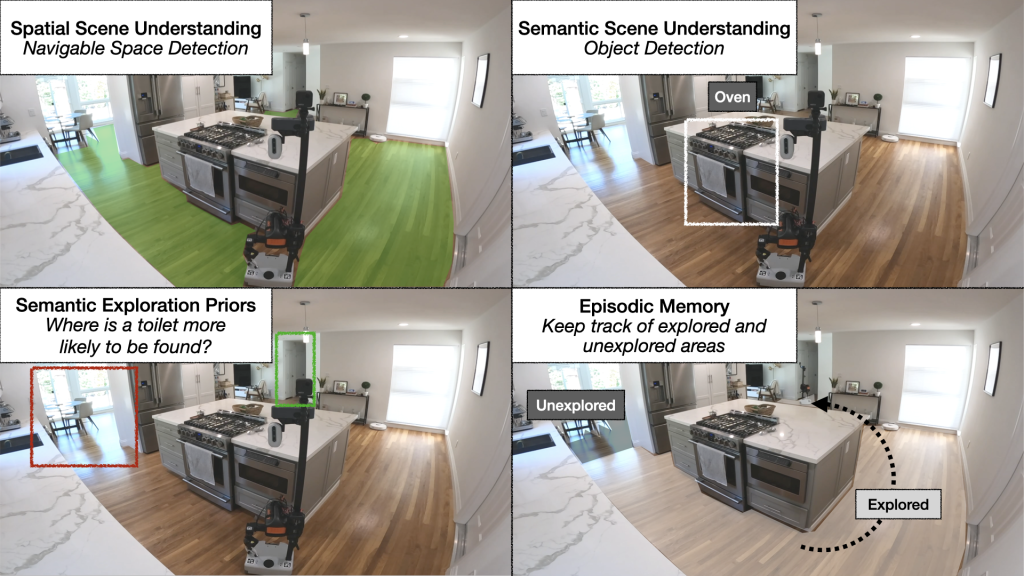

This task is challenging. It requires not only spatial scene understanding of distinguishing free space and obstacles and semantic scene understanding of detecting objects, but also requires learning semantic exploration priors. For example, if a human wants to find a toilet in this scene, most of us would choose the hallway because it is most likely to lead to a toilet. Teaching this kind of spatial common sense or semantic priors to an autonomous agent is challenging. While exploring the scene for the desired object, the robot also needs to remember explored and unexplored areas.

Methods

So how do we train autonomous agents capable of efficient navigation while tackling all these challenges? A classical approach to this problem builds a geometric map using depth sensors, explores the environment with a heuristic, like frontier exploration [Yamauchi 1997], which explores the closest unexplored region, and uses an analytical planner to reach exploration goals and the goal object as soon as it is in sight. An end-to-end learning approach predicts actions directly from raw observations with a deep neural network consisting of visual encoders for image frames followed by a recurrent layer for memory [Ramrakhya 2022]. A modular learning approach builds a semantic map by projecting predicted semantic segmentation using depth, predicts an exploration goal with a goal-oriented semantic policy as a function of the semantic map and the goal object, and reaches it with a planner [Chaplot 2020].

Large-scale real-world empirical evaluation

While many approaches to navigate to objects have been proposed over the past few years, learned navigation policies have predominantly been evaluated in simulation, which opens the field to the risk of sim-only research that does not generalize to the real world. We address this issue through a large-scale empirical evaluation of representative classical, end-to-end learning, and modular learning approaches across 6 unseen homes and 6 goal object categories (chair, couch, plant, toilet, TV).

Results

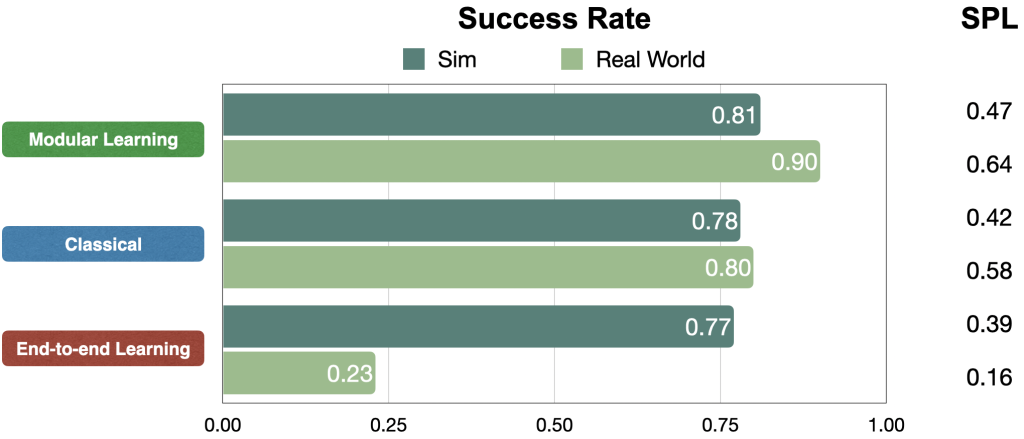

We compare approaches in terms of success rate within a limited budget of 200 robot actions and Success weighted by Path Length (SPL), a measure of path efficiency. In simulation, all approaches perform comparably. But in the real world, modular learning and classical approaches transfer really well while end-to-end learning fails to transfer.

We illustrate these results qualitatively with one representative trajectory.

Result 1: Modular learning is reliable

We find that modular learning is very reliable on a robot, with a 90% success rate.

Result 2: Modular learning explores more efficiently than the classical approach

Modular learning improves by 10% real-world success rate over the classical approach. With a limited time budget, inefficient exploration can lead to failure.

Result 3: End-to-end learning fails to transfer

While classical and modular learning approaches work well on a robot, end-to-end learning does not, at only 23% success rate.

Analysis

Insight 1: Why does modular transfer while end-to-end does not?

Why does modular learning transfer so well while end-to-end learning does not? To answer this question, we reconstructed one real-world home in simulation and conducted experiments with identical episodes in sim and reality.

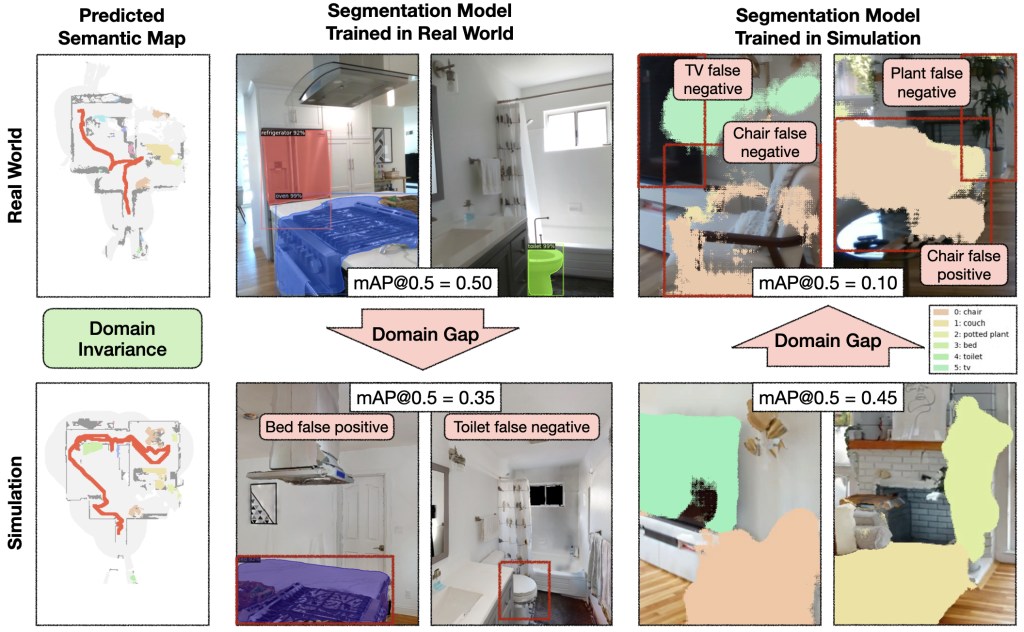

The semantic exploration policy of the modular learning approach takes a semantic map as input, while the end-to-end policy directly operates on the RGB-D frames. The semantic map space is invariant between sim and reality, while the image space exhibits a large domain gap.

The semantic map domain invariance allows the modular learning approach to transfer well from sim to reality. In contrast, the image domain gap causes a large drop in performance when transferring a segmentation model trained in the real world to simulation and vice versa. If semantic segmentation transfers poorly from sim to reality, it is reasonable to expect an end-to-end semantic navigation policy trained on sim images to transfer poorly to real-world images.

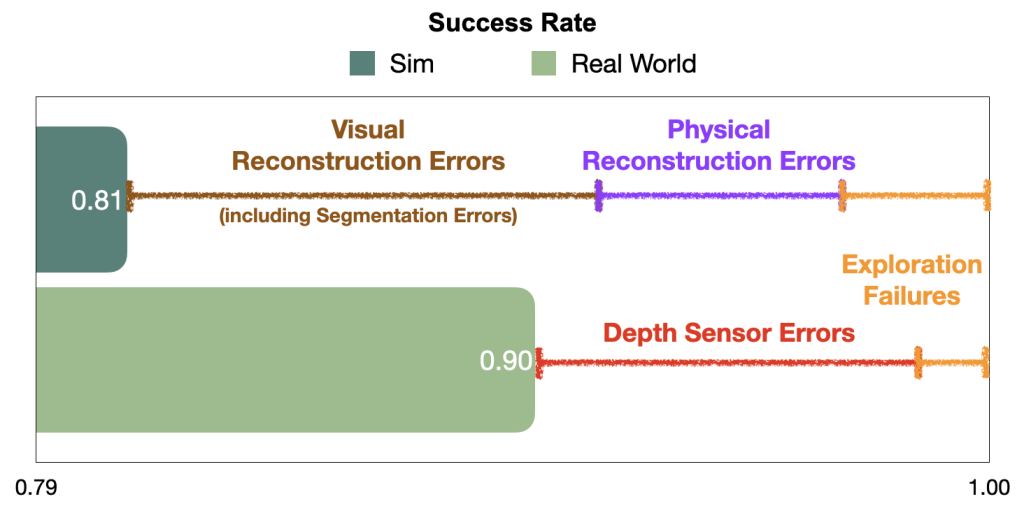

Insight 2: Sim vs real gap in error modes for modular learning

Surprisingly, modular learning works even better in reality than simulation. Detailed analysis reveals that a lot of the failures of the modular learning policy that occur in sim are due to reconstruction errors, both visual and physical, which do not happen in reality. In contrast, failures in the real world are predominantly due to depth sensor errors, while most semantic navigation benchmarks in simulation assume perfect depth sensing. Besides explaining the performance gap between sim and reality for modular learning, this gap in error modes is concerning because it limits the usefulness of simulation to diagnose bottlenecks and further improve policies. We show representative examples of each error mode and propose concrete steps forward to close this gap in the paper.

Takeaways

For practitioners:

- Modular learning can reliably navigate to objects with 90% success

For researchers:

- Models relying on RGB images are hard to transfer from sim to real => leverage modularity and abstraction in policies

- Disconnect between sim and real error modes => evaluate semantic navigation on real robots

If you’ve enjoyed this post and would like to learn more, please check out the Science Robotics 2023 paper and talk. Code coming soon. Also, please don’t hesitate to reach out to Theophile Gervet!

This article was initially published on the ML@CMU blog and appears here with the authors’ permission.

tags: deep dive