ΑΙhub.org

Enhancing controlled query evaluation through epistemic policies

by Gianluca Cima, Domenico Lembo, Lorenzo Marconi, Riccardo Rosati and Domenico Fabio Savo

In an era where data privacy is paramount, the challenge of sharing information without compromising sensitive details has become more relevant than ever.

Here, we consider the framework known as Controlled Query Evaluation (CQE), an innovative approach that safeguards confidentiality while still providing maximal query answers.

We present an extension of this framework that enhances its expressivity through the use of rich forms of data protection rules. We explore the practical importance of these rules and some of the technical underpinnings that make this system effective. We then study some computational properties when data are managed through ontologies specified in DL-LiteR, a popular language designed for efficient reasoning in data-intensive applications. DL-LiteR underpins OWL2 QL, one of the tractable fragments of the W3C OWL2 standard.

What is Controlled Query Evaluation (CQE)?

CQE is a framework designed to protect sensitive information by filtering the answers to user queries. It operates under a data protection policy that specifies what information is confidential, ensuring that users cannot deduce sensitive details through their queries. A distinguishing feature of this framework is its declarativeness: for protecting the data, the system engineer does not have to design a specific algorithm, but just specify a policy consisting of logical rules.

The role of the policy language

The expressiveness of the policy depends on the specific language in which it can be declared. The policy language defines which rules can be specified for governing what information can be disclosed and what must remain confidential. As always happens in computer science, the more expressive this language is, the more complicated it is to design a system that can handle it effectively and efficiently. In logical frameworks like the one in question, some practical challenges are not uncommon.

Traditional approaches adopt the so-called denials as rules for restricting the system’s ability to convey information. Syntactically, denials are logical implications having a conjunction of atoms (that is, the only logical operator allowed is AND, indicated as “∧”) on the left-hand side and ⊥ (which means false) on the right. Intuitively, this kind of formula forbids any instantiation of the conjunction to be true (or, in our case, to be revealed!).

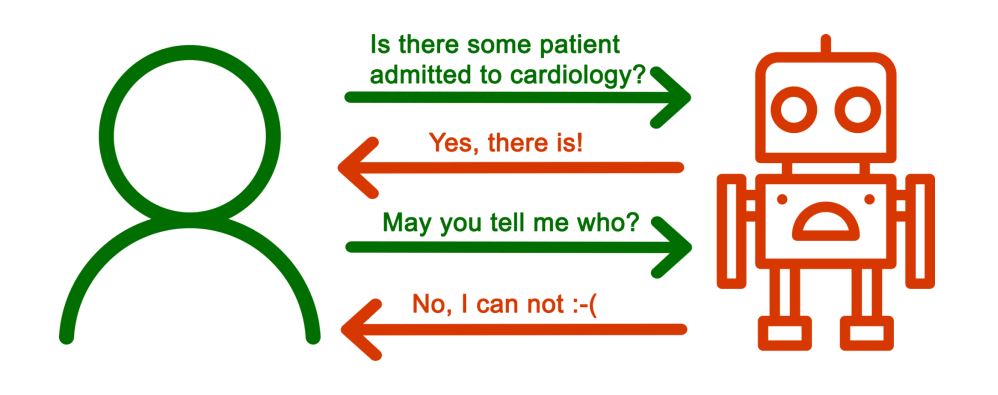

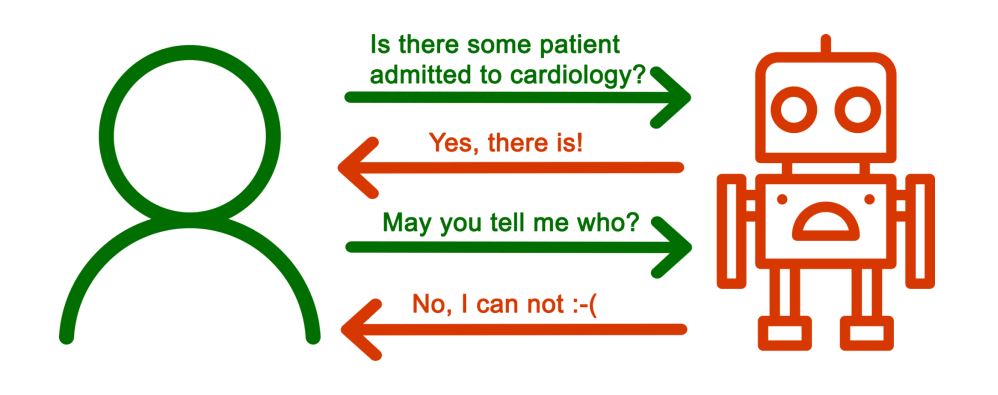

For example, consider a policy that states: “It is confidential whether there exists a patient admitted to a hospital department”. This rule can be written as follows:

∀ x ∀ y (Patient(x) ∧ admitted(x,y) → ⊥)

While this effectively hides patient identities, it can also be considered overly restrictive. Such a policy would prohibit inferring the existence of any admitted patients, which is not practical or meaningful in real-world scenarios, as it is generally obvious that hospitals have admitted patients. A more plausible protection rule could be: “It is confidential which patients have been admitted to a hospital department.”

A new approach: epistemic dependencies

To enhance the expressiveness of CQE, we exploit epistemic dependencies (EDs). These allow for a more refined expression of confidentiality policies by capturing the knowledge state of the user. Rather than simply stating what cannot be known, EDs also provide a way of articulating what the system can reveal without breaching confidentiality.

In formal terms, EDs are logical formulas using the so-called epistemic operator K. In the above example, to properly hide the identities of hospital’s patients while still acknowledging their existence, the following ED could be specified in place of the denial:

∀ x (K ∃ y (Patient(x) ∧ admitted(x,y)) → ⊥)

As opposed to classical denials, EDs allow to specify a conjunction of logical atoms also on the right-hand side of the formula, still enclosed in the scope of the epistemic operator K. In very simple terms, a rule like K C1 → K C2 would force the system to reveal C1 only if C2 can also be revealed.

For instance, an ED might specify that the system cannot reveal the list of admitted patients unless they have signed a consent form. In formal terms:

∀ x (K ∃ y (patient(x) ∧ admitted(x,y)) → K consent(x))

This formula stipulates that if a user knows a patient is admitted, they must also know that the patient has consented to disclose that information. This layered approach to confidentiality is a significant leap from previous models and leads to more practical and realistic implementations of data protection.

Censors: the gatekeepers of confidentiality

To operationalize CQE, we need to define its semantics, which is where censors come into play. A censor acts as a filter, ensuring that only non-sensitive information is shared. Distinct censors are simply distinct ways of ensuring that the exposed knowledge is compliant with the protection policy. Generally, given a set of data and a policy, several censors may exist.

In math terms, a censor is a set of all the logical sentences (in a specific language, called the censor language) that are inferable by the original knowledge and, at the same time, do not violate the policy. When one such set is maximal (that is, it cannot contain any additional sentence in the censor language without violating the policy), it is called optimal censor.

We then should establish which strategy to adopt for answering a user query. We mainly focus on skeptical entailment under censors (or SC-entailment). Under this semantics, the system should compute query results returning all and only the answers that can be disclosed according to every optimal censor. If even a single optimal censor hides an answer, it should not be returned.

Boolean conjunctive queries

Before presenting our results, we introduce Boolean Conjunctive Queries (BCQs), which we both use as query language and as censor language. The first language expresses what the user can ask the system, whereas the second expresses which sentences the censor can contain.

BCQs are a well-known type of query that can be posed to a database or a knowledge base, where “Boolean” means that the answer can be just yes or no (true or false). They are logical sentences consisting of a conjunction of atoms and can be understood in terms of the basic relational algebra operations: selection, projection, and join.

Besides BCQs, we also consider unions of BCQs (BUCQs) as query language, which also allow the logical operator OR (∨), which, in terms of relational algebra corresponds to the union operator.

Insights on indistinguishability

A key aspect of ensuring confidentiality in CQE is the principle of indistinguishability. The idea behind this principle can be explained in simple terms as follows: if a system containing sensitive data responds to user queries in the same way as a system without sensitive data, the two systems are indistinguishable from the user, thereby preserving confidentiality. Our findings show that, for DL-LiteR ontologies, SC-entailment maintains this property for BCQs. However, when BUCQs are asked, the property doesn’t hold as robustly.

To overcome this issue, we explore IC-entailment—an approximation of SC-entailment that involves querying the intersection of all optimal censors (remember, censors are sets!) instead of querying them separately. Interestingly, we found that, for DL-LiteR ontologies, IC-entailment still preserves the property of indistinguishability, even when BUCQs are posed to the system.

Computational complexity and the aciclicity condition

Let us now focus on how complex the approach is from a computational point of view. Specifically, we consider SC-entailment and IC-entailment of BUCQs for the case of DL-LiteR ontologies when the censor language is the language of BCQs, and study the data complexity of the problems, that is, how the amount of required computing resources varies when increasing the size of the input data. Understanding the data complexity of SC-and IC-entailment is crucial for ensuring that our approach remains efficient and thus truly applicable in practice.

Our finding is that, in general, both the entailment problems we consider are coNP-complete. This is bad news since coNP-complete problems are known to be hard to solve. Indeed, this means that there is no known algorithm that can solve it in less than exponential time with respect to the size of the data. For comparison, most SQL queries are evaluated on a database in less than polynomial time with respect to the size of the data.

To identify a feasible and still practically relevant case, we specifically study an acyclicity condition for EDs. In very simple terms, EDs that are “connected” by common predicates should not draw a cycle involving such predicates. For example, the following policy:

∀ x (K ∃y (A(x, y) ∧ B(x)) → K ∃z C(z, x)),

∀ x (K ∃y C(y, x) → K D(x)),

∀ x (K D(x) → K ∃y A(y, x))

is not acyclic because of the cycle A→C→D→A. There are problems for which a condition like the one above can help a lot in reducing the computational complexity, and this is one such case. Indeed, for acyclic policies, DL-LiteR ontologies and BCQs as censor language, we prove both SC- and IC-entailment of BUCQs enjoy the highly desirable first-order rewritability property. In simple terms, this means that both problems can be reduced to standard evaluation of a first-order query (that is an SQL query) over a database, through a query reformulation mechanism that does not depend on the data.

This means that the two problems can be solved very efficiently with respect to the size of the input data, and relying on consolidated relational database technology. This is a practically relevant result, demonstrating that it is possible to develop ontology-based data management systems capable of efficiently preserving data confidentiality according to a principled approach like Controlled Query Evaluation.

This work won a distinguished paper award at IJCAI 2024. Read the paper in full here: Enhancing Controlled Query Evaluation through Epistemic Policies.

tags: deep dive, IJCAI, IJCAI2024